With the widespread adoption of large language models, deploying them efficiently in resource-constrained environments has become a pressing problem. To address it, DistilQwen2.5, a series of lightweight models built on Qwen2.5, has been officially released. The models are trained with an innovative two-layer distillation framework that, through data optimization and parameter-fusion techniques, preserves model performance while significantly reducing the computing resources required. This makes it more practical to run large language models where hardware is limited.

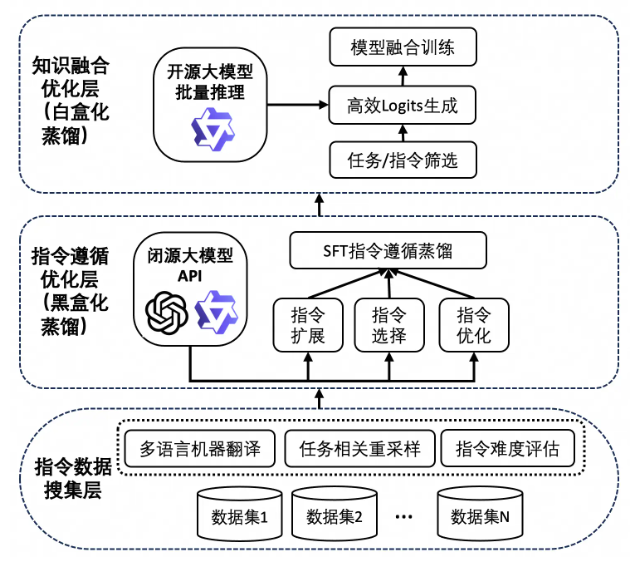

DistilQwen2.5 owes much of its success to its knowledge distillation pipeline. The process starts from a large volume of high-quality instruction data drawn from multiple open-source datasets and privately synthesized datasets. To keep the data diverse, the research team used Qwen-max to expand the Chinese and English data, balancing the mix across both tasks and languages. The pipeline then applies "black-box distillation": working only from the teacher model's outputs, it expands, selects, and rewrites instructions. This improves data quality and strengthens the model's multitask ability, helping it perform better in complex scenarios.
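
The paragraph above names three black-box operations (expand, select, rewrite) that rely only on the teacher's generated text. The sketch below illustrates that flow under stated assumptions: the `Teacher` callable is a hypothetical stand-in for a real teacher model such as Qwen-max, the prompt wordings are invented for illustration, and `select` is a simple length-and-duplicate filter rather than the team's actual selection criteria, which the article does not describe.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical teacher interface: prompt text in, generated text out.
# Black-box distillation only ever sees this text, never logits or weights.
Teacher = Callable[[str], str]


@dataclass
class Example:
    instruction: str
    response: str


def expand(teacher: Teacher, seed: str, n: int) -> List[str]:
    """Ask the teacher for n new instructions modeled on a seed (hypothetical prompt)."""
    return [
        teacher(f"Write a new instruction similar to: {seed} (variant {i})")
        for i in range(n)
    ]


def select(candidates: List[str], min_len: int = 10) -> List[str]:
    """Keep non-trivial, case-insensitively unique instructions.

    A placeholder for a real quality filter, which the article does not describe.
    """
    seen, kept = set(), []
    for text in candidates:
        cleaned = text.strip()
        key = cleaned.lower()
        if len(cleaned) >= min_len and key not in seen:
            seen.add(key)
            kept.append(cleaned)
    return kept


def rewrite(teacher: Teacher, instruction: str) -> str:
    """Ask the teacher to polish an instruction (hypothetical prompt)."""
    return teacher(f"Rewrite this instruction to be clearer: {instruction}")


def black_box_distill(teacher: Teacher, seeds: List[str], n_expand: int = 2) -> List[Example]:
    """Expand -> select -> rewrite, then label each instruction with a teacher response."""
    dataset: List[Example] = []
    for seed in seeds:
        pool = [seed] + expand(teacher, seed, n_expand)
        for instruction in select(pool):
            polished = rewrite(teacher, instruction)
            dataset.append(Example(polished, teacher(polished)))
    return dataset


if __name__ == "__main__":
    # Toy "teacher" that echoes its prompt, just to exercise the pipeline offline.
    echo_teacher = lambda prompt: prompt
    for ex in black_box_distill(echo_teacher, ["Explain gradient descent.", "hi"]):
        print(ex.instruction)
```

With the echo teacher this prints five instructions: the seed "hi" is dropped by the length filter, while its two teacher-generated expansions survive. Swapping in a real model only means replacing the `Teacher` callable.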

DistilQwen2.5 also introduces white-box distillation, in which the student model imitates the teacher model's output (logits) distribution, allowing it to absorb the teacher's knowledge more efficiently. The approach avoids the high GPU memory consumption and slow storage and read speeds that traditional white-box distillation suffers from, further improving training efficiency and model performance.
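
The article does not give the training objective, so the following is only a minimal sketch of a common white-box setup: the student minimizes the KL divergence from the teacher's next-token distribution. Storing just the teacher's top-k logits per position offline (`top_k_logits`) is an assumption about how the memory and read/write overhead mentioned above might be reduced; the function names and the choice of forward KL are illustrative, not DistilQwen2.5's actual implementation.

```python
import math
from typing import List, Sequence, Tuple


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def top_k_logits(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Offline step (assumed): keep only the teacher's k largest logits as (token_id, logit)."""
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    return [(i, logits[i]) for i in ranked[:k]]


def white_box_kd_loss(
    teacher_topk: Sequence[Tuple[int, float]],
    student_logits: Sequence[float],
    temperature: float = 1.0,
) -> float:
    """KL(p_teacher || p_student) at one token position.

    Illustrative objective, not necessarily the one DistilQwen2.5 uses.
    The teacher distribution is renormalized over its stored top-k tokens;
    the student distribution is a softmax over the full vocabulary.
    """
    ids = [i for i, _ in teacher_topk]
    log_p_t = log_softmax([logit for _, logit in teacher_topk], temperature)
    log_p_s = log_softmax(student_logits, temperature)
    return sum(math.exp(lt) * (lt - log_p_s[i]) for lt, i in zip(log_p_t, ids))


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1, -1.0]      # toy 4-token vocabulary
    stored = top_k_logits(teacher, k=2)  # [(0, 2.0), (1, 1.0)]
    print(white_box_kd_loss(stored, [1.9, 1.1, 0.0, -1.0]))  # student close to teacher: smaller loss
    print(white_box_kd_loss(stored, [-1.0, 0.1, 1.0, 2.0]))  # student far from teacher: larger loss
```

In this sketch, storing k (token_id, logit) pairs per position instead of a full-vocabulary vector is what keeps the offline teacher outputs small; the cost is that the teacher's probability mass outside its top-k is discarded and the remaining k probabilities are renormalized.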

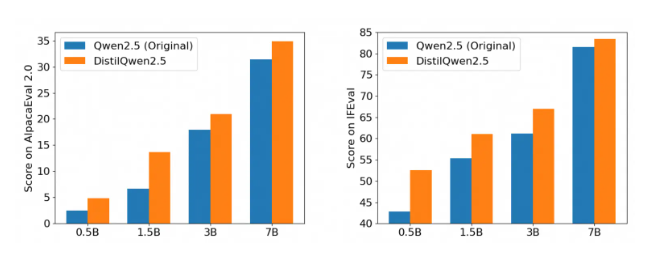

On several widely used instruction-following benchmarks, DistilQwen2.5 performs impressively, particularly on AlpacaEval 2.0 and MT-Bench. This marks a new stage for lightweight large language models: computing costs can be cut substantially while performance is maintained, which should further encourage the adoption of AI across a range of application scenarios. DistilQwen2.5 can serve enterprise-level applications and individual developers alike.

The open-source release of DistilQwen2.5 also makes the models easier for developers to adopt, helping to spread artificial intelligence technology more widely. By open-sourcing them, the research team hopes to work with developers worldwide to advance large language model technology, explore new application scenarios, and inject more vitality into the future of AI.