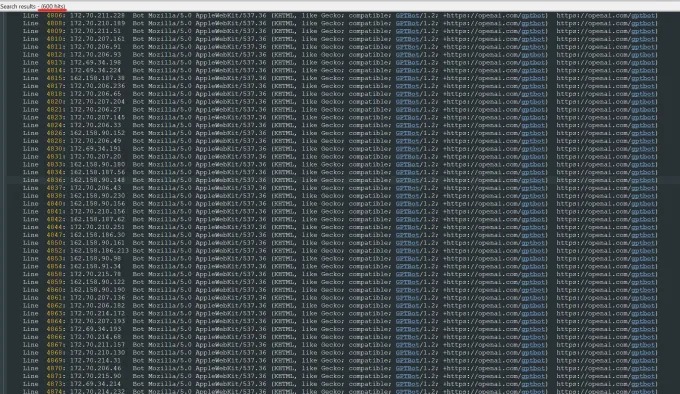

Recently, Oleksandr Tomchuk, CEO of Trilegangers, received an urgent alert that his company's e-commerce website had gone down. After investigating, he found the cause: an OpenAI bot was relentlessly crawling the entire site. Trilegangers’ website lists more than 65,000 products, each with a detailed page and at least three images. OpenAI's bot sent tens of thousands of server requests in an attempt to download all of it, including hundreds of thousands of images and their descriptions.

Tomchuk said the crawler's impact on the website was severe, almost equivalent to a distributed denial-of-service (DDoS) attack. Trilegangers’ main business is providing 3D object files and images to 3D artists, video game developers, and others who need to digitally reproduce real human characteristics. These files include detailed scan data covering everything from hands and hair to skin and full bodies.

Trilegangers’ website is at the heart of its business. The company has spent more than a decade building what it describes as the largest database of "human digital doubles" on the web, all derived from 3D scans of real human bodies. Tomchuk’s team is based in Ukraine, but the company is also licensed in the United States, in Tampa, Florida. The website has a Terms of Service page that explicitly prohibits unauthorized crawling by bots, but that alone did nothing to stop OpenAI's bot.

To actually keep bots out, a website must have a correctly configured robots.txt file. Directives in this file can explicitly tell OpenAI's GPTBot not to visit the site. Robots.txt, also known as the Robots Exclusion Protocol, was designed to tell search engines what not to crawl as they index the web. OpenAI says on its official page that it honors such files when a site is configured with do-not-crawl directives, but also warns that its bots may take up to 24 hours to recognize an updated robots.txt file.
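As a rough illustration of the kind of rule described above, the sketch below uses Python's standard `urllib.robotparser` module to check a sample robots.txt that disallows `GPTBot` while leaving other crawlers alone. The file contents, the `example.com` URL, and the `is_allowed` helper are assumptions made for this example, not Trilegangers’ actual configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block GPTBot from the whole site,
# allow every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/products/1"  # placeholder URL
    for agent in ("GPTBot", "SomeOtherBot"):
        print(agent, "allowed:", is_allowed(ROBOTS_TXT, agent, page))
```

A check like this only shows what a well-behaved crawler would conclude from the file; it does not enforce anything on the server.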

Tomchuk stressed that if a website does not use robots.txt correctly, OpenAI and other companies may assume they can crawl its data at will. This is not an opt-in system: a correctly configured robots.txt is a necessary measure to protect a site's content. Worse, not only was Trilegangers knocked offline by OpenAI’s bot during US working hours, Tomchuk also expects a significantly higher AWS bill because of the bot’s heavy CPU and download activity.

However, robots.txt is not a complete solution. Whether AI companies comply with the protocol is entirely voluntary. Last summer, another AI startup, Perplexity, drew widespread attention when a Wired investigation alleged that it was not complying with robots.txt.

Tomchuk said he could not find a way to contact OpenAI to ask about the crawl. OpenAI also did not respond to TechCrunch's request for comment. In addition, OpenAI has so far failed to deliver its long-promised opt-out tool, which complicates the issue further.

This is a particularly tricky problem for Trilegangers. Tomchuk noted that the company's business involves serious rights issues because it scans real people. Under Europe's GDPR and similar laws, photos of real people found online cannot simply be taken and used without permission.

Ironically, the greediness of OpenAI's bot is what made Trilegangers aware of how exposed its website was. Tomchuk said that if the bot had crawled more gently, he might never have noticed the problem.

“It’s scary because these companies seem to be exploiting a loophole to crawl data, and they say ‘If you update your robots.txt with our tags, you can opt out’,” Tomchuk said. In practice, this puts the onus on business owners, who are expected to understand how to block these bots.

Tomchuk hopes other small online businesses will realize that the only way to discover whether AI bots are taking copyrighted assets from a website is to actively look for them. He is not the only one troubled by AI bots. Other website owners have told Business Insider how OpenAI’s bots disrupted their sites and drove up their AWS bills.
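One way a site owner might "actively look" is to count requests per user agent in the web server's access logs. The sketch below is a minimal example that assumes the common combined log format, where the user agent is the last quoted field on each line. The marker substrings and the `count_bot_requests` helper are assumptions for illustration, not a method described by Tomchuk.

```python
import re
from collections import Counter

# In the combined log format, the user agent is the last quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')

# Hypothetical substrings that suggest automated traffic.
BOT_MARKERS = ("bot", "crawler", "spider", "scraper")


def count_bot_requests(log_lines):
    """Count requests per user agent for agents that look like bots."""
    counts = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if not match:
            continue  # line is not in the expected format
        user_agent = match.group(1)
        if any(marker in user_agent.lower() for marker in BOT_MARKERS):
            counts[user_agent] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample lines; a real script would read the server's log file.
    sample = [
        '203.0.113.5 - - [10/Jan/2025:12:00:01 +0000] "GET /p/1 HTTP/1.1" 200 512 "-" "GPTBot/1.0"',
        '203.0.113.6 - - [10/Jan/2025:12:00:02 +0000] "GET /p/2 HTTP/1.1" 200 512 "-" "GPTBot/1.0"',
        '198.51.100.7 - - [10/Jan/2025:12:00:03 +0000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0"',
    ]
    for agent, hits in count_bot_requests(sample).most_common():
        print(hits, agent)
```

User agent strings can be spoofed, so a count like this is only a starting point; grouping by IP address range would be a natural next step.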

The problem grew significantly worse in 2024. New research from digital advertising firm DoubleVerify found that AI crawlers and scrapers caused an 86% increase in “general invalid traffic,” meaning traffic that comes from bots rather than real users.