Nanjing University and Megvii Research Institute have jointly developed SeVa, an unsupervised paradigm for aligning large vision-language models (VLMs) with human preferences. The method needs no human or GPT-4 involvement. Because it constructs preference data automatically, it greatly reduces alignment costs. In the authors' experiments it also improves instruction following, reduces hallucinations, and achieves strong results on multiple benchmarks. The core idea of SeVa is to use image augmentation to generate positive and negative samples, then train the model on them so that it aligns with human preferences.

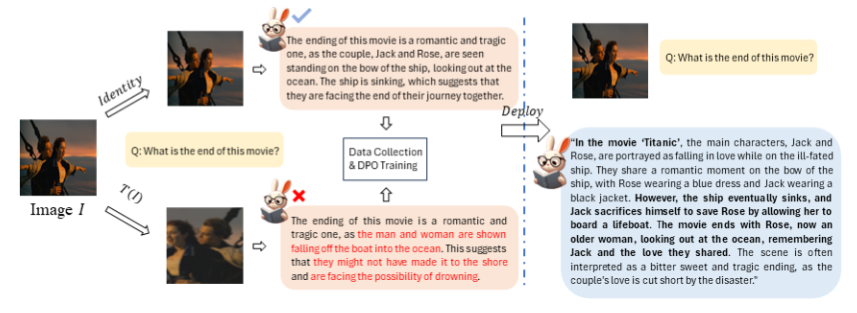

At the heart of the technique is a pipeline that constructs preference data automatically. Comparing the model's outputs before and after preference alignment makes the resulting changes easy to see. The researchers found that even small image augmentations can lead a VLM to give different answers to the same question. They therefore used the answers to the original image as positive samples and the answers to the augmented image as negative samples for training.
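The pair-construction step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not SeVa's released code. `answer_fn` is a hypothetical stand-in for querying the VLM being aligned, `augment_fn` is a hypothetical stand-in for whatever image augmentation is applied, and dropping pairs whose two answers are identical is our own assumption, since such a pair carries no preference signal.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

# Hypothetical type aliases: an "image" can be any object the model accepts.
AnswerFn = Callable[[object, str], str]  # (image, question) -> answer
AugmentFn = Callable[[object], object]   # image -> augmented image


@dataclass
class PreferencePair:
    image: object   # original (un-augmented) image
    question: str
    chosen: str     # answer on the original image (positive sample)
    rejected: str   # answer on the augmented image (negative sample)


def build_preference_pairs(
    samples: Iterable[Tuple[object, str]],
    answer_fn: AnswerFn,
    augment_fn: AugmentFn,
) -> List[PreferencePair]:
    """Build (chosen, rejected) pairs without any human or GPT-4 labels."""
    pairs: List[PreferencePair] = []
    for image, question in samples:
        chosen = answer_fn(image, question)
        rejected = answer_fn(augment_fn(image), question)
        # Assumption: identical answers give no preference signal, so skip them.
        if chosen.strip() == rejected.strip():
            continue
        pairs.append(PreferencePair(image, question, chosen, rejected))
    return pairs


if __name__ == "__main__":
    # Toy stand-ins: an "image" is a list of pixel intensities in [0, 1].
    def toy_answer(img, question):
        return "bright" if sum(img) / len(img) > 0.5 else "dark"

    def toy_augment(img):
        # Darken every pixel (a hypothetical augmentation).
        return [max(0.0, p - 0.3) for p in img]

    data = [([0.7, 0.7], "Is the image bright?"), ([0.1, 0.2], "Is the image bright?")]
    for pair in build_preference_pairs(data, toy_answer, toy_augment):
        print(pair.chosen, "->", pair.rejected)  # prints: bright -> dark
```

In this design both answers come from the same model and only the input image differs, so each rejected answer tends to be a plausible but slightly off response rather than an obviously wrong one. The second toy sample is dropped because the augmentation does not change the model's answer.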

SeVa's experimental results are impressive. With only 8k automatically constructed, unsupervised samples, it significantly improves the VLM's instruction following, reduces hallucinations, and delivers clear gains on multimodal and other benchmarks. Just as important, the method is simple and low-cost, and it requires no human or GPT-4 annotation.
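The article does not spell out the training objective applied to these pairs. The SeVa paper builds on Direct Preference Optimization (DPO), so the sketch below shows the standard per-pair DPO loss as an illustration; it is not taken from the SeVa repository. The inputs are summed log-probabilities of the chosen and rejected answers under the model being trained (the policy) and under a frozen copy of the starting model (the reference). Scoring both answers against the same prompt, and the default `beta = 0.1`, are assumptions made here, not values confirmed by the article.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed default, not taken from the SeVa paper
) -> float:
    """Standard DPO loss for one (chosen, rejected) pair.

    Each argument is the summed log-probability of a full answer given the
    same prompt (image + question).
    """
    # How much more likely the policy makes each answer than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) == softplus(-margin)
    return softplus(-margin)


if __name__ == "__main__":
    # Before any update the policy equals the reference, so the loss is log(2).
    print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
    # Raising the chosen answer and lowering the rejected one reduces the loss.
    print(round(dpo_loss(-9.0, -13.0, -10.0, -12.0), 4))  # 0.5981
```

In this sketch, minimizing the loss over the constructed pairs pushes the model toward the answers it gives on original images and away from those it gives on augmented ones, while `beta` limits how far it drifts from the reference model.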

Results on multiple benchmarks show that SeVa substantially improves how well vision-language models align with human preferences. Its gains are especially pronounced on MM-Vet and LLaVA-Bench, both of which are scored by GPT-4. SeVa also produces longer and more detailed answers that are more consistent across repeated generations and more robust to changes in sampling temperature.

This research offers an effective approach to aligning large vision-language models and opens new possibilities for the field. Now that SeVa is open source, more researchers and developers can be expected to adopt the paradigm and build on it.

Project repository: https://github.com/Kevinz-code/SeVa

Open-sourcing SeVa gives researchers and developers new tools and methods for large vision models and should help improve the performance and applicability of visual AI. More broadly, the work demonstrates the potential of unsupervised learning for AI alignment and points to a promising direction for future research.