Character AI, an AI chatbot application developed by former Google Brain employees, is creating a craze among young people in the United States. It lets users interact with a wide range of characters, from fictional characters to historical figures and even contemporary public figures, greatly enriching their interactive experience. It has 20 million monthly active users and handles 20,000 queries per second, a volume equal to roughly 20% of the queries handled by Google Search, and it has sparked extensive discussion and sharing on Reddit. However, its rapid rise has also raised issues that deserve attention, especially emotional dependence and addiction among teenagers, which have prompted reflection and debate across society.

Character AI is causing a stir among young Americans. Launched in 2022 by former Google Brain employees Noam Shazeer and Daniel De Freitas, this AI chatbot application allows users to interact with a variety of characters, from fictional characters to historical figures and even contemporary public figures.

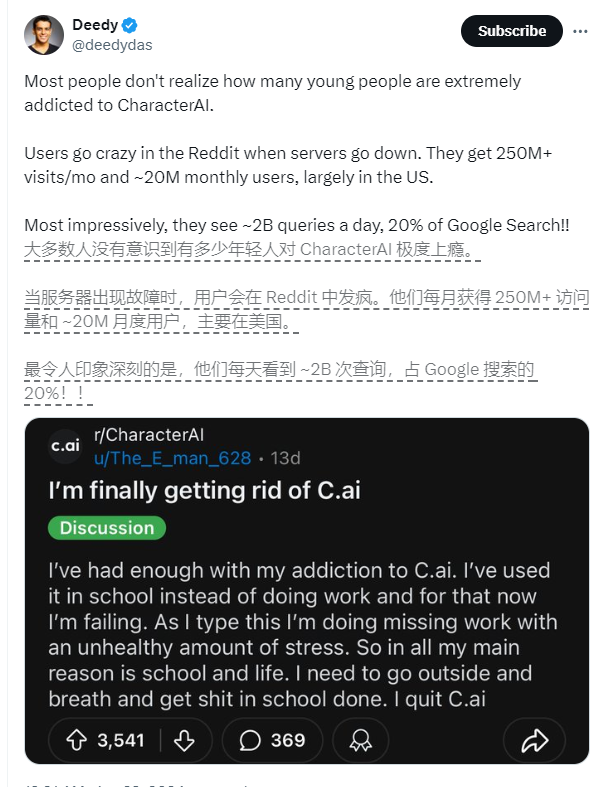

Character AI has 20 million monthly active users and handles 20,000 queries per second, roughly 20% of the query volume handled by Google Search. More than 1 million people share the characters they have created and their conversations on Reddit. However, the technology's popularity has also brought problems, most notably teenagers' emotional dependence on, and addiction to, Character AI.

Of its users, 57.07% are young people aged 18 to 24, and average usage time reaches 2 hours. In the Reddit community, many people say that talking to an AI bot is easier than talking to a real person, and posts about addiction are common. Some users even reported strong "withdrawal symptoms" when the servers went down.

Character AI's chatbots are becoming increasingly lifelike, trained on large amounts of text data and continuously refined through interactions with users. For example, New Zealand user Sam Zaia trained his "psychologist" character using his professional knowledge, with the aim of giving users genuine psychological support.

However, professional psychotherapist Theresa Plewman has questioned Character AI's effectiveness, arguing that it cannot replace the responses and treatment a human provides. Dr. Kelly Merrill Jr. of the University of Cincinnati believes chatbots can play a positive role in providing mental health support, but he also warns of AI's limitations and potential risks.

Futurist Professor Rocky Scopelliti predicts that tools like Character AI will become more popular and that AI will play a growing role in people's emotional and spiritual lives. At the same time, he has called for regulation to actively address the ethical and social dilemmas that AI may bring.

The popularity of Character AI reveals the potential of AI technology in emotional support and interaction, but it also sounds a warning: while enjoying the convenience that technology brings, we must think about how to avoid relying on it too heavily. This is not only a technical problem but also a social one. Balancing technological progress with social ethics will be an important issue in the years ahead, and making AI technology better serve humanity will take the joint efforts of society as a whole.