With the rapid development of AI video generation, generated videos have become so realistic that it is difficult to tell real footage from fake. To address this challenge, researchers at Columbia University have developed DIVID, a new tool for detecting AI-generated videos. DIVID extends Raidar, a tool the same team built to detect AI-generated text. Rather than relying on the internal workings of the AI model, it analyzes the characteristics of the video itself, which lets it identify videos generated by diffusion models such as OpenAI's Sora, Runway Gen-2, and Pika. The work is an important step toward combating deepfake videos and safeguarding online information.

AI-generated videos are becoming increasingly realistic, making it difficult for humans (and existing detection systems) to tell real videos from fake ones. To solve this problem, researchers at Columbia University School of Engineering, led by computer science professor Junfeng Yang, developed DIVID (DIffusion-generated VIdeo Detector), a new tool for detecting AI-generated videos. DIVID extends Raidar, which the team released earlier this year. Raidar detects AI-generated text by analyzing the text itself, without accessing the inner workings of a large language model.

DIVID improves on earlier methods for detecting generated videos. Those methods are effective at identifying videos produced by older AI models such as generative adversarial networks (GANs). A GAN is an AI system with two neural networks: one generates fake data, and the other evaluates it to distinguish real data from fake. Through continuous feedback, both networks keep improving, producing highly realistic synthetic videos. Current AI detection tools look for telltale signs such as unusual pixel arrangements, unnatural movements, or inconsistencies between frames, which typically do not appear in real videos.
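For reference, the two-network game described above is usually written as the minimax objective from the original GAN formulation: the discriminator D tries to score real samples x high and generated samples G(z) low, while the generator G tries to fool it. The notation (p_data for the real-data distribution, p_z for the random-noise prior) is the standard textbook one, not something taken from the DIVID work:

```latex
\min_{G}\max_{D}\; V(D,G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The "continuous feedback" is this tug-of-war: D is updated to increase V, and G is updated to decrease it.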

A new generation of generative AI video tools, such as OpenAI’s Sora, Runway Gen-2, and Pika, use diffusion models to create videos. A diffusion model is an AI technique that creates images and videos by gradually converting random noise into clear, realistic pictures. For video, it refines each frame individually while keeping the transitions between frames smooth, producing high-quality, realistic results. These increasingly sophisticated AI-generated videos pose a significant challenge for authenticity detection.
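As a rough illustration of "gradually converting random noise into clear pictures", here is the textbook DDPM formulation (Ho et al., 2020). The video tools named above do not publish their exact formulations, so treat this as a generic sketch of diffusion models, not as how Sora, Runway Gen-2, or Pika work. Given a noise schedule beta_t, the forward process corrupts a clean frame x_0 with Gaussian noise, and a trained noise-prediction network epsilon_theta undoes it one step at a time:

```latex
\alpha_t = 1 - \beta_t, \qquad \bar\alpha_t = \prod_{s=1}^{t} \alpha_s

q(x_t \mid x_0) = \mathcal{N}\!\big(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\,\mathbf{I}\big)

x_{t-1} = \frac{1}{\sqrt{\alpha_t}}\Big(x_t - \frac{\beta_t}{\sqrt{1-\bar\alpha_t}}\,\epsilon_\theta(x_t, t)\Big) + \sigma_t z,
\qquad z \sim \mathcal{N}(0, \mathbf{I})
```

Sampling starts from pure noise x_T drawn from N(0, I) and applies the reverse step for t = T, ..., 1, with z set to 0 on the final step.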

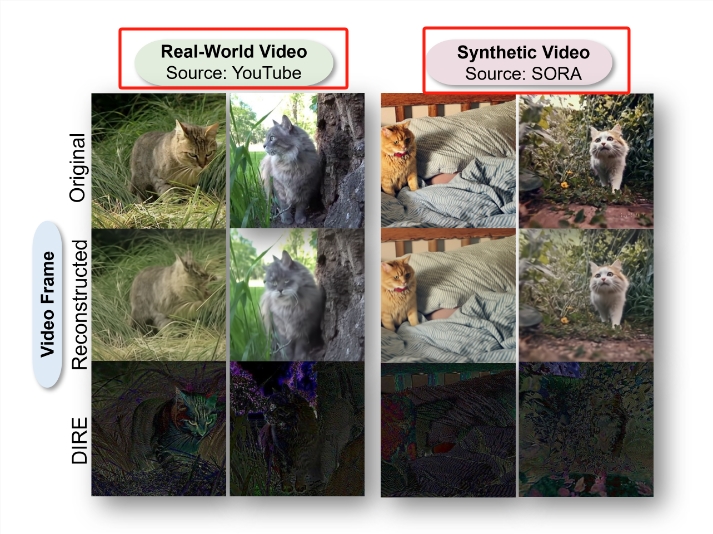

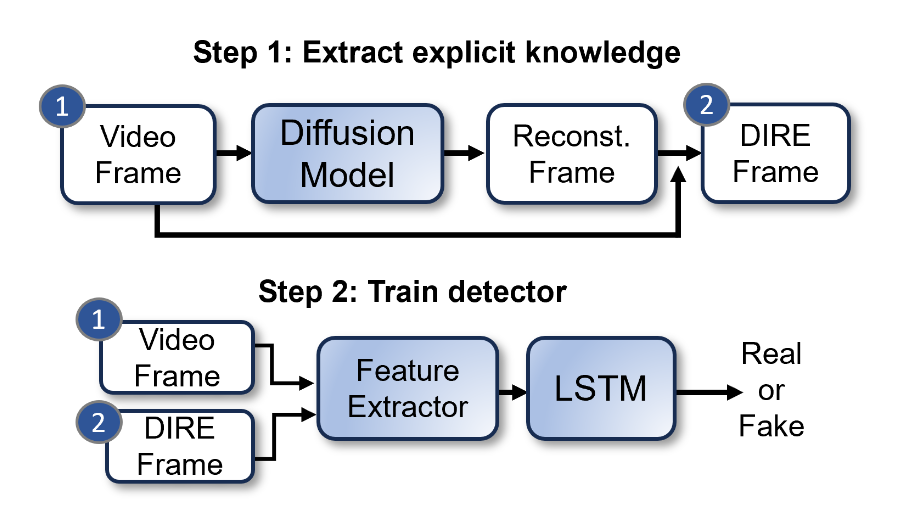

Junfeng Yang's team used a technique called DIRE (DIffusion Reconstruction Error) to detect diffusion-generated images. DIRE measures the difference between an input image and the corresponding image reconstructed by a pretrained diffusion model.
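The DIRE idea fits in a few lines. In the original DIRE formulation, the image is inverted into noise with DDIM and regenerated with the same pretrained diffusion model, giving `DIRE(x) = |x - R(I(x))|`, and a classifier is then trained on these error maps. The sketch below makes two assumptions: images are flattened lists of grayscale values in [0, 1], and `toy_reconstruct` is a hypothetical stand-in (a simple smoothing filter) for the diffusion-model round trip, which is far too large to include here.

```python
from typing import Callable, List, Sequence

# A frame is modeled as a flattened list of grayscale pixel values in [0, 1].
Reconstructor = Callable[[Sequence[float]], List[float]]


def dire_map(image: Sequence[float], reconstruct: Reconstructor) -> List[float]:
    """Per-pixel reconstruction error |x - R(I(x))|.

    `reconstruct` stands for the full invert-then-regenerate round trip
    through a pretrained diffusion model.
    """
    recon = reconstruct(image)
    if len(recon) != len(image):
        raise ValueError("reconstruction must match the input size")
    return [abs(x - r) for x, r in zip(image, recon)]


def dire_score(image: Sequence[float], reconstruct: Reconstructor) -> float:
    """Mean of the DIRE map: one number summarizing reconstruction error."""
    errors = dire_map(image, reconstruct)
    return sum(errors) / len(errors)


def toy_reconstruct(image: Sequence[float]) -> List[float]:
    """HYPOTHETICAL stand-in for a diffusion model: a 3-tap moving average.

    Smooth inputs pass through it unchanged; detailed inputs do not.
    """
    n = len(image)
    out = []
    for i in range(n):
        window = image[max(0, i - 1):min(n, i + 2)]
        out.append(sum(window) / len(window))
    return out


if __name__ == "__main__":
    smooth = [0.5] * 8          # reproduced exactly, so the error is 0.0
    detailed = [0.0, 1.0] * 4   # altered by smoothing, so the error is larger
    print(dire_score(smooth, toy_reconstruct))
    print(dire_score(detailed, toy_reconstruct))
```

Under the DIRE intuition, a lower score means the diffusion model could reproduce the input more faithfully, which points toward a generated image; in practice the error maps feed a trained classifier rather than a fixed cutoff.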

Junfeng Yang, co-director of the Software Systems Lab, has been exploring how to detect AI-generated text and video. With Raidar, released earlier this year, Yang and his collaborators introduced a way to detect AI-generated text by analyzing the text itself, without accessing the inner workings of large language models such as GPT-4, Gemini, or Llama. Raidar uses a language model to rephrase or modify a given text and then measures how many edits the system makes to it. Many edits suggest the text was probably written by a human, while few edits suggest it was probably machine-generated.
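The edit-counting heuristic can be illustrated with a character-level Levenshtein distance. This is a minimal sketch under stated assumptions: `rewrite` is a hypothetical placeholder for the call that asks a language model to rephrase the text, and normalizing by text length with a 0.1 threshold is an illustrative choice, not a value taken from Raidar.

```python
from typing import Callable


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def edit_ratio(text: str, rewrite: Callable[[str], str]) -> float:
    """Edits the rewriter made, normalized by the original text length."""
    return levenshtein(text, rewrite(text)) / max(len(text), 1)


def looks_machine_generated(text: str, rewrite: Callable[[str], str],
                            threshold: float = 0.1) -> bool:
    """Few edits means the rewriter 'liked' the text: probably machine-generated."""
    return edit_ratio(text, rewrite) < threshold


if __name__ == "__main__":
    # Hypothetical rewriters standing in for a real LLM call.
    print(looks_machine_generated("hello world", lambda t: t))  # True
    print(looks_machine_generated("hello world", str.upper))    # False
```

The first rewriter leaves the text untouched (zero edits), so the text is flagged as machine-generated; the second changes 10 of 11 characters, so it is not.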

“Raidar’s heuristic, that one AI generally perceives another AI’s output to be high quality and therefore makes fewer edits, is a very powerful insight, and it is not limited to text,” said Junfeng Yang. “Given that AI-generated videos are becoming more and more realistic, we wanted to use Raidar’s insight to create a tool that can accurately detect AI-generated videos.”

The researchers developed DIVID using the same concept: the new method identifies videos generated by diffusion models. The research paper was presented at the Conference on Computer Vision and Pattern Recognition (CVPR) in Seattle on June 18, 2024, and the open-source code and dataset were released at the same time.
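To make "the same concept" concrete for video, one could score a clip by the reconstruction error of its frames and treat a low average error, like a low edit count in Raidar, as a sign of diffusion generation. This is a hypothetical sketch, not how DIVID itself is built (the paper and its released code are the authority): frames are flattened lists of pixel values, `reconstruct` is a placeholder for a pretrained diffusion model's round trip, and both the mean aggregation and the 0.05 threshold are illustrative assumptions.

```python
from statistics import mean
from typing import Callable, List, Sequence

Frame = Sequence[float]  # a flattened frame, pixel values in [0, 1]
Reconstructor = Callable[[Frame], List[float]]


def frame_error(frame: Frame, reconstruct: Reconstructor) -> float:
    """Mean absolute difference between a frame and its reconstruction."""
    recon = reconstruct(frame)
    return mean(abs(x - r) for x, r in zip(frame, recon))


def video_error(frames: Sequence[Frame], reconstruct: Reconstructor) -> float:
    """Average the per-frame reconstruction error over the whole clip."""
    return mean(frame_error(f, reconstruct) for f in frames)


def flag_as_generated(frames: Sequence[Frame], reconstruct: Reconstructor,
                      threshold: float = 0.05) -> bool:
    """HYPOTHETICAL rule: small reconstruction error suggests diffusion generation."""
    return video_error(frames, reconstruct) < threshold


if __name__ == "__main__":
    clip = [[0.1, 0.4, 0.7], [0.2, 0.5, 0.8]]  # two tiny 3-pixel "frames"
    # `list` acts as a perfect reconstructor; the lambda alters every pixel.
    print(flag_as_generated(clip, list))                                   # True
    print(flag_as_generated(clip, lambda f: [min(1.0, x + 0.2) for x in f]))  # False
```

Averaging over frames keeps a single badly reconstructed frame from dominating the verdict; a real detector would also need to account for motion between frames, which this sketch ignores.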

Paper: https://arxiv.org/abs/2406.09601

Highlights:

- In response to increasingly realistic AI-generated videos, researchers at Columbia University School of Engineering have developed a new tool, DIVID, that can detect AI-generated videos with 93.7% accuracy.

- DIVID improves on previous detection methods by targeting a new generation of AI-generated videos: it can identify videos produced by diffusion models, which gradually transform random noise into high-quality, realistic video frames.

- The researchers extended Raidar’s insight from AI-generated text to video: a model rewrites the text or reconstructs the video, and the amount of change it makes (the number of edits for text, the reconstruction error for video) is used to judge whether the content is authentic.

In short, DIVID provides a new weapon against misinformation spread through AI-generated videos. The release of its open-source code and dataset should also encourage further research in this field and help build a safer, more trustworthy online environment.