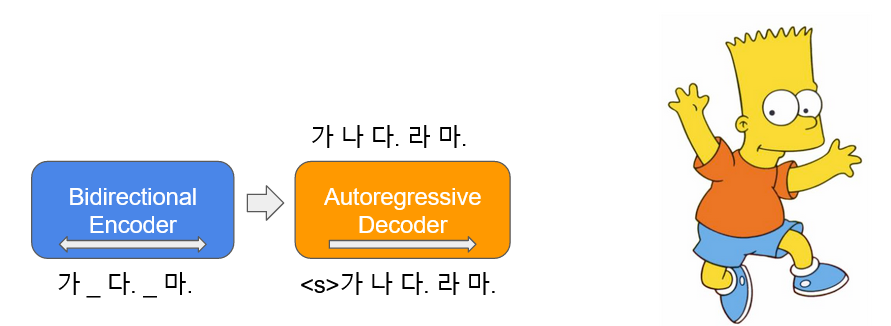

BART ( B IDIRECTIONAL AND A UTO- R EGRERASSIVE T RANSFORMERS) is learned in the form of autoencoder , which adds noise to some of the input text and restores it as an original text. Korean BART ( KOBART ) is a Korean encoder-decoder language model that has been learned about Korean text of 40GB or more using Text Infilling noise function used in the paper. This distributes the derived KoBART-base .

pip install git+https://github.com/SKT-AI/KoBART#egg=kobart| Data | # of sentences |

|---|---|

| Korean wiki | 5M |

| Other Corpus | 0.27b |

In addition to Korean Wikipedia, various data such as news, books, and all of the horses of v1.0 (conversation, news, ...) were used for model learning.

Learned with Character BPE tokenizer in the tokenizers Package.

vocab size is 30,000 and added emoticons and emoji, which are often used for conversations, and the recognition ability of the token is raised.

?,

:),?,(-:-):-)

In addition, we have defined unused tokens such as <unused0> to <unused99> so that they can be freely defined according to the necessary subtasks .

> >> from kobart import get_kobart_tokenizer

> >> kobart_tokenizer = get_kobart_tokenizer ()

> >> kobart_tokenizer . tokenize ( "안녕하세요. 한국어 BART 입니다.?:)l^o" )

[ '▁안녕하' , '세요.' , '▁한국어' , '▁B' , 'A' , 'R' , 'T' , '▁입' , '니다.' , '?' , ':)' , 'l^o' ]| Model | # of params | Type | # of layers | # of heads | ffn_dim | hidden_dims |

|---|---|---|---|---|---|---|

KoBART-base | 124m | Encoder | 6 | 16 | 3072 | 768 |

| Decoder | 6 | 16 | 3072 | 768 |

> >> from transformers import BartModel

> >> from kobart import get_pytorch_kobart_model , get_kobart_tokenizer

> >> kobart_tokenizer = get_kobart_tokenizer ()

> >> model = BartModel . from_pretrained ( get_pytorch_kobart_model ())

> >> inputs = kobart_tokenizer ([ '안녕하세요.' ], return_tensors = 'pt' )

> >> model ( inputs [ 'input_ids' ])

Seq2SeqModelOutput ( last_hidden_state = tensor ([[[ - 0.4418 , - 4.3673 , 3.2404 , ..., 5.8832 , 4.0629 , 3.5540 ],

[ - 0.1316 , - 4.6446 , 2.5955 , ..., 6.0093 , 2.7467 , 3.0007 ]]],

grad_fn = < NativeLayerNormBackward > ), past_key_values = (( tensor ([[[[ - 9.7980e-02 , - 6.6584e-01 , - 1.8089e+00 , ..., 9.6023e-01 , - 1.8818e-01 , - 1.3252e+00 ],| NSMC (ACC) | KORSTS (Spearman) | Question Pair (ACC) | |

|---|---|---|---|

| ----------------------------------------- | |||

| Kobart-base | 90.24 | 81.66 | 94.34 |

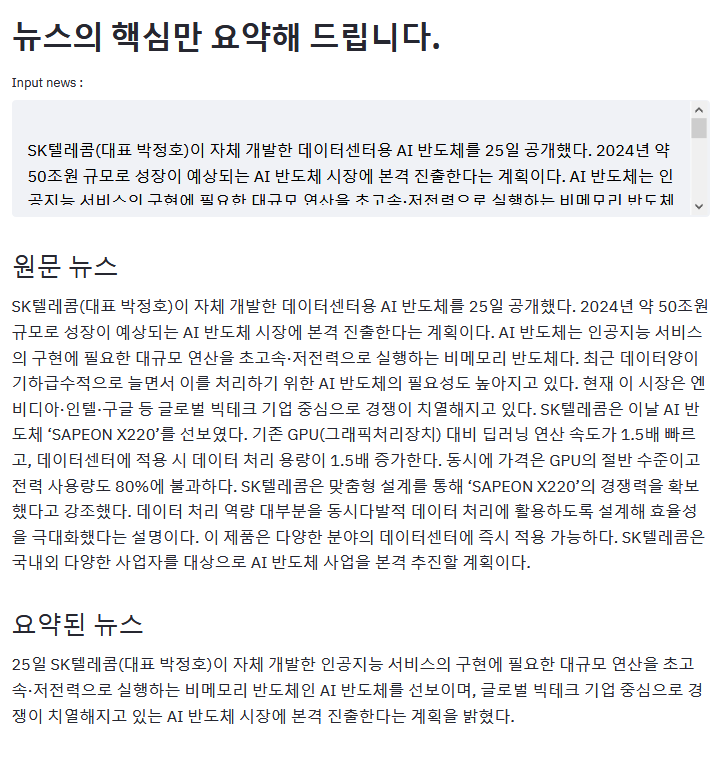

The above example is the result of summarizing the zdnet article.

If you have an interesting example using KOBART, please PR!

aws s3<unk> token disappearing due to the talk nicer bugKoBART model update (SAMPLE EFFICENT improves)모두의 말뭉치pip installation support Please upload KoBART -related issues here.

KoBART is released under modified MIT license. If you are using models and code, please follow the license content. License specialists can be found in LICENSE file.