This project implements Korean TTS by combining the Tacotron2 model with a vocoder (Griffin-Lim, WaveNet, or MelGAN).
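The vocoder turns a predicted spectrogram into a waveform. Griffin-Lim is the only option in that list with no trained parameters. It starts from random phase and repeatedly alternates an inverse STFT with a forward STFT, keeping the target magnitude and updating only the phase. Below is a minimal NumPy sketch of that iteration. It assumes a linear-magnitude spectrogram, n_fft=1024, hop=256, and a periodic Hann window. These values and the function names are illustrative and are not taken from this project's code or settings.

```python
import numpy as np

N_FFT = 1024  # illustrative values, not this project's confirmed hyperparameters
HOP = 256


def _window(n_fft):
    # Periodic Hann window
    return np.hanning(n_fft + 1)[:-1]


def stft(y, n_fft=N_FFT, hop=HOP):
    """Centered complex STFT, shape (n_fft // 2 + 1, n_frames)."""
    w = _window(n_fft)
    y = np.pad(y, n_fft // 2, mode="reflect")
    n_frames = 1 + (len(y) - n_fft) // hop
    frames = np.stack([y[i * hop:i * hop + n_fft] * w for i in range(n_frames)], axis=1)
    return np.fft.rfft(frames, axis=0)


def istft(spec, length, n_fft=N_FFT, hop=HOP):
    """Inverse of stft() by windowed overlap-add, trimmed to `length` samples."""
    w = _window(n_fft)
    frames = np.fft.irfft(spec, n=n_fft, axis=0) * w[:, None]
    n_frames = frames.shape[1]
    total = n_fft + hop * (n_frames - 1)
    y = np.zeros(total)
    norm = np.zeros(total)
    for i in range(n_frames):
        y[i * hop:i * hop + n_fft] += frames[:, i]
        norm[i * hop:i * hop + n_fft] += w ** 2
    y /= np.maximum(norm, 1e-8)
    start = n_fft // 2  # undo the centering pad applied in stft()
    return y[start:start + length]


def griffin_lim(magnitude, length, n_iter=60, n_fft=N_FFT, hop=HOP, seed=0):
    """Estimate a waveform whose STFT magnitude approximates `magnitude`."""
    rng = np.random.default_rng(seed)
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))  # random initial phase
    for _ in range(n_iter):
        y = istft(magnitude * phase, length, n_fft, hop)
        # Keep the target magnitude; take the phase of the re-analyzed signal
        phase = np.exp(1j * np.angle(stft(y, n_fft, hop)))
    return istft(magnitude * phase, length, n_fft, hop)
```

Each iteration costs one STFT and one inverse STFT, so quality can be traded against runtime through `n_iter`.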

This project is based on:

https://github.com/tensorspeech/tensorflowtts

https://github.com/hccho2/tacotron2-korean-tts

https://carpedm20.github.io/tacotron/

Korean Single Speaker Speech (KSS)

Voice of actor Yoo In-na

Voice of pet trainer Kang Hyung-wook

The audio data used for training is not shared because of copyright issues. Please check each data source.

KSS: https://www.kaggle.com/bryanpark/korean-single-speaker-speech-dataset

KBS Radio: http://program.kbs.co.kr/2fm/radio/Uvolum/pc/index.html

Preprocessing converts each wav file into a numpy file.

Each file stores 'Audio', 'MEL', 'LINEAR', 'TEXT', and other arrays.

The output is written to Data/kss/<voice file name>.npz.

The mel-spectrogram and linear-spectrogram serve as the ground-truth training targets.
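A minimal sketch of this preprocessing step, using only NumPy and Python's `wave` module. It assumes mono 16-bit PCM input, n_fft=1024, hop=256, 80 mel bands on the HTK mel scale, and plain dB log compression. The lowercase keys `audio`, `mel`, `linear`, and `text`, the function names, and all parameter values are illustrative assumptions rather than this project's actual settings, and the real files hold further arrays beyond these four.

```python
import os
import wave

import numpy as np


def load_wav(path):
    """Read a mono 16-bit PCM wav file as float32 samples in [-1, 1)."""
    with wave.open(path, "rb") as f:
        if f.getnchannels() != 1 or f.getsampwidth() != 2:
            raise ValueError("expected mono 16-bit PCM")
        sr = f.getframerate()
        pcm = np.frombuffer(f.readframes(f.getnframes()), dtype="<i2")
    return pcm.astype(np.float32) / 32768.0, sr


def magnitude_spectrogram(y, n_fft, hop):
    """Time-major STFT magnitude, shape (n_frames, n_fft // 2 + 1)."""
    frames = np.lib.stride_tricks.sliding_window_view(y, n_fft)[::hop]
    return np.abs(np.fft.rfft(frames * np.hanning(n_fft), axis=1))


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters on the HTK mel scale, shape (n_mels, n_fft // 2 + 1)."""
    edges = mel_to_hz(np.linspace(0.0, hz_to_mel(sr / 2), n_mels + 2))
    bins = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    fb = np.zeros((n_mels, bins.size))
    for i in range(n_mels):
        lo, mid, hi = edges[i:i + 3]
        rising = (bins - lo) / (mid - lo)
        falling = (hi - bins) / (hi - mid)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def to_db(x):
    # Log compression with a floor (assumed; the project's normalization may differ)
    return (20.0 * np.log10(np.maximum(x, 1e-5))).astype(np.float32)


def preprocess(wav_path, text, out_dir, n_fft=1024, hop=256, n_mels=80):
    """Write <out_dir>/<wav file name>.npz holding audio, mel, linear and text."""
    audio, sr = load_wav(wav_path)
    linear = magnitude_spectrogram(audio, n_fft, hop)   # (T, n_fft // 2 + 1)
    mel = linear @ mel_filterbank(sr, n_fft, n_mels).T  # (T, n_mels)
    os.makedirs(out_dir, exist_ok=True)
    name = os.path.splitext(os.path.basename(wav_path))[0]
    out_path = os.path.join(out_dir, name + ".npz")
    np.savez(out_path, audio=audio, mel=to_db(mel), linear=to_db(linear), text=np.array(text))
    return out_path
```

For example, `preprocess("path/to/file.wav", transcript, "Data/kss")` would write `Data/kss/file.npz`. Both spectrograms are stored time-major, one row per frame.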

Four training configurations were run in total:

- Tacotron2 + Griffin-Lim + single speaker
- Tacotron2 + Griffin-Lim + multi-speaker (Deep Voice 2)
- Tacotron2 + MelGAN + single speaker
- Tacotron2 + MelGAN + multi-speaker (transfer learning)
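Both multi-speaker configurations need a way to tell the model which voice to produce. Deep Voice 2 does this with trainable speaker embeddings. The NumPy sketch below shows one common, simplified form: look up a per-speaker vector, project it, and concatenate it to every Tacotron2 encoder output step. The class name, dimensions, tanh nonlinearity, and single injection point are assumptions for illustration. Deep Voice 2 injects speaker embeddings at several points in the network, and this project's exact wiring is not described here.

```python
import numpy as np


class SpeakerConditioner:
    """Hypothetical helper: a speaker embedding table plus an affine projection.

    Weights are randomly initialized here; in a real model they would be
    trained jointly with Tacotron2.
    """

    def __init__(self, n_speakers, emb_dim=16, out_dim=32, seed=0):
        rng = np.random.default_rng(seed)
        self.table = rng.normal(0.0, 0.1, size=(n_speakers, emb_dim))
        self.weight = rng.normal(0.0, 0.1, size=(emb_dim, out_dim))
        self.bias = np.zeros(out_dim)

    def __call__(self, encoder_out, speaker_ids):
        """encoder_out: (batch, T_text, enc_dim); speaker_ids: (batch,) ints."""
        emb = self.table[speaker_ids]                   # (batch, emb_dim)
        s = np.tanh(emb @ self.weight + self.bias)      # (batch, out_dim)
        # Repeat the speaker vector at every encoder time step
        s = np.broadcast_to(s[:, None, :], encoder_out.shape[:2] + s.shape[-1:])
        return np.concatenate([encoder_out, s], axis=-1)
```

With two speakers (for instance, hypothetically, ID 0 for KSS and ID 1 for Yoo In-na), the decoder attends over encoder states that carry the chosen speaker's vector, so the same text can be rendered in either voice.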

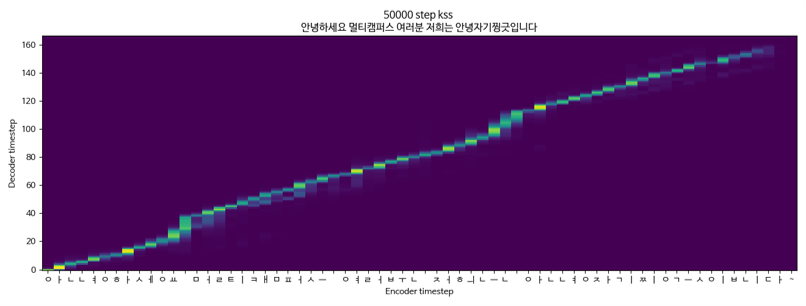

Tacotron2 + Griffin-Lim + multi-speaker (KSS + Yoo In-na), KSS data

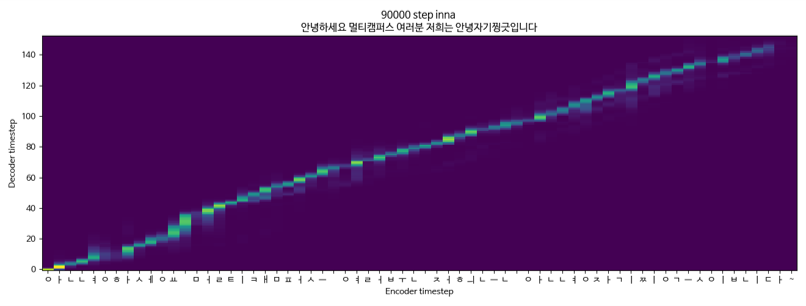

Tacotron2 + Griffin-Lim + multi-speaker (KSS + Yoo In-na)

Tacotron2 + MelGAN + single speaker (KSS)