Tengine

Introduction

Tengine is led by OPEN AI LAB and addresses the need for fast, efficient deployment of deep learning neural network models on embedded devices. To enable cross-platform deployment across many AIoT applications, the core modules are written in C, and the framework is deeply trimmed to fit the limited resources of embedded devices. In addition, the front end and back end are fully separated, which makes it easy to port to and deploy on heterogeneous computing units such as CPUs, GPUs, and NPUs, and reduces the cost of evaluation and migration.

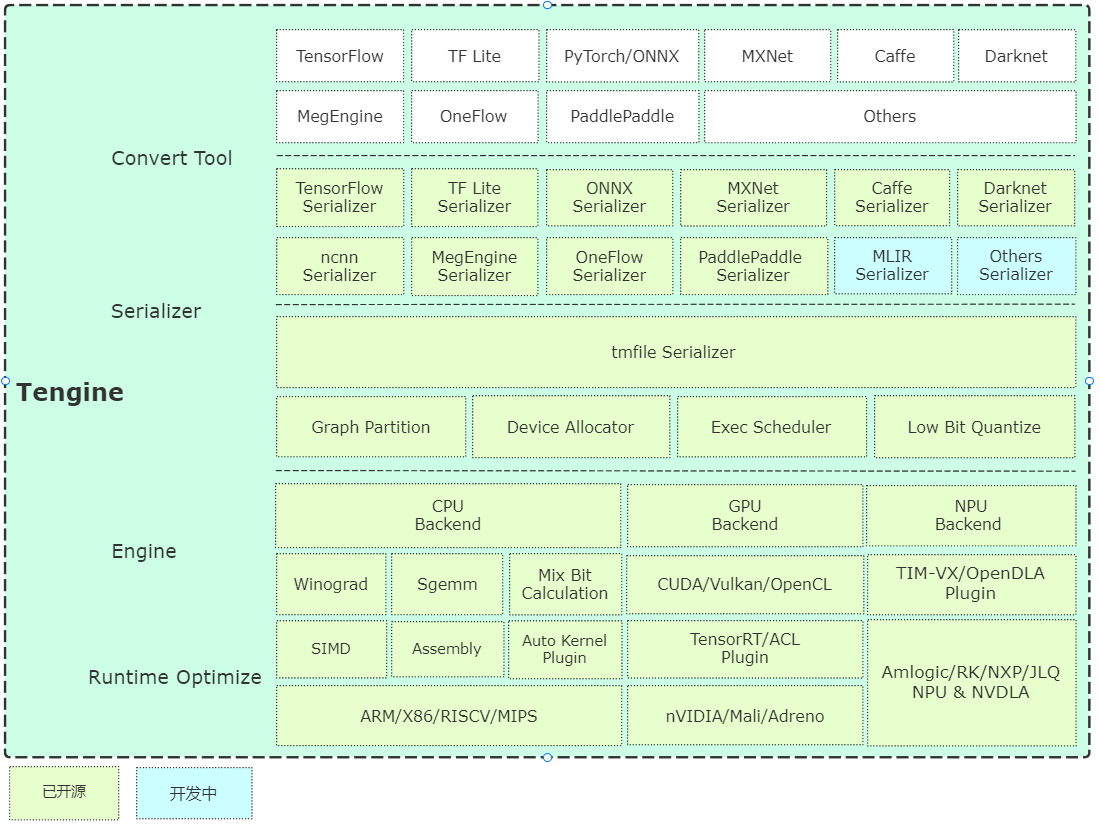

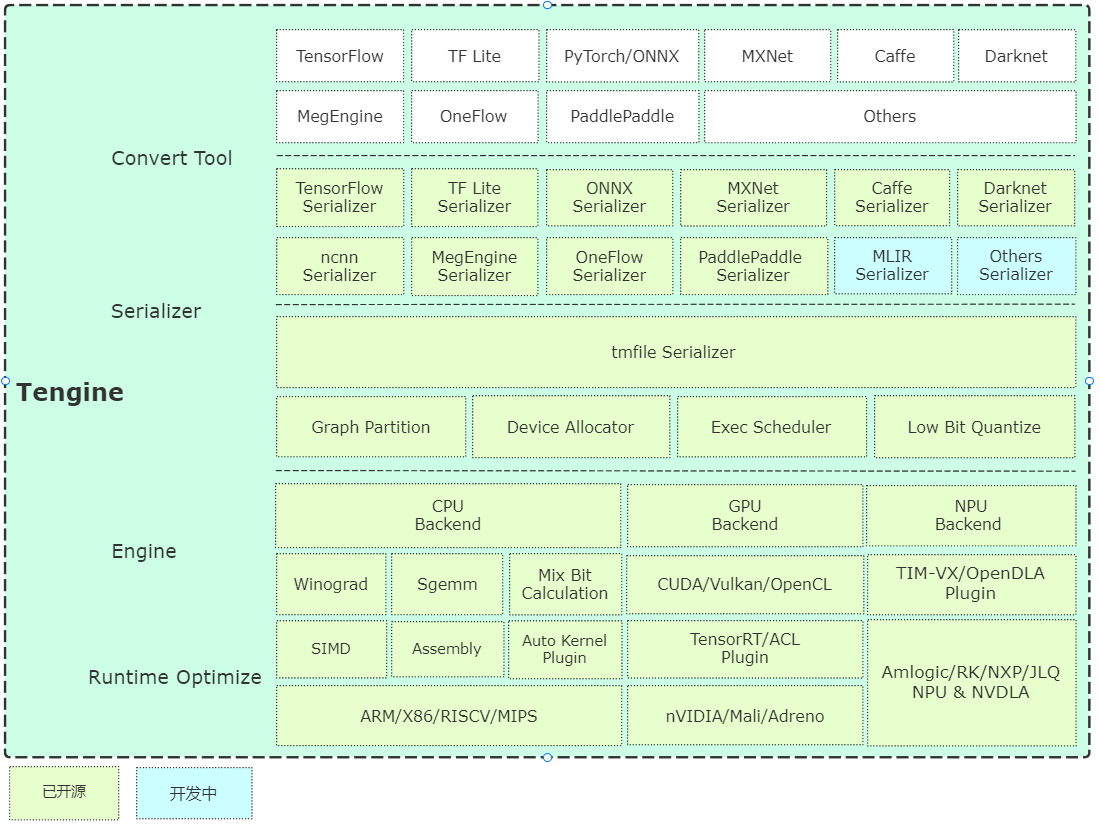

The Tengine core code consists of 4 modules:

- device: NN operator back-end module; reference implementations are provided for CPU, GPU, and NPU;

- scheduler: the core of the framework, including NNIR, the computation graph, hardware resources, and the scheduling and execution modules for the model parser;

- operator: NN operator front-end module; implements operator registration and initialization;

- serializer: model parser; parses network model parameters stored in the tmfile format.
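The split between an operator front end, a device back end, and a scheduler that binds them can be sketched in C as follows. Every type and function name here (`op_frontend_t`, `device_t`, `find_kernel`, `relu_ref`) is hypothetical and only illustrates the idea; none of them are Tengine's actual data structures or API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical illustration of the front-end / back-end split;
 * not Tengine's real structures. */

/* Front end: an operator is identified by its type name. */
typedef struct {
    const char *type;                 /* e.g. "ReLU" */
} op_frontend_t;

/* Back end: a device supplies kernels for the operator types it supports. */
typedef int (*op_kernel_fn)(const float *in, float *out, size_t n);

typedef struct {
    const char  *op_type;
    op_kernel_fn run;
} op_kernel_t;

typedef struct {
    const char        *name;          /* e.g. "cpu" */
    const op_kernel_t *kernels;
    size_t             n_kernels;
} device_t;

/* Reference CPU kernel for ReLU: out[i] = max(in[i], 0). */
static int relu_ref(const float *in, float *out, size_t n)
{
    for (size_t i = 0; i < n; i++)
        out[i] = in[i] > 0.0f ? in[i] : 0.0f;
    return 0;
}

static const op_kernel_t cpu_kernels[] = {
    { "ReLU", relu_ref },
};

static const device_t cpu_device = { "cpu", cpu_kernels, 1 };

/* Scheduler side: bind a front-end operator to a back-end kernel by type.
 * Returns NULL when the device has no implementation for that operator. */
static op_kernel_fn find_kernel(const device_t *dev, const op_frontend_t *op)
{
    assert(dev != NULL && op != NULL);
    for (size_t i = 0; i < dev->n_kernels; i++)
        if (strcmp(dev->kernels[i].op_type, op->type) == 0)
            return dev->kernels[i].run;
    return NULL;
}
```

In this sketch, supporting a new accelerator means supplying another `device_t` with its own kernel table while the operator definitions stay untouched; the `NULL` return for an unsupported operator leaves room for a scheduler to try another device.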

Architecture overview

Quick start

Compilation

- Quick Compilation: simple cross-platform builds based on CMake.

Example

- Examples: basic classification and detection use cases, continuously updated based on requests raised in issues.

- Source installation: install and try Tengine on Ubuntu from the command line with apt-get; x86 and A311D hardware are currently supported.

Model zoo

Conversion Tool

- Precompiled version: prebuilt model conversion tools for Ubuntu 18.04;

- Online conversion version: implemented with WebAssembly; the conversion runs locally in the browser, and the model is not uploaded;

- Source code compilation: building on a server or PC is recommended, using the following commands:

```shell
# Run from the root of the Tengine source tree
mkdir build && cd build
cmake -DTENGINE_BUILD_CONVERT_TOOL=ON ..
make -j$(nproc)
```

Quantization Tools

- Source code compilation: the source code of the quantization tool is open and supports uint8 and int8.
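For reference, a common asymmetric (affine) scheme for uint8 maps a float x to q = round(x / scale) + zero_point, with the scale and zero point derived from the observed value range. The C sketch below shows that mapping. It is a generic illustration under that assumption, not the algorithm implemented by Tengine's quantization tool, and the names `quant_params_t`, `choose_uint8_params`, `quantize_u8`, and `dequantize_u8` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Asymmetric uint8 quantization: real ~= scale * (q - zero_point).
 * Generic illustration only; all names are hypothetical. */
typedef struct {
    float   scale;
    int32_t zero_point;
} quant_params_t;

/* Round half away from zero without depending on libm. */
static long round_half_away(float v)
{
    return (long)(v >= 0.0f ? v + 0.5f : v - 0.5f);
}

/* Derive parameters from an observed range [min_val, max_val].
 * The range is widened to include 0 so that 0.0f maps to an exact code. */
static quant_params_t choose_uint8_params(float min_val, float max_val)
{
    assert(min_val <= max_val);
    if (min_val > 0.0f) min_val = 0.0f;
    if (max_val < 0.0f) max_val = 0.0f;

    quant_params_t p;
    p.scale = (max_val - min_val) / 255.0f;
    if (p.scale == 0.0f)          /* all-zero range: any positive scale works */
        p.scale = 1.0f;
    p.zero_point = (int32_t)round_half_away(-min_val / p.scale);
    return p;
}

/* Quantize one value, saturating to the uint8 range [0, 255]. */
static uint8_t quantize_u8(float x, quant_params_t p)
{
    long q = round_half_away(x / p.scale) + p.zero_point;
    if (q < 0)   q = 0;
    if (q > 255) q = 255;
    return (uint8_t)q;
}

/* Map a uint8 code back to an approximate float value. */
static float dequantize_u8(uint8_t q, quant_params_t p)
{
    return p.scale * (float)((int32_t)q - p.zero_point);
}
```

For a range of [-1.0, 3.0], this yields a scale of 4/255 and a zero point of 64, so 0.0f is stored as the code 64 and decodes back to exactly 0.0f. Keeping zero exactly representable matters for values such as zero padding.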

Speed Assessment

- Benchmark: a basic tool for assessing the inference speed of networks; contributions are welcome.
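Speed measurements of this kind usually discard a few warm-up runs and then average the latency over many timed iterations. A minimal C sketch of such a timing loop follows. `bench_avg_ms` and `dummy_workload` are hypothetical names, and a real benchmark would call the network's inference function in place of the stand-in workload. The sketch uses C11 `timespec_get`, which reads wall-clock time; a monotonic clock such as POSIX `clock_gettime` with `CLOCK_MONOTONIC` is preferable where available.

```c
#include <assert.h>
#include <stddef.h>
#include <time.h>

/* Signature of the work being timed (hypothetical). */
typedef void (*run_fn)(void *ctx);

/* Current wall-clock time in milliseconds via C11 timespec_get. */
static double now_ms(void)
{
    struct timespec ts;
    timespec_get(&ts, TIME_UTC);
    return (double)ts.tv_sec * 1e3 + (double)ts.tv_nsec / 1e6;
}

/* Run `warmup` untimed iterations, then return the mean latency in ms
 * over `iters` timed iterations. */
static double bench_avg_ms(run_fn run, void *ctx, int warmup, int iters)
{
    assert(run != NULL && warmup >= 0 && iters > 0);
    for (int i = 0; i < warmup; i++)
        run(ctx);                 /* warm caches, allocators, thread pools */
    double start = now_ms();
    for (int i = 0; i < iters; i++)
        run(ctx);
    return (now_ms() - start) / iters;
}

/* Stand-in workload for illustration: counts how often it is called.
 * A real benchmark would invoke the network's inference call here. */
static void dummy_workload(void *ctx)
{
    volatile long *calls = ctx;
    (*calls)++;
}
```

Averaging over many iterations smooths out scheduler noise, while excluding the warm-up runs keeps one-time setup costs out of the reported latency.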

NPU Plugin

- TIM-VX VeriSilicon NPU User Guide.

AutoKernel Plugin

- AutoKernel is an easy-to-use automatic operator optimization tool with a low barrier to entry. The AutoKernel Plugin deploys automatically optimized operators into Tengine with a single click.

Container

- SuperEdge: provides more convenient service management by building on SuperEdge, an open-source edge computing container management system;

- How to use Tengine with SuperEdge: container usage guide;

- Video Capture: user manual, plus a guide to generating the dependency files for the demo.

Roadmap

Acknowledgements

Tengine Lite references and draws on the following projects:

- Caffe

- TensorFlow

- MegEngine

- ONNX

- ncnn

- FeatherCNN

- MNN

- Paddle Lite

- ACL

- stb

- convertmodel

- TIM-VX

- SuperEdge

License

Clarification Note

- [Online reporting function] The online reporting function collects Tengine usage information, which is used to optimize and iterate on Tengine; it does not affect any normal functionality. The function is on by default. To turn it off, change the following option in the top-level CMakeLists.txt: `OPTION (TENGINE_ONLINE_REPORT "online report" OFF)`, or pass `-DTENGINE_ONLINE_REPORT=OFF` when running cmake.

FAQ

Technical discussion