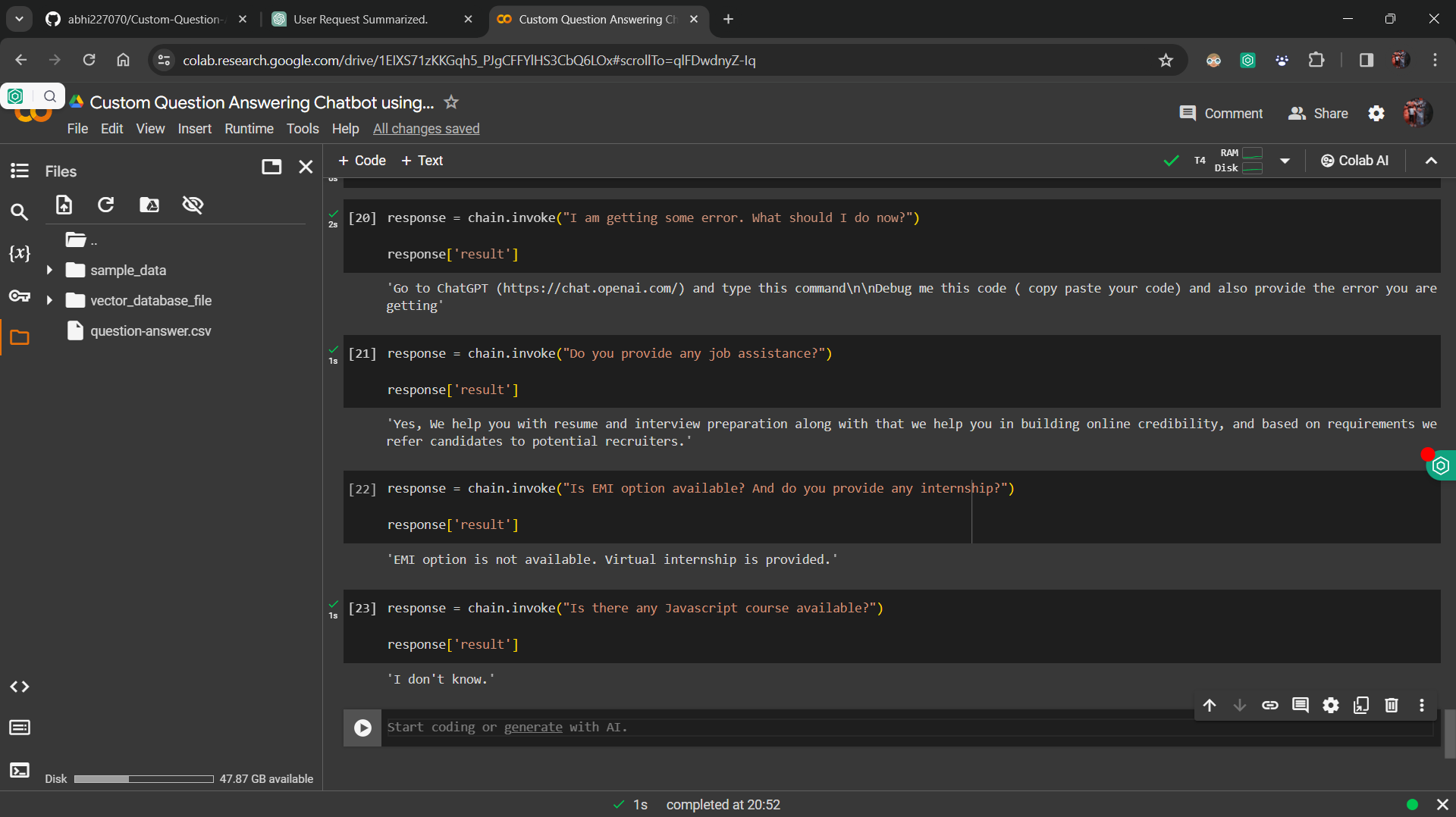

This project implements a custom question answering chatbot using LangChain and the Google Gemini large language model (LLM). The chatbot is trained on industrial data from an online learning platform, consisting of questions and their corresponding answers.

The project workflow involves the following steps:

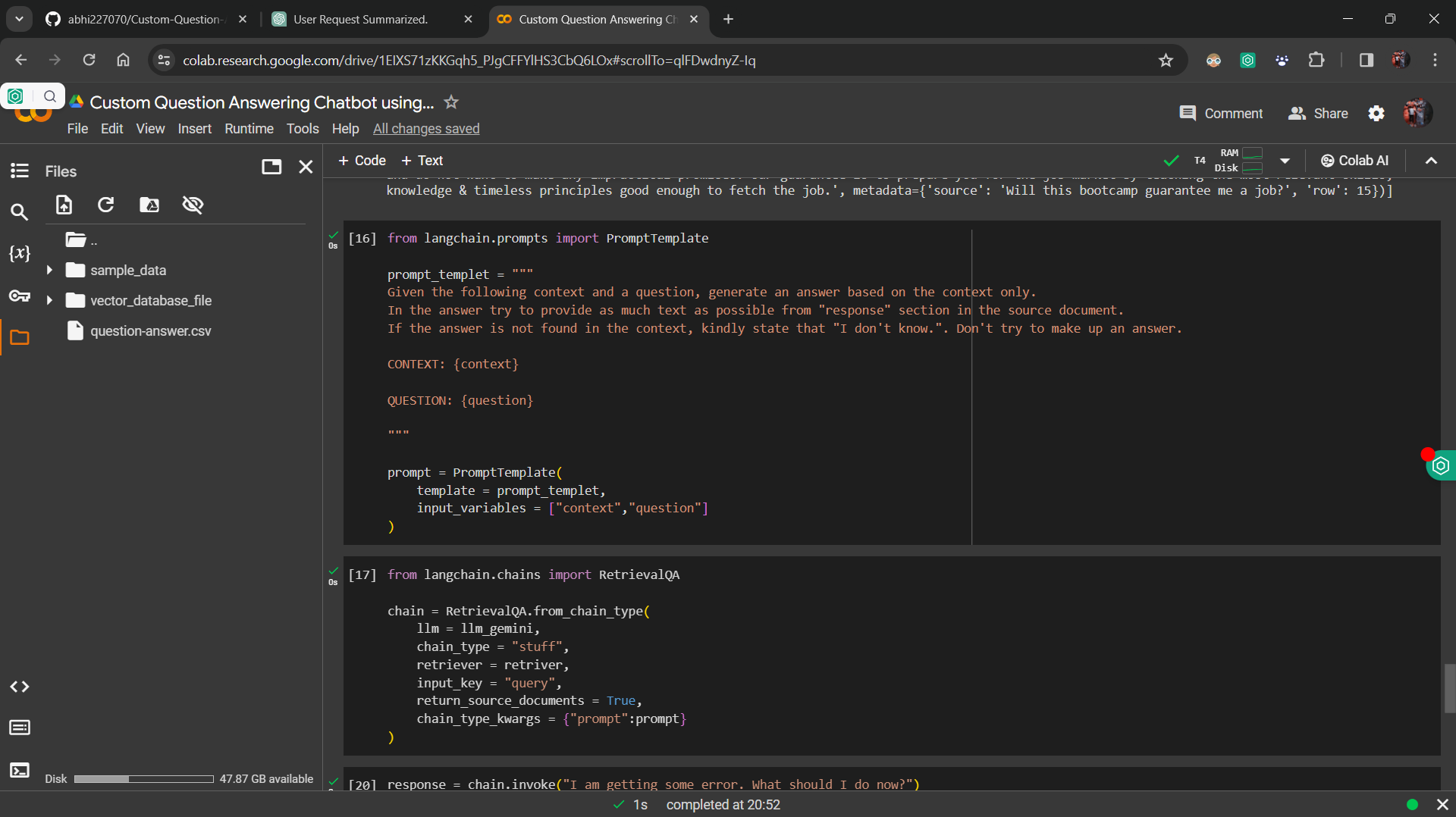

Data Fine-Tuning: The Google Gemini LLM is fine-tuned with the industrial data, ensuring that the model can accurately answer questions based on the provided context.

Embedding and Vector Database: Hugging Face sentence embeddings convert the questions and answers into vectors, which are stored in a vector database.

Retriever Implementation: A retriever component fetches the vectors most similar to the user's query from the vector database.

Integration with LangChain RetrievalQA Chain: The components are combined using LangChain's RetrievalQA chain, which processes incoming queries and retrieves relevant answers.

User Interface: Streamlit is used to create a simple user interface, allowing users to input their questions and receive answers from the chatbot.
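The embedding, retrieval, and chain steps above can be illustrated with a small library-free sketch. It is not the project's code: the bag-of-words `embed` function stands in for the Hugging Face sentence embeddings, the in-memory `INDEX` list stands in for the vector database, `echo_llm` stands in for Gemini, and the `FAQ` entries are made-up sample data. All of these names are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical sample data standing in for the platform's question/answer pairs.
FAQ = [
    ("Do you offer a refund?", "Refunds are available within 30 days of purchase."),
    ("Is there a certificate on completion?", "Yes, a certificate is issued when you finish a course."),
    ("Can I access the course on mobile?", "Yes, courses work in any modern mobile browser."),
]

# Very small stop-word list so common words do not dominate the similarity score.
STOP_WORDS = {"a", "an", "the", "is", "i", "do", "you", "can",
              "on", "of", "in", "there", "are", "to"}


def embed(text):
    """Toy bag-of-words embedding; a stand-in for a real sentence-embedding model."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(t for t in tokens if t not in STOP_WORDS)


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Stand-in "vector database": each entry keeps the vector next to its source text.
INDEX = [(embed(q + " " + a), q, a) for q, a in FAQ]


def retrieve(query, k=2):
    """Return the k question/answer pairs whose vectors are closest to the query."""
    query_vec = embed(query)
    ranked = sorted(INDEX, key=lambda entry: cosine(query_vec, entry[0]), reverse=True)
    return [(q, a) for _, q, a in ranked[:k]]


def build_prompt(query, docs):
    """Place the retrieved pairs into the prompt as context for the LLM."""
    context = "\n".join(f"Q: {q}\nA: {a}" for q, a in docs)
    return ("Answer the question using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


def answer(query, llm, k=2):
    """Retrieve context, build the prompt, and pass it to the supplied LLM callable."""
    return llm(build_prompt(query, retrieve(query, k)))


def echo_llm(prompt):
    """Stub LLM that returns the prompt, so the flow can be inspected offline."""
    return prompt


if __name__ == "__main__":
    print(answer("Can I get a refund?", echo_llm, k=1))
```

In a real setup, `llm` would be a call to the Gemini model and `retrieve` would query the vector database; the control flow (embed the query, rank stored vectors by similarity, pass the best matches to the model as context) would stay roughly the same.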

To run the project locally, follow these steps:

1. Install the dependencies listed in the `requirements.txt` file.
2. Run `streamlit run app.py` in your terminal.

The custom question answering chatbot serves various purposes, including:

This project is licensed under the MIT License.