AI Assistant

This project is an attempt to learn how to take advantage of LLMs to build a better product.

- Doc summarizer (done)

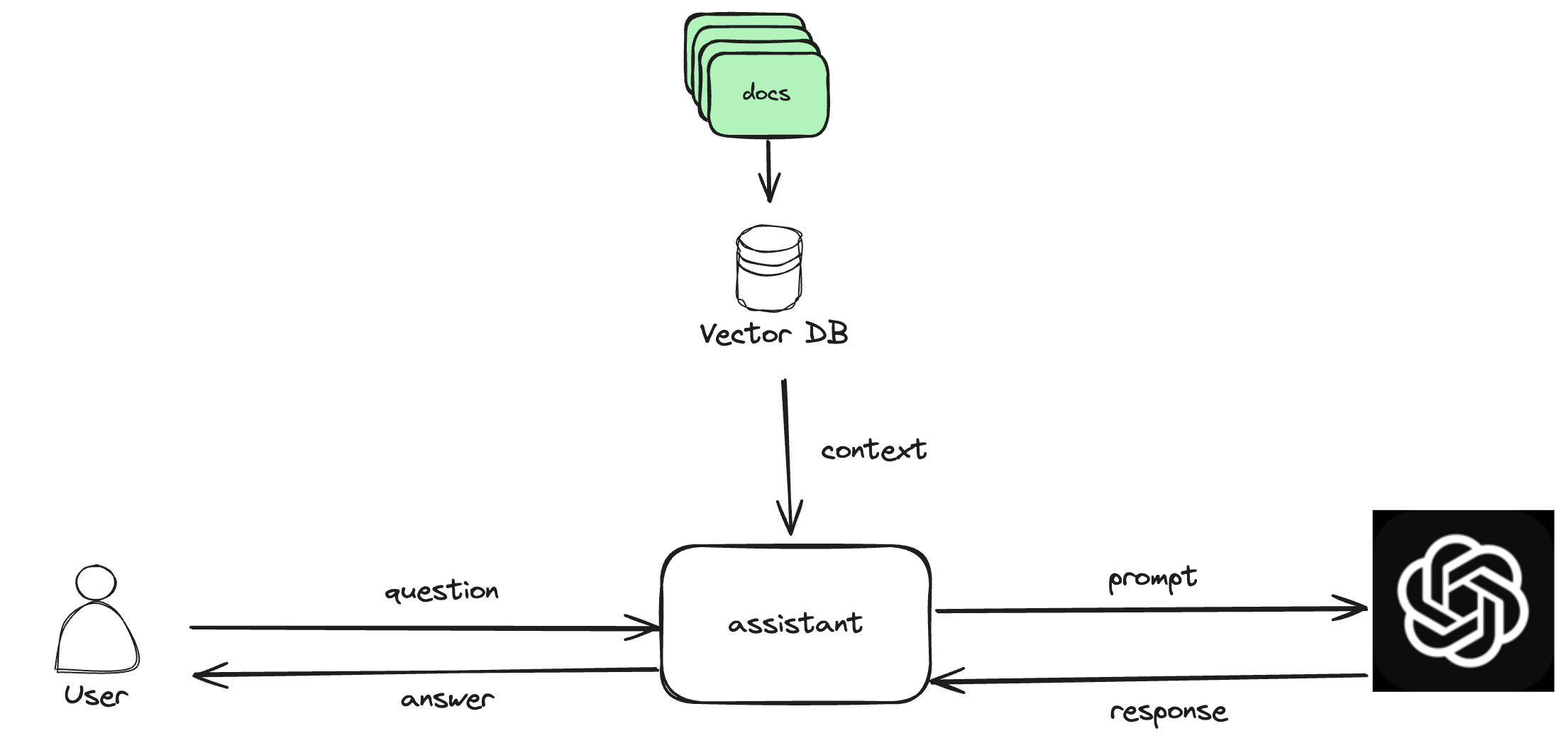

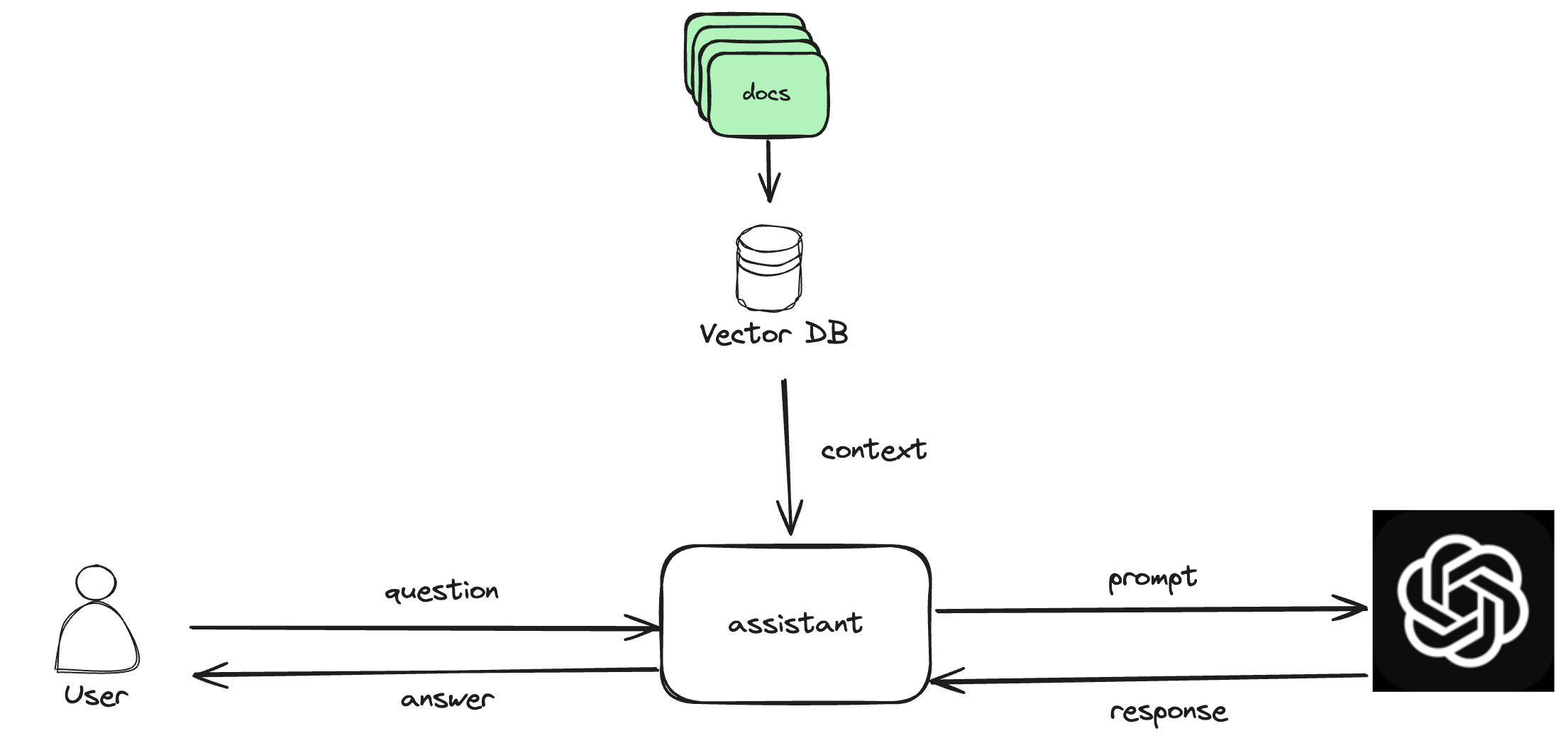

The user uploads documents, or preprocesses them locally, and they are stored in a vector database. When the user asks a question, the query is first sent to the vector database, which returns relevant context using similarity search. The question and the context are then passed to the LLM with a prompt that instructs it to answer only from the provided context. Chat history is also supported, so answers stay relevant within a particular session.
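The flow described above can be sketched in plain Python. This is a minimal illustration, not the project's actual code: `ToyVectorStore`, `embed`, and `build_prompt` are hypothetical names, the bag-of-words embedding stands in for a real embedding model, and the in-memory list stands in for ChromaDB. The final call to the LLM (Google Gemini in this project) is left out; the sketch stops at the prompt that would be sent.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words embedding (assumption: stands in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database such as ChromaDB (hypothetical class)."""

    def __init__(self):
        self.items = []  # list of (chunk_text, embedding) pairs

    def add(self, chunk):
        self.items.append((chunk, embed(chunk)))

    def similarity_search(self, query, k=2):
        """Return the k stored chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question, context_chunks, history):
    """Combine retrieved context, chat history, and the question into one prompt."""
    context = "\n".join(context_chunks)
    past = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in history)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Chat history:\n{past}\n\n"
        f"Question: {question}"
    )


# Index a few document chunks (made-up sample text).
store = ToyVectorStore()
for chunk in [
    "Invoices are due within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
]:
    store.add(chunk)

# One earlier turn from the same session, then a new question.
history = [("What is this document about?", "It describes company policies.")]
question = "When are invoices due?"

top_chunks = store.similarity_search(question, k=1)
prompt = build_prompt(question, top_chunks, history)
print(prompt)  # this string is what would be passed to the LLM
```

Restricting the prompt to the retrieved chunks is what keeps the answer grounded in the uploaded documents, and including the earlier turns lets follow-up questions refer back to the same session.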

- Voice Assistant (todo)

- Search Engine (todo)

Installation

To set up the AI Assistant, follow these steps:

- Install required dependencies:

pip install -r requirements.txt

- Obtain an API key from Google and copy it into the .env file

- You can also convert existing docs locally without uploading using

- Launch the application:

TechStack

- LangChain

- ChromaDB

- Streamlit

- LLM - Google Gemini
