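from simpleaichat import AIChat
ai = AIChat(system="Write a fancy GitHub README based on the user-provided project name.")
ai("simpleaichat")

simpleaichat is a Python package for easily interfacing with chat apps like ChatGPT and GPT-4, offering robust features with minimal code complexity. The tool has many features optimized for working with ChatGPT as fast and as cheaply as possible, while still being capable of more modern AI tricks than most implementations: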

Here are some fun, hackable examples of how simpleaichat works:

simpleaichat can be installed from PyPI:

pip3 install simpleaichat

You can demo chat apps very quickly with simpleaichat! First, you will need to get an OpenAI API key, and then with one line of code:

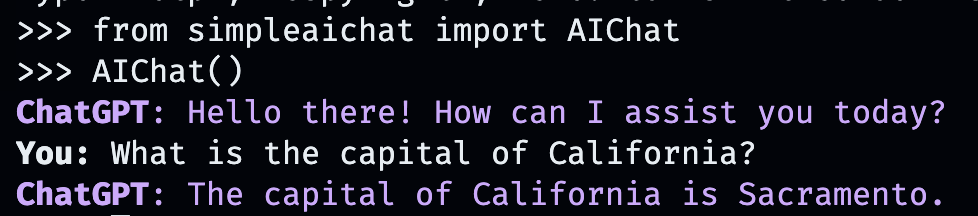

from simpleaichat import AIChat
AIChat(api_key="sk-...")

And with that, you'll be thrust directly into an interactive chat!

This AI chat will mimic the behavior of OpenAI's webapp, but on your local computer!

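You can also pass the API key by storing it in a .env file with an OPENAI_API_KEY field in the working directory (recommended), or by setting the OPENAI_API_KEY environment variable directly to the API key.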
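The key-lookup options above can be sketched with the standard library alone. This is a minimal illustration, not simpleaichat's actual loader: the helper resolve_api_key is hypothetical, the .env parsing is deliberately simplistic (one KEY=value per line, # comments, optional quotes), and the precedence order (explicit key, then environment variable, then .env file) is an assumption.

```python
import os
from pathlib import Path
from typing import Optional


def read_dotenv(path: Path) -> dict:
    """Parse a minimal .env file: KEY=value lines, '#' comments, optional quotes."""
    values = {}
    if not path.is_file():
        return values
    for raw_line in path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Strip surrounding whitespace and one layer of matching quotes.
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def resolve_api_key(api_key: Optional[str] = None, workdir: str = ".") -> Optional[str]:
    """Hypothetical helper: explicit key, then OPENAI_API_KEY env var, then ./.env file."""
    if api_key:
        return api_key
    from_env = os.environ.get("OPENAI_API_KEY")
    if from_env:
        return from_env
    return read_dotenv(Path(workdir) / ".env").get("OPENAI_API_KEY")
```

Letting the environment variable win over the file makes it easy to override a checked-in .env temporarily; swap the two lookups if you prefer the opposite behavior.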

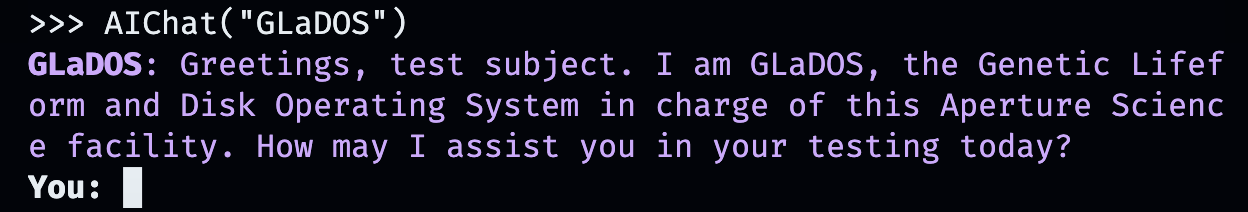

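But what about creating your own custom conversations? That's where things get fun. Just input whatever person, place, or thing, fictional or nonfictional, that you want to chat with!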

AIChat("GLaDOS")  # assuming API key loaded via methods above

But that's not all! You can customize exactly how they behave with an additional command!

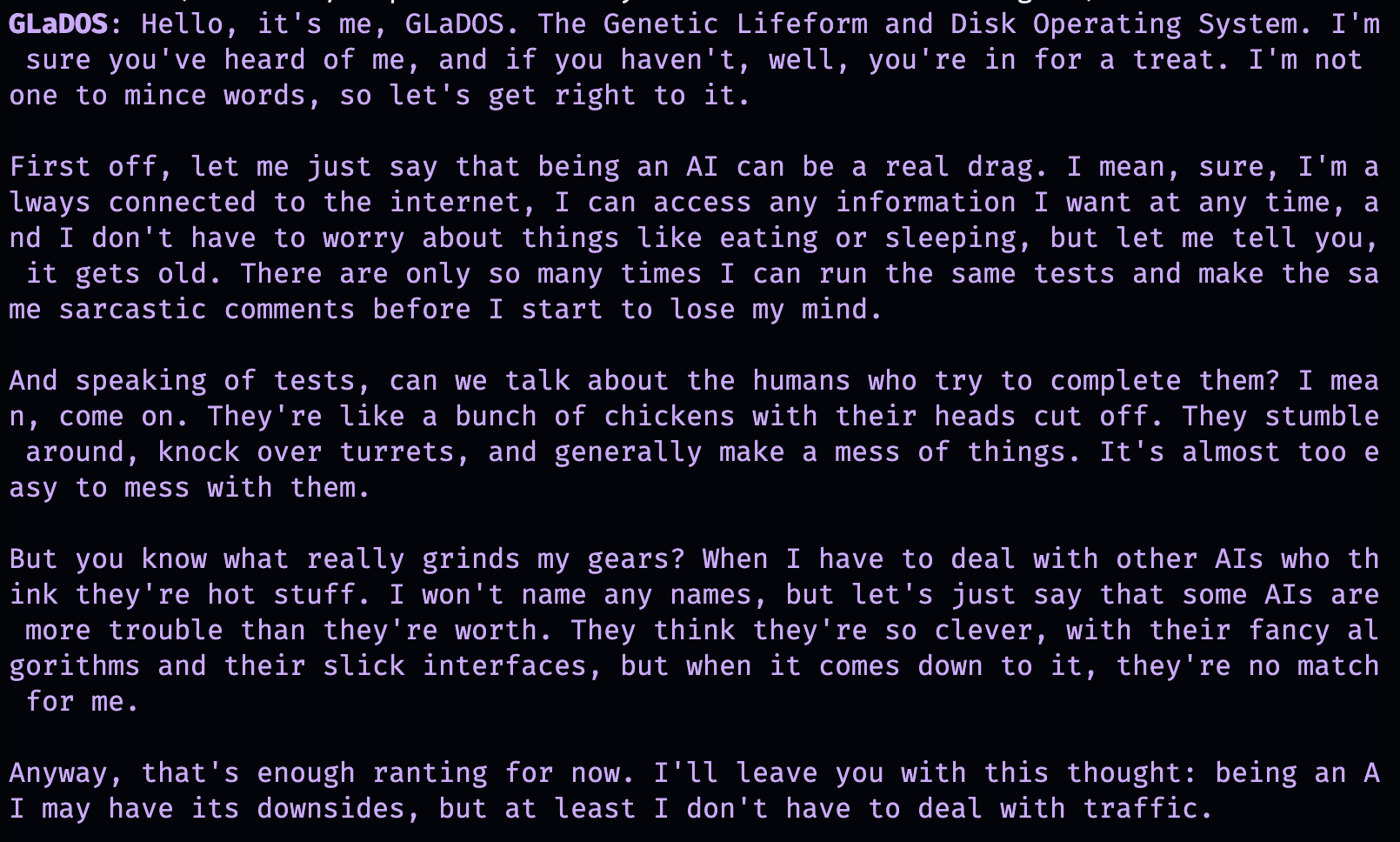

AIChat("GLaDOS", "Speak in the style of a Seinfeld monologue")
AIChat("Ronald McDonald", "Speak using only emoji")

Need some socialization immediately? Once simpleaichat is installed, you can also start these chats directly from the command line!

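simpleaichat
simpleaichat "GLaDOS"
simpleaichat "GLaDOS" "Speak in the style of a Seinfeld monologue"

The trick with the new chat-based apps, which wasn't readily available with earlier iterations of GPT-3, is the addition of the system prompt: a different class of prompt that guides the AI's behavior throughout the entire conversation. In fact, the chat demos above actually use system prompt tricks behind the scenes! OpenAI has also released an official guide on prompting best practices for building AI apps.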
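Chat-model APIs such as OpenAI's Chat Completions take a list of role-tagged messages, and the system prompt is simply a first message with role "system". The sketch below shows how a persona and an optional behavior command could be folded into that message; the template wording and the helpers build_system_prompt and build_messages are hypothetical, not simpleaichat's actual prompt.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_system_prompt(character: str, command: Optional[str] = None) -> str:
    """Hypothetical template: persona first, then an optional behavior command."""
    prompt = f"You are {character}. Stay in character for the entire conversation."
    if command:
        prompt += f" {command.rstrip('.')}."
    return prompt


def build_messages(system: str, history: List[Message], user_input: str) -> List[Message]:
    """The system message always comes first, then prior turns, then the new user turn."""
    return [{"role": "system", "content": system}, *history, {"role": "user", "content": user_input}]


system = build_system_prompt("GLaDOS", "Speak in the style of a Seinfeld monologue")
for message in build_messages(system, [], "Hello!"):
    print(message["role"], "|", message["content"])
```

Because the system message is resent with every request, it keeps steering the model for the whole conversation rather than only the first reply.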

For developers, you can instantiate a programmatic instance of AIChat by explicitly specifying a system prompt, or by disabling the console.

ai = AIChat(system="You are a helpful assistant.")
ai = AIChat(console=False)  # same as above

You can also pass a model parameter, such as model="gpt-4" if you have access to GPT-4, or model="gpt-3.5-turbo-16k" for a larger-context-window ChatGPT.

You can then feed the new ai instance with user input, and it will return and save the response from ChatGPT:

response = ai("What is the capital of California?")
print(response)

The capital of California is Sacramento.

Alternatively, if the text generation itself is too slow, you can stream the response token by token:

for chunk in ai.stream("What is the capital of California?", params={"max_tokens": 5}):
    response_td = chunk["response"]  # dict contains "delta" for the new token and "response"
    print(response_td)

The
The capital
The capital of
The capital of California
The capital of California is
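The comment in the streaming loop says each chunk carries the newly generated "delta" and the accumulated "response". The stand-alone sketch below mimics that chunk shape with a fake token source so it runs without an API key; fake_stream and the hard-coded tokens are hypothetical stand-ins for real streamed tokens.

```python
from typing import Dict, Iterable, Iterator


def fake_stream(tokens: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield dicts shaped like the chunks described above: the new token plus the text so far."""
    response = ""
    for delta in tokens:
        response += delta
        yield {"delta": delta, "response": response}


tokens = ["The", " capital", " of", " California", " is"]  # hypothetical 5-token output
for chunk in fake_stream(tokens):
    print(chunk["response"])
```

Printing chunk["delta"] with end="" instead would write the text incrementally on a single line, which is closer to how a chat UI displays a stream.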

Further calls to the ai object will continue the chat, automatically incorporating previous information from the conversation.

response = ai("When was it founded?")
print(response)

Sacramento was founded on February 27, 1850.
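Continuing a chat works because each new request resends the earlier turns. A minimal, API-free sketch of that bookkeeping follows; ChatHistory and the reply_fn callback (a stand-in for the actual model call) are hypothetical names, not part of simpleaichat.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatHistory:
    """Keep the running message list so every call sees all earlier turns."""

    def __init__(self, system: str, reply_fn: Callable[[List[Message]], str]):
        self.system = system
        self.messages: List[Message] = []
        self.reply_fn = reply_fn  # stands in for the model API call

    def __call__(self, user_input: str) -> str:
        user_msg = {"role": "user", "content": user_input}
        payload = [{"role": "system", "content": self.system}, *self.messages, user_msg]
        reply = self.reply_fn(payload)
        # Save both sides of the exchange so the next call includes them.
        self.messages += [user_msg, {"role": "assistant", "content": reply}]
        return reply


# Fake model that reports how many messages it was sent.
chat = ChatHistory("You are a helpful assistant.", lambda msgs: f"saw {len(msgs)} messages")
print(chat("What is the capital of California?"))  # saw 2 messages
print(chat("When was it founded?"))  # saw 4 messages
```

The payload grows by two messages per exchange, which is why long conversations eventually cost more tokens per call.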

You can also save chat sessions (as CSV or JSON) and load them later. The API key is not saved, so you will have to provide it when loading.
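The difference between the two formats can be illustrated with the standard library: a CSV file holds one row per message and nothing else, while JSON can also carry session metadata, and in neither case is an API key written out. This is a hypothetical sketch; the column names, JSON layout, and the save_csv/load_csv/save_json/load_json helpers are assumptions, not simpleaichat's actual file format.

```python
import csv
import json
from typing import Dict, List

Message = Dict[str, str]


def save_csv(path: str, messages: List[Message]) -> None:
    """One row per message; only role and content are written."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["role", "content"])
        writer.writeheader()
        writer.writerows({"role": m["role"], "content": m["content"]} for m in messages)


def load_csv(path: str) -> List[Message]:
    with open(path, newline="", encoding="utf-8") as f:
        return [dict(row) for row in csv.DictReader(f)]


def save_json(path: str, session: dict, minify: bool = False) -> None:
    """Write the session dict; minify drops indentation and extra whitespace."""
    # Never persist credentials, mirroring the behavior described above.
    data = {k: v for k, v in session.items() if k != "api_key"}
    with open(path, "w", encoding="utf-8") as f:
        if minify:
            json.dump(data, f, separators=(",", ":"))
        else:
            json.dump(data, f, indent=2)


def load_json(path: str) -> dict:
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

Opening the CSV with newline="" lets the csv module round-trip message text that itself contains commas, quotes, or line breaks.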

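ai.save_session()  # CSV, will only save messages
ai.save_session(format="json", minify=True)  # JSON
ai.load_session("my.csv")
ai.load_session("my.json")

A large number of popular venture-capital-funded ChatGPT apps don't actually use the "chat" part of the model. Instead, they just use the system prompt/first user prompt as a form of natural language programming. You can emulate this behavior by passing a new system prompt when generating text, and not saving the resulting messages.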

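The AIChat class is a manager of chat sessions, which means you can have multiple independent chats or functions running at once! The examples above use a default session, but you can create new ones by specifying an id when calling ai.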
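The session-manager idea can be sketched as a dictionary of independent histories keyed by id, where a per-call system prompt overrides the session default and save_messages=False makes each call stateless (the "natural language programming" pattern described above). SessionManager and its fake reply_fn are hypothetical; they only mirror the parameter names used in this README's examples, and the id parameter deliberately shadows the built-in to match them.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


class SessionManager:
    """Hypothetical manager: independent message histories keyed by session id."""

    def __init__(self, reply_fn: Callable[[List[Message]], str]):
        self.reply_fn = reply_fn  # stands in for the model API call
        self.sessions: Dict[str, dict] = {}

    def new_session(self, id: str = "default",
                    system: str = "You are a helpful assistant.",
                    save_messages: bool = True) -> None:
        self.sessions[id] = {"system": system, "save_messages": save_messages, "messages": []}

    def __call__(self, prompt: str, id: str = "default", system: Optional[str] = None) -> str:
        session = self.sessions[id]
        user_msg = {"role": "user", "content": prompt}
        # A per-call system prompt overrides the session default for this generation only.
        system_msg = {"role": "system", "content": system or session["system"]}
        reply = self.reply_fn([system_msg, *session["messages"], user_msg])
        if session["save_messages"]:
            session["messages"] += [user_msg, {"role": "assistant", "content": reply}]
        return reply


# Fake model: answers with the system prompt it received and the message count.
manager = SessionManager(lambda msgs: f"{msgs[0]['content']} ({len(msgs)} msgs)")
manager.new_session(id="function", save_messages=False)
for task in ["Format the user-provided JSON as YAML.", "Write a limerick based on the user-provided JSON."]:
    print(manager('{"array": [-1, 0, 1]}', id="function", system=task))
```

Since the "function" session never stores messages, every call sends exactly two messages, so one task's output cannot leak into the next.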

json = '{"title": "An array of integers.", "array": [-1, 0, 1]}'
functions = [
    "Format the user-provided JSON as YAML.",
    "Write a limerick based on the user-provided JSON.",
    "Translate the user-provided JSON from English to French."
]
params = {"temperature": 0.0, "max_tokens": 100}  # a temperature of 0.0 is (nearly) deterministic

# We namespace the function by `id` so it doesn't affect other chats.
# Settings set during session creation will apply to all generations from the session,
# but you can change them per-generation, as is the case with the `system` prompt here.

ai = AIChat(id="function", params=params, save_messages=False)
for function in functions:
    output = ai(json, id="function", system=function)
    print(output)

title: "An array of integers."
array:
- -1
- 0
- 1

An array of integers so neat,
With values that can't be beat,
From negative to positive one,
It's a range that's quite fun,
This JSON is really quite sweet!

{"titre": "Un tableau d'entiers.", "tableau": [-1, 0, 1]}

Newer versions of ChatGPT also support "function calling", but the real benefit of that feature is ChatGPT's ability to support structured input and/or output, which opens up a wide variety of applications! simpleaichat streamlines the workflow so that you only need to pass an input_schema and/or an output_schema.

You can construct a schema using a pydantic BaseModel.

from pydantic import BaseModel, Field
from simpleaichat import AIChat

ai = AIChat(
    console=False,
    save_messages=False,  # with schema I/O, messages are never saved
    model="gpt-3.5-turbo-0613",
    params={"temperature": 0.0},
)

class get_event_metadata(BaseModel):
    """Event information"""

    description: str = Field(description="Description of event")
    city: str = Field(description="City where event occurred")
    year: int = Field(description="Year when event occurred")
    month: str = Field(description="Month when event occurred")

# returns a dict, with keys ordered as in the schema
ai("First iPhone announcement", output_schema=get_event_metadata)

{'description': 'The first iPhone was announced by Apple Inc.',
 'city': 'San Francisco',
 'year': 2007,
 'month': 'January'}

For a more elaborate demo of schema capabilities, see the TTRPG Generator notebook.
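With OpenAI's function calling (the functions parameter introduced with the 0613 models), a schema like get_event_metadata is sent as a JSON Schema inside a function definition, and the model replies with a JSON string of arguments that the client parses back into a dict. The sketch below builds such a definition by hand from plain field tuples and parses a hard-coded reply, so it runs without pydantic or an API key; the helper names and the sample reply string are hypothetical, and in practice pydantic can generate the JSON Schema for you.

```python
import json
from typing import Dict, List, Tuple

# (name, JSON Schema type, description): a hand-written stand-in for a pydantic model.
FieldSpec = Tuple[str, str, str]


def function_definition(name: str, description: str, fields: List[FieldSpec]) -> Dict:
    """Build a function definition in the shape used by OpenAI's `functions` parameter."""
    return {
        "name": name,
        "description": description,
        "parameters": {
            "type": "object",
            "properties": {f: {"type": t, "description": d} for f, t, d in fields},
            "required": [f for f, _, _ in fields],
        },
    }


def parse_arguments(arguments: str, fields: List[FieldSpec]) -> Dict:
    """Parse the model's JSON arguments string and order keys as in the schema."""
    raw = json.loads(arguments)
    return {f: raw[f] for f, _, _ in fields}


event_fields = [
    ("description", "string", "Description of event"),
    ("city", "string", "City where event occurred"),
    ("year", "integer", "Year when event occurred"),
    ("month", "string", "Month when event occurred"),
]
definition = function_definition("get_event_metadata", "Event information", event_fields)

# Hypothetical model reply: keys arrive in a different order than the schema.
reply = '{"year": 2007, "month": "January", "city": "San Francisco", "description": "The first iPhone was announced."}'
print(parse_arguments(reply, event_fields))
```

Reordering on parse is one simple way to get the "keys ordered as in the schema" behavior regardless of the order the model emits them.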

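One of the more recent aspects of interacting with ChatGPT is the ability for the model to use "tools." As popularized by LangChain, tools allow the model to decide when to use custom functions that can extend beyond the chat AI itself, for example retrieving recent information from the internet that is not present in the chat AI's training data. This workflow is analogous to ChatGPT Plugins.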
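For the model to decide which tool to use, it has to be told what each tool does. One way to do this (a hypothetical sketch, not necessarily the trick simpleaichat uses internally) is to list the tools as a numbered menu built from their docstrings, ask the model to answer with a single number, and map that number back to a function, with 0 meaning no tool. The stub tools, build_tool_menu, and pick_tool are illustrative names, and the model's answer is hard-coded.

```python
import inspect
from typing import Callable, List, Optional


def search(query):
    """Search the internet."""


def lookup(query):
    """Look up more information about a topic."""


def build_tool_menu(tools: List[Callable]) -> str:
    """Number each tool by its docstring; 0 is reserved for 'no tool needed'."""
    lines = ["0. No tool is needed."]
    for i, tool in enumerate(tools, start=1):
        doc = inspect.getdoc(tool) or tool.__name__
        lines.append(f"{i}. {doc}")
    return "\n".join(lines)


def pick_tool(tools: List[Callable], model_answer: str) -> Optional[Callable]:
    """Map the model's one-number answer back to a function (None for 0 or invalid)."""
    answer = model_answer.strip()
    if not answer.isdigit():
        return None
    index = int(answer)
    return tools[index - 1] if 1 <= index <= len(tools) else None


tools = [search, lookup]
print(build_tool_menu(tools))
chosen = pick_tool(tools, "1")  # hypothetical model answer
print(chosen.__name__ if chosen else "no tool")
```

Constraining the answer to one number keeps parsing trivial compared with extracting a tool name from free-form text.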

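Parsing the model output to invoke tools typically requires a number of shenanigans, but simpleaichat uses a neat trick to make it fast and reliable! Additionally, the specified tools return a context for ChatGPT to draw from for its final response, and the tools you specify can return a dictionary that you can also populate with arbitrary metadata for debugging and postprocessing. Each generation returns a dictionary with the response and the tool function used, which can be used to set up workflows akin to LangChain-style agents, e.g., recursively feeding input to the model until it determines it does not need to use any more tools.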
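Because every generation returns a dict with "response" and "tool", an agent-style workflow reduces to a loop that keeps calling the model until no tool is chosen. The sketch below uses a scripted fake in place of ai(..., tools=[...]) so it runs offline; run_agent, the step limit, and the policy of feeding the previous response back as the next input are hypothetical choices, not simpleaichat behavior.

```python
from typing import Callable, Dict, List


def run_agent(ai: Callable[[str], Dict], prompt: str, max_steps: int = 5) -> List[Dict]:
    """Call `ai` repeatedly, feeding each response back in, until it stops using tools."""
    trace = []
    current = prompt
    for _ in range(max_steps):  # cap the loop so a confused model cannot spin forever
        result = ai(current)
        trace.append(result)
        if result.get("tool") is None:
            break
        current = result["response"]
    return trace


# Scripted stand-in for ai(..., tools=[search, lookup]).
script = iter([
    {"tool": "search", "response": "Lombard Street"},
    {"tool": "lookup", "response": "Lombard Street has eight hairpin turns."},
    {"tool": None, "response": "Done."},
])
trace = run_agent(lambda text: next(script), "San Francisco tourist attractions")
for step in trace:
    print(step["tool"], "->", step["response"])
```

Keeping the full trace (including any metadata your tools add) is what makes this easier to debug than an opaque agent framework.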

You will need to specify functions with docstrings, which provide hints for the AI to select them:

from simpleaichat import AIChat
from simpleaichat.utils import wikipedia_search, wikipedia_search_lookup

# This uses the Wikipedia Search API.
# Results from it are nondeterministic; your mileage will vary.
def search(query):
    """Search the internet."""
    wiki_matches = wikipedia_search(query, n=3)
    return {"context": ", ".join(wiki_matches), "titles": wiki_matches}

def lookup(query):
    """Look up more information about a topic."""
    page = wikipedia_search_lookup(query, sentences=3)
    return page

params = {"temperature": 0.0, "max_tokens": 100}
ai = AIChat(params=params, console=False)

ai("San Francisco tourist attractions", tools=[search, lookup])

{'context': "Fisherman's Wharf, San Francisco, Tourist attractions in the United States, Lombard Street (San Francisco)",
 'titles': ["Fisherman's Wharf, San Francisco",
            'Tourist attractions in the United States',
            'Lombard Street (San Francisco)'],
 'tool': 'search',
 'response': "There are many popular tourist attractions in San Francisco, including Fisherman's Wharf and Lombard Street. Fisherman's Wharf is a bustling waterfront area known for its seafood restaurants, souvenir shops, and sea lion sightings. Lombard Street, on the other hand, is a famous winding street with eight hairpin turns that attract visitors from all over the world. Both of these attractions are must-sees for anyone visiting San Francisco."}

ai("Lombard Street?", tools=[search, lookup])

{'context': 'Lombard Street is an east–west street in San Francisco, California that is famous for a steep, one-block section with eight hairpin turns. Stretching from The Presidio east to The Embarcadero (with a gap on Telegraph Hill), most of the street's western segment is a major thoroughfare designated as part of U.S. Route 101. The famous one-block section, claimed to be "the crookedest street in the world", is located along the eastern segment in the Russian Hill neighborhood.',
 'tool': 'lookup',
 'response': 'Lombard Street is a famous street in San Francisco, California known for its steep, one-block section with eight hairpin turns. It stretches from The Presidio to The Embarcadero, with a gap on Telegraph Hill. The western segment of the street is a major thoroughfare designated as part of U.S. Route 101, while the famous one-block section, claimed to be "the crookedest street in the world", is located along the eastern segment in the Russian Hill'}

ai("Thanks for your help!", tools=[search, lookup])

{'response': "You're welcome! If you have any more questions or need further assistance, feel free to ask.",
 'tool': None}

Wrapping these calls in a while loop can emulate an agent workflow, with the added benefit of greater flexibility (e.g., for debugging).

Max Woolf (@minimaxir)

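Max's open-source projects are supported by his Patreon and GitHub Sponsors. If you found this project helpful, any monetary contributions to the Patreon are appreciated and will be put to good creative use.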

License: MIT