Pruna AI, a European startup that develops compression algorithms for AI models, recently announced that it is open-sourcing its optimization framework to help developers compress AI models more efficiently.

Pruna AI's framework combines a variety of efficiency methods, including caching, pruning, quantization and distillation, to improve the performance of AI models. It also standardizes how compressed models are saved and loaded. For each compressed model, it checks whether quality has dropped significantly and measures the performance gains that compression delivers.
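To make the "compress, then evaluate" loop concrete, here is a minimal sketch of one of the listed methods, quantization, applied to a single list of weights. It is a hypothetical illustration in plain Python, not Pruna AI's code or API. It assumes symmetric per-tensor 8-bit quantization, and the function names `quantize_int8`, `dequantize` and `evaluate` are made up for this example.

```python
# Hypothetical sketch, not Pruna AI's implementation: symmetric per-tensor
# 8-bit quantization of a weight list, followed by two checks: how much
# quality is lost and how much smaller the weights become.

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]

def evaluate(weights, q, scale, bits_before=32, bits_after=8):
    """Return (worst-case reconstruction error, storage reduction factor)."""
    restored = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    # Ignores the small overhead of storing `scale` itself.
    return max_err, bits_before / bits_after

weights = [0.3, -1.0, 0.7]
q, scale = quantize_int8(weights)
max_err, reduction = evaluate(weights, q, scale)
print(q, reduction)  # [38, -127, 89] 4.0
```

Real frameworks typically quantize per channel or per group and judge quality on task metrics rather than raw weight error; this sketch only shows the shape of the size-versus-quality trade-off.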

"Our framework is similar to how Hugging Face standardized transformers and diffusers: we provide a unified way to call and use various efficiency methods," said John Rachwan, co-founder and chief technology officer of Pruna AI. Large companies such as OpenAI already apply multiple compression methods to their models, for example using distillation to create faster versions of their flagship models.

Distillation is a technique for transferring knowledge through a "teacher-student" setup: developers send requests to a large teacher model and record its outputs, then use those outputs to train a smaller student model to approximate the teacher's behavior.

Rachwan notes that while large companies tend to build such compression tools in-house, the open-source community usually offers only solutions based on a single method. Pruna AI provides one tool that integrates multiple methods, which greatly simplifies the process.
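The teacher-student loop behind distillation can be sketched in a few lines. This is a toy illustration under loud assumptions: the "teacher" is a fixed function, `math.tanh(2x)`, standing in for a large model; the "student" is a two-parameter linear model trained by gradient descent on mean squared error; and the names `teacher`, `collect_outputs` and `train_student` are hypothetical, not taken from Pruna AI or OpenAI.

```python
import math

def teacher(x):
    # Hypothetical stand-in for a large model: a fixed nonlinear function.
    return math.tanh(2.0 * x)

def collect_outputs(queries):
    # Step 1: send requests to the teacher and record its outputs.
    return [(x, teacher(x)) for x in queries]

def train_student(pairs, lr=0.1, epochs=2000):
    # Step 2: fit a much smaller model, y = a*x + b, to the recorded outputs
    # by minimizing mean squared error with plain gradient descent.
    a, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(epochs):
        grad_a = sum(2 * (a * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (a * x + b - y) for x, y in pairs) / n
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

queries = [i / 10 for i in range(-5, 6)]  # inputs in [-0.5, 0.5]
pairs = collect_outputs(queries)
a, b = train_student(pairs)
mse = sum((a * x + b - y) ** 2 for x, y in pairs) / len(pairs)
print(f"student: y = {a:.3f}*x + {b:.3f}, MSE vs teacher = {mse:.5f}")
```

In practice the student is a smaller neural network and is often trained on the teacher's full output distribution rather than single values, but the data flow is the same: query the teacher, record its outputs, fit the student to them.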

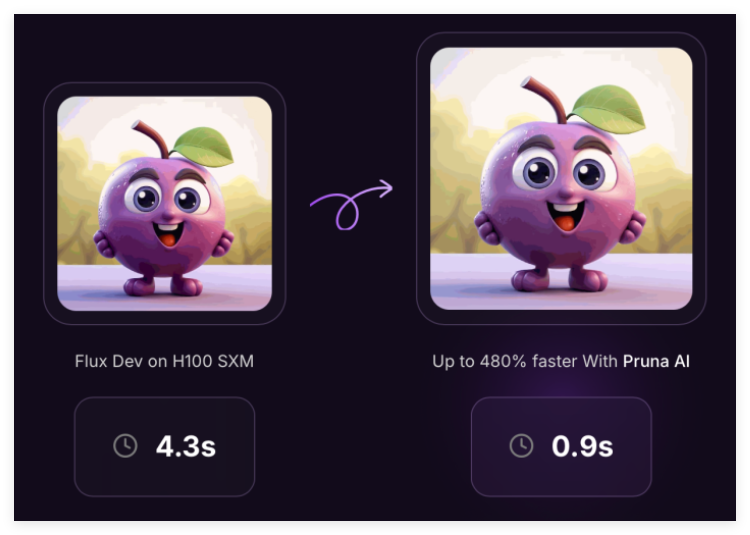

Pruna AI's framework supports a variety of models, including large language models, diffusion models, speech recognition models and computer vision models, but the company is currently focusing on optimizing image and video generation models. Companies such as Scenario and PhotoRoom already use Pruna AI's services.

In addition to the open-source version, Pruna AI offers an enterprise version with advanced optimization features and an optimization agent. "The most exciting feature we are about to release is the compression agent," Rachwan said. "Users only need to provide the model and set their speed and accuracy requirements, and the agent will automatically find the best combination of compression methods."
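One simple way to picture what such an agent does is a search over method combinations under the user's speed and accuracy limits. The sketch below is hypothetical and is not Pruna AI's agent: the per-method speedup and accuracy-drop figures are placeholders, not measurements, and it assumes speedups multiply and accuracy drops add, whereas a real agent would need to benchmark each candidate because methods interact.

```python
from itertools import combinations

# Placeholder effects per method (NOT measured values):
# (speedup multiplier, accuracy drop in percentage points).
METHODS = {
    "caching": (1.5, 0.2),
    "pruning": (1.3, 1.0),
    "quantization": (2.0, 0.5),
    "distillation": (3.0, 2.5),
}

def estimate(combo):
    # Toy assumption: speedups multiply and accuracy drops add.
    speedup, drop = 1.0, 0.0
    for method in combo:
        s, d = METHODS[method]
        speedup *= s
        drop += d
    return speedup, drop

def search(min_speedup, max_accuracy_drop):
    """Exhaustively try every non-empty combination and return the fastest
    one that meets both requirements, or None if none does."""
    best = None
    names = sorted(METHODS)
    for r in range(1, len(names) + 1):
        for combo in combinations(names, r):
            speedup, drop = estimate(combo)
            if speedup >= min_speedup and drop <= max_accuracy_drop:
                if best is None or speedup > best[1]:
                    best = (combo, speedup, drop)
    return best

print(search(min_speedup=2.0, max_accuracy_drop=1.0))  # picks caching + quantization
```

Exhaustive search is only feasible for a handful of methods; with many methods and hyperparameters, a real system would need a smarter search strategy.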

Pruna AI charges an hourly fee, similar to renting GPUs from a cloud provider. By using optimized models, businesses can save a significant amount on inference costs. For example, Pruna AI reduced a Llama model to one-eighth of its original size with little loss of accuracy. The company hopes customers will view its compression framework as an investment that pays for itself.
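For a sense of scale, an eightfold reduction is what you would get by storing 32-bit floating-point weights at 4 bits each. The article does not say which Llama model or which methods Pruna AI used, so the 8-billion-parameter count and the helper `weight_memory_gb` below are assumptions for illustration only.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (10**9 bytes), ignoring
    overhead such as quantization scales and non-weight buffers."""
    return num_params * bits_per_param / 8 / 1e9

params = 8e9  # assumed model size; not stated in the article
fp32 = weight_memory_gb(params, 32)  # 32.0 GB
int4 = weight_memory_gb(params, 4)   # 4.0 GB
print(fp32 / int4)                   # 8.0
```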

Pruna AI recently closed a $6.5 million seed round, with investors including EQT Ventures, Daphni, Motier Ventures and Kima Ventures.

Project: https://github.com/PrunaAI/pruna

Key points:

Pruna AI has open-sourced an optimization framework that combines multiple compression methods to improve the performance of AI models.

Large companies often build their own distillation and other compression tooling; Pruna AI offers a single tool that integrates multiple methods and simplifies the workflow.

The enterprise version adds advanced features that help users compress models and improve performance while maintaining accuracy.