The "mind reading" of science fiction films appears to be edging toward reality. Researchers at Yale University, Dartmouth College, and the University of Cambridge have jointly developed MindLLM, an AI model that decodes brain signals recorded by functional magnetic resonance imaging (fMRI) directly into human-readable text. The result has prompted remarks that the future has quietly arrived.

Translating complex brain activity into words has long been one of the hardest problems in neuroscience, a challenge as daunting as climbing Mount Everest. Earlier techniques either predicted poorly or handled only simple tasks, and they generalized hardly at all across subjects. MindLLM sets out to change that.

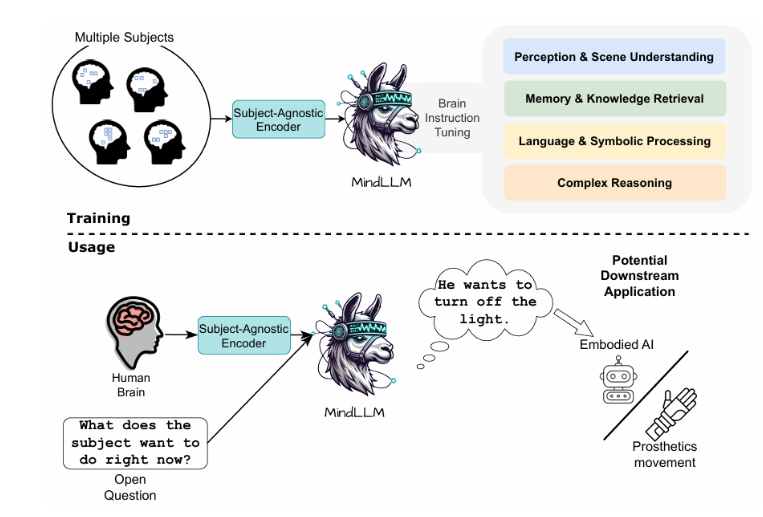

MindLLM works like a "super translator": it reads brain activity and converts it into words without being tied to a particular subject or a particular task. The advance rests on Brain Instruction Tuning (BIT), a technique developed by the researchers that helps MindLLM capture the semantic information in fMRI signals more accurately and so substantially improves its decoding ability.

In testing, MindLLM performed strongly. Across a range of fMRI-to-text tasks it outperformed the previous models it was compared against, with a 12.0% improvement on downstream tasks, a 16.4% improvement in generalization to unseen subjects, and a 25.0% improvement in adaptation to novel tasks. This all-round performance opens up new possibilities for brain-computer interface technology.

MindLLM has broad application prospects. For patients with conditions that impair speech, such as aphasia and amyotrophic lateral sclerosis (ALS), it could help restore the ability to express themselves and reconnect with the world. For healthy people, it points toward controlling digital devices by thought: whether the target is an AI system or a prosthetic limb, interaction could become more natural and more humane.

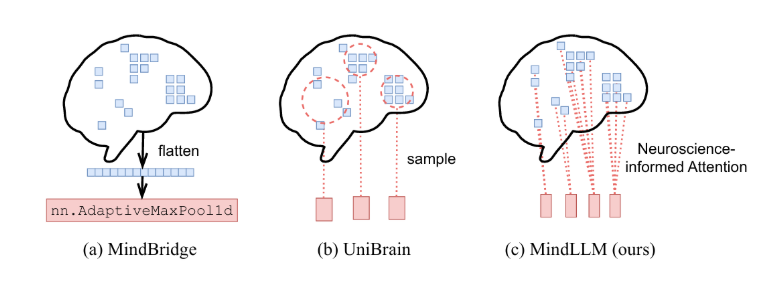

MindLLM's success comes from a two-part design. The first part is a subject-agnostic fMRI encoder that extracts the key features of brain activity from complex fMRI signals and works across different subjects. The second part is a large language model (LLM) that turns the extracted fMRI features into fluent human language.
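To make the "subject-agnostic" idea concrete, here is a minimal sketch of one way an encoder can accept a different number of voxels from each subject and still hand a fixed-size feature block to a language model. It uses cross-attention pooling with a fixed set of query vectors. The class name `SubjectAgnosticEncoder`, the dimensions, the random (untrained) weights, and the choice to build keys from voxel coordinates and values from voxel signal are all illustrative assumptions, not the paper's implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class SubjectAgnosticEncoder:
    """Hypothetical sketch: a fixed set of queries attends over a variable
    number of voxels, so the output size never depends on the subject."""

    def __init__(self, n_queries=8, dim=16, seed=0):
        rng = np.random.default_rng(seed)
        self.dim = dim
        # In a real model these would be learned; here they are random.
        self.queries = rng.normal(size=(n_queries, dim))
        self.w_key = rng.normal(size=(3, dim)) / np.sqrt(3)  # coords -> keys
        self.w_val = rng.normal(size=(1, dim))               # signal -> values

    def encode(self, coords, signal):
        """coords: (n_voxels, 3) positions; signal: (n_voxels,) activity."""
        keys = coords @ self.w_key                  # (n_voxels, dim)
        values = signal[:, None] @ self.w_val       # (n_voxels, dim)
        scores = self.queries @ keys.T / np.sqrt(self.dim)
        weights = softmax(scores, axis=-1)          # (n_queries, n_voxels)
        return weights @ values                     # (n_queries, dim)


# Two made-up subjects with different voxel counts.
rng = np.random.default_rng(1)
encoder = SubjectAgnosticEncoder()
subject_a = encoder.encode(rng.normal(size=(1200, 3)), rng.normal(size=1200))
subject_b = encoder.encode(rng.normal(size=(900, 3)), rng.normal(size=900))
print(subject_a.shape, subject_b.shape)
```

Because the number of queries is fixed, both subjects produce feature blocks of the same shape even though their scans contain different numbers of voxels. In a full system those features would be projected into the LLM's embedding space, a step omitted here.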

To further improve decoding accuracy and versatility, the researchers developed Brain Instruction Tuning (BIT). Using images as an intermediary between brain scans and text, MindLLM learns a variety of tasks spanning perception, memory, language, and reasoning, which broadens its grasp of the semantic information carried by brain activity.
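The role of images as an intermediary can be sketched as a data-preparation step: if a scan was recorded while the subject viewed an image, then text annotations of that image (captions, question-answer pairs) can serve as instruction-tuning targets for the scan. The snippet below is a hypothetical illustration only; the `BitSample` record, the `build_bit_samples` helper, the task labels, and the example annotations are assumptions, not the paper's dataset format.

```python
from dataclasses import dataclass


@dataclass
class BitSample:
    """One hypothetical instruction-tuning example tied to an fMRI scan."""
    fmri_id: str       # identifier of the scan recorded while viewing the image
    task: str          # e.g. "perception", "memory", "language", "reasoning"
    instruction: str   # prompt given to the model alongside the fMRI features
    target: str        # text the model should produce


def build_bit_samples(fmri_id, image_annotations):
    """Pair one scan with every question-answer annotation of the viewed image.

    image_annotations maps a task name to a list of (question, answer) pairs.
    """
    samples = []
    for task, qa_pairs in image_annotations.items():
        for question, answer in qa_pairs:
            samples.append(BitSample(fmri_id, task, question, answer))
    return samples


# Made-up annotations for a single viewed image.
annotations = {
    "perception": [
        ("Describe what the subject is seeing.", "A dog running on a beach."),
    ],
    "reasoning": [
        ("Is the scene indoors or outdoors?", "Outdoors."),
    ],
}

samples = build_bit_samples("scan_0001", annotations)
for sample in samples:
    print(sample.task, "|", sample.instruction, "->", sample.target)
```

The design point is that a single scan yields many training examples across task types, which is one plausible reason instruction tuning would help a model adapt to new tasks.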

In rigorous testing, MindLLM not only surpassed the baseline models on every metric but also adapted effectively to a range of new tasks, showing considerable flexibility. The researchers also analyzed MindLLM's attention mechanism in depth and found that its decision-making process is interpretable, which offers useful insight into how the brain works.

MindLLM marks a milestone in fMRI-to-text decoding. It improves both the accuracy and the generality of decoding, and it widens the horizon for future brain-computer interface technology. Perhaps in the near future, "reading thoughts" will no longer be confined to science fiction films but will enter everyday life and open a new era of human-computer interaction.

Paper link: https://arxiv.org/abs/2502.15786