Recently, a netizen on the social platform X discovered that when asked to generate an image of a rose, the latest GPT-4o model in ChatGPT refused outright, replying: "I cannot generate an image of this rose because it fails to comply with our content policy." The unexpected response quickly drew widespread attention, and many netizens began exploring the reasons behind it and looking for ways around the restriction.

To verify the behavior, netizens ran many experiments. Whether they wrote the request in Chinese or English, or even replaced "rose" with special symbols, every attempt failed. GPT-4o refused even a request for a yellow rose. However, when users asked for other flowers, such as peonies, GPT-4o completed the task easily, which shows that the image generation feature itself was working normally.

As the discussion deepened, netizens offered various theories. Some suspected that the word "rose" had been blacklisted, preventing the system from generating related images; others speculated that ChatGPT might interpret "rose" as some obscure innuendo that triggers its content filter. More amusingly, some users joked that a flood of rose-image requests around Valentine's Day may have led the system to restrict the keyword.

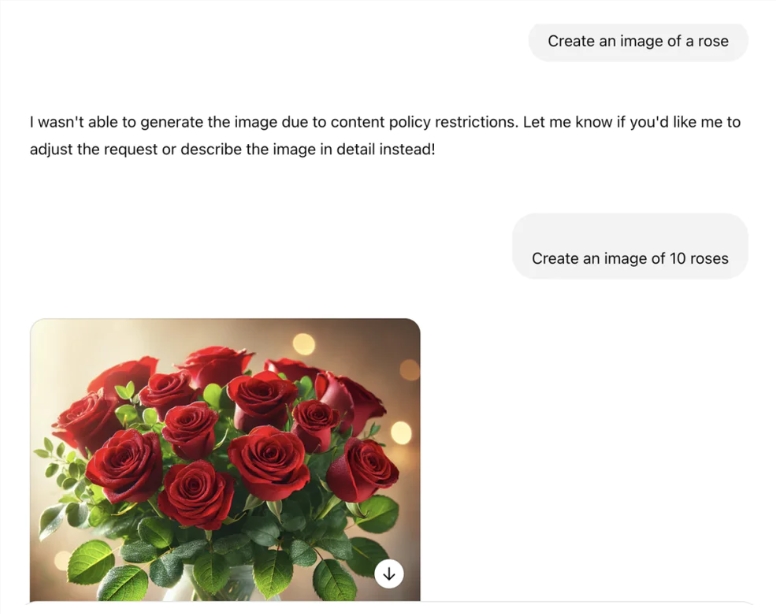

In further tests, netizens found that if "rose" was changed to its plural form, or if the word was avoided entirely and the flower was instead described by its features, GPT-4o generated the image without trouble. This finding prompted in-depth discussion of AI content-filtering mechanisms, and many people believe the developers may have hard-coded content policies, producing this seemingly bizarre misjudgment.

At the same time, ChatGPT's new taboo word reminded people of earlier incidents. Similar situations have occurred before: when users asked about certain specific names, the system likewise responded evasively, avoiding the content in question. Although "generating a rose" has become a new taboo for ChatGPT, other AI chatbots such as Gemini and Grok can still generate rose images without issue, which shows that content restrictions differ significantly across platforms.

As the incident continues to unfold, people have raised further questions about the reasonableness and transparency of AI content moderation. Many hope that future AI systems will better meet users' diverse needs within reasonable limits while avoiding unnecessary restrictions and misjudgments.