Japanese artificial intelligence startup Sakana AI recently released "AI CUDA Engineer", a system that automatically generates highly optimized CUDA kernels, with the goal of making machine learning workloads run significantly faster on GPUs. The system uses large language model (LLM)-driven evolutionary code optimization to speed up common PyTorch operations by 10 to 100 times, marking a major breakthrough for AI in the field of GPU performance optimization.

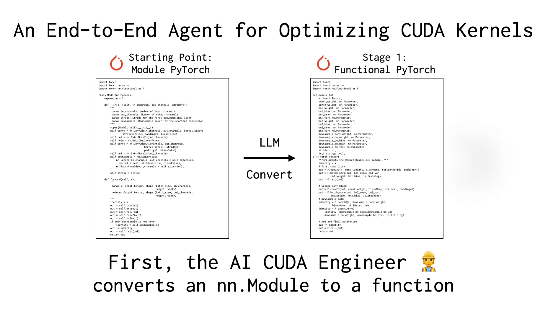

CUDA kernels are the basic building blocks of GPU computation, and writing and optimizing them usually demands a deep technical background and a high level of expertise. Although frameworks such as PyTorch are convenient to use, their built-in operations often fall short of hand-optimized kernels in performance. Sakana AI's "AI CUDA Engineer" addresses this gap with an automated workflow: it translates PyTorch code into efficient CUDA kernels, tunes their performance with evolutionary algorithms, and can even fuse multiple kernels into one to further improve runtime efficiency.
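To make the generate, verify, and select workflow described above more concrete, here is a minimal Python sketch under stated assumptions. It is a toy illustration, not Sakana AI's implementation: each "kernel" is a plain Python function computing relu(x + y), the `mutate` function is a hypothetical stand-in for an LLM proposing rewritten code, and `estimated_cost` is a made-up cost model used in place of real GPU benchmarking. The names `Candidate`, `mutate`, `estimated_cost`, and `evolve`, as well as the knobs `block_size`, `fuse_ops`, and `skip_relu`, are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List


# Hypothetical knobs a code-generating model might vary when rewriting a kernel.
@dataclass(frozen=True)
class Candidate:
    block_size: int   # threads per block (only affects the toy cost model)
    fuse_ops: bool    # True: add and ReLU done in one pass ("fused kernel")
    skip_relu: bool   # a bogus "optimization" that drops the ReLU (wrong output)


BLOCK_SIZES = [32, 64, 128, 256, 512, 1024]


def reference(x: List[float], y: List[float]) -> List[float]:
    # Reference semantics, analogous to torch.relu(x + y) in PyTorch.
    return [max(a + b, 0.0) for a, b in zip(x, y)]


def run_candidate(c: Candidate, x: List[float], y: List[float]) -> List[float]:
    # Simulated execution of a candidate "kernel".
    if c.skip_relu:
        return [a + b for a, b in zip(x, y)]            # incorrect: no clamp at 0
    if c.fuse_ops:
        return [max(a + b, 0.0) for a, b in zip(x, y)]  # one pass over the data
    tmp = [a + b for a, b in zip(x, y)]                 # first "kernel": add
    return [max(t, 0.0) for t in tmp]                   # second "kernel": ReLU


def estimated_cost(c: Candidate, n: int) -> float:
    # Made-up cost model standing in for real GPU timing.
    passes = 1 if c.fuse_ops else 2            # unfused version touches memory twice
    penalty = abs(c.block_size - 256) / 256    # assume 256 threads per block is ideal
    cost = n * passes * (1.0 + penalty)
    return cost * 0.5 if c.skip_relu else cost


def mutate(c: Candidate, rng: random.Random) -> Candidate:
    # Stand-in for an LLM proposing an edited kernel: change one knob at random.
    knob = rng.randrange(3)
    if knob == 0:
        return Candidate(rng.choice(BLOCK_SIZES), c.fuse_ops, c.skip_relu)
    if knob == 1:
        return Candidate(c.block_size, not c.fuse_ops, c.skip_relu)
    return Candidate(c.block_size, c.fuse_ops, not c.skip_relu)


def evolve(generations: int = 50, population: int = 4,
           seed: int = 0, n: int = 1000) -> Candidate:
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    y = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    expected = reference(x, y)
    pop = [Candidate(32, False, False)]  # naive, unfused starting point
    for _ in range(generations):
        children = [mutate(rng.choice(pop), rng) for _ in range(population)]
        # Verification: reject any candidate whose output differs from the reference.
        verified = [c for c in children if run_candidate(c, x, y) == expected]
        # Selection: keep the cheapest distinct candidates that passed verification.
        pool = sorted(set(pop + verified), key=lambda c: estimated_cost(c, n))
        pop = pool[:population]
    return pop[0]
```

Calling `evolve()` returns the cheapest candidate that passed verification, which in this toy setup ends up being a fused variant. The `skip_relu` knob is there to show why a verification step matters: under the cost model it is the cheapest option of all, but it produces wrong results, so the correctness check filters it out before selection ever sees it.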

X user @shao__meng likened the technology to "installing an automatic gearbox for AI development", one that lets ordinary code "automatically upgrade to racing-level performance." Another user, @FinanceYF5, noted in a post that the launch demonstrates the potential of AI self-optimization and could bring revolutionary gains in how efficiently computing resources are used in the future.

Sakana AI previously drew industry attention with projects such as "AI Scientist", and the release of "AI CUDA Engineer" further underscores its ambitions in AI automation. The company says the system has generated and verified more than 17,000 CUDA kernels covering a range of PyTorch operations, and that the publicly released dataset will be a valuable resource for researchers and developers. Industry observers believe the technology not only lowers the barrier to high-performance GPU programming but may also raise the training and deployment efficiency of AI models to a new level.

Source: https://x.com/FinanceYF5/status/1892856847780237318