Shanghai AI Laboratory recently released InternLM-XComposer-2.5 (IXC-2.5), an open-source multimodal large language model that has drawn wide attention in the AI community. Beyond its technical advances, the model shows strong practical potential, particularly in ultra-high-resolution image understanding, fine-grained video understanding, and multi-turn multi-image dialogue.

According to the release, IXC-2.5 fills a gap among multimodal LLMs developed in China, particularly in webpage generation and in composing articles that interleave text and images. The model has been specifically optimized for these tasks, which makes it a convenient tool for content creators: for both web design and illustrated content, IXC-2.5 aims to deliver efficient, accurate results and speed up the creative process.

The core features of the IXC-2.5 model include:

Long-context processing: The model natively supports 24K-token inputs and can be extended to 96K tokens, so it can handle very long text and image inputs, from complex documents to large collections of images.

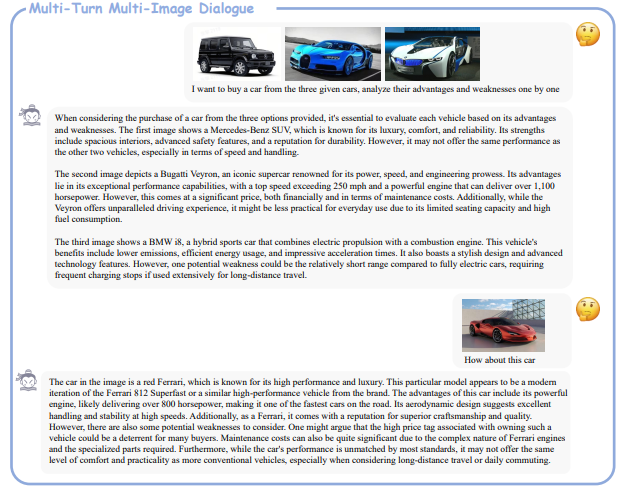

Diverse visual capabilities: Beyond ultra-high-resolution image understanding, IXC-2.5 supports fine-grained video understanding and multi-turn multi-image dialogue. Such a broad combination of multimodal capabilities, especially video understanding, has been uncommon in earlier models. For video, IXC-2.5 concatenates frames along their short side to form a single high-resolution image, while retaining each frame's index to preserve temporal relationships.
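
A minimal sketch of the frame-splicing idea, under stated assumptions: frames are plain 2D lists of grayscale pixel values, "along the short side" is read as stacking frames in the direction of their shorter dimension (vertically for landscape frames), and the frame index is kept as side metadata (frame number plus its offset in the composite). The function name `splice_frames` is hypothetical; this is an illustration, not IXC-2.5's actual code.

```python
from typing import List, Tuple

# Hypothetical representation: a frame is a list of rows of grayscale pixel values.
Frame = List[List[int]]


def splice_frames(frames: List[Frame]) -> Tuple[Frame, List[Tuple[int, int]]]:
    """Concatenate equally sized frames along their short side (illustrative sketch).

    Returns the composite image and, for every frame, a (frame_index, offset)
    pair recording where that frame starts along the concatenation axis.
    """
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    if any(len(f) != height or len(f[0]) != width for f in frames):
        raise ValueError("all frames must have the same size")

    index: List[Tuple[int, int]] = []
    composite: Frame
    if height <= width:
        # Landscape or square frames: stack vertically, so the short side grows.
        composite = []
        for i, frame in enumerate(frames):
            index.append((i, i * height))
            composite.extend(list(row) for row in frame)
    else:
        # Portrait frames: place side by side, so the short side (width) grows.
        composite = [[] for _ in range(height)]
        for i, frame in enumerate(frames):
            index.append((i, i * width))
            for r, row in enumerate(frame):
                composite[r].extend(row)
    return composite, index


# Usage: three 2x4 landscape frames whose pixels all equal the frame number.
frames = [[[k] * 4 for _ in range(2)] for k in range(3)]
image, frame_index = splice_frames(frames)
print(len(image), len(image[0]), frame_index)
```

For these three 2×4 landscape frames, the composite is 6×4 and the index is `[(0, 0), (1, 2), (2, 4)]`, so each frame's position in time can still be recovered from the single spliced image.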

Strong generation capability: IXC-2.5 can generate webpages and high-quality text-image articles, producing high-quality output for scenarios ranging from web design to articles that interleave text and images.

Advanced model architecture: IXC-2.5 combines a lightweight vision encoder, a large language model, and Partial LoRA for efficient alignment between the two. This combination noticeably improves performance, and the model remains efficient when handling complex multimodal data.
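
In the IXC model family, Partial LoRA adds low-rank weights that are applied only to image tokens, so text tokens continue to use the frozen language-model weights. The pure-Python sketch below illustrates that idea for a single linear layer; the helper names, the list-of-lists matrix representation, and the `scale` parameter (standing in for alpha/rank in standard LoRA) are illustrative assumptions rather than the project's implementation.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: matrix[i] is output row i


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def partial_lora_linear(
    tokens: List[Vector],
    is_image: List[bool],
    w0: Matrix,      # frozen base weight, shape (d_out, d_in)
    lora_a: Matrix,  # low-rank down-projection, shape (rank, d_in)
    lora_b: Matrix,  # low-rank up-projection, shape (d_out, rank)
    scale: float = 1.0,  # assumption: plays the role of alpha / rank in standard LoRA
) -> List[Vector]:
    """Sketch of a Partial-LoRA linear layer: W0 x for every token,
    plus scale * B(A x) only for tokens flagged as image tokens."""
    if len(tokens) != len(is_image):
        raise ValueError("tokens and is_image must have the same length")
    outputs: List[Vector] = []
    for x, image_token in zip(tokens, is_image):
        y = matvec(w0, x)  # shared frozen path for text and image tokens
        if image_token:
            delta = matvec(lora_b, matvec(lora_a, x))
            y = [yi + scale * di for yi, di in zip(y, delta)]
        outputs.append(y)
    return outputs


out = partial_lora_linear(
    tokens=[[1.0, 2.0], [3.0, 4.0]],
    is_image=[False, True],
    w0=[[1.0, 0.0], [0.0, 1.0]],
    lora_a=[[1.0, 1.0]],
    lora_b=[[1.0], [0.0]],
)
print(out)
```

With an identity `w0`, the text token `[1, 2]` passes through unchanged, while the image token `[3, 4]` picks up the low-rank update and becomes `[10, 4]`. Keeping the update off text tokens is what lets this design add visual capacity without disturbing the language model's existing behavior on text.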

Across 28 benchmarks, IXC-2.5 outperformed existing open-source state-of-the-art models on 16, and it surpassed or came close to GPT-4V and Gemini Pro on 16 key tasks. The results are especially strong in video understanding, structured high-resolution image understanding, multi-turn multi-image dialogue, and general visual question answering, where IXC-2.5 proves highly competitive.

IXC-2.5 was developed jointly by Shanghai Artificial Intelligence Laboratory, the Chinese University of Hong Kong, SenseTime Technology Group, and Tsinghua University. The model was designed from the outset to support long-context input and output, in order to handle increasingly complex text-image understanding and composition tasks. During pre-training, IXC-2.5 extends its context window to 96K through positional-encoding extrapolation, a capacity that benefits both human-computer interaction and content creation.
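
The article does not spell out the extrapolation scheme. As an illustration only, the sketch below assumes the language model uses rotary position embeddings (RoPE) and shows one widely used way of stretching them to longer contexts, NTK-aware base scaling, in which the rotary base is enlarged so the lowest frequencies cover a longer span. Whether IXC-2.5 uses exactly this scheme is an assumption; the base of 10000 and dimension of 8 are toy values.

```python
import math
from typing import List


def rope_frequencies(dim: int, base: float = 10000.0) -> List[float]:
    """Rotary frequencies theta_i = base ** (-2i / dim) for each of the dim/2 pairs."""
    if dim % 2:
        raise ValueError("RoPE dimension must be even")
    return [base ** (-2 * i / dim) for i in range(dim // 2)]


def ntk_scaled_base(base: float, dim: int, scale: float) -> float:
    """NTK-aware scaling (an assumption here, not confirmed for IXC-2.5):
    enlarge the base so the slowest frequency becomes `scale` times slower."""
    if dim <= 2:
        raise ValueError("dim must be greater than 2")
    return base * scale ** (dim / (dim - 2))


def rotate(vec: List[float], position: int, freqs: List[float]) -> List[float]:
    """Rotate each (even, odd) pair of `vec` by the angle position * theta_i."""
    out: List[float] = []
    for i, theta in enumerate(freqs):
        x, y = vec[2 * i], vec[2 * i + 1]
        c, s = math.cos(position * theta), math.sin(position * theta)
        out.extend([x * c - y * s, x * s + y * c])
    return out


dim = 8
original = rope_frequencies(dim)
# Stretch a 24K-token training window to 96K: scale = 96K / 24K = 4.
extended = rope_frequencies(dim, ntk_scaled_base(10000.0, dim, 96 / 24))
print(original[-1] / extended[-1])  # ratio of slowest frequencies, approximately 4
```

With a scale of 4, the slowest frequency becomes four times slower, so positions up to 96K sweep roughly the angle range that positions up to 24K covered before scaling, while the fastest frequency (`theta_0 = 1`), which resolves fine-grained local order, is unchanged; intermediate frequencies are stretched by intermediate amounts. Because `rotate` is a pure rotation, the dot product of a rotated query and key depends only on their relative position, which is the property that makes this kind of stretching workable.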

For images, IXC-2.5 adopts a unified dynamic image partition strategy that adapts to any resolution and aspect ratio. For video, it concatenates frames along their short side into high-resolution images while keeping the frame indices to encode temporal order, an approach that contributes to its strong performance on video comprehension tasks.
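
The article does not give the exact partition algorithm, so the following is a hedged sketch of the general idea: resize the image onto a grid of fixed-size tiles whose layout roughly follows the original aspect ratio, without exceeding a tile budget, then cut it into tiles for the vision encoder. The 560-pixel tile size, the 12-tile budget, the grid-selection rule, and the function names `choose_grid` and `partition` are all illustrative assumptions.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in resized-image pixels


def choose_grid(width: int, height: int, max_tiles: int) -> Tuple[int, int]:
    """Pick a (cols, rows) grid that roughly follows the aspect ratio within a tile budget."""
    if width <= 0 or height <= 0 or max_tiles < 1:
        raise ValueError("invalid image size or tile budget")

    def rows_for(cols: int) -> int:
        # Rows that keep cols / rows close to width / height, with at least one row.
        return max(1, round(cols * height / width))

    # A single column always fits; clamp its rows so very tall images stay in budget.
    best = (1, min(rows_for(1), max_tiles))
    for cols in range(2, max_tiles + 1):
        rows = rows_for(cols)
        if cols * rows > max_tiles:
            break  # rows never decrease as cols grows, so wider grids cannot fit either
        best = (cols, rows)
    return best


def partition(width: int, height: int, tile: int = 560, max_tiles: int = 12) -> List[Box]:
    """Tile boxes over the image after resizing it to (cols * tile) x (rows * tile)."""
    cols, rows = choose_grid(width, height, max_tiles)
    return [
        (c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
        for r in range(rows)
        for c in range(cols)
    ]


# Usage: a 1920x1080 screenshot with the hypothetical 12-tile budget.
print(choose_grid(1920, 1080, 12), len(partition(1920, 1080)))
```

A 1920×1080 screenshot maps to a 4×2 grid of eight tiles, while a 100×5000 strip with a 6-tile budget becomes a single column of six tiles. The point of the design is that one rule serves any resolution and aspect ratio: wide and tall inputs both keep a layout close to their original shape instead of being squashed into one square.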

IXC-2.5 also extends to webpage generation: it can build webpages automatically from screenshots, free-form instructions, or résumé documents. For text-image article composition, it introduces a scalable pipeline that combines techniques including chain-of-thought prompting and Direct Preference Optimization (DPO) to produce stable, high-quality articles.
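
The IXC-2.5 paper's abstract attributes the article-quality gains to chain-of-thought prompting and Direct Preference Optimization (DPO). As a general illustration of DPO, not IXC-2.5's training code, the loss for one preference pair compares how much the trained policy, relative to a frozen reference model, prefers the chosen article over the rejected one. The inputs are assumed to be summed log-probabilities of each full response, `beta = 0.1` is an illustrative default, and the numbers in the usage line are made up.

```python
import math


def dpo_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) pair of responses.

    Each argument is the summed log-probability of a whole response under either
    the policy being trained or the frozen reference model:
        loss = -log(sigmoid(beta * ((pc - rc) - (pr - rr))))
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(m)) == log(1 + exp(-m)); split by sign so exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Made-up log-probabilities: the policy favors the chosen article more than the reference does.
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))  # below log(2), the loss at zero margin
```

The loss falls as the policy widens its preference for the chosen response beyond the reference model's, and rises when it prefers the rejected one; no separate reward model is needed, which is what makes DPO attractive for scaling up preference tuning of generated articles.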

The open-source release of IXC-2.5 is both a technical step forward and a meaningful contribution to the wider AI community. It shows how much multimodal LLMs can already do and opens new paths for future AI applications; in content creation, web design, and multimodal data processing alike, IXC-2.5 could become an important tool.

Project page: https://top.aibase.com/tool/internlm-xcomposer-2-5

Paper: https://arxiv.org/pdf/2407.03320