The University of Hong Kong and ByteDance have jointly released LlamaGen, which applies the next-token prediction paradigm of large language models to image generation with remarkable results. By redesigning the image tokenizer and scaling up model training, LlamaGen achieves leading image generation performance without relying on inductive biases for visual signals, marking a new breakthrough in the field. It performs well on the ImageNet benchmark, shows strong image quality and text alignment, and achieves significant speedups through the vLLM serving framework. The models and tools it releases are valuable resources for developers and researchers.

Product page: https://top.aibase.com/tool/llamagen

LlamaGen is a disruptive departure from traditional image generation models: it shows that plain autoregressive models, with no inductive biases for visual signals, can achieve leading image generation performance when they are scaled properly. LlamaGen is autoregressive, meaning each token the Transformer outputs is fed back as input for predicting the next token. It uses the LLaMA architecture rather than a diffusion model. This finding opens new possibilities for image generation and points to new directions for future research.
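The autoregressive loop described above can be sketched in a few lines. This is a minimal, hedged illustration, not LlamaGen's code: `toy_model` is a hypothetical stand-in for the Transformer that scores an 8-token vocabulary, and decoding is greedy for simplicity, whereas real image generators typically sample from the predicted distribution.

```python
from typing import Callable, List

# Hypothetical stand-in for a Transformer: maps the token sequence so far
# to one score (logit) per vocabulary entry.
Model = Callable[[List[int]], List[float]]


def generate(model: Model, prefix: List[int], num_new_tokens: int) -> List[int]:
    """Greedy autoregressive decoding: every predicted token is appended to
    the sequence and becomes part of the input for the next prediction."""
    tokens = list(prefix)
    for _ in range(num_new_tokens):
        logits = model(tokens)
        # Pick the highest-scoring token (greedy); real systems usually sample.
        next_token = max(range(len(logits)), key=lambda i: logits[i])
        tokens.append(next_token)
    # Return only the newly generated tokens, not the prefix.
    return tokens[len(prefix):]


def toy_model(tokens: List[int]) -> List[float]:
    """Hypothetical toy 'model' over an 8-token vocabulary that always
    prefers (last token + 1) mod 8."""
    vocab_size = 8
    target = (tokens[-1] + 1) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


print(generate(toy_model, prefix=[5], num_new_tokens=4))  # [6, 7, 0, 1]
```

In an image model the generated integers would be indices into the image tokenizer's codebook, which a decoder then turns back into pixels.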

Key features of LlamaGen include:

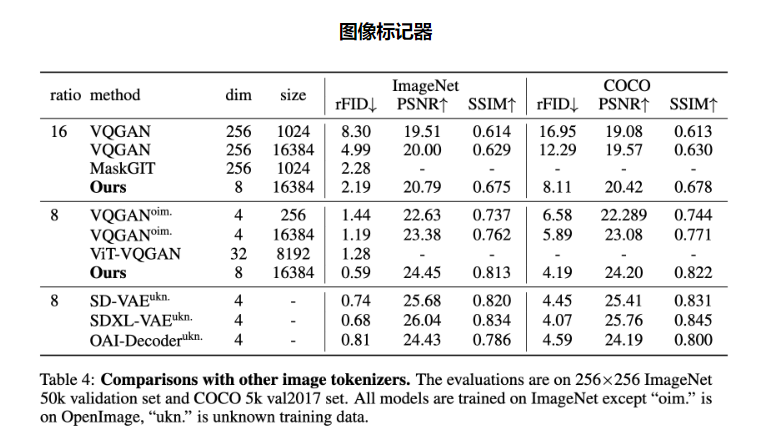

Image tokenizer: An image tokenizer with a 16x downsampling ratio, a reconstruction quality of 0.94 rFID, and 97% codebook utilization, which performs well on the ImageNet benchmark.
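Two of the tokenizer figures can be made concrete. A 16x downsampling ratio turns a 256×256 image into a 16×16 grid, i.e. 256 discrete tokens, and codebook utilization is the fraction of codebook entries that actually get selected. The sketch below assumes a generic vector-quantized tokenizer; the 2-D `codebook` and `features` values are hypothetical toy data, not LlamaGen's actual codebook size or embedding dimension, and the 0.94 rFID figure is not computed here.

```python
from typing import List, Sequence, Tuple


def token_grid(height: int, width: int, downsample: int = 16) -> Tuple[int, int]:
    """Size of the discrete token grid for an image at a given downsampling ratio."""
    return height // downsample, width // downsample


def quantize(vectors: Sequence[Sequence[float]],
             codebook: Sequence[Sequence[float]]) -> List[int]:
    """Replace each feature vector with the index of its nearest codebook entry
    (squared Euclidean distance), as a vector-quantized tokenizer does."""
    indices = []
    for v in vectors:
        dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook]
        indices.append(min(range(len(codebook)), key=dists.__getitem__))
    return indices


def codebook_usage(indices: Sequence[int], codebook_size: int) -> float:
    """Fraction of codebook entries that are selected at least once."""
    return len(set(indices)) / codebook_size


rows, cols = token_grid(256, 256)
print(rows, cols, rows * cols)  # 16 16 256

# Hypothetical toy codebook with four 2-D entries.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
features = [[0.1, 0.0], [0.9, 0.1], [0.2, 0.1], [0.1, 0.8]]
ids = quantize(features, codebook)
print(ids)                                 # [0, 1, 0, 2]
print(codebook_usage(ids, len(codebook)))  # 0.75
```

Here three of the four entries are used, giving 75% utilization; a 97% figure means almost no codebook entries go unused across the evaluation set.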

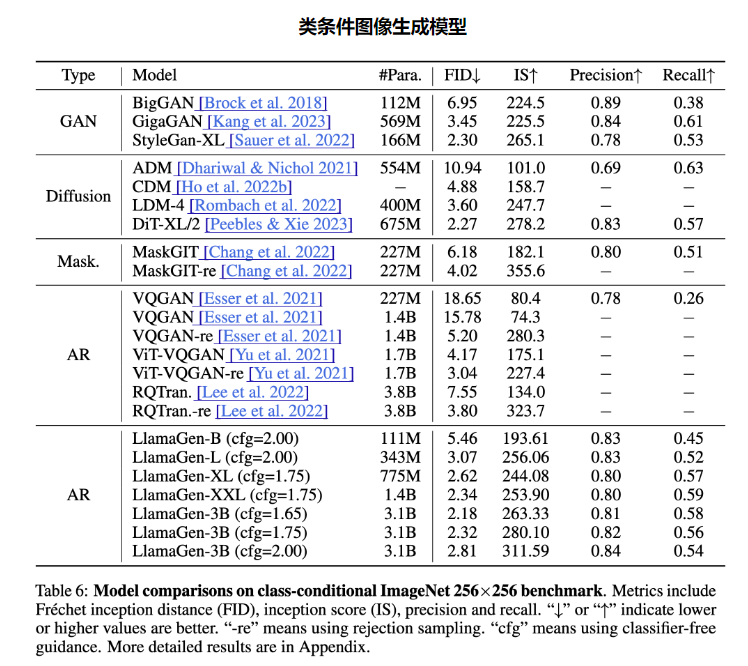

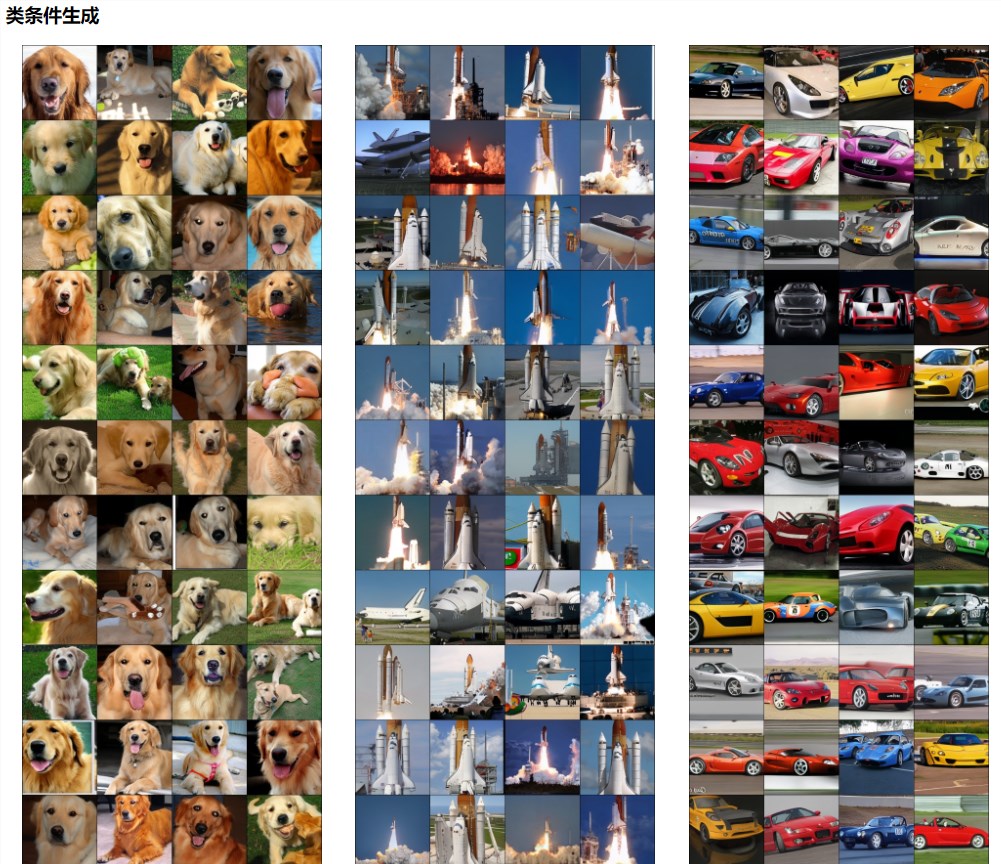

Class-conditional image generation models: A series of class-conditional image generation models ranging from 111M to 3.1B parameters, achieving an FID of 2.18 on the ImageNet 256×256 benchmark and surpassing popular diffusion models.
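The article does not say how the class label enters the model. One simple way to condition an autoregressive model, sketched below as an assumption rather than LlamaGen's documented design, is to place a single class token in front of the image tokens so that every image token is predicted after seeing it. `IMAGE_VOCAB_SIZE`, the id layout in `class_token`, and the example label `207` are hypothetical; the 1000 classes match ImageNet-1k.

```python
from typing import List, Tuple

IMAGE_VOCAB_SIZE = 16  # hypothetical toy size of the image-token vocabulary
NUM_CLASSES = 1000     # ImageNet-1k has 1000 classes


def class_token(label: int) -> int:
    """Map a class label to a token id placed after the image vocabulary
    (a hypothetical layout chosen for this sketch)."""
    if not 0 <= label < NUM_CLASSES:
        raise ValueError(f"label must be in [0, {NUM_CLASSES}), got {label}")
    return IMAGE_VOCAB_SIZE + label


def build_sequence(label: int, image_tokens: List[int]) -> List[int]:
    """Condition generation by prefixing the class token; the model then
    predicts the image tokens one by one after this prefix."""
    return [class_token(label)] + list(image_tokens)


def training_pairs(sequence: List[int]) -> List[Tuple[List[int], int]]:
    """Next-token training examples: the input is the sequence up to step t and
    the target is the token at t + 1, so the class token is only ever an input."""
    return [(sequence[: t + 1], sequence[t + 1]) for t in range(len(sequence) - 1)]


seq = build_sequence(207, [3, 9, 1])
print(seq)                  # [223, 3, 9, 1]
print(training_pairs(seq))  # [([223], 3), ([223, 3], 9), ([223, 3, 9], 1)]
```

At generation time the same prefix is supplied and the model fills in the image tokens autoregressively, so changing the label changes what the model draws.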

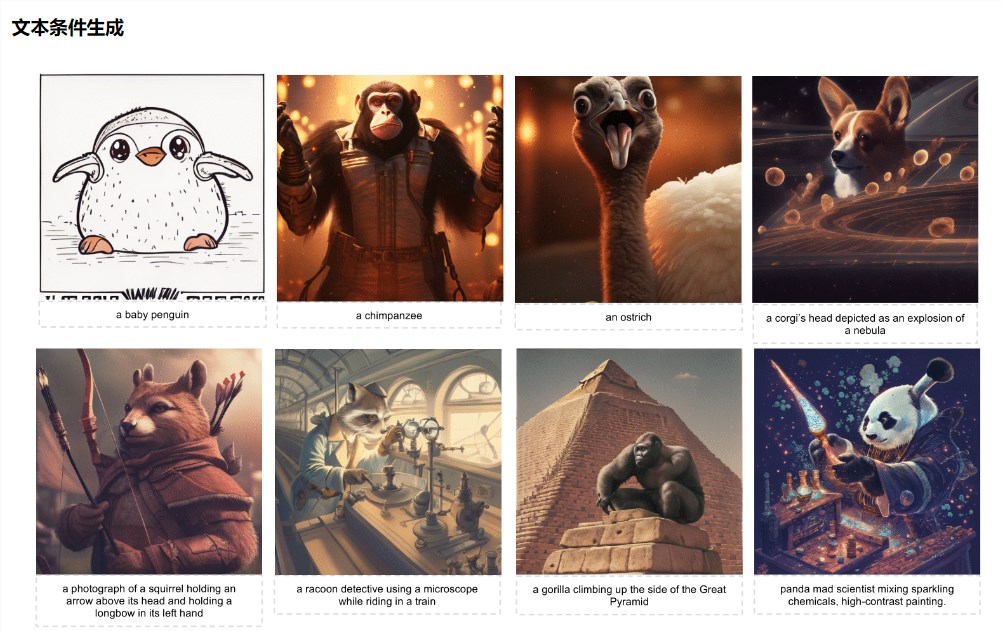

Text-conditional image generation model: A text-conditional image generation model with 775M parameters. After two-stage training on LAION-COCO data, it generates high-quality, aesthetically pleasing images with strong visual quality and text alignment.

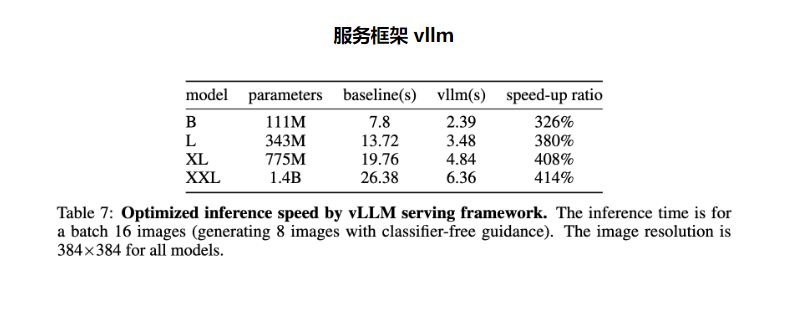

Serving framework vLLM: The team verified that LLM serving frameworks are effective at speeding up inference for image generation models, achieving a 326% to 414% speedup.

In this project, the research team released two image tokenizers, seven class-conditional generation models, and two text-conditional generation models, along with online demos and a high-throughput serving framework. These models and tools give developers and researchers ample resources for understanding and applying LlamaGen.

LlamaGen not only advances image generation technology but also offers new directions for future research in artificial intelligence. Its application and development in other fields will be worth watching.