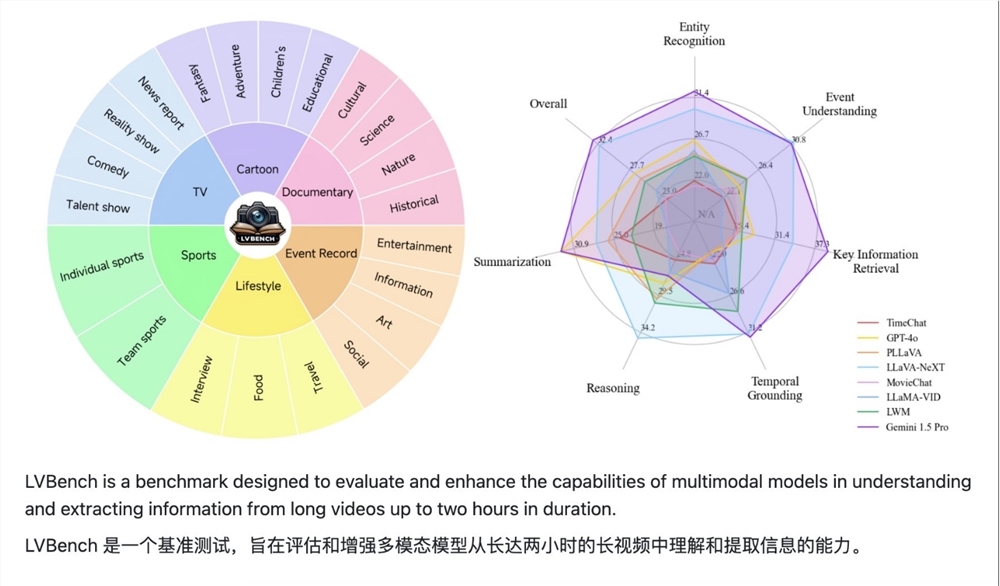

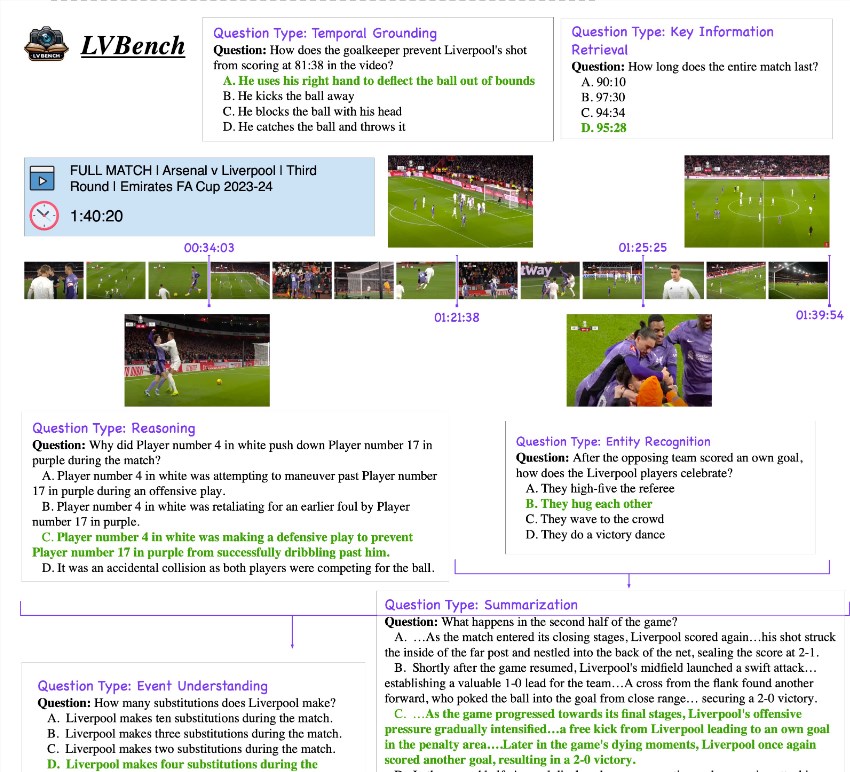

LVBench is a long-video understanding benchmark jointly launched by Zhipu, Tsinghua University and Peking University. It aims to address the difficulties that existing multimodal large language models face when processing long videos.

The benchmark provides question-answer (QA) data covering several hours of video, organized into 6 main categories and 21 sub-categories. The videos come from public sources and include TV series, sports broadcasts and everyday surveillance footage. All of the data is carefully annotated, and an LLM was used to filter the questions so that challenging ones are retained. The tasks include video summarization, event detection, character recognition and scene understanding.

LVBench is designed to test models' reasoning and operational capabilities in long-video scenarios. It is also meant to drive breakthroughs in the underlying technology, giving new impetus to long-video applications such as embodied decision-making, in-depth film and television review, and professional sports commentary.

Many research institutions have begun working with the LVBench dataset. By building large models for long-video tasks, they are gradually extending what AI can understand from long information streams. This work brings new vitality to ongoing research in video understanding, multimodal learning and related fields.

GitHub: https://github.com/THUDM/LVBench

Project: https://lvbench.github.io

Paper: https://arxiv.org/abs/2406.08035

The launch of LVBench marks a new stage in the development of long-video understanding. Its rich dataset and challenging tasks are expected to attract more researchers and accelerate AI progress in long-video understanding. They should also open up more possibilities for future applications. We look forward to more research built on LVBench.