Training large language models (LLMs) requires large amounts of high-quality data, and obtaining that data is a major challenge. Traditional web crawlers are inefficient and struggle with unstructured data, which limits LLM training and development. This article introduces Crawl4AI, an open-source tool that efficiently collects and cleans web data and outputs it in LLM-friendly formats such as JSON, HTML, and Markdown.

In the AI-driven era, large language models (LLMs) such as GPT-3 and BERT need ever more high-quality data. However, manually curating this data from the web is time-consuming and difficult to scale.

This poses a significant challenge for developers, especially when large volumes of data are required. Traditional web crawlers and scraping tools have limited ability to extract structured data: they can collect web pages, but they often cannot format the results in a way that suits LLM processing.

Crawl4AI is an open-source tool built to address this problem. It not only collects data from websites but also processes and cleans it into formats suited to LLMs, such as JSON, clean HTML, and Markdown. Its main strengths are efficiency and scalability, including the ability to process multiple URLs simultaneously, which makes it well suited to large-scale data collection.
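To make "LLM-friendly formats" concrete, the sketch below shows one way a single cleaned page could be serialized as both JSON and Markdown. It is not Crawl4AI's API or output schema; the `PageRecord` fields and the `to_json`/`to_markdown` helpers are hypothetical and use only the Python standard library.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PageRecord:
    """Hypothetical container for one cleaned page (not Crawl4AI's schema)."""
    url: str
    title: str
    paragraphs: list[str]
    links: list[str] = field(default_factory=list)


def to_json(record: PageRecord) -> str:
    """Serialize the record as pretty-printed JSON, keeping non-ASCII text as-is."""
    return json.dumps(asdict(record), ensure_ascii=False, indent=2)


def to_markdown(record: PageRecord) -> str:
    """Render the record as Markdown: title heading, source line, body, link list."""
    lines = [f"# {record.title}", "", f"Source: <{record.url}>", ""]
    for paragraph in record.paragraphs:
        lines.extend([paragraph, ""])
    if record.links:
        lines.extend(["## Links", ""])
        lines.extend(f"- <{link}>" for link in record.links)
    return "\n".join(lines).rstrip() + "\n"


record = PageRecord(
    url="https://example.com/post",
    title="Example Post",
    paragraphs=["First.", "Second."],
    links=["https://example.com/a"],
)
markdown_text = to_markdown(record)
json_text = to_json(record)
```

JSON keeps the fields machine-readable for filtering and deduplication, while Markdown preserves headings and lists in a compact form that is convenient to feed directly to a model.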

The tool also supports user-agent customization, JavaScript execution, and proxies, which help it work around network restrictions. These options let Crawl4AI adapt to different data types and page structures, so users can collect text, images, metadata, and other content in a structured way, which greatly simplifies preparing data for LLM training.
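As a generic illustration of what user-agent customization and proxy support mean at the HTTP level, the following standard-library sketch builds a request with a custom `User-Agent` header and an opener that routes traffic through a proxy. It does not use Crawl4AI, and the bot name and proxy address are hypothetical placeholders. JavaScript execution is out of scope here; it requires a headless browser rather than a plain HTTP client.

```python
import urllib.request

# Hypothetical values for illustration; substitute your own crawler name and proxy.
USER_AGENT = "ExampleResearchBot/0.1 (+https://example.com/bot)"
PROXIES = {"http": "http://proxy.example:8080", "https": "http://proxy.example:8080"}


def build_request(url: str, user_agent: str = USER_AGENT) -> urllib.request.Request:
    """Create a request that identifies the crawler with a custom User-Agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def make_proxy_opener(proxies: dict[str, str] = PROXIES) -> urllib.request.OpenerDirector:
    """Create an opener that sends HTTP(S) traffic through the given proxies."""
    return urllib.request.build_opener(urllib.request.ProxyHandler(proxies))


# Nothing is fetched here; opener.open(req) would actually perform the request.
req = build_request("https://example.com/")
opener = make_proxy_opener()
```

Identifying the crawler with an honest, contactable user agent is also good practice, since site operators can then recognize and rate-limit it rather than block it outright.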

Crawl4AI's workflow is fairly straightforward. First, users supply a set of seed URLs or define specific crawling criteria. The tool then crawls the pages while respecting site policies such as robots.txt. Once the data is captured, Crawl4AI applies extraction techniques such as XPath and regular expressions to pull out relevant text, images, and metadata. Because it can also execute JavaScript, it can capture dynamically loaded content that traditional crawlers often miss.
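Two steps of this workflow, filtering seed URLs against robots.txt and extracting fields from the fetched HTML, can be illustrated with a small standard-library sketch. This is not how Crawl4AI is implemented: the robots.txt content, URLs, and helper names (`allowed_urls`, `extract_fields`) are hypothetical, and regular expressions stand in for a proper HTML parser or XPath engine, which would be more robust on messy markup.

```python
import re
import urllib.robotparser
from html import unescape

# Hypothetical robots.txt: every crawler is asked to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

TITLE_RE = re.compile(r"<title[^>]*>(.*?)</title>", re.IGNORECASE | re.DOTALL)
LINK_RE = re.compile(r"""<a\s[^>]*href=["']([^"']+)["']""", re.IGNORECASE)


def allowed_urls(seeds: list[str], robots_lines: list[str], user_agent: str) -> list[str]:
    """Keep only the seed URLs that robots.txt permits this user agent to fetch."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_lines)
    return [url for url in seeds if parser.can_fetch(user_agent, url)]


def extract_fields(html_text: str) -> dict:
    """Pull the page title and link targets out of raw HTML with regexes."""
    match = TITLE_RE.search(html_text)
    title = unescape(match.group(1).strip()) if match else ""
    return {"title": title, "links": LINK_RE.findall(html_text)}


seeds = ["https://example.com/blog/post.html", "https://example.com/private/data.html"]
to_crawl = allowed_urls(seeds, ROBOTS_TXT.splitlines(), "ExampleBot")

page = (
    "<html><head><title>Hello &amp; Welcome</title></head>"
    "<body><a href=\"/a\">A</a> <a class=\"x\" href='/b'>B</a></body></html>"
)
fields = extract_fields(page)
```

In a real crawler the robots.txt lines would be downloaded once per host and cached, and the extracted links would become the next round of candidate URLs.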

Crawl4AI also supports parallel processing, crawling and processing multiple pages at once to reduce the time needed for large-scale collection. It includes error handling and a retry strategy that help preserve data integrity when a page fails to load or the network is unreliable. Users can customize crawl depth, frequency, and extraction rules to fit their needs, which makes the tool even more flexible.
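The combination of parallel fetching and retries can be sketched with `asyncio`: a semaphore caps how many pages are in flight, and each fetch is retried with exponential backoff before being recorded as a failure. This is a generic pattern, not Crawl4AI's internals; `TransientError`, `crawl_all`, and the simulated `make_flaky_fetch` are hypothetical names, and the fake fetch replaces real network calls so the example runs offline.

```python
import asyncio


class TransientError(Exception):
    """Hypothetical error standing in for a timeout, reset connection, or 5xx reply."""


async def fetch_with_retry(fetch, url, retries=3, base_delay=0.01):
    """Call fetch(url), retrying transient failures with exponential backoff."""
    for attempt in range(retries + 1):
        try:
            return await fetch(url)
        except TransientError:
            if attempt == retries:
                raise  # out of retries: let the caller record the failure
            await asyncio.sleep(base_delay * 2 ** attempt)


async def crawl_all(fetch, urls, max_concurrency=5, retries=3):
    """Fetch many URLs in parallel, at most max_concurrency at a time.

    Returns {url: body}, with None for URLs that still failed after retrying.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def crawl_one(url):
        async with semaphore:
            try:
                return url, await fetch_with_retry(fetch, url, retries)
            except TransientError:
                return url, None

    return dict(await asyncio.gather(*(crawl_one(u) for u in urls)))


def make_flaky_fetch(failures):
    """Build a fake fetch that fails failures[url] times before succeeding."""
    attempts = {}

    async def fetch(url):
        attempts[url] = attempts.get(url, 0) + 1
        if attempts[url] <= failures.get(url, 0):
            raise TransientError(url)
        await asyncio.sleep(0)  # yield control, as a real network call would
        return f"<html>{url}</html>"

    return fetch, attempts


fetch, attempts = make_flaky_fetch({"https://example.com/b": 2, "https://example.com/c": 10})
urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"]
results = asyncio.run(crawl_all(fetch, urls))
```

Here page `b` succeeds on its third attempt, while page `c` exhausts its four attempts and is recorded as `None`, so one bad page does not abort the whole batch.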

Crawl4AI offers an efficient, customizable way to automatically collect web data suitable for LLM training. It addresses the limitations of traditional web crawlers, provides LLM-optimized output formats, and fits a variety of LLM-driven applications. For researchers and developers looking to streamline data acquisition for machine learning and AI projects, it is a valuable tool.

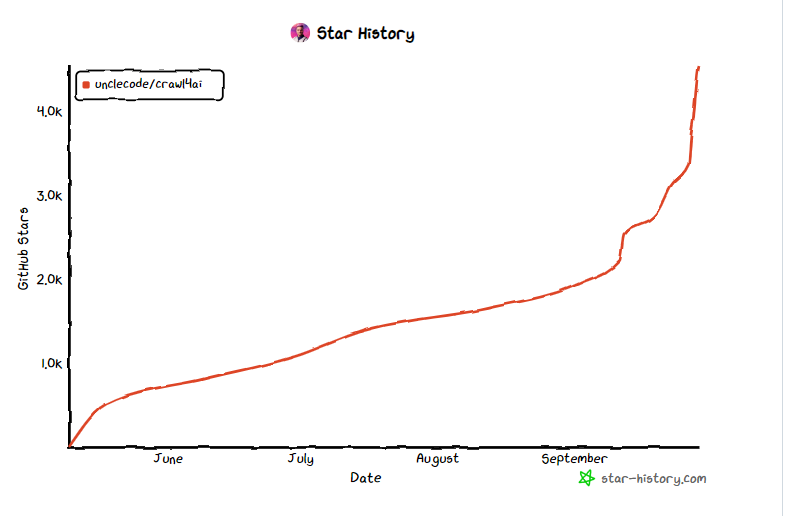

Project repository: https://github.com/unclecode/crawl4ai

Highlights:

- Crawl4AI is an open-source tool designed to simplify and optimize the data collection needed for LLM training.

- It supports parallel processing and dynamic content capture, improving the efficiency and flexibility of data collection.

- It outputs data in formats such as JSON and Markdown, which simplifies downstream processing and use.

In short, Crawl4AI is an efficient, flexible, and easy-to-use open-source tool that provides strong support for acquiring LLM training data, and developers and researchers will find it worth trying. It simplifies data collection, improves efficiency, and contributes to progress in artificial intelligence.