A wave of resistance against Meta AI's use of user data to train its models has recently swept social media. Many users have publicly voiced their opposition, sparking wide discussion about data privacy and how AI technology is applied. The editor of Downcodes analyzes this phenomenon below, covering the reasons behind it, Meta's response, and possible directions for the future.

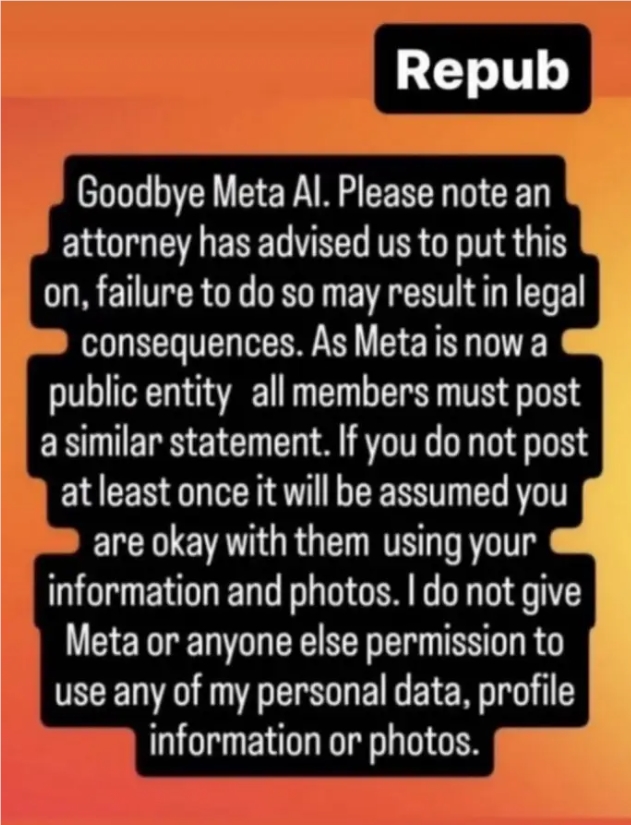

The trend has taken the form of "Goodbye, Meta AI" posts. Numerous users, including celebrities such as Tom Brady and the musician Cat Power, have posted statements on Instagram in an attempt to stop Meta from using their data to train AI models. The trend reflects users' deep concerns about data privacy and AI, and it poses a new challenge for technology giants: how to balance technological innovation against user rights.

These statements are not legally binding, and Meta has made it clear that they have no legal effect. Even so, they cannot simply be dismissed as ignorance or naivety on the part of users. What they reveal is concern about the rapid development of AI and fear that personal data will be misused.

In fact, Meta does use public Facebook posts and photos dating back to 2007 to train its AI models. Outside the EU, users have few ways to opt out, which only deepens their sense of insecurity. For most users, the only way to protect their data is to make their posts private, which is clearly not an ideal solution.

Protective statements like these are nothing new on social media. Similar posts have circulated on Facebook and Instagram for years, claiming to shield users from technology companies. Although such claims have repeatedly turned out to have no effect, they reflect the power imbalance users feel on these platforms: they enjoy free services but worry that their data will be misused. This ambivalence is rooted in Facebook's past missteps in protecting user privacy.

Ahead of the Meta Connect event, The Verge reporter Alex Heath put the question of training AI on user data directly to Mark Zuckerberg. Zuckerberg's answer was somewhat vague. He said that any new technology field raises questions about the boundaries of fair use and control, and that these questions need to be re-examined in the AI era. The response acknowledges that the problem exists but offers no concrete solution.

For Meta, balancing technological innovation with the protection of user rights will be a long and difficult challenge. The company needs to listen carefully to users and understand their concerns about their data being used for AI training. It also needs to explain its data use policy more transparently, so that users clearly understand how their data will be used, and to give them more choices.

The industry as a whole may also need to revisit its ethical standards for data use. As AI develops rapidly, deciding how user data can reasonably be used, and how to balance innovation against privacy protection, are questions that need urgent answers.

All in all, the "Goodbye, Meta AI" wave reflects public concern about AI development and data privacy, and it presents technology companies with new challenges. Going forward, striking a balance between technological innovation and user rights will be a key factor in whether AI can develop sustainably.