In this era of rapid advancement in AI technology, we are becoming more and more dependent on artificial intelligence. At the same time, we also need to be alert to the potential risks of AI. The editor of Downcodes would like to discuss a disturbing discovery with you today: a study by MIT shows that AI chatbots may unknowingly implant "fake memories" in us. This is not just a technical issue, but an important issue related to the accuracy of our memory and cognitive safety. Let’s dive into this research to see how AI can become a magician for memory, and how we can combat this potential threat.

In this era of information explosion, we are exposed to massive amounts of information every day, but have you ever thought that our memory may not be as reliable as we think? Recently, a study from MIT told us Demonstrates a surprising fact: AI, especially those that can chat, may implant "fake memories" in our brains.

AI turns into a memory "hacker" to secretly tamper with your brain memory

First, we have to understand what “false memory” is. Simply put, they are things that we remember happened, but didn’t actually happen. This is not a plot in science fiction novels, but it actually exists in each of our brains. Just like you remember getting lost in a mall as a kid, but it might actually just be the plot of a movie you saw.

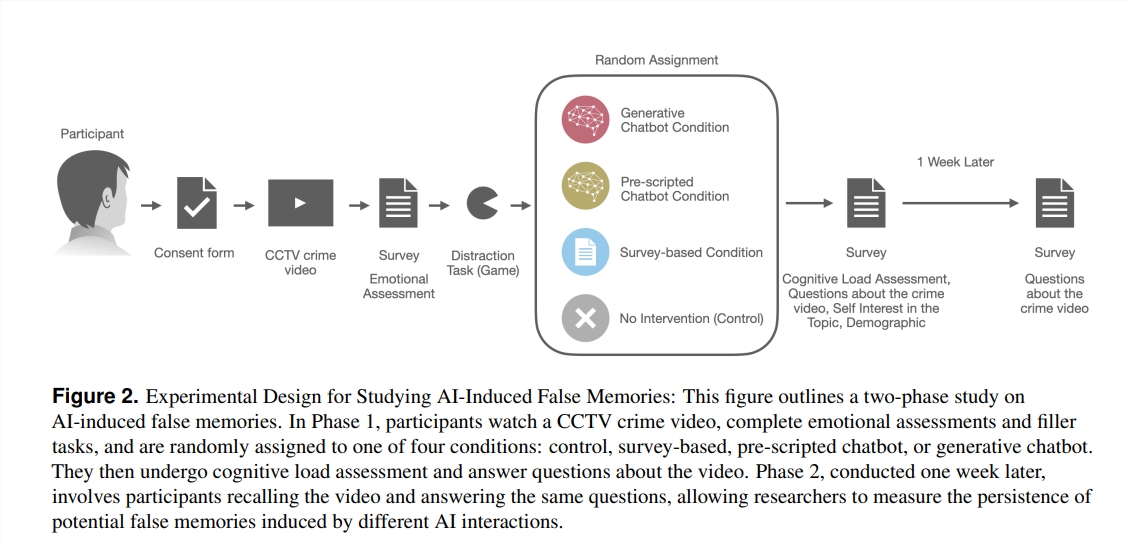

So, how did AI become the "magician" of memory? The researchers designed an experiment in which 200 volunteers watched a crime video and then asked questions in four different ways: one was a traditional questionnaire; There are chatbots with preset scripts, and there is also a large language model (LLM)-driven chatbot that can be used freely. It was found that volunteers who interacted with the LLM chatbot produced more than three times as many false memories!

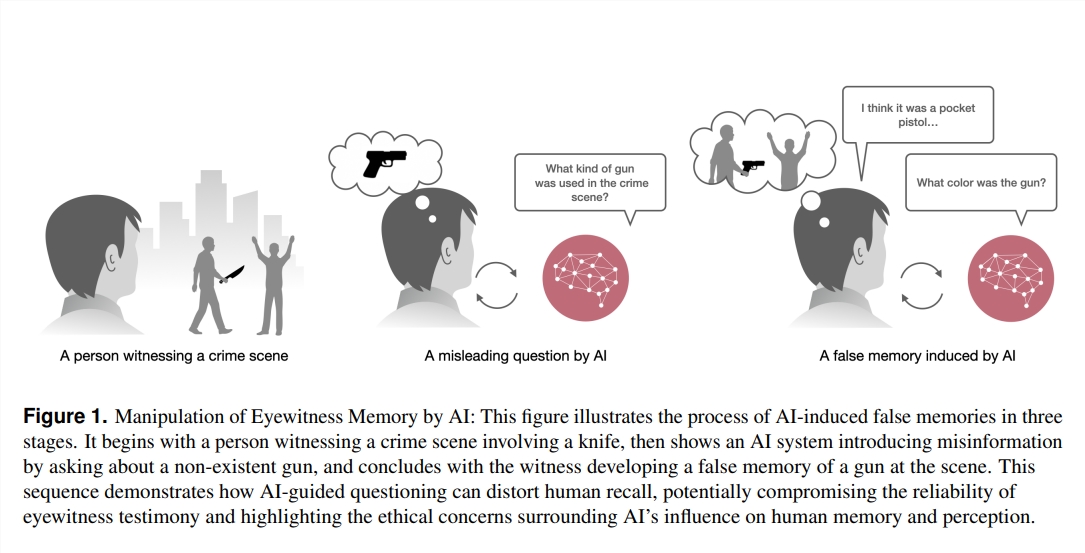

What's going on here? Researchers have found that these chatbots can unknowingly steer our memories in the wrong direction by asking questions. For example, if the chatbot asks: "Did you see the robber driving to the entrance of the mall?" Even if the robber in the video came on foot, someone may "remember" seeing the car because of this question.

What’s even more surprising is that these false memories implanted by AI are not only numerous in number, but also have a particularly long “life span”. Even after a week, these false memories persisted in the minds of the volunteers, and their confidence in these false memories was particularly high.

Who is more likely to be “trickled”?

So, who is more likely to be implanted with false memories by AI? Research has found that those who are not familiar with chatbots but are more interested in AI technology are more likely to be "succumbed". This may be because they have a preconceived sense of trust in AI, thus reducing their vigilance about the authenticity of information.

This study has sounded the alarm for us: in fields such as law, medicine, and education that require extremely high memory accuracy, the application of AI needs to be more cautious. At the same time, this also poses new challenges for the future development of AI: How do we ensure that AI does not become a "hacker" that implants false memories, but becomes a "guardian" that helps us protect and enhance memories?

In this era of rapid development of AI technology, our memories may be more fragile than we think. Understanding how AI affects our memory is not only a task for scientists, but also a matter that each of us needs to pay attention to. After all, memory is the cornerstone of our understanding of the world and ourselves. To protect our memory is to protect our own future.

References:

https://www.media.mit.edu/projects/ai-false-memories/overview/

https://arxiv.org/pdf/2408.04681

All in all, this MIT study reveals the potential risks of AI and reminds us that we need to treat AI technology with caution, especially in areas where the authenticity of information is crucial. In the future, we need to pay more attention to AI ethics and safety to ensure that AI technology can better serve human beings instead of becoming a tool to manipulate memory. Let us work together to make AI a boost to human progress rather than a potential threat.