The Tsinghua University research team launched the SonicSim mobile sound source simulation platform and SonicSet data set, aiming to solve the problem of insufficient data in mobile sound source scenarios in the field of speech processing. The editor of Downcodes will take you to understand the results of this breakthrough research, how it simulates the real acoustic environment, and how it provides high-quality data support for the training of speech separation and enhancement models.

A research team from Tsinghua University recently released a mobile sound source simulation platform called SonicSim, which aims to solve the current problem of lack of data in the field of speech processing in mobile sound source scenarios.

This platform is built on the Habitat-sim simulation platform, which can simulate the real-world acoustic environment with high fidelity and provide better data support for the training and evaluation of speech separation and enhancement models.

Most of the existing speech separation and enhancement data sets are based on static sound sources, which are difficult to meet the needs of moving sound source scenarios.

Although some real-recorded data sets also exist in the real world, their scale is limited and their collection costs are high. In contrast, although synthetic data sets are larger in scale, their acoustic simulations are often not realistic enough to accurately reflect the acoustic characteristics in real environments.

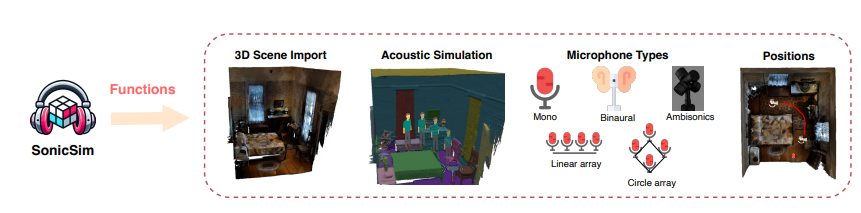

The emergence of SonicSim platform effectively solves the above problems. The platform can simulate a variety of complex acoustic environments, including obstructions, room geometry, and sound absorption, reflection, and scattering characteristics of different materials, and supports user-defined scene layout, sound source and microphone positions, microphone types, etc. parameter.

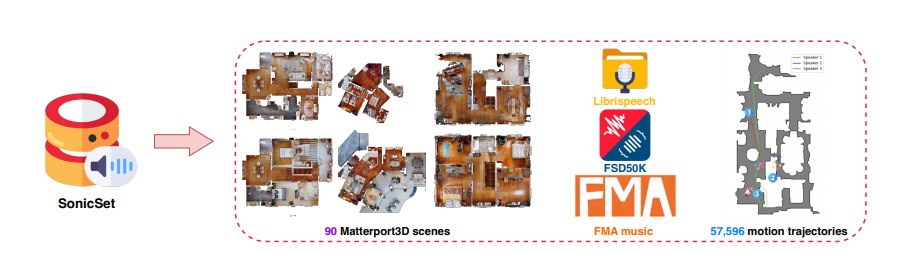

Based on the SonicSim platform, the research team also built a large-scale multi-scene mobile sound source data set called SonicSet.

This data set uses speech and noise data from LibriSpeech, Freesound Dataset50k and Free Music Archive, as well as 90 real scenes from the Matterport3D data set, which contains rich speech, environmental noise and music noise data.

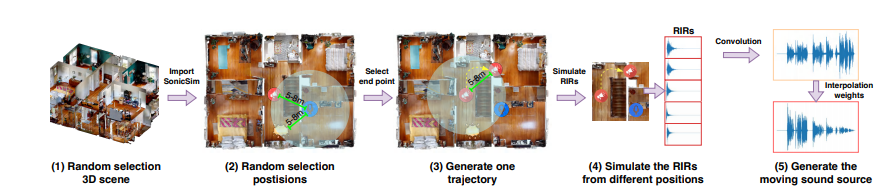

The construction process of the SonicSet data set is highly automated and can randomly generate the positions of sound sources and microphones as well as the motion trajectories of the sound sources, ensuring the authenticity and diversity of the data.

In order to verify the effectiveness of the SonicSim platform and SonicSet data set, the research team conducted a large number of experiments on speech separation and speech enhancement tasks.

The results show that the model trained on the SonicSet data set achieved better performance on the real-world recorded data set, proving that the SonicSim platform can effectively simulate the real-world acoustic environment and provide a powerful basis for research in the field of speech processing. support.

The release of the SonicSim platform and SonicSet data set has brought new breakthroughs to research in the field of speech processing. With the continuous improvement of simulation tools and optimization of model algorithms, the application of speech processing technology in complex environments will be further promoted in the future.

However, the realism of the SonicSim platform is still limited by the details of 3D scene modeling. When the imported 3D scene has missing or incomplete structures, the platform cannot accurately simulate the reverberation effect in the current environment.

Paper address: https://arxiv.org/pdf/2410.01481

The emergence of SonicSim and SonicSet has brought new hope to the development of speech processing technology, but it still needs to be continuously improved. Expect to see applications of this technology in more complex acoustic environments in the future. The editor of Downcodes will continue to pay attention to the research progress in this field.