English | Italiano | Español | Français | বাংলা | தமிழ் | ગુજરાતી | Português | हिंदी | తెలుగు | Română | العربية | नेपाली | 简体中文

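If you are interested in contributing to this project, please review CONTRIBUTING.md for detailed instructions on how to get started. Your contributions are greatly appreciated!

Computer science is the study of computers and computing and their theoretical and practical applications. It applies the principles of mathematics, engineering, and logic to a wide range of problems, including algorithm formulation, software and hardware development, and artificial intelligence.

A computer is a device designed to perform high-speed mathematical, logical, or data-processing operations. It is a programmable electronic machine that can assemble, store, correlate, and otherwise process information efficiently.

Boolean logic is a branch of mathematics that deals with truth values, specifically true and false. It works as a binary system in which 0 represents false and 1 represents true. This system, known as Boolean algebra, was first introduced by George Boole in 1854.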

| Operator | Name | Description |
|---|---|---|
| ! | NOT | Negates (inverts) the value of the operand. |
| && | AND | Returns true if both operands are true. |
| \|\| | OR | Returns true if at least one operand is true. |

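| Operator | Name | Description |
|---|---|---|
| () | Parentheses | Lets you group keywords and control the order in which terms are searched. |
| "" | Quotation marks | Returns results that contain the exact phrase. |
| * | Asterisk | Returns results that contain variations of the keyword. |
| ⊕ | XOR | Returns true if the operands differ. |
| ⊽ | NOR | Returns true if all operands are false. |
| ⊼ | NAND | Returns false only if both inputs are true. |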

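Digital circuits deal with Boolean signals (1 and 0). They are the fundamental building blocks of a computer: the components and circuits used to build the processor units and memory devices that a computer system depends on.

Truth tables are mathematical tables used in logic and digital circuit design. They map out the functionality of a circuit, which helps when designing complex digital circuits.

A truth table has one column for each input variable and one final column that shows every possible result of the logical operation the table represents.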
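As a minimal sketch of the idea, the snippet below builds truth tables for a few two-input gates. The names `GATES` and `truth_table` are illustrative assumptions, not part of this repository.

```python
from itertools import product

# Each gate maps two Boolean inputs to one Boolean output.
GATES = {
    "AND": lambda a, b: a and b,
    "OR": lambda a, b: a or b,
    "XOR": lambda a, b: a != b,
    "NOR": lambda a, b: not (a or b),
    "NAND": lambda a, b: not (a and b),
}


def truth_table(gate):
    """Return one (a, b, result) row for every combination of two inputs."""
    return [(a, b, gate(a, b)) for a, b in product([False, True], repeat=2)]


if __name__ == "__main__":
    for name, gate in GATES.items():
        print(name)
        for a, b, out in truth_table(gate):
            # Print 1 for true and 0 for false, as in a circuit diagram.
            print(f"  {int(a)} {int(b)} -> {int(out)}")
```

Each table has one column per input and a final column for the output, matching the layout described above.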

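There are two types of digital circuits: combinational and sequential.

When designing digital circuits, especially complex ones, it is important to use Boolean algebra tools (for example, Karnaugh maps) to aid the design process. It is also important to break everything down into smaller circuits and examine the truth table each of those smaller circuits needs. Do not try to tackle the whole circuit at once; break it down and gradually put the pieces together.

A number system is a mathematical system for expressing numbers. It consists of a set of symbols used to represent numbers and a set of rules for manipulating those symbols. The symbols used in a number system are called numerals.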

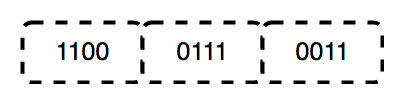

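Binary is a base-2 number system invented by Gottfried Leibniz that consists of only two digits: 0 and 1. This system is the basis of all binary code and is used to encode digital data, including computer processor instructions. In binary, the digits represent states: 0 corresponds to "off" and 1 corresponds to "on".

In a transistor, "0" represents no flow of electricity, while "1" represents electricity being allowed to flow. This physical representation of numbers lets computers perform calculations and operations efficiently.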
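To make the base-2 representation concrete, here is a small sketch that converts between decimal integers and binary digit strings by hand. The helper names `to_binary` and `from_binary` are assumptions for illustration; in practice Python's built-in `bin()` and `int(text, 2)` do the same job.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to its base-2 digit string."""
    if n < 0:
        raise ValueError("only non-negative integers are supported")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # remainder is the next lowest bit
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Convert a base-2 digit string back to an integer."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift left by one place, add the bit
    return value


if __name__ == "__main__":
    print(to_binary(13))        # 1101
    print(from_binary("1101"))  # 13
```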

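Binary remains the core language of computers and is used in electronics and hardware for several key reasons.

The central processing unit (CPU) is the most important part of any computer. The CPU sends signals to control the other parts of the computer, much as a brain controls a body. The CPU is an electronic machine that works through a list of things for the computer to do, called instructions. It reads the list of instructions and runs (executes) each one in order. A list of instructions that a CPU can run is a computer program. A CPU may process more than one instruction at a time in sections called "cores". A CPU with four cores can process four programs at once. The CPU itself is made of three main components. They are:

Registers are small amounts of high-speed memory contained within the CPU. A register is a collection of "flip-flops" (circuits used to store 1 bit of memory). The processor uses registers to hold the small amounts of data it needs during processing. A CPU may have several sets of registers, which are called "cores". Registers also help with arithmetic and logic operations.

Arithmetic operations are mathematical calculations that the CPU performs on numerical data stored in registers. These operations include addition, subtraction, multiplication, and division. Logic operations are Boolean calculations that the CPU performs on binary data stored in registers. These operations include comparisons (for example, testing whether two values are equal) and logical operations (for example, AND, OR, NOT).

Registers are essential for these operations because they let the CPU access and manipulate small amounts of data quickly. By keeping frequently accessed data in registers, the CPU avoids the slow process of retrieving data from memory.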

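Larger amounts of data can be stored in the cache (pronounced "cash"), a very fast memory located on the same integrated circuit as the registers. The cache is used for data that is accessed frequently as a program runs. Even larger amounts of data can be stored in RAM. RAM stands for random-access memory, a type of memory that holds data and instructions moved from disk storage until the processor needs them.

Cache memory is a chip-based computer component that makes retrieving data from the computer's memory more efficient. It acts as a temporary storage area from which the processor can easily retrieve data. This temporary storage area, known as a cache, is more readily available to the processor than the computer's main memory source, typically some form of DRAM.

Cache memory is sometimes called CPU (central processing unit) memory because it is typically integrated directly into the CPU chip or placed on a separate chip that has its own bus interconnect with the CPU. It is therefore more accessible to the processor and can increase efficiency because it is physically close to the processor.

To be close to the processor, cache memory needs to be much smaller than main memory, so it has less storage space. It is also more expensive than main memory because it is a more complex chip that yields higher performance.

What cache memory sacrifices in size and price, it makes up for in speed. Cache memory operates 10 to 100 times faster than RAM and needs only a few nanoseconds to respond to a CPU request.

The hardware used for cache memory is high-speed static random-access memory (SRAM). The hardware used for a computer's main memory is dynamic random-access memory (DRAM).

Cache memory should not be confused with the broader term cache. A cache is a temporary store of data that can exist in both hardware and software. Cache memory refers to the specific hardware component that allows computers to create caches at various levels of the network.

Random-access memory (RAM) is a form of computer memory that can be read and changed in any order, typically used to store working data and machine code. A random-access memory device allows data items to be read or written in almost the same amount of time regardless of the physical location of the data inside the memory. This contrasts with other direct-access data storage media (such as hard disks, CD-RWs, DVD-RWs, and the older magnetic tapes and drum memory), where access time depends on the physical location of the data because of mechanical limitations such as media rotation and arm movement.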

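In computer science, an instruction is a single operation of a processor, defined by the processor's instruction set. A computer program is a list of instructions that tells a computer what to do. Everything a computer does is done by running a computer program. Programs stored in the computer's memory ("internal programming") let the computer do one thing after another, even with breaks in between.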
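The way a processor steps through a list of instructions can be sketched with a toy interpreter. The three-instruction set below (`LOAD`, `ADD`, `HALT`) and the register names `A` and `B` are invented for illustration and do not correspond to any real processor.

```python
def run(program):
    """Execute a list of toy instructions and return the final registers."""
    registers = {"A": 0, "B": 0}
    pc = 0  # program counter: index of the next instruction to execute
    while pc < len(program):
        op, *args = program[pc]  # fetch and decode
        if op == "LOAD":         # LOAD reg, value: put a constant in a register
            reg, value = args
            registers[reg] = value
        elif op == "ADD":        # ADD dst, src: dst = dst + src
            dst, src = args
            registers[dst] += registers[src]
        elif op == "HALT":       # stop executing
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
        pc += 1                  # move on to the next instruction
    return registers


if __name__ == "__main__":
    program = [("LOAD", "A", 2), ("LOAD", "B", 3), ("ADD", "A", "B"), ("HALT",)]
    print(run(program))  # {'A': 5, 'B': 3}
```

A real CPU does the same fetch, decode, and execute cycle in hardware, with instructions encoded as binary numbers rather than Python tuples.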

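A programming language is any set of rules that converts strings, or graphical program elements in the case of visual programming languages, into various kinds of machine code output. Programming languages are one kind of computer language and are used in computer programming to implement algorithms.

Programming languages are often divided into two categories.

There are also several different programming paradigms. Programming paradigms are different ways or styles in which a given program or programming language can be organized. Each paradigm consists of certain structures, features, and opinions about how common programming problems should be tackled.

Programming paradigms are not languages or tools. You cannot "build" anything with a paradigm. They are more like a set of ideals and guidelines that many people have agreed on, followed, and expanded upon. Programming languages are not always tied to a specific paradigm. Some languages were built with a particular paradigm in mind and have features that facilitate that kind of programming more than others (Haskell and functional programming is a good example). There are also "multi-paradigm" languages, in which you can adapt your code to fit one paradigm or another (JavaScript and Python are good examples).

In programming, a data type is a classification that specifies which kind of value a variable holds and which mathematical, relational, or logical operations can be applied to it without causing an error.

Primitive data types are the most basic data types in a programming language. They are the building blocks of more complex data types. Primitive data types are predefined by the programming language and are named by reserved keywords.

Non-primitive data types are also known as reference data types. They are created by the programmer rather than defined by the programming language. Non-primitive data types are also called composite data types because they are composed of other types.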
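The distinction can be illustrated with a short Python sketch. Python does not have primitives in the strict sense that languages such as Java or C do, so the built-in `int`, `float`, `bool`, and `str` stand in for them here; the `Student` class and `average` function are hypothetical examples of a programmer-defined composite type.

```python
from dataclasses import dataclass

# Built-in types, standing in for primitive data types.
age = 30          # int
height = 1.75     # float
enrolled = True   # bool
name = "Kim"      # str


@dataclass
class Student:
    """A composite type built from simpler types."""
    name: str
    grades: list


def average(student: Student) -> float:
    """Arithmetic is valid here because the grades are numbers."""
    return sum(student.grades) / len(student.grades)


if __name__ == "__main__":
    print(average(Student("Kim", [90, 80])))
    try:
        "3" + 4  # mixing str and int is not a valid operation
    except TypeError as error:
        print("type error:", error)
```

The `TypeError` shows the data type doing its job: it rules out an operation that has no meaning for the values involved.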

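In computer programming, a statement is a syntactic unit of an imperative programming language that expresses some action to be carried out. A program written in such a language is formed by a sequence of one or more statements. A statement may have internal components (for example, expressions). Every programming language has two main types of statements that are needed to build the logic of the code.

There are mainly two types of conditional statements.

There are mainly three types of loops.

A function is a block of statements that performs a specific task. Functions accept data, process it, and return a result or execute it. Functions are written primarily to support the concept of reusability. Once a function is written, it can be called easily without repeating the same code.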
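As a small illustration, the sketch below combines a loop, a conditional statement, and a reusable function. The function name `count_even` is an assumption chosen for this example.

```python
def count_even(numbers):
    """Return how many values in `numbers` are even."""
    count = 0
    for number in numbers:     # loop: visit every item once
        if number % 2 == 0:    # conditional: act only on even values
            count += 1
    return count


if __name__ == "__main__":
    # The same function is reused with different inputs.
    print(count_even([1, 2, 3, 4, 6]))
    print(count_even(range(10)))
```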

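Different functional languages use different syntax to write functions.

Read more about functions here

In computer science, a data structure is a data organization, management, and storage format that enables efficient access and modification. More precisely, a data structure is a collection of data values, the relationships among them, and the functions or operations that can be applied to the data.

Algorithms are the sets of steps needed to complete a computation. They are at the heart of what our devices do, and they are not a new concept. Algorithms have been needed to complete tasks more efficiently ever since mathematics itself was developed. Modern computing problems such as sorting and graph search show how algorithms have been made more efficient, so that you can more easily find cheap airfare or map directions to Winterfell, a restaurant, or anywhere else.

The time complexity of an algorithm estimates how much time the algorithm will use for a given input. The idea is to express efficiency as a function whose parameter is the size of the input. By calculating the time complexity, we can determine whether an algorithm is fast enough without implementing it.

Space complexity refers to the total amount of memory space used by an algorithm or program, including the space taken by the input values. To determine space complexity, calculate the space occupied by the variables in the algorithm or program.

Sorting is the process of arranging a list of items in a particular order. For example, a list of names can be sorted alphabetically, and a list of numbers can be sorted from smallest to largest. Sorting is a common task, and it can be done in many different ways.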
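One of the simplest ways to sort is insertion sort. The sketch below is a minimal version that returns a new sorted list; the function name `insertion_sort` is illustrative and this is not necessarily how the repository implements it.

```python
def insertion_sort(items):
    """Return a new list with the items in ascending order."""
    result = list(items)  # copy so the caller's list is left unchanged
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift larger items one slot to the right to make room.
        while j >= 0 and result[j] > current:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


if __name__ == "__main__":
    print(insertion_sort([5, 2, 9, 1]))             # [1, 2, 5, 9]
    print(insertion_sort(["Mina", "Ada", "Jun"]))   # ['Ada', 'Jun', 'Mina']
```

The same function sorts numbers and names because it relies only on the `>` comparison.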

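Searching is the process of finding a particular target element inside a container. Search algorithms are designed to check whether an element exists in a data structure, or to retrieve it from where it is stored.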
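Two common approaches can be sketched as follows. Linear search works on any list, while binary search assumes the list is already sorted. The function names are illustrative.

```python
def linear_search(items, target):
    """Check items one by one; return the index of target or -1."""
    for index, value in enumerate(items):
        if value == target:
            return index
    return -1


def binary_search(sorted_items, target):
    """Halve the search range each step; requires sorted input."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1    # target can only be in the right half
        else:
            high = mid - 1   # target can only be in the left half
    return -1


if __name__ == "__main__":
    numbers = [1, 3, 5, 7, 9, 11]
    print(linear_search(numbers, 7))  # 3
    print(binary_search(numbers, 7))  # 3
    print(binary_search(numbers, 4))  # -1
```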

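Strings are one of the most used and most important data structures in programming. This repository contains a few of the most used string algorithms, which help achieve faster search times and improve our code.

Graph search is the process of searching through a graph to find a particular node. A graph is a data structure that consists of a finite (and possibly mutable) set of vertices (also called nodes or points), together with a set of unordered pairs of these vertices for an undirected graph, or a set of ordered pairs for a directed graph. These pairs are known as edges, arcs, or lines for an undirected graph, and as arrows, directed edges, directed arcs, or directed lines for a directed graph. The vertices may be part of the graph structure, or they may be external entities represented by integer indices or references. Graphs are one of the most useful data structures for real-world applications. They are used to model pairwise relations between objects. For example, in an airline route network the cities are the vertices and the flight routes are the edges. Graphs are also used to represent networks: the Internet can be modeled as a graph in which computers are the vertices and the links between computers are the edges. Graphs are also used in social networks such as LinkedIn and Facebook, and in many other applications such as circuit design and aeronautical scheduling.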
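A minimal sketch of graph search, using breadth-first search over an adjacency-list dictionary. The route network below is made-up sample data, and `bfs_path` is an illustrative name.

```python
from collections import deque


def bfs_path(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    queue = deque([[start]])   # each queue entry is a path from start
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


# Hypothetical airline routes: cities are vertices, routes are edges.
ROUTES = {
    "Seoul": ["Tokyo", "Singapore"],
    "Tokyo": ["Seoul", "Los Angeles"],
    "Singapore": ["Seoul", "London"],
    "Los Angeles": ["Tokyo", "London"],
    "London": ["Singapore", "Los Angeles"],
}

if __name__ == "__main__":
    print(bfs_path(ROUTES, "Seoul", "London"))  # ['Seoul', 'Singapore', 'London']
```

Breadth-first search explores all vertices one edge away before those two edges away, which is why the first path it finds uses the fewest edges.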

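Dynamic programming is both a mathematical optimization method and a computer programming method. Richard Bellman developed the method in the 1950s, and it has found applications in numerous fields, from aerospace engineering to economics. In both contexts, it refers to simplifying a complicated problem by breaking it down into simpler subproblems in a recursive manner. While some decision problems cannot be taken apart this way, decisions that span several points in time do often break apart recursively. Likewise, in computer science, if a problem can be solved optimally by breaking it into subproblems and then recursively finding the optimal solutions to the subproblems, it is said to have optimal substructure. Dynamic programming is one way to solve problems with these properties. The process of breaking a complicated problem down into simpler subproblems is called "divide and conquer".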
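As an illustration of optimal substructure, the sketch below solves the classic minimum-coin change problem bottom-up: the best answer for an amount is built from the best answers for smaller amounts. The problem choice and the name `min_coins` are assumptions made for this example.

```python
def min_coins(coins, amount):
    """Return the fewest coins that sum to amount, or None if impossible."""
    INF = float("inf")
    # best[v] holds the fewest coins needed to make value v.
    best = [0] + [INF] * amount
    for value in range(1, amount + 1):
        for coin in coins:
            # Reuse the already-solved subproblem for (value - coin).
            if coin <= value and best[value - coin] + 1 < best[value]:
                best[value] = best[value - coin] + 1
    return None if best[amount] == INF else best[amount]


if __name__ == "__main__":
    print(min_coins([1, 3, 4], 6))   # 2  (3 + 3)
    print(min_coins([1, 2, 5], 11))  # 3  (5 + 5 + 1)
    print(min_coins([2], 3))         # None
```

Each subproblem is solved once and stored in `best`, so the running time is proportional to `amount * len(coins)`.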

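Greedy algorithms are a simple, intuitive class of algorithms that can be used to find the optimal solution to some optimization problems. They are called greedy because at each step they make the choice that looks best at that moment. This means that greedy algorithms are not guaranteed to return a globally optimal solution; instead they make locally optimal choices in the hope of reaching a global optimum. A greedy algorithm solves an optimization problem correctly when the problem has the following property: at every step, we can make the choice that looks best right now and still end up with an optimal solution to the whole problem.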
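A short sketch shows both sides of this. Making change by always taking the largest coin that fits is optimal for some coin systems but not for others. The function name `greedy_change` and the coin sets are illustrative assumptions.

```python
def greedy_change(coins, amount):
    """Make change by always taking the largest coin that still fits.

    Returns the list of coins used, or None if the greedy choice gets stuck.
    """
    used = []
    for coin in sorted(coins, reverse=True):
        while amount >= coin:
            amount -= coin
            used.append(coin)
    return used if amount == 0 else None


if __name__ == "__main__":
    # With these denominations the greedy choice happens to be optimal.
    print(greedy_change([25, 10, 5, 1], 63))  # [25, 25, 10, 1, 1, 1]
    # Here it is not: greedy uses three coins, but 3 + 3 needs only two.
    print(greedy_change([4, 3, 1], 6))        # [4, 1, 1]
```

The second call is a case where the locally best choice (taking the 4) rules out the globally best answer.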

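Backtracking is an algorithmic technique for solving problems recursively by building a solution incrementally, one piece at a time, and discarding any partial solution that fails to satisfy the constraints of the problem at any point in time (here, "time" means the time elapsed until a given level of the search tree is reached).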
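The N-Queens puzzle is a common way to illustrate backtracking: place one queen per row and undo a placement as soon as it leads nowhere. The sketch below is one possible formulation; the function names are illustrative.

```python
def solve_n_queens(n):
    """Return every way to place n non-attacking queens on an n x n board.

    Each solution is a list where index = row and value = column.
    """
    solutions = []
    cols = []  # cols[r] is the column of the queen already placed in row r

    def is_safe(row, col):
        for r, c in enumerate(cols):
            # Same column, or same diagonal (column gap equals row gap).
            if c == col or abs(c - col) == row - r:
                return False
        return True

    def place(row):
        if row == n:                 # all rows filled: record a solution
            solutions.append(list(cols))
            return
        for col in range(n):
            if is_safe(row, col):
                cols.append(col)     # tentatively place a queen
                place(row + 1)       # try to complete the board
                cols.pop()           # backtrack: undo and try the next column

    place(0)
    return solutions


if __name__ == "__main__":
    print(solve_n_queens(4))       # [[1, 3, 0, 2], [2, 0, 3, 1]]
    print(len(solve_n_queens(8)))  # 92
```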

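Branch and bound is a general technique for solving combinatorial optimization problems. It is a systematic enumeration technique that reduces the number of candidate solutions by using the structure of the problem to eliminate candidates that cannot possibly be optimal.

Time complexity: the number of times a particular set of instructions is expected to execute, rather than the total time taken. Actual running time depends on external factors such as processor speed and the compiler used, so time complexity counts operations instead of measuring time.

Space complexity: the total memory space a program consumes in order to execute.

Both are calculated as a function of the input size (n). The time complexity of an algorithm is expressed in big O notation.

The efficiency of an algorithm depends on these two parameters.

Types of time complexity:

Some common time complexities are:

O(1): This denotes constant time. O(1) usually means that an algorithm takes the same amount of time regardless of the input size. Hash map lookups are a typical example of (average-case) constant time.

O(log n): This denotes logarithmic time. O(log n) means that the remaining work shrinks with each step of the operation. Searching for an element in a balanced binary search tree (BST) is a good example of logarithmic time.

O(n): This denotes linear time. O(n) means that performance is directly proportional to the input size. In simple terms, the number of inputs and the time taken to process them are proportional. Linear search in an array is a good example of linear time complexity.

O(n^2): This denotes quadratic time. O(n^2) means that performance is directly proportional to the square of the input size. In simple terms, the time taken grows with the square of the input size. Nested loops over the same input are a typical example of quadratic time complexity.

O(n log n): This denotes linearithmic (log-linear) time. O(n log n) means that performance is n times that of O(log n). Divide-and-conquer algorithms such as merge sort are good examples: the algorithm repeatedly halves the set, which gives O(log n) levels of division, and merging the sorted pieces takes O(n) time per level, so merge sort takes O(n log n) time overall.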
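To see how these growth rates differ in practice, the sketch below counts the steps taken by three simple loops instead of timing them. The function names are illustrative assumptions.

```python
def halving_steps(n):
    """O(log n): the remaining work is halved on every step."""
    steps = 0
    while n > 1:
        n //= 2
        steps += 1
    return steps


def linear_steps(n):
    """O(n): one step per input element."""
    steps = 0
    for _ in range(n):
        steps += 1
    return steps


def quadratic_steps(n):
    """O(n^2): a nested loop visits every pair of positions."""
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1
    return steps


if __name__ == "__main__":
    for n in (16, 256, 1024):
        print(n, halving_steps(n), linear_steps(n), quadratic_steps(n))
```

For n = 1024 the logarithmic loop runs 10 times, the linear loop 1,024 times, and the quadratic loop 1,048,576 times.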

| Algorithm | Time complexity (best) | Time complexity (average) | Time complexity (worst) | Space complexity (worst) |
|---|---|---|---|---|
| Selection sort | Ω(n^2) | Θ(n^2) | O(n^2) | O(1) |
| Bubble sort | Ω(n) | Θ(n^2) | O(n^2) | O(1) |
| Insertion sort | Ω(n) | Θ(n^2) | O(n^2) | O(1) |
| Heap sort | Ω(n log(n)) | Θ(n log(n)) | O(n log(n)) | O(1) |
| Quick sort | Ω(n log(n)) | Θ(n log(n)) | O(n^2) | O(n) |
| Merge sort | Ω(n log(n)) | Θ(n log(n)) | O(n log(n)) | O(n) |
| Bucket sort | Ω(n + k) | Θ(n + k) | O(n^2) | O(n) |
| Radix sort | Ω(nk) | Θ(nk) | O(nk) | O(n + k) |
| Counting sort | Ω(n + k) | Θ(n + k) | O(n + k) | O(k) |
| Shell sort | Ω(n log(n)) | Θ(n log(n)) | O(n^2) | O(1) |
| Tim sort | Ω(n) | Θ(n log(n)) | O(n log(n)) | O(n) |
| Tree sort | Ω(n log(n)) | Θ(n log(n)) | O(n^2) | O(n) |
| Cube sort | Ω(n) | Θ(n log(n)) | O(n log(n)) | O(n) |

| Algorithm | Time complexity (best) | Time complexity (average) | Time complexity (worst) |
|---|---|---|---|
| Linear search | O(1) | O(n) | O(n) |
| Binary search | O(1) | O(log n) | O(log n) |

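Alan Turing (born June 23, 1912, London; died June 7, 1954, Wilmslow, Cheshire) was an English mathematician and logician. He studied at the University of Cambridge and at Princeton's Institute for Advanced Study. In his 1936 paper "On Computable Numbers," he proved that there cannot be any universal algorithmic method of determining truth in mathematics and that mathematics will always contain undecidable (as opposed to unknown) propositions. That paper also introduced the Turing machine. He believed that computers would eventually be capable of thought indistinguishable from that of a human, and he proposed a simple test (see Turing test) to assess this capability. His papers on the subject are widely acknowledged as the foundation of research in artificial intelligence. He did valuable work in cryptography during World War II and played an important role in breaking the Enigma code that Germany used for radio communications. After the war, he taught at the University of Manchester and began work on what is now known as artificial intelligence. In the midst of this groundbreaking work, Turing was found dead in his bed, poisoned by cyanide. His death followed his arrest for a homosexual act (then a crime) and a sentence of 12 months of hormone therapy.

Following a public campaign in 2009, British Prime Minister Gordon Brown made an official public apology on behalf of the British government for the appalling way Turing had been treated. Queen Elizabeth II granted him a posthumous pardon in 2013. The term "Alan Turing law" is now used informally to refer to a 2017 law in the United Kingdom that retroactively pardoned men who were cautioned or convicted under historical legislation that outlawed homosexual acts.

Turing has an extensive legacy, with statues of him and many things named after him, including an annual award for computer science innovations. He appears on the Bank of England £50 note, which was released on 23 June 2021 to coincide with his birthday. A 2019 BBC series, as voted by the audience, named him the greatest person of the 20th century.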

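Software engineering is the branch of computer science that deals with the design, development, testing, and maintenance of software applications. Software engineers apply engineering principles and knowledge of programming languages to build software solutions for end users.

Let's look at the various definitions of software engineering:

Successful engineers know how to use the right programming languages, platforms, and architectures to develop everything from computer games to network control systems. In addition to building their own systems, software engineers also test, improve, and maintain software built by other engineers.

In this role, day-to-day tasks might include:

The software engineering process involves several phases, including requirements gathering, design, implementation, testing, and maintenance. By following a disciplined approach to software development, software engineers can create high-quality software that meets the needs of its users.

The first phase of software engineering is requirements gathering. In this phase, the software engineer works with the client to determine the functional and non-functional requirements of the software. Functional requirements describe what the software should do, while non-functional requirements describe how well it should do it. Requirements gathering is a critical phase because it lays the foundation for the entire software development process.

After the requirements are gathered, the next phase is design. In this phase, the software engineer creates a detailed plan for the software's architecture and functionality. This plan includes a software design document that specifies the software's structure, behavior, and interactions with other systems. The software design document is essential because it serves as a blueprint for the implementation phase.

The implementation phase is where the software engineer writes the actual code for the software, turning the design document into working software. The implementation phase involves writing the code, compiling it, and testing it to make sure it meets the requirements specified in the design document.

Testing is a crucial phase of software engineering. In this phase, the software engineer checks that the software functions correctly, is reliable, and is easy to use. This involves several types of testing, including unit testing, integration testing, and system testing. Testing ensures that the software meets the requirements and functions as expected.

The final phase of software engineering is maintenance. In this phase, the software engineer makes changes to the software to correct errors, add new features, or improve performance. Maintenance is an ongoing process that continues throughout the lifetime of the software.

Data science extracts valuable insights from often messy data by applying computer science, statistics, and knowledge of the domain under consideration. Examples of data science include deriving customer sentiment from call records and building purchase recommendation systems from sales records.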

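An integrated circuit or monolithic integrated circuit (also referred to as an IC, a chip, or a microchip) is a set of electronic circuits on one small flat piece (or "chip") of semiconductor material, usually silicon. Many tiny MOSFETs (metal–oxide–semiconductor field-effect transistors) are integrated into a small chip. This results in circuits that are orders of magnitude smaller, faster, and less expensive than those constructed of discrete electronic components. The IC's mass-production capability, reliability, and building-block approach to circuit design have ensured the rapid adoption of standardized ICs in place of designs using discrete transistors. ICs are now used in virtually all electronic equipment and have revolutionized the world of electronics. Computers, mobile phones, and other home appliances are now inextricable parts of the structure of modern societies, made possible by the small size and low cost of ICs such as modern computer processors and microcontrollers.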

Very-large-scale integration was made practical by technological advancements in metal–oxide–silicon (MOS) semiconductor device fabrication. Since their origins in the 1960s, the size, speed, and capacity of chips have progressed enormously, driven by technical advances that fit more and more MOS transistors on chips of the same size – a modern chip may have many billions of MOS transistors in an area the size of a human fingernail. These advances, roughly following Moore's law, make today's computer chips possess millions of times the capacity and thousands of times the speed of the computer chips of the early 1970s.

ICs have two main advantages over discrete circuits: cost and performance. The cost is low because the chips, with all their components, are printed as a unit by photolithography rather than being constructed one transistor at a time. Furthermore, packaged ICs use much less material than discrete circuits. Performance is high because the IC's components switch quickly and consume comparatively little power because of their small size and proximity. The main disadvantage of ICs is the high cost of designing them and fabricating the required photomasks. This high initial cost means ICs are only commercially viable when high production volumes are anticipated.

Modern electronic component distributors often further sub-categorize integrated circuits:

Object-oriented programming (OOP) is a fundamental programming paradigm based on the concept of objects, which bundle data together with the code that operates on it.

It is a widely used way of structuring code that aims for better readability and reusability.

Read more about these concepts of OOP here

In computer science, functional programming is a programming paradigm where programs are constructed by applying and composing functions. It is a declarative programming paradigm in which function definitions are trees of expressions that map values to other values, rather than a sequence of imperative statements which update the running state of the program.

In functional programming, functions are treated as first-class citizens, meaning that they can be bound to names (including local identifiers), passed as arguments, and returned from other functions, just as any other data type can. This allows programs to be written in a declarative and composable style, where small functions are combined in a modular manner.

Functional programming is sometimes treated as synonymous with purely functional programming, a subset of functional programming which treats all functions as deterministic mathematical functions, or pure functions. When a pure function is called with some given arguments, it will always return the same result, and cannot be affected by any mutable state or other side effects. This is in contrast with impure procedures, common in imperative programming, which can have side effects (such as modifying the program's state or taking input from a user). Proponents of purely functional programming claim that by restricting side effects, programs can have fewer bugs, be easier to debug and test, and be more suited to formal verification procedures.

Functional programming has its roots in academia, evolving from the lambda calculus, a formal system of computation based only on functions. Functional programming has historically been less popular than imperative programming, but many functional languages are seeing use today in industry and education.

Some examples of functional programming languages are:

Functional programming is derived historically from the lambda calculus, a framework developed by Alonzo Church to study computation with functions. It is often called "the smallest programming language in the world." It provides a definition of what is computable and what is not. It is equivalent to a Turing machine in computational ability: anything that can be computed by the lambda calculus can be computed by a Turing machine, and vice versa. It provides a theoretical framework for describing functions and their evaluation.

Some essential concepts of functional programming are:

Pure functions: These functions have two main properties. First, they always produce the same output for the same arguments, irrespective of anything else. Second, they have no side effects, i.e. they do not modify any arguments, local or global variables, or input/output streams. The latter property is called immutability. A pure function's only result is the value it returns, and it is deterministic. Programs written in a functional style are easier to debug because pure functions have no side effects or hidden I/O. Pure functions also make it easier to write parallel and concurrent applications. When code is written in this style, a smart compiler can do many things: it can parallelize the instructions, wait to evaluate results until they are needed, and memoize the results, since the results never change as long as the input doesn't change. Here is a simple example of a pure function in Python:

```python
def add(x, y):    # add is a function taking x and y as arguments
    return x + y  # returns x + y without changing either value
```

Recursion: There are no "for" or "while" loops in pure functional programming languages. Iteration is implemented through recursion. Recursive functions repeatedly call themselves until a base case is reached. Here is a simple example of a recursive function in C:

```c
/* Uses the convention fib(0) = fib(1) = 1. */
int fib(int n) {
    if (n <= 1)
        return 1;  /* base case */
    else
        return fib(n - 1) + fib(n - 2);
}
```

Referential transparency: In functional programs, variables once defined do not change their value throughout the program. Functional programs do not have assignment statements. If we have to store some value, we define a new variable instead. This eliminates any chance of side effects, because any variable can be replaced with its actual value at any point of the execution. The state of any variable is constant at any instant. Example:

```python
x = x + 1  # this changes the value assigned to the variable x
# Therefore, the expression is NOT referentially transparent
```

Functions are first-class and can be higher-order: First-class functions are treated like any other first-class value. They can be passed to functions as parameters, returned from functions, or stored in data structures.

A combination of function applications may be defined using a LISP form called funcall, which takes as arguments a function and a series of arguments and applies that function to those arguments:

```lisp
(defun filter (list-of-elements test)
  (cond ((null list-of-elements) nil)
        ((funcall test (car list-of-elements))
         (cons (car list-of-elements)
               (filter (cdr list-of-elements)
                       test)))
        (t (filter (cdr list-of-elements)
                   test))))
```

The function filter applies the test to the first element of the list. If the test returns non-nil, it conses the element onto the result of filter applied to the cdr of the list; otherwise, it just returns the filtered cdr. This function may be used with different predicates passed in as parameters to perform a variety of filtering tasks:

```lisp
> (filter '(1 3 -9 5 -2 -7 6) #'plusp)   ; filter out all negative numbers
; output: (1 3 5 6)

> (filter '(1 2 3 4 5 6 7 8 9) #'evenp)  ; filter out all odd numbers
; output: (2 4 6 8)
```

and so on.
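For readers more familiar with Python, the same recursive filter can be sketched as follows. The name `filter_list` is an assumption, chosen to avoid shadowing Python's built-in `filter`, which already provides this behavior.

```python
def filter_list(elements, test):
    """Return the elements for which test(element) is true, recursively."""
    if not elements:                 # base case: empty list
        return []
    first, rest = elements[0], elements[1:]
    if test(first):
        # Keep the first element and filter the remainder.
        return [first] + filter_list(rest, test)
    return filter_list(rest, test)   # drop the first element


if __name__ == "__main__":
    print(filter_list([1, 3, -9, 5, -2, -7, 6], lambda n: n > 0))            # [1, 3, 5, 6]
    print(filter_list([1, 2, 3, 4, 5, 6, 7, 8, 9], lambda n: n % 2 == 0))    # [2, 4, 6, 8]
```

Passing the predicate as an argument is what makes `filter_list` a higher-order function.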

Variables are immutable : In functional programming, we can't modify a variable after it's been initialized. We can create new variables- but we can't modify existing variables, and this really helps to maintain the state throughout the runtime of a program. Once we create a variable and set its value, we can have full confidence knowing that the value of that variable will never change.
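Python does not enforce immutability by default, but the idea can be sketched with a frozen dataclass. The `Account` class and `deposit` function below are hypothetical examples.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Account:
    """An immutable record: fields cannot be reassigned after creation."""
    owner: str
    balance: int


def deposit(account: Account, amount: int) -> Account:
    """Return a new Account instead of modifying the existing one."""
    return replace(account, balance=account.balance + amount)


if __name__ == "__main__":
    original = Account("Ada", 100)
    updated = deposit(original, 50)
    print(original.balance, updated.balance)  # 100 150

    try:
        original.balance = 0  # attempting to mutate raises an error
    except FrozenInstanceError:
        print("cannot modify a frozen instance")
```

Because `original` can never change, any code holding a reference to it can rely on its value for the rest of the program.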

An operating system (or OS for short) acts as an intermediary between a computer user and computer hardware. The purpose of an operating system is to provide an environment in which a user can execute programs conveniently and efficiently. An operating system is software that manages computer hardware. The hardware must provide appropriate mechanisms to ensure the correct operation of the computer system and to prevent user programs from interfering with the proper operation of the system. An even more common definition is that the operating system is the one program running at all times on the computer (usually called the kernel), with all else being application programs.

Operating systems can be viewed from two viewpoints: resource managers and extended machines. In the resource-manager view, the operating system's job is to manage the different parts of the system efficiently. In the extended-machine view, the job of the system is to provide the users with abstractions that are more convenient to use than the actual machine. These include processes, address spaces, and files. Operating systems have a long history, from when they replaced the operator to modern multiprogramming systems. Highlights include early batch systems, multiprogramming systems, and personal computer systems. Since operating systems interact closely with the hardware, some knowledge of computer hardware is useful for understanding them. Computers are built up of processors, memories, and I/O devices. These parts are connected by buses. The basic concepts on which all operating systems are built are processes, memory management, I/O management, the file system, and security. The heart of any operating system is the set of system calls that it can handle. These tell what the operating system does.

The operating system manages all the pieces of a complex system. Modern computers consist of processors, memories, timers, disks, mice, network interfaces, printers, and a wide variety of other devices. In the bottom-up view, the job of the operating system is to provide for an orderly and controlled allocation of the processors, memories, and I/O devices among the various programs wanting them. Modern operating systems allow multiple programs to be in memory and run simultaneously. Imagine what would happen if three programs running on some computer all tried to print their output simultaneously on the same printer. The result would be utter chaos. The operating system can bring order to the potential chaos by buffering all the output destined for the printer on the disk. When one program is finished, the operating system can then copy its output from the disk file where it has been stored to the printer, while at the same time the other programs can continue generating more output, oblivious to the fact that the output is not going to the printer (yet). When a computer (or network) has more than one user, the need to manage and protect the memory, I/O devices, and other resources is even greater, since the users might otherwise interfere with one another. In addition, users often need to share not only hardware but also information (files, databases, etc.). In short, this view of the operating system holds that its primary task is to keep track of which programs are using which resource, to grant resource requests, to account for usage, and to mediate conflicting requests from different programs and users.

The architecture of most computers at the machine-language level is primitive and awkward to program, especially for input/output. To make this point more concrete, consider the modern SATA (Serial ATA) hard disks used on most computers. The interface that a programmer would have to know to use the disk was already complex in 2007, and it has since been revised multiple times and become more complicated. No sane programmer would want to deal with this disk at the hardware level. Instead, a piece of software called a disk driver deals with the hardware and provides an interface to read and write disk blocks, without getting into the details. Operating systems contain many drivers for controlling I/O devices. But even this level is much too low for most applications. For this reason, all operating systems provide yet another layer of abstraction for using disks: files. Using this abstraction, programs can create, write, and read files without dealing with the messy details of how the hardware works. This abstraction is the key to managing all this complexity. Good abstractions turn a nearly impossible task into two manageable ones. The first is defining and implementing the abstractions. The second is using these abstractions to solve the problem at hand.

First Generation (1945-55) : Little progress was achieved in building digital computers after Babbage's disastrous efforts until the World War II era. At Iowa State University, Professor John Atanasoff and his graduate student Clifford Berry created what is today recognized as the first operational digital computer. Konrad Zuse in Berlin constructed the Z3 computer using electromechanical relays around the same time. The Mark I was created by Howard Aiken at Harvard, the Colossus by a team of scientists at Bletchley Park in England, and the ENIAC by John Mauchly and his doctoral student J. Presper Eckert at the University of Pennsylvania in 1944.

Second Generation (1955-65) : The introduction of the transistor in the middle of the 1950s drastically altered the situation. Computers became dependable enough that they could be manufactured and sold to paying customers with the expectation that they would keep working long enough to get some useful work done. Mainframes, as these machines are now known, were kept locked up in huge, specially air-conditioned computer rooms, with teams of qualified operators to manage them. Only huge businesses, significant government entities, or institutions could afford the price tag of several million dollars.

Third Generation (1965-80) : In comparison to second-generation computers, which were constructed from individual transistors, the IBM 360 was the first major computer line to employ (small-scale) ICs (Integrated Circuits). As a result, it offered a significant price/performance benefit. It was an instant hit, and all the other big manufacturers quickly embraced the concept of a family of interoperable computers. All software, including the OS/360 operating system, was supposed to be compatible with all models in the original design. It had to run on massive systems, which frequently replaced 7094s for heavy computation and weather forecasting, and tiny systems, which frequently merely replaced 1401s for transferring cards to tape. Both systems with few peripherals and systems with many peripherals needed to function well with it. It had to function both in professional and academic settings. Above all, it had to be effective for each of these many applications.

Fourth Generation (1980-Present) : The personal computer era began with the creation of LSI (Large Scale Integration) circuits, processors with thousands of transistors on a square centimeter of silicon. Although personal computers, originally known as microcomputers, did not change significantly in architecture from minicomputers of the PDP-11 class, they did differ significantly in price.

Fifth Generation (1990-Present) : People have yearned for a portable communication gadget ever since detective Dick Tracy in the 1940s comic strip began conversing with his "two-way radio wristwatch." In 1946, a real mobile phone made its debut, and it weighed about 40 kilograms. The first real portable phone debuted in the 1970s and was incredibly lightweight at about one kilogram. It was jokingly referred to as "the brick." Soon, everyone was clamoring for one.

Mainframe OS : At the high end are the operating systems for mainframes, those room-sized computers still found in major corporate data centres. These computers differ from personal computers in terms of their I/O capacity. A mainframe with 1000 disks and millions of gigabytes of data is not unusual; a personal computer with these specifications would be the envy of its friends. Mainframes are also making something of a comeback as high-end Web servers, servers for large-scale electronic commerce sites, and servers for business-to-business transactions. The operating systems for mainframes are heavily oriented toward processing many jobs at once, most of which need prodigious amounts of I/O. They typically offer three kinds of services: batch, transaction processing, and timesharing.

Server OS : One level down is the server operating systems. They run on servers, which are either very large personal computers, workstations, or even mainframes. They serve multiple users at once over a network and allow the users to share hardware and software resources. Servers can provide print service, file service, or Web service. Internet providers run many server machines to support their customers, and Websites use servers to store Web pages and handle incoming requests. Typical server operating systems are Solaris, FreeBSD, Linux, and Windows Server 201x.

Multiprocessor OS : An increasingly common way to get major-league computing power is to connect multiple CPUs into a single system. Depending on precisely how they are connected and what is shared, these systems are called parallel computers, multi-computers, or multiprocessors. They need special operating systems, but often these are variations on the server operating systems, with special features for communication, connectivity, and consistency.

Personal Computer OS : The next category is the personal computer operating system. Modern ones all support multiprogramming, often with dozens of programs started up at boot time. Their job is to provide good support to a single user. They are widely used for word processing, spreadsheets, games, and Internet access. Common examples are Linux, FreeBSD, Windows 7, Windows 8, and Apple's OS X. Personal computer operating systems are so widely known that probably little introduction is needed. Many people are not even aware that other kinds exist.

Embedded OS : Embedded systems run on computers that control devices that are not generally considered computers and do not accept user-installed software. Typical examples are microwave ovens, TV sets, cars, DVD recorders, traditional phones, and MP3 players. The main property distinguishing embedded systems from handhelds is the certainty that no untrusted software will ever run on them. You cannot download new applications to your microwave oven; all the software is in ROM. This means there is no need for protection between applications, which simplifies the design. Systems such as Embedded Linux, QNX, and VxWorks are popular in this domain.

Smart Card OS : The smallest operating systems run on credit-card-sized smart card devices with CPU chips. They have very severe processing power and memory constraints. Some are powered by contacts in the reader into which they are inserted, but contactless smart cards are inductively powered, greatly limiting what they can do. Some can handle only a single function, such as electronic payments, but others can handle multiple functions. Often these are proprietary systems. Some smart cards are Java oriented. This means that the ROM on the smart card holds an interpreter for the Java Virtual Machine (JVM). Java applets (small programs) are downloaded to the card and are interpreted by the JVM interpreter. Some of these cards can handle multiple Java applets at the same time, leading to multiprogramming and the need to schedule them. Resource management and protection also become an issue when two or more applets are present simultaneously. These issues must be handled by the (usually extremely primitive) operating system present on the card.

The term memory refers to the component within your computer that allows short-term data access. You may recognize this component as DRAM or dynamic random-access memory. Your computer performs many operations by accessing data stored in its short-term memory. Some examples of such operations include editing a document, loading applications, and browsing the Internet. The speed and performance of your system depend on the amount of memory that is installed on your computer.

If you have a desk and a filing cabinet, the desk represents your computer's memory. Items you need to use immediately are kept on your desk for easy access. However, not much can be stored on a desk due to its size limitations.

Whereas memory refers to the location of short-term data, storage is the component within your computer that allows you to store and access data long-term. Usually, storage comes in the form of a solid-state drive or a hard drive. Storage houses your applications, operating system, and files indefinitely. Computers need to read and write information from the storage system, so the storage speed determines how fast your system can boot up, load, and access what you've saved.

While the desk represents the computer's memory, the filing cabinet represents your computer's storage. It holds items that need to be saved and stored but are not necessarily needed for immediate access. The size of the filing cabinet means that it can hold many things.

An important distinction between memory and storage is that memory clears when the computer is turned off. On the other hand, storage remains intact no matter how often you shut off your computer. Therefore, in the desk and filing cabinet analogy, any files left on your desk will be thrown away when you leave the office. Everything in your filing cabinet will remain.

At the heart of computer systems lies memory, the space where programs run and data is stored. But what happens when the programs you're running and the data you're working with exceed the physical capacity of your computer's memory? This is where virtual memory steps in, acting as a smart extension to your computer's memory and enhancing its capabilities.

Definition and Purpose of Virtual Memory:

Virtual memory is a memory management technique employed by operating systems to overcome the limitations of physical memory (RAM). It creates an illusion for software applications that they have access to a larger amount of memory than what is physically installed on the computer. In essence, it enables programs to utilize memory space beyond the confines of the computer's physical RAM.

The primary purpose of virtual memory is to enable efficient multitasking and the execution of larger programs, all while maintaining the responsiveness of the system. It achieves this by creating a seamless interaction between the physical RAM and secondary storage devices, like the hard drive or SSD.

How Virtual Memory Extends Available Physical Memory:

Think of virtual memory as a bridge that connects your computer's RAM and its secondary storage (disk drives). When you run a program, parts of it are loaded into the faster physical memory (RAM). However, not all parts of the program may be used immediately.

Virtual memory exploits this situation by moving sections of the program that aren't actively being used from RAM to the secondary storage, creating more room in RAM for the parts that are actively in use. This process is transparent to the user and the running programs. When the moved parts are needed again, they are swapped back into RAM, while other less active parts may be moved to the secondary storage.

This dynamic swapping of data in and out of physical memory is managed by the operating system. It allows programs to run even if they're larger than the available RAM, as the operating system intelligently decides what data needs to be in RAM for optimal performance.

In summary, virtual memory acts as a virtualization layer that extends the available physical memory by temporarily transferring parts of programs and data between the RAM and secondary storage. This process ensures that the computer can handle larger tasks and numerous programs simultaneously, all while maintaining efficient performance and responsiveness.
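The swapping described above can be sketched as a small simulation. This is only an illustration under stated assumptions: the text does not say how the operating system picks which part to move out, so the sketch assumes a least-recently-used (LRU) policy, and the function name `simulate_paging` and the page numbers are hypothetical.

```python
from collections import OrderedDict

def simulate_paging(references, num_frames):
    """Count page faults for a sequence of page references, assuming LRU eviction."""
    ram = OrderedDict()   # pages resident in RAM, least recently used first
    swapped_out = []      # pages moved to secondary storage, in eviction order
    faults = 0
    for page in references:
        if page in ram:
            ram.move_to_end(page)   # hit: mark the page as most recently used
            continue
        faults += 1                 # miss: the page must be brought into RAM
        if len(ram) >= num_frames:
            victim, _ = ram.popitem(last=False)   # evict the least recently used page
            swapped_out.append(victim)
        ram[page] = True
    return faults, list(ram), swapped_out

faults, resident, evicted = simulate_paging([1, 2, 3, 1, 4, 2], num_frames=3)
print(faults, resident, evicted)   # 5 [1, 4, 2] [2, 3]
```

With three frames, the fourth distinct page forces page 2 out, and touching page 2 again later brings it back at the cost of evicting page 3. Real operating systems use more elaborate policies and work with hardware support, so treat this as a model of the idea rather than of any particular system.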

In computing, a file system or filesystem (often abbreviated to fs) is a method and data structure the operating system uses to control how data is stored and retrieved. Without a file system, data placed in a storage medium would be one large body of data with no way to tell where one piece of data stopped and the next began or where any piece of data was located when it was time to retrieve it. By separating the data into pieces and giving each piece a name, the data is easily isolated and identified. Taking its name from how a paper-based data management system is named, each data group is called a "file". The structure and logic rules used to manage the groups of data and their names are called a "file system."

There are many kinds of file systems, each with unique structure and logic, properties of speed, flexibility, security, size, and more. Some file systems have been designed to be used for specific applications. For example, the ISO 9660 file system is designed specifically for optical discs.

File systems can be used on many types of storage devices using various media. As of 2019, hard disk drives have been key storage devices and are projected to remain so for the foreseeable future. Other kinds of media that are used include SSDs, magnetic tapes, and optical discs. In some cases, such as with tmpfs, the computer's main memory (random-access memory, RAM) creates a temporary file system for short-term use.

Some file systems are used on local data storage devices; others provide file access via a network protocol (for example, NFS, SMB, or 9P clients). Some file systems are "virtual", meaning that the supplied "files" (called virtual files) are computed on request (such as procfs and sysfs) or are merely a mapping into a different file system used as a backing store. The file system manages access to both the content of files and the metadata about those files. It is responsible for arranging storage space; reliability, efficiency, and tuning with regard to the physical storage medium are important design considerations.

A file system stores and organizes data and can be thought of as a type of index for all the data contained in a storage device. These devices can include hard drives, optical drives, and flash drives.

File systems specify conventions for naming files, including the maximum number of characters in a name, which characters can be used, and, in some systems, how long the file name suffix can be. In many file systems, file names are not case-sensitive.

Along with the file's contents, a file system stores metadata about each file, such as its size, attributes, location, and position in the directory hierarchy. Metadata can also identify free blocks of available storage on the drive and how much space is available.
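As a rough illustration of that metadata, the following sketch reads a few fields through Python's standard library. The helper `describe_file` and the file name `notes.txt` are made up for the example; `os.stat` and `shutil.disk_usage` are standard-library calls that ask the operating system for what the file system has recorded.

```python
import os
import shutil
import tempfile
from pathlib import Path

def describe_file(path):
    """Return a few metadata fields the file system keeps for a file."""
    info = os.stat(path)
    return {
        "name": Path(path).name,
        "parent_directory": str(Path(path).parent),
        "size_bytes": info.st_size,
        "modified_timestamp": info.st_mtime,
        "is_directory": Path(path).is_dir(),
    }

with tempfile.TemporaryDirectory() as folder:
    sample = Path(folder) / "notes.txt"   # hypothetical file name
    sample.write_text("hello")            # 5 bytes of content
    details = describe_file(sample)
    free_bytes = shutil.disk_usage(folder).free   # free space on that volume

print(details["name"], details["size_bytes"], free_bytes > 0)
```

The exact set of attributes available depends on the file system and the operating system, so the fields shown here are a small common subset.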

A file system also includes a format to specify the path to a file through the structure of directories. A file is placed in a directory -- or a folder in Windows OS -- or subdirectory at the desired place in the tree structure. PC and mobile OSes have file systems in which files are placed in a hierarchical tree structure.

Before files and directories are created on the storage medium, partitions should be put into place. A partition is a region of the hard disk or other storage that the OS manages separately. One file system is contained in the primary partition, and some OSes allow for multiple partitions on one disk. In this situation, if one file system gets corrupted, the data in a different partition will be safe.

There are several types of file systems, all with different logical structures and properties, such as speed and size. The type of file system can differ by OS and the needs of that OS. Microsoft Windows, Mac OS X, and Linux are the three most common PC operating systems. Mobile OSes include Apple iOS and Google Android.

Major file systems include the following:

File allocation table (FAT) is supported by Microsoft Windows OS. FAT is considered simple and reliable and modeled after legacy file systems. FAT was designed in 1977 for floppy disks but was later adapted for hard disks. While efficient and compatible with most current OSes, FAT cannot match the performance and scalability of more modern file systems.

Global file system (GFS) is a file system for the Linux OS, and it is a shared disk file system. GFS offers direct access to shared block storage and can be used as a local file system.

GFS2 is an updated version with features not included in the original GFS, such as an updated metadata system. Under the GNU General Public License terms, both the GFS and GFS2 file systems are available as free software.

Hierarchical file system (HFS) was developed for use with Mac operating systems. HFS can also be called Mac OS Standard, succeeded by Mac OS Extended. Originally introduced in 1985 for floppy and hard disks, HFS replaced the original Macintosh file system. It can also be used on CD-ROMs.

The NT file system -- also known as the New Technology File System (NTFS) -- is the default file system for Windows products from Windows NT 3.1 OS onward. Improvements from the previous FAT file system include better metadata support, performance, and use of disk space. NTFS is also supported in the Linux OS through a free, open-source NTFS driver. Mac OSes have read-only support for NTFS.

Universal Disk Format (UDF) is a vendor-neutral file system for optical media and DVDs. UDF replaces the ISO 9660 file system and is the official file system for DVD video and audio, as chosen by the DVD Forum.

Cloud computing is a type of Internet-based computing that provides shared computer processing resources and data to computers and other devices on demand. It also allows authorized users and systems to access applications and data from any location with an internet connection.

It is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.

Cloud computing is a big shift from how businesses think about IT resources. Here are seven common reasons organizations are turning to cloud computing services:

Cloud computing eliminates the capital expense of buying hardware and software and setting up and running on-site data centers—the racks of servers, the round-the-clock electricity for power and cooling, and the IT experts for managing the infrastructure. It adds up fast.

Most cloud computing services are provided self-service and on demand, so even vast amounts of computing resources can be provisioned in minutes, typically with just a few mouse clicks, giving businesses a lot of flexibility and taking the pressure off capacity planning.

The benefits of cloud computing services include the ability to scale elastically. In cloud speak, that means delivering the right amount of IT resources—for example, more or less computing power, storage, and bandwidth—right when it is needed and from the right geographic location.

On-site data centers typically require a lot of "racking and stacking"—hardware setup, software patching, and other time-consuming IT management chores. Cloud computing removes the need for many of these tasks, so IT teams can spend time on achieving more important business goals.

The biggest cloud computing services run on a worldwide network of secure data centers, which are regularly upgraded to the latest generation of fast and efficient computing hardware. This offers several benefits over a single corporate data center, including reduced network latency for applications and greater economies of scale.

Cloud computing makes data backup, disaster recovery, and business continuity easier and less expensive because data can be mirrored at multiple redundant sites on the cloud provider's network.

Many cloud providers offer a broad set of policies, technologies, and controls that strengthen your security posture overall, helping protect your data, apps, and infrastructure from potential threats.

Machine learning is the practice of teaching a computer to learn. The concept uses pattern recognition, as well as other forms of predictive algorithms, to make judgments on incoming data. This field is closely related to artificial intelligence and computational statistics.

In supervised learning, machine learning models are trained with labelled data sets, which allow the models to learn and grow more accurate over time. For example, an algorithm would be trained with pictures of dogs and other things, all labelled by humans, and the machine would learn ways to identify pictures of dogs on its own. Supervised machine learning is the most common type used today.
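To make the idea of learning from labelled examples concrete, here is a minimal sketch of one very simple supervised method, a nearest-neighbor classifier. The technique is chosen for brevity and is not named in the text; the two numeric features and all values are invented stand-ins for what would really be image data.

```python
def nearest_neighbor_predict(training_data, features):
    """Return the label of the labelled example closest to `features`."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, closest_label = min(
        training_data,
        key=lambda example: squared_distance(example[0], features),
    )
    return closest_label

# (weight in kg, height in cm) -> human-provided label; made-up values
labelled_examples = [
    ((30.0, 60.0), "dog"),
    ((25.0, 55.0), "dog"),
    ((4.0, 25.0), "not dog"),
    ((5.0, 23.0), "not dog"),
]

print(nearest_neighbor_predict(labelled_examples, (28.0, 58.0)))  # dog
print(nearest_neighbor_predict(labelled_examples, (4.5, 24.0)))   # not dog
```

The labels supplied by humans are the only source of "knowledge" here: a new input simply receives the label of the most similar example seen during training.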

Practical applications of Supervised Learning –

In Unsupervised machine learning, a program looks for patterns in unlabeled data. Unsupervised machine learning can find patterns or trends that people aren't explicitly looking for. For example, an unsupervised machine learning program could look through online sales data and identify different types of clients making purchases.
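The sales example can be sketched with a basic clustering loop. This is an assumption-laden illustration: it uses a two-cluster k-means on a single made-up feature (order total), with a hypothetical function name `two_means`, and nothing in it tells the program what the groups mean.

```python
def two_means(values, iterations=10):
    """Split 1-D values into two clusters with a basic k-means loop."""
    centers = [min(values), max(values)]   # simple deterministic starting points
    groups = ([], [])
    for _ in range(iterations):
        groups = ([], [])
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # move each center to the mean of its group (keep it if the group is empty)
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

order_totals = [12, 15, 14, 210, 190, 205]   # made-up purchase amounts
centers, groups = two_means(order_totals)
print(groups)   # ([12, 15, 14], [210, 190, 205])
```

No labels were provided, yet the program separates small orders from large ones, which is the kind of pattern a person might not have asked for explicitly.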

Practical applications of unsupervised Learning

The disadvantage of supervised learning is that it requires hand-labelling by ML specialists or data scientists, which is costly. Unsupervised learning, in turn, has a limited range of applications. To overcome these drawbacks, the concept of semi-supervised learning was introduced. Typically, the training data combines a very small amount of labelled data with a large amount of unlabelled data. The basic procedure is that the programmer first clusters similar data using an unsupervised learning algorithm and then uses the existing labelled data to label the rest of the unlabelled data.

Practical applications of Semi-Supervised Learning –

Reinforcement learning trains machines through trial and error to take the best action by establishing a reward system. It can train models to play games or train autonomous vehicles to drive by telling the machine when it made the right decisions, which helps it learn over time what actions it should take.
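A reward system of this kind can be sketched with tabular Q-learning, one common reinforcement learning method (the text does not name a specific algorithm). Everything here is an assumption made for illustration: a five-cell corridor, a reward of 1 for reaching the last cell, and the chosen learning rate and discount.

```python
import random

def train_corridor_agent(episodes=500, seed=0):
    """Tabular Q-learning on a 5-cell corridor; reward 1 for reaching the last cell."""
    rng = random.Random(seed)
    n_states, goal = 5, 4
    alpha, gamma = 0.5, 0.9   # learning rate and discount factor (assumed values)
    q = [[0.0, 0.0] for _ in range(n_states)]   # q[state][action]; 0 = left, 1 = right
    for _ in range(episodes):
        state = 0
        for _ in range(200):                    # cap on steps per episode
            action = rng.randrange(2)           # trial and error: try a random action
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            future = 0.0 if next_state == goal else max(q[next_state])
            # nudge the estimate toward reward plus discounted future value
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
            if state == goal:
                break
    return q

q_table = train_corridor_agent()
policy = ["left" if left > right else "right" for left, right in q_table[:4]]
print(policy)
```

The agent is never told that moving right is correct. It only receives a reward when it reaches the goal, and the learned values end up favoring "right" in every cell because that action leads to the reward sooner.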

Practical applications of Reinforcement Learning -

Natural language processing is a field of machine learning in which machines learn to understand natural language as spoken and written by humans instead of the data and numbers normally used to program computers. This allows machines to recognize the language, understand it, and respond to it, as well as create new text and translate between languages. Natural language processing enables familiar technology like chatbots and digital assistants like Siri or Alexa.

Practical applications of NLP:

Neural networks are a commonly used, specific class of machine learning algorithms. Artificial neural networks are modelled on the human brain, in which thousands or millions of processing nodes are interconnected and organized into layers.

In an artificial neural network, cells, or nodes, are connected, with each cell processing inputs and producing an output that is sent to other neurons. Labeled data moves through the nodes or cells, with each cell performing a different function. In a neural network trained to identify whether a picture contains a cat or not, the different nodes would assess the information and arrive at an output that indicates whether a picture features a cat.
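The flow of data through connected nodes can be sketched as a forward pass. This is a minimal illustration, not a trained model: the weights and biases are made-up numbers, the sigmoid activation is an assumed choice, and the function names are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def node_output(inputs, weights, bias):
    """One node: weighted sum of its inputs passed through an activation."""
    return sigmoid(sum(i * w for i, w in zip(inputs, weights)) + bias)

def forward(inputs, hidden_layer, output_node):
    """Feed inputs through one hidden layer, then a single output node."""
    hidden = [node_output(inputs, weights, bias) for weights, bias in hidden_layer]
    weights, bias = output_node
    return node_output(hidden, weights, bias)

hidden_layer = [([0.5, -0.2], 0.1), ([-0.3, 0.8], 0.0)]   # (weights, bias) per node
output_node = ([1.0, -1.0], 0.0)

score = forward([1.0, 0.0], hidden_layer, output_node)
print(round(score, 3))   # a value between 0 and 1, read as "how cat-like"
```

In a real network the weights are not chosen by hand; training adjusts them so that the output matches the labels on the training data.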

Practical applications of Neural Networks:

Deep learning networks are advanced neural networks with multiple layers. These layered networks can process vast amounts of data and adjust the "weights" of each connection within the network. For example, in an image recognition system, certain layers of the neural network might detect individual facial features like eyes, nose, or mouth. Another layer would then analyze whether these features are arranged in a way that identifies a face.

Practical applications of Deep Learning:

Web Technology refers to the various tools and techniques that are utilized in the process of communication between different types of devices over the Internet. A web browser is used to access web pages. Web browsers can be defined as programs that display text, data, pictures, animation, and video on the Internet. Hyperlinked resources on the World Wide Web can be accessed using software interfaces provided by Web browsers.

The part of a website where the user interacts directly is termed the front end. It is also referred to as the 'client side' of the application.

The backend is the server side of a website. It is the part of the website that users cannot see or interact with directly. It is used to store and arrange data.

A computer network is a set of computers sharing resources located on or provided by network nodes. Computers use common communication protocols over digital interconnections to communicate with each other. These interconnections are made up of telecommunication network technologies based on physically wired, optical, and wireless radio-frequency methods that may be arranged in a variety of network topologies.

The nodes of a computer network can include personal computers, servers, networking hardware, or other specialized or general-purpose hosts. They are identified by network addresses and may have hostnames. Hostnames serve as memorable labels for the nodes, rarely changed after the initial assignment. Network addresses serve for locating and identifying the nodes by communication protocols such as the Internet Protocol.

Computer networks may be classified by many criteria, including the transmission medium used to carry signals, bandwidth, communications protocols to organize network traffic, the network size, the topology, traffic control mechanism, and organizational intent.

There are two primary types of computer networking:

OSI stands for Open Systems Interconnection. It was developed by ISO, the International Organization for Standardization, in 1984. It is a 7-layer architecture in which each layer has specific functionality to perform. All seven layers work collaboratively to transmit data from one person to another across the globe.

The lowest layer of the OSI reference model is the physical layer. It is responsible for the actual physical connection between the devices. The physical layer contains information in the form of bits. It is responsible for transmitting individual bits from one node to the next. When receiving data, this layer will get the signal received and convert it into 0s and 1s and send them to the Data Link layer, which will put the frame back together.

The functions of the physical layer are as follows:

The data link layer (DLL) is responsible for the node-to-node delivery of the message. The main function of this layer is to make sure data transfer is error-free from one node to another over the physical layer. When a packet arrives in a network, it is the responsibility of the DLL to transmit it to the host using its MAC address.

The Data Link Layer is divided into two sublayers:

The packet received from the Network layer is further divided into frames depending on the frame size of the NIC (Network Interface Card). The DLL also encapsulates the sender's and receiver's MAC addresses in the header.

The receiver's MAC address is obtained by placing an ARP (Address Resolution Protocol) request onto the wire asking, "Who has that IP address?", and the destination host replies with its MAC address.

The functions of the Data Link layer are:

The network layer handles the transmission of data from one host to another located in a different network. It also takes care of packet routing, i.e., the selection of the shortest path for the packet from the routes available. The sender's and receiver's IP addresses are placed in the header by the network layer.
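Selecting a shortest path can be sketched with a breadth-first search that minimizes the number of hops. This is a simplification under stated assumptions: the five-router topology and the function name `fewest_hops_route` are hypothetical, and real routing protocols generally weigh more than hop count.

```python
from collections import deque

def fewest_hops_route(links, source, destination):
    """Breadth-first search for a route with the fewest hops, or None if unreachable."""
    previous = {source: None}   # remembers how each node was first reached
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == destination:
            route = []
            while node is not None:   # walk back to the source
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbor in links.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None

# Hypothetical topology: each router lists its directly connected neighbors.
links = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D", "E"],
    "D": ["B", "C", "E"],
    "E": ["C", "D"],
}

print(fewest_hops_route(links, "A", "E"))   # ['A', 'C', 'E']
```

Of the available routes from A to E, the search returns the two-hop route through C rather than the three-hop route through B and D.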

The functions of the Network layer are:

The Internet is a global system of interconnected computer networks that use the standard Internet protocol suite (TCP/IP) to serve billions of users worldwide. It is a network of networks that consists of millions of private, public, academic, business, and government networks of local to global scope that is linked by a broad array of electronic, wireless, and optical networking technologies. The Internet carries an extensive range of information resources and services, such as the interlinked hypertext documents and applications of the World Wide Web (WWW) and the infrastructure to support email.

The World Wide Web (WWW) is an information space where documents and other web resources are identified by Uniform Resource Locators (URLs), interlinked by hypertext links, and accessible via the Internet. English scientist Tim Berners-Lee invented the World Wide Web in 1989. He wrote the first web browser in 1990 while employed at CERN in Switzerland. The browser was released outside CERN in 1991, first to other research institutions starting in January 1991 and to the general public on the Internet in August 1991.

The Internet Protocol (IP) is a protocol, or set of rules, for routing and addressing packets of data so that they can travel across networks and arrive at the correct destination. Data traversing the Internet is divided into smaller pieces called packets.
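Dividing data into addressed packets can be sketched as follows. The packet layout here is a hypothetical dictionary, not the real IP header, and the sequence number is an addition for the example: IP itself does not guarantee ordering, and putting data back in order is usually the job of a higher-level protocol such as TCP. The addresses come from ranges reserved for documentation.

```python
import ipaddress
import random

def to_packets(data, source, destination, payload_size=4):
    """Split `data` into small packets tagged with addresses and a sequence number."""
    src = ipaddress.ip_address(source)         # raises ValueError on a malformed address
    dst = ipaddress.ip_address(destination)
    return [
        {"src": str(src), "dst": str(dst), "seq": n, "payload": data[i:i + payload_size]}
        for n, i in enumerate(range(0, len(data), payload_size))
    ]

def reassemble(packets):
    """Put payloads back together in sequence order."""
    return b"".join(p["payload"] for p in sorted(packets, key=lambda p: p["seq"]))

packets = to_packets(b"hello, world", "192.0.2.1", "198.51.100.7")
random.Random(1).shuffle(packets)   # packets may arrive in a different order
print(len(packets), reassemble(packets))   # 3 b'hello, world'
```

Each packet carries the destination address on its own, which is what lets the pieces travel independently and still reach the same host.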

A database is a collection of related data that represents some aspect of the real world. A database system is designed to be built and populated with data for a certain task.

Database Management System (DBMS) is software for storing and retrieving users' data while considering appropriate security measures. It consists of a group of programs that manipulate the database. The DBMS accepts the request for data from an application and instructs the operating system to provide the specific data. In large systems, a DBMS helps users and other third-party software store and retrieve data.

DBMS allows users to create their databases as per their requirements. The term "DBMS" includes the use of a database and other application programs. It provides an interface between the data and the software application.
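As a small illustration of an application talking to a DBMS rather than to raw files, the sketch below uses SQLite through Python's standard `sqlite3` module. The table name `student`, its columns, and the rows are made up for the example.

```python
import sqlite3

connection = sqlite3.connect(":memory:")   # throwaway in-memory database
connection.execute(
    "CREATE TABLE student (id INTEGER PRIMARY KEY, name TEXT, major TEXT)"
)
connection.executemany(
    "INSERT INTO student (name, major) VALUES (?, ?)",
    [("Ada", "CS"), ("Grace", "Math"), ("Alan", "CS")],
)
connection.commit()

# The application states what it wants; the DBMS decides how to fetch it.
cs_students = [
    row[0]
    for row in connection.execute(
        "SELECT name FROM student WHERE major = ? ORDER BY name", ("CS",)
    )
]
print(cs_students)   # ['Ada', 'Alan']
connection.close()
```

The application never touches the storage format directly. It sends requests in SQL and receives rows back, which is the interface role described above.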

Let us see a simple example of a university database. This database maintains information concerning students, courses, and grades in a university environment. The database is organized into five files:

To define DBMS:

Here are the important landmarks from history:

Here are the characteristics and properties of a Database Management System:

Here is the list of some popular DBMS systems:

Cryptography is a technique to secure data and communication. It is a method of protecting information and communications through the use of codes so that only those for whom the information is intended can read and process it. Cryptography is used to protect data in transit, at rest, and in use. The prefix crypt means "hidden" or "secret", and the suffix graphy means "writing".

There are two types of cryptography:

Cryptocurrency is a digital currency in which encryption techniques are used to regulate the generation of units of currency and verify the transfer of funds, operating independently of a central bank. Cryptocurrencies use decentralized control as opposed to centralized digital currency and central banking systems. The decentralized control of each cryptocurrency works through distributed ledger technology, typically a blockchain, that serves as a public financial transaction database. A defining feature of a cryptocurrency, and arguably its most endearing allure, is its organic nature; it is not issued by any central authority, rendering it theoretically immune to government interference or manipulation.

In theoretical computer science and mathematics, the theory of computation is the branch that deals with what problems can be solved on a model of computation using an algorithm, how efficiently they can be solved, or to what degree (e.g., approximate solutions versus precise ones). The field is divided into three major branches: automata theory and formal languages, computability theory, and computational complexity theory, which are linked by the question: "What are the fundamental capabilities and limitations of computers?".

Automata theory is the study of abstract machines and automata, as well as the computational problems that can be solved using them. It is a theory in theoretical computer science. The word automata comes from the Greek word αὐτόματος, which means "self-acting, self-willed, self-moving". An automaton (automata in plural) is an abstract self-propelled computing device that follows a predetermined sequence of operations automatically. An automaton with a finite number of states is called a Finite Automaton (FA) or Finite-State Machine (FSM). The figure on the right illustrates a finite-state machine, which is a well-known type of automaton. This automaton consists of states (represented in the figure by circles) and transitions (represented by arrows). As the automaton sees a symbol of input, it makes a transition (or jump) to another state, according to its transition function, which takes the previous state and current input symbol as its arguments.
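A finite automaton of this kind can be sketched in a few lines. The machine below is a hypothetical example (it accepts binary strings containing an even number of 1s), and the function name `run_automaton` is made up; the transition table plays the role of the transition function described above.

```python
def run_automaton(transitions, start, accepting, symbols):
    """Run a deterministic finite automaton and report whether it accepts the input."""
    state = start
    for symbol in symbols:
        # the transition function maps (current state, input symbol) to the next state
        state = transitions[(state, symbol)]
    return state in accepting

# Hypothetical machine: accepts binary strings with an even number of 1s.
transitions = {
    ("even", "0"): "even", ("even", "1"): "odd",
    ("odd", "0"): "odd",   ("odd", "1"): "even",
}

print(run_automaton(transitions, "even", {"even"}, "1001"))   # True
print(run_automaton(transitions, "even", {"even"}, "1011"))   # False
```

The machine has only two states and no other memory, yet it decides membership for inputs of any length, which is what makes finite automata a useful model of limited computation.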

In logic, mathematics, computer science, and linguistics, a formal language consists of words whose letters are taken from an alphabet and are well-formed according to a specific set of rules.

The alphabet of a formal language consists of symbols, letters, or tokens that concatenate into strings of the language. Each string concatenated from symbols of this alphabet is called a word, and the words that belong to a particular formal language are sometimes called well-formed words or well-formed formulas. A formal language is often defined using formal grammar, such as regular grammar or context-free grammar, which consists of its formation rules.

In computer science, formal languages are used, among others, as the basis for defining the grammar of programming languages and formalized versions of subsets of natural languages in which the words of the language represent concepts that are associated with particular meanings or semantics. In computational complexity theory, decision problems are typically defined as formal languages and complexity classes are defined as the sets of formal languages that can be parsed by machines with limited computational power. In logic and the foundations of mathematics, formal languages are used to represent the syntax of axiomatic systems, and mathematical formalism is the philosophy that all mathematics can be reduced to the syntactic manipulation of formal languages in this way.

Computability theory, also known as recursion theory, is a branch of mathematical logic and computer science that began in the 1930s with the study of computable functions and Turing degrees. Since its inception, the field has expanded to encompass the study of generalized computability and definability. In these areas, computability theory intersects with proof theory and effective descriptive set theory, reflecting its broad and interdisciplinary nature.

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage and relating these classes to each other. A computational problem is a task solved by a computer. Such a problem is solvable by the mechanical application of mathematical steps, such as an algorithm.

A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity), and the number of processors (used in parallel computing). One of the roles of computational complexity theory is to determine the practical limits on what computers can and cannot do. The P versus NP problem, one of the seven Millennium Prize Problems, belongs to the field of computational complexity.

Closely related fields in theoretical computer science are the analysis of algorithms and computability theory. A key distinction between the analysis of algorithms and computational complexity theory is that the former is devoted to analyzing the amount of resources needed by a particular algorithm to solve a problem, whereas the latter asks a more general question about all possible algorithms that could be used to solve the same problem. More precisely, computational complexity theory tries to classify problems that can or cannot be solved with appropriately restricted resources. In turn, imposing restrictions on the available resources is what distinguishes computational complexity from computability theory: the latter theory asks what kinds of problems can, in principle, be solved algorithmically.

DevOps is a set of practices, tools, and cultural philosophies that automate and integrate the processes between software development (Dev) and IT operations (Ops). The primary goal is to foster a culture of collaboration, automate processes across teams, and deliver high-quality software efficiently, reducing the time between committing a change to a system and the change being placed into production.

In essence, DevOps integrates all aspects of development (coding, building, testing, and releasing) with operations (deployment, monitoring, and maintenance) into a unified, automated pipeline. It builds on the principles of Agile development, but extends them into the operational phase of software deployment.

Sifat | Yuvraj Chauhan | Rajesh kumar halder | Ishan Mondal | Apoorva08102000 | Apoorva .S. Mehta |

Imran Biswas | Subrata Pramanik | Samuel Favarin | sahooabhipsa10 | Sahil Rao | KK Chowdhury |

Manas Baroi | Aditi | Syed Talib Hossain | Jai Mehrotra | Shuvam Bag | Abhijit Turate |

Jayesh Deorukhkar | JC Shankar | Subrata Pramanik | Imam Suyuti | genius_koder | Altaf Shaikh |

Rajdeep Das | Vikash Patel | Arvind Srivastav | Manish Kr Prasad | MOHIT KUMAR KUSHWAHA | DryHitman |

Harsh Kulkarni | Atreay Kukanur | Sree Haran | Auro Saswat Raj | Aiyan Faras | Priyanshi David |

Ishan Mondal | Nikhil Shrivastava | deepshikha2708 | L.RISHIWARDHAN | Rahul RK | Nishant Wankhade |

pritika163 | Anjuman Hasan | Astha Varshney | Gcettbdeveloper | Elston Tan | Shivansh Dengla |

David Daniels | ayushverma14 | Pratik Rai | Yash | pranavyatnalkar | Jeremia Axel |

Akhil Soni | Zahra Shahid | Mihir20K | Aman | Mauricio Allegretti | Bruno-Vasconcellos-Betella |

Febi Arifin | Dineshwar Doddapaneni | Dheeraj_Soni | Ojash Kushwaha | Laleet Borse | Wahaj Raza |

WahajRaza1 | Ravi Lamkoti | The One and Only Uper | AdarshBajpai67 | Deepak Kharah | sairohit360 |

sairohitzl | Raval Jinit | Vovka1759 | Nijin | Avinil Bedarkar | FercueNat |

Yash Khare | Ayush Anand | DharmaWarrior | Hitarth Raval | Wiem Borchani | Kamden Burke |

denschiro | Nishat | Mohammed Faizan Ahmed | Manish Agrahari | Katari Lokeswara rao | Zahra Shahid |

Glenn Turner | Vinayak godse | Satyajeetbh | Paidipelly Dhruvateja | helloausrine | SourabhJoshi209 |

Stefan Taitano | Abu Noman Md. Sakib | Rishi Mathur | Darky001 | HIMANSHU | Kusumita Ghose |

Yashvi Patel | ArshadAriff | ishashukla183 | jhuynh06 | Andrew Asche | J. Nathan Allen |

Sayed Afnan Khazi | Technic143 | Pin Yuan Wang | Bogdan Otava | Vedeesh Dwivedi | Tsig |

Brandon Awan | Sanya Madre | Steven | Garrett Crowley | Francesco Franco | Alexander Little |

Subham Maji | SK Jiyad | exrol | Manav Mittal | Rathish R | Anubhav Kulshreshtha |

Sarthak | architO21 | Nikhil Kumar Jha | Kundai Chasinda | Rohan kaushal | Aayush Kumar |

Vladimir Cucu | Mohammed Ali Alsakkaf (Binbasri) | 섬기는 사람 | Amritanshu Barpanda | aheaton22 | Masumi Kawasaki |

aslezar | Yash Sajwan | Abhishek Kumar | jakenybo | Fangzhou_Jiang | Nelson Uprety |

Kevin Garfield | xaviermonb | AryasCodeTreks | khouloud HADDAD AMAMOU | Walter March | Nivea Hanley |

nam | Shaan Rehsi | mjung1 | Joshua Latham | Pietro Bartolocci | Naveen Prajapati |

Billy Marcus Wright | Raquel-James | Teddy ASSIH | Md Nayeem |