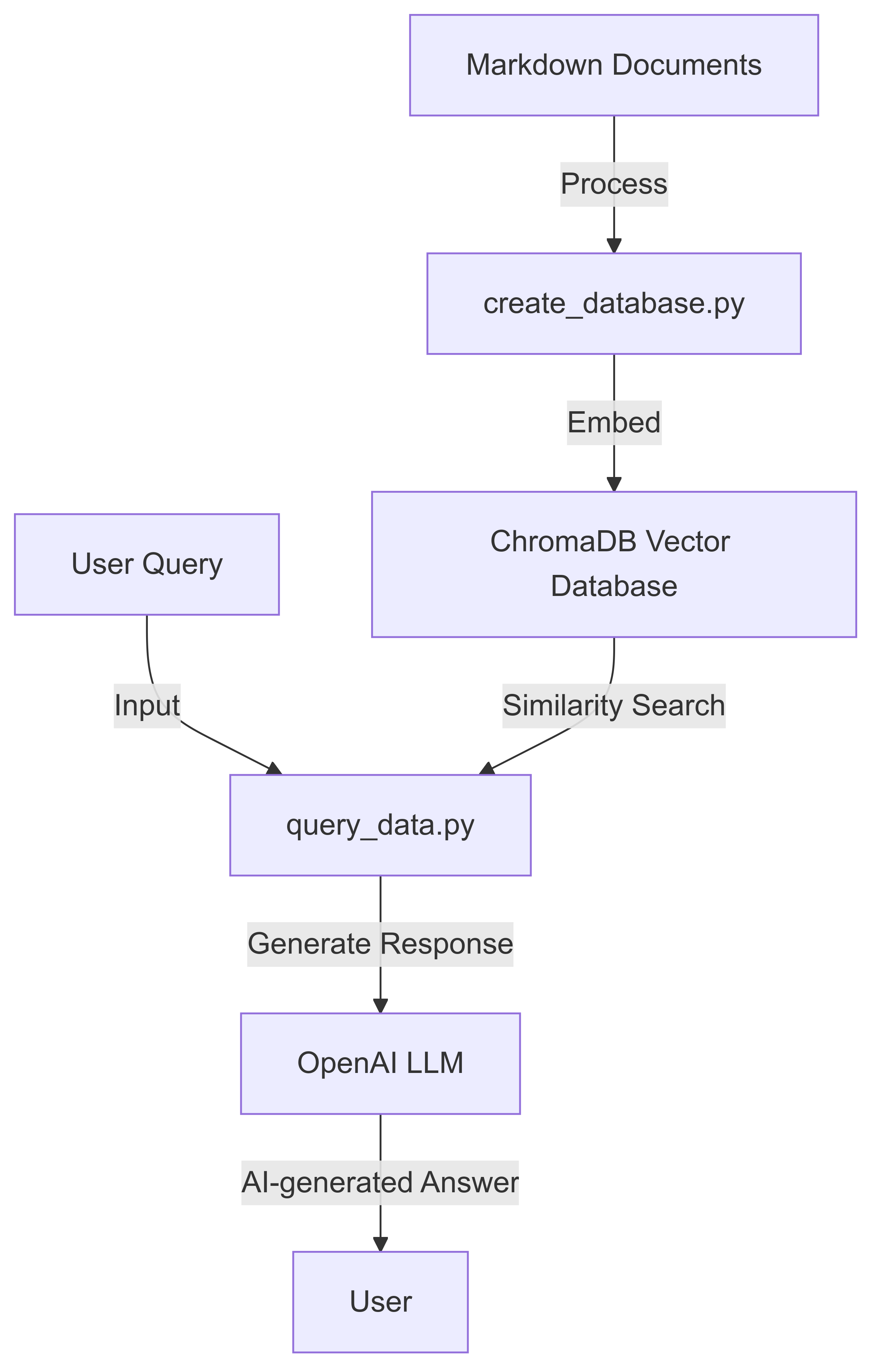

This project implements an AI-powered document query system using LangChain, ChromaDB, and OpenAI's language models. It enables users to create a searchable database from markdown documents and query it using natural language.

Create a virtual environment and install the dependencies listed in `requirements.txt`:

python -m venv .venv

source .venv/bin/activate  # On Windows, use `.venv\Scripts\activate`

pip install -r requirements.txt

Create a `.env` file containing your OpenAI API key:

OPENAI_API_KEY=your_api_key_here
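The scripts presumably read `OPENAI_API_KEY` from this file at startup. Many projects use the `python-dotenv` package for that; the stdlib-only sketch below is a stand-in that shows what such loading amounts to. The `parse_env` and `load_env_file` helpers are hypothetical and not part of this repository: they parse `KEY=value` lines and export them into `os.environ` without overwriting variables that are already set.

```python
import os
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines; skip blanks, comments, and lines without '='."""
    pairs: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, value = line.split("=", 1)
        # Strip whitespace and any surrounding single or double quotes.
        pairs[key.strip()] = value.strip().strip("'\"")
    return pairs


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Hypothetical helper: export .env pairs into os.environ.

    Variables already present in the environment are left untouched.
    Returns the parsed pairs (an empty dict if the file does not exist).
    """
    env_path = Path(path)
    if not env_path.is_file():
        return {}
    pairs = parse_env(env_path.read_text(encoding="utf-8"))
    for key, value in pairs.items():
        os.environ.setdefault(key, value)
    return pairs
```

Using `setdefault` means a key exported in your shell takes precedence over the file, which is the usual convention for `.env` loaders.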

Follow these steps to quickly set up and use the RAG-based VectorDB-LLM Query Engine:

Create a database from your markdown documents:

python create_database.py --data_folder data/go-docs --chroma_db_path chroma_go_docs/

This command will process the markdown files in the data/go-docs directory and create a vector database in the chroma_go_docs/ folder.
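Before embedding, a pipeline like this typically splits each markdown file into overlapping chunks, so each piece fits the embedding model's input and retrieval can return focused passages. This README does not say which splitter or settings `create_database.py` uses (LangChain provides several text splitters); the sketch below is a plain-Python sliding-window chunker with hypothetical `chunk_size` and `chunk_overlap` defaults, shown only to illustrate the idea.

```python
def split_text(text: str, chunk_size: int = 300, chunk_overlap: int = 100) -> list[str]:
    """Split text into windows of chunk_size characters.

    Each window starts chunk_size - chunk_overlap characters after the
    previous one, so consecutive chunks share chunk_overlap characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap keeps a sentence that straddles a chunk boundary fully present in at least one chunk, at the cost of embedding some text twice.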

Query the database with a natural language question:

python query_data.py --query_text "Explain goroutines in go in a sentence" --chroma_db_path chroma_go_docs/ --prompt_model gpt-3.5-turbo

View the AI-generated response:

Goroutines are lightweight, concurrent functions or methods in Go that run independently, managed by the Go runtime, allowing for efficient parallel execution and easy implementation of concurrent programming patterns.
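Behind an answer like this, a retrieval-augmented query generally embeds the question, ranks the stored chunks by vector similarity, and passes the best matches to the chat model as context. ChromaDB performs the search internally (its distance metric is configurable); the sketch below reimplements just the ranking step with cosine similarity, one common choice, so the mechanics are visible. The three-dimensional vectors, the `top_k` and `build_prompt` helpers, and the prompt wording are all made up for illustration; real `text-embedding-3-small` embeddings have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k stored chunks most similar to the query, best first."""
    scored = [(chunk, cosine_similarity(query_vec, vec)) for chunk, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Hypothetical template: retrieved chunks as context, then the question."""
    context = "\n\n---\n\n".join(chunks)
    return f"Answer the question based only on this context:\n\n{context}\n\nQuestion: {question}"


# Toy 3-dimensional "embeddings" standing in for real model output.
store = {
    "Goroutines are functions run concurrently by the Go runtime.": [0.9, 0.1, 0.0],
    "Channels let goroutines communicate.": [0.7, 0.3, 0.1],
    "Modules manage Go dependencies.": [0.0, 0.2, 0.9],
}
query_vec = [1.0, 0.0, 0.0]  # pretend embedding of the goroutine question
hits = top_k(query_vec, store, k=2)
prompt = build_prompt("Explain goroutines in go in a sentence", [chunk for chunk, _ in hits])
```

Here the two goroutine-related chunks score highest and the unrelated "Modules" chunk is left out of the prompt, which is what keeps the model's answer grounded in the indexed documents.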

For more detailed usage instructions, refer to the following sections:

Create the Database

python create_database.py --data_folder path/to/your/markdown/files --chroma_db_path path/to/save/database
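The two flags above could be handled with the standard `argparse` module along the lines of the sketch below, which also collects the `.md` files to index. This is a hypothetical reconstruction, not the script's actual code: the defaults (`data` and `chroma`, taken from the project layout described in this README) and the recursive `*.md` search are assumptions.

```python
import argparse
from pathlib import Path
from typing import Optional


def parse_args(argv: Optional[list[str]] = None) -> argparse.Namespace:
    """Parse the flags shown in the README; the defaults are assumed."""
    parser = argparse.ArgumentParser(description="Build a Chroma database from markdown files.")
    parser.add_argument("--data_folder", default="data", help="directory containing markdown files")
    parser.add_argument("--chroma_db_path", default="chroma", help="where to persist the database")
    return parser.parse_args(argv)


def find_markdown_files(data_folder: str) -> list[Path]:
    """Recursively collect *.md files under data_folder, sorted for a stable order."""
    folder = Path(data_folder)
    if not folder.is_dir():
        raise FileNotFoundError(f"data folder not found: {folder}")
    return sorted(folder.rglob("*.md"))
```

Sorting the file list makes repeated runs process documents in the same order, which simplifies comparing databases built at different times.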

Query the Database

python query_data.py --query_text "Your question here" --chroma_db_path path/to/database --prompt_model gpt-3.5-turbo
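Each query consumes tokens, and the repository includes `estimate_cost.py` and `get_token_count.py` for this. This README does not describe their interfaces, but the underlying arithmetic is simple: cost is token count times the model's per-token price. The sketch below shows that calculation with prices passed in as parameters, since OpenAI's rates change over time. The function names, the example prices, and the 4-characters-per-token heuristic are all assumptions; an exact count would come from a tokenizer such as `tiktoken`.

```python
import math


def approx_token_count(text: str) -> int:
    """Rough heuristic (assumption): about 4 characters of English text per token."""
    return math.ceil(len(text) / 4)


def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_1m: float, output_price_per_1m: float) -> float:
    """Dollar cost given token counts and per-million-token prices."""
    return (input_tokens * input_price_per_1m + output_tokens * output_price_per_1m) / 1_000_000


# Hypothetical prices; check OpenAI's pricing page for current values.
prompt_tokens = approx_token_count("Explain goroutines in go in a sentence")
cost = estimate_cost(prompt_tokens, 50, input_price_per_1m=0.50, output_price_per_1m=1.50)
```

In a full RAG query the input side also includes the retrieved context chunks, so the real prompt is usually much longer than the question alone.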

Project Structure

- `create_database.py`: Database creation script
- `query_data.py`: Database querying script
- `estimate_cost.py`: Cost estimation module
- `get_token_count.py`: Token counting utility
- `data/`: Markdown documents directory
- `chroma/`: ChromaDB database storage (gitignored)

Configuration

- Models: `text-embedding-3-small` for embeddings and `gpt-3.5-turbo` for responses by default
- Documents: place markdown files in `data/` or specify a custom path
- Database: stored in `chroma/` (gitignored)

License

This project is licensed under the terms of the MIT License. For more information, please refer to the LICENSE file.

For questions or issues, please open an issue on the GitHub repository.