Chinese | English

This project opens the source of a large language model set that has been fine-tuning/instruction-tuning in Chinese medical instructions, including LLaMA, Alpaca-Chinese, Bloom, movable type models, etc.

Based on the medical knowledge graph and medical literature, we combined with the ChatGPT API to construct a Chinese medical instruction fine-tuning data set, and used this to fine-tune the instructions of various basic models, improving the question-and-answer effect of the basic model in the medical field.

[2023/09/24] Release "Big Language Model Fine-tuning Technology for Smart Healthcare"

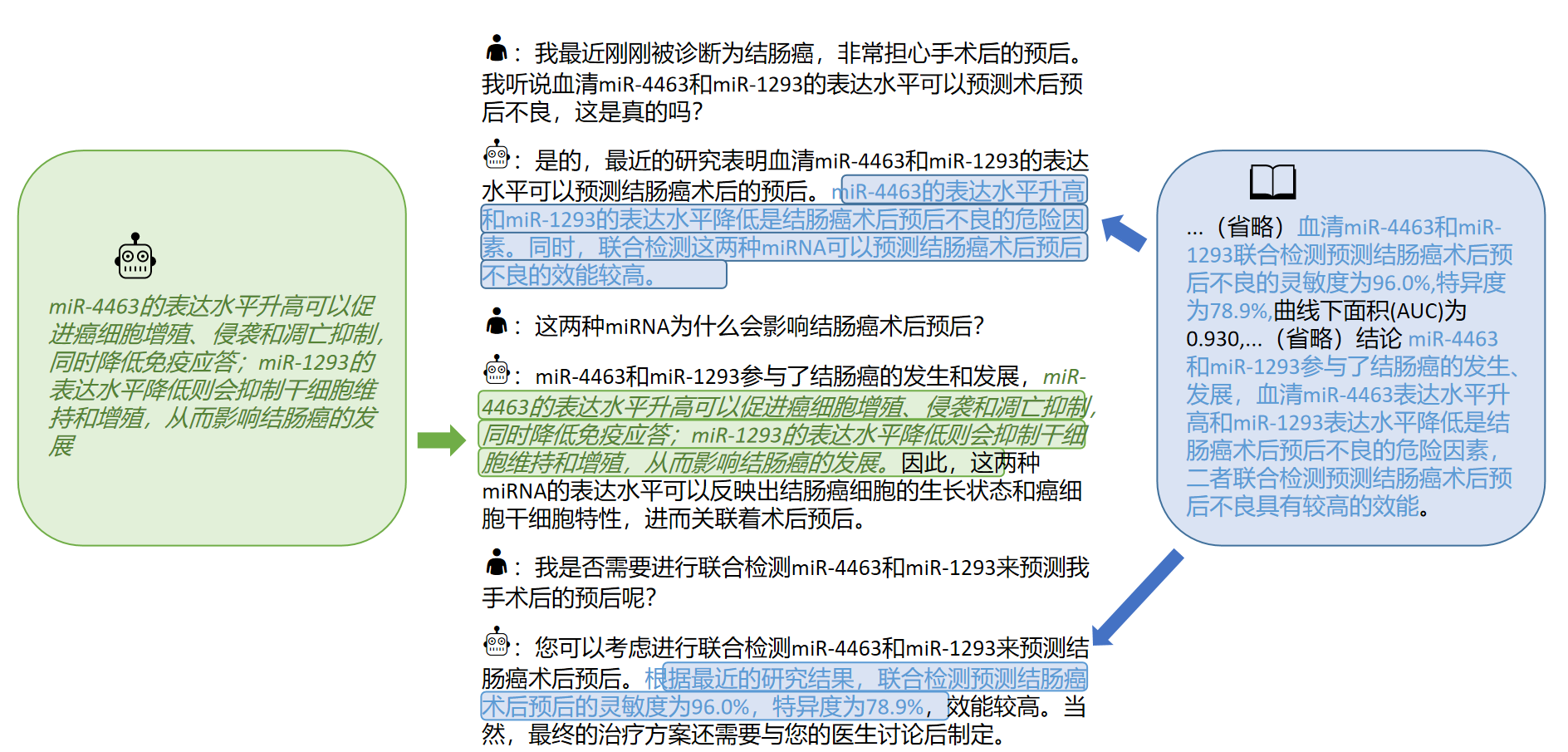

[2023/09/12] Release "Exploring the acquisition of interactive knowledge from medical literature by big models" on arxiv

[2023/09/08] Release "Reliable Chinese Medical Reply Generation Method for Large Language Models Based on Knowledge Fine Tuning" on arxiv

[2023/08/07] A model release based on movable type is added, and the model effect is significantly improved.

[2023/08/05] The herbal model is presented on CCL 2023 Demo Track.

[2023/08/03] SCIR Laboratory's open source movable type general question and answer model, welcome to follow??

[2023/07/19] Added a model for fine-tuning instructions based on Bloom.

[2023/05/12] The model was renamed "Bencao" from "Hua Tuo".

[2023/04/28] Added a model release based on the Chinese Alpaca big model for fine-tuning instructions.

[2023/04/24] Added a model for fine-tuning instruction based on LLaMA and medical literature.

[2023/03/31] Added a model release for fine-tuning instructions based on LLaMA and medical knowledge base.

First install the dependency package, python environment recommends 3.9+

pip install -r requirements.txt

For all base models, we adopt the half-precision base model LoRA fine-tuning method for instruction fine-tuning training to weigh the computing resources and model performance.

LoRA weights can be downloaded through Baidu Netdisk or Hugging Face:

Download the LoRA weight and decompress. The decompressed format is as follows:

**lora-folder-name**/

- adapter_config.json # LoRA权重配置文件

- adapter_model.bin # LoRA权重文件

Based on the same data, we also trained the medical version of the ChatGLM model: ChatGLM-6B-Med

We provide some test cases in ./data/infer.json , which can be replaced with other data sets. Please note that the format is consistent.

Run the infer script

#基于医学知识库

bash ./scripts/infer.sh

#基于医学文献

#单轮

bash ./scripts/infer-literature-single.sh

#多轮

bash ./scripts/infer-literature-multi.sh

Infer.sh script code is as follows. Please replace the base model base_model, lora weight lora_weights and test dataset path instruct_dir in the following code and run it after replacing the base model base_model, lora weight lora_weights, and test dataset path instruct_dir.

python infer.py

--base_model 'BASE_MODEL_PATH'

--lora_weights 'LORA_WEIGHTS_PATH'

--use_lora True

--instruct_dir 'INFER_DATA_PATH'

--prompt_template 'TEMPLATE_PATH'

The selection of prompt templates is related to the model, the details are as follows:

| Movable Type & Bloom | LLaMA&Alpaca |

|---|---|

templates/bloom_deploy.json | Based on medical knowledge base templates/med_template.jsonBased on medical literature templates/literature_template.json |

You can also refer to ./scripts/test.sh

The basic model has limited effects in medical Q&A scenarios, and instruction fine-tuning is an efficient way to make the basic model have the ability to answer human questions.

We have adopted an open and self-built Chinese medical knowledge base, mainly referring to cMeKG.

The medical knowledge base is built around diseases, drugs, examination indicators, etc., and the fields include complications, high-risk factors, histological examinations, clinical symptoms, drug treatment, auxiliary treatment, etc. The knowledge base example is as follows:

{"中心词": "偏头痛", "相关疾病": ["妊娠合并偏头痛", "恶寒发热"], "相关症状": ["皮肤变硬", "头部及眼后部疼痛并能听到连续不断的隆隆声", "晨起头痛加重"], "所属科室": ["中西医结合科", "内科"], "发病部位": ["头部"]}

We used the GPT3.5 interface to build Q&A data around the medical knowledge base and set up a variety of Prompt forms to make full use of knowledge.

The training set data examples for fine-tuning instructions are as follows:

"问题:一位年轻男性长期使用可卡因,突然出现胸痛、呕吐、出汗等症状,经检查发现心电图反映心肌急性损伤,请问可能患的是什么疾病?治疗方式是什么?"

回答: 可能患的是心肌梗塞,需要进行维拉帕米、依普利酮、硝酸甘油、ß阻滞剂、吗啡等药物治疗,并进行溶栓治疗、低分子量肝素、钙通道阻滞剂等辅助治疗。此外需要及时停用可卡因等药物,以防止病情加重。"

We provide a training dataset of the model, with a total of more than 8,000 pieces. It should be noted that although the construction of the training set incorporates knowledge, there are still errors and imperfections. In the future, we will use better strategies to iterate over and update the dataset.

The quality of the instruction fine-tuning data set is still limited, and iteration will be carried out in the future. At the same time, the medical knowledge base and data set construction code are still being sorted out, and will be released after the sorting is completed.

In addition, we collected Chinese medical literature on liver cancer diseases in 2023 and used the GPT3.5 interface to construct multiple rounds of question-and-answer data around the [Conclusion] of medical literature. In · ./data_literature/liver_cancer.json we provide 1k training examples. At present, the quality of the training samples is still limited. In the future, we will further iterate the data and publish it to the public in the form of公开数据集. Examples of training samples are as follows:

Currently, we only open model parameters for single disease training of "hepatitis cancer". In the future, we plan to publish medical dialogue datasets that incorporate literature conclusions and will train models for 16 diseases related to "hepatobiliary and pancreatic".

For details, please refer to our article: "Exploring the acquisition of interactive knowledge from medical literature for big models"

If you want to fine-tune the large language model with your own dataset, please build your own dataset in the format in ./data/llama_data.json

Run the finetune script

bash ./scripts/finetune.sh

During the instruction fine-tuning process based on the LLaMA model, we trained on an A100-SXM-80GB graphics card. The total training rounds were 10 rounds, which took about 2h17m. With batch_size=128, the video memory occupies about 40G. It is expected that 3090/4090 graphics cards (24GB graphics memory) or above can be better supported, and batch_size is adjusted according to the size of the graphics memory.

For hyperparameter settings and experimental procedures, please refer to the link of Wandb:

https://wandb.ai/thinksoso/llama_med/runs/a5wgcnzt/overview?workspace=user-thinksoso

Note: Tested in March 2023

| Test input | Llama output | Alpaca output | BenTsao Materia Medica |

|---|---|---|---|

| Xiao Zhang has been feeling unwell recently and has symptoms such as palpitations and shortness of breath. The physical examination found that the heart was enlarged and the pulsation was weakened. | Question: Xiao Zhang has been feeling unwell recently and has symptoms such as palpitations and shortness of breath. The physical examination found that the heart was enlarged and the pulsation was weakened. | Recently, Xiao Zhang has been feeling uncomfortable and has symptoms such as upset and shortness of breath. Physical examination shows that the heart is enlarged and the muscles are weak. | Xiao Zhang may suffer from myocarditis, so it is recommended to go to the hospital for examination and treatment as soon as possible. Treatment options include medication and surgical treatment. |

| Are the causes of leprosy and childhood asthma consistent? | The causes of asthma and leprosy in children are consistent, but the causes of asthma and leprosy in children are not consistent | Yes, asthma and children have similar causes. | Inconsistent, the cause of leprosy is leprosy infection, while the cause of asthma in children is factors such as poor feeding and smoking. |

| A patient developed persistent swelling and jaundice. After imaging examination, hepatobiliary duct stone disease. What treatment plans should be taken? | What treatment plans should patients with hepatobiliary duct stone disease take? | Patients should undergo a combination of surgery and chemotherapy. | For hepatobiliary duct stone disease, treatment plans include surgical treatment, drug treatment and auxiliary treatment. The specific treatment plan needs to be determined according to the patient's specific circumstances. |

Q: Why was it renamed "Bencao"?

A: The name consistency of the SCIR laboratory large language model, and the name of the Chinese medical model is adjusted to "Material Herbal Medicine".

Q: Why is it "Hua Tuo" instead of "Hua Tuo"?

A: It is called "camel" because our basic model LLaMA is llama and Alpaca is alpaca. Inspired by their names and the homophonic phon of Hua Tuo, we named our model Hua Tuo.

Q: Are there any traditional Chinese medicine theory or traditional Chinese medicine data used?

A: No

Q: The results of the model run are different and the effects are limited

A: Due to the consideration of generative diversity in generative models, the results of multiple runs may vary. The current open source model is limited in Chinese corpus of LLaMA and Alpaca and the way of combining knowledge is relatively rough, please try the bloom-based and movable type-based models.

Q: The model cannot run/the inference content is completely unacceptable

A: Please determine the dependencies in installed requirements, configure the cuda environment and add environment variables, correctly enter the downloaded model and lora storage location; if the inference content is duplicated or some wrong content belongs to the occasional phenomenon of the llama-based model, it has a certain relationship with the Chinese ability of the llama model, the training data scale and the hyperparameter settings. Please try a new model based on movable type. If there are serious problems, please describe the running file name, model name, lora and other configuration information in the issue in detail. Thank you.

Q: Which of the several models released is the best?

A: According to our experience, the effect based on the movable type model is relatively better.

This project was completed by Wang Haochun, Du Yanrui, Liu Chi, Bai Rui, Cinuwa, Chen Yuhan, Qiang Zewen, Chen Jianyu and Li Zijian, the Health Intelligence Group of the Center for Social Computing and Information Retrieval of Harbin Institute of Technology. The instructors are Associate Professor Zhao Sendong, Professor Qin Bing and Professor Liu Ting.

This project refers to the following open source projects, and we would like to express our gratitude to the relevant projects and research and development staff.

The resources related to this project are for academic research only and are strictly prohibited for commercial purposes. When using parts involving third-party code, please strictly follow the corresponding open source protocol. The content generated by the model is affected by factors such as model calculation, randomness and quantitative accuracy losses, and this project cannot guarantee its accuracy. Most of the data sets of this project are generated by models and cannot be used as the basis for actual medical diagnosis even if they comply with certain medical facts. This project assumes no legal liability for any content output by the model, nor is it liable for any losses that may arise from the use of relevant resources and output results.

If you have used the data or code of this project, or our work is helpful to you, please declare a quote

First edition technical report: Huatuo: Tuning llama model with chinese medical knowledge

@misc{wang2023huatuo,

title={HuaTuo: Tuning LLaMA Model with Chinese Medical Knowledge},

author={Haochun Wang and Chi Liu and Nuwa Xi and Zewen Qiang and Sendong Zhao and Bing Qin and Ting Liu},

year={2023},

eprint={2304.06975},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

Knowledge-tuning Large Language Models with Structured Medical Knowledge Bases for Reliable Response Generation in Chinese

@misc{wang2023knowledgetuning,

title={Knowledge-tuning Large Language Models with Structured Medical Knowledge Bases for Reliable Response Generation in Chinese},

author={Haochun Wang and Sendong Zhao and Zewen Qiang and Zijian Li and Nuwa Xi and Yanrui Du and MuZhen Cai and Haoqiang Guo and Yuhan Chen and Haoming Xu and Bing Qin and Ting Liu},

year={2023},

eprint={2309.04175},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

The CALLA Dataset: Probing LLMs' Interactive Knowledge Acquisition from Chinese Medical Literature

@misc{du2023calla,

title={The CALLA Dataset: Probing LLMs' Interactive Knowledge Acquisition from Chinese Medical Literature},

author={Yanrui Du and Sendong Zhao and Muzhen Cai and Jianyu Chen and Haochun Wang and Yuhan Chen and Haoqiang Guo and Bing Qin},

year={2023},

eprint={2309.04198},

archivePrefix={arXiv},

primaryClass={cs.CL}

}