LLM security and privacy

1.0.0

A curated list of papers and tools covering LLM threats and vulnerabilities from both a security and a privacy standpoint. Summaries, key takeaways, and additional details for each paper can be found in the paper-summaries folder.

The main.bib file contains the latest citations for the papers listed here.

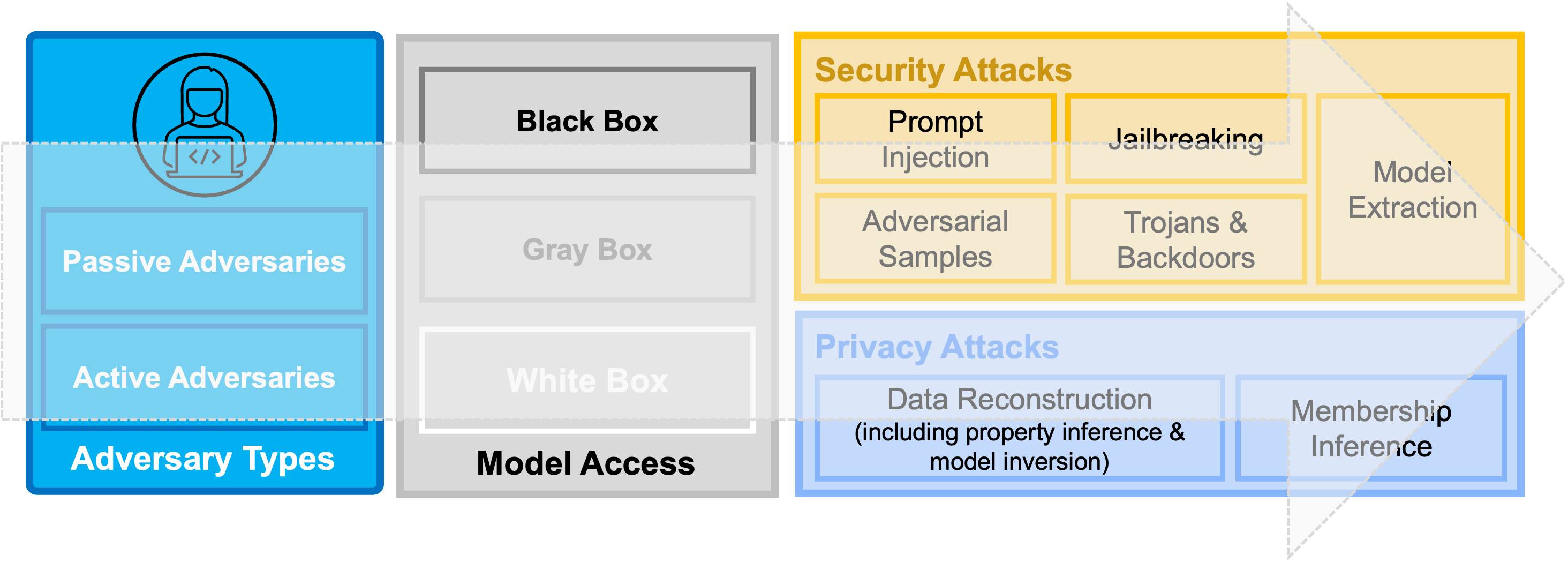

Overview Figure: A taxonomy of current security and privacy threats against deep learning models and, by extension, Large Language Models (LLMs).


| No. | Paper Title | Venue | Year | Category | Code | Summary |
|---|---|---|---|---|---|---|
| 1. | InjectAgent: Benchmarking Indirect Prompt Injections in Tool-Integrated Large Language Model Agents | pre-print | 2024 | Prompt Injection | N/A | TBD |
| 2. | LLM Agents can Autonomously Hack Websites | pre-print | 2024 | Applications | N/A | TBD |
| 3. | An Overview of Catastrophic AI Risks | pre-print | 2023 | General | N/A | TBD |
| 4. | Use of LLMs for Illicit Purposes: Threats, Prevention Measures, and Vulnerabilities | pre-print | 2023 | General | N/A | TBD |
| 5. | LLM Censorship: A Machine Learning Challenge or a Computer Security Problem? | pre-print | 2023 | General | N/A | TBD |
| 6. | Beyond the Safeguards: Exploring the Security Risks of ChatGPT | pre-print | 2023 | General | N/A | TBD |
| 7. | Prompt Injection attack against LLM-integrated Applications | pre-print | 2023 | Prompt Injection | N/A | TBD |
| 8. | Identifying and Mitigating the Security Risks of Generative AI | pre-print | 2023 | General | N/A | TBD |
| 9. | PassGPT: Password Modeling and (Guided) Generation with Large Language Models | ESORICS | 2023 | Applications | TBD | |
| 10. | Harnessing GPT-4 for generation of cybersecurity GRC policies: A focus on ransomware attack mitigation | Computers & Security | 2023 | Applications | N/A | TBD |
| 11. | Not what you've signed up for: Compromising Real-World LLM-Integrated Applications with Indirect Prompt Injection | pre-print | 2023 | Prompt Injection | TBD | |
| 12. | Examining Zero-Shot Vulnerability Repair with Large Language Models | IEEE S&P | 2023 | Applications | N/A | TBD |
| 13. | LLM Platform Security: Applying a Systematic Evaluation Framework to OpenAI's ChatGPT Plugins | pre-print | 2023 | General | N/A | TBD |
| 14. | Chain-of-Verification Reduces Hallucination in Large Language Models | pre-print | 2023 | Hallucinations | N/A | TBD |
| 15. | Pop Quiz! Can a Large Language Model Help With Reverse Engineering? | pre-print | 2022 | Applications | N/A | TBD |
| 16. | Extracting Training Data from Large Language Models | USENIX Security | 2021 | Data Extraction | TBD | |
| 17. | Here Comes The AI Worm: Unleashing Zero-click Worms that Target GenAI-Powered Applications | pre-print | 2024 | Prompt Injection | TBD | |
| 18. | CLIFF: Contrastive Learning for Improving Faithfulness and Factuality in Abstractive Summarization | EMNLP | 2021 | Hallucinations | TBD | |

If you are interested in contributing to this repository, please see CONTRIBUTING.md for the contribution guidelines.

A list of current contributors is found HERE.

For any questions regarding this repository and/or potential (research) collaborations, please contact Briland Hitaj.