Mechanical dog

Castle, sea, sunset, Miyazaki animation

How many flowers have fallen

Half a chicken and half a man, strong

Chicken, you are so beautiful

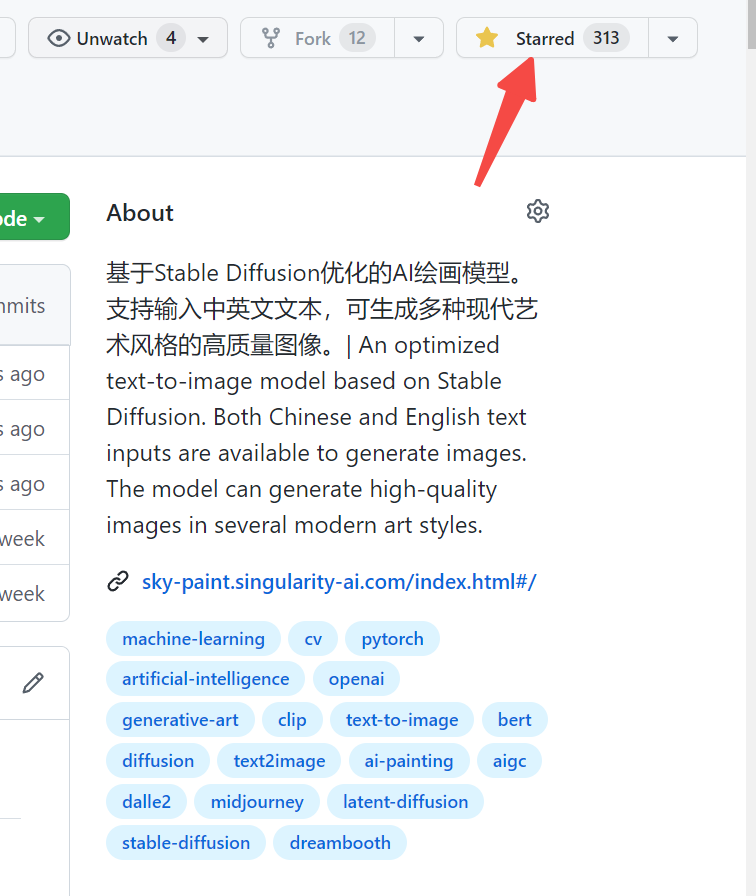

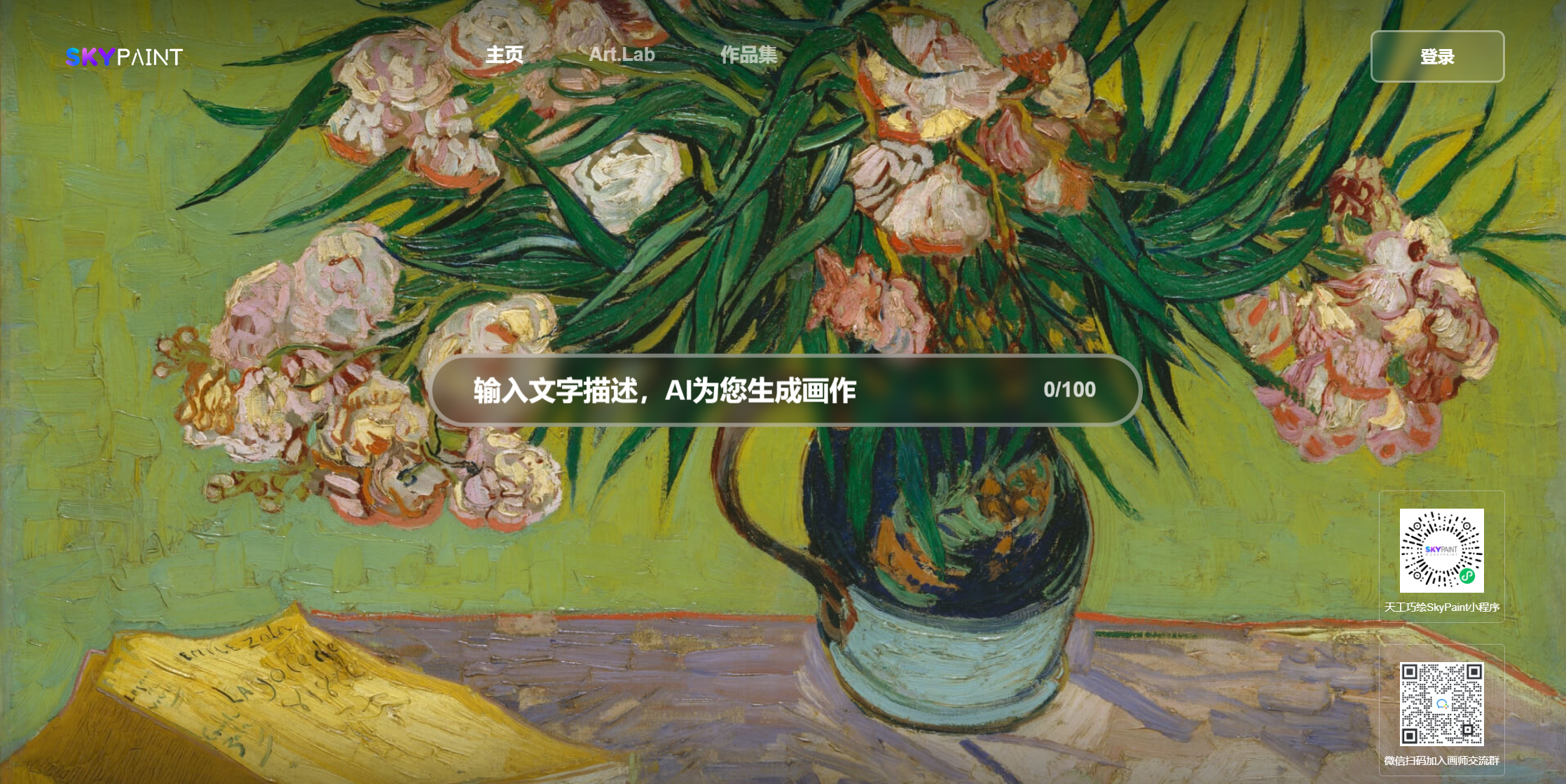

Please visit SkyPaint.

You can also scan the QR code in WeChat to try SkyPaint in the mini program.

The SkyPaint text-to-image model consists of two main parts: a prompt text encoder and a diffusion model. Our optimization therefore has two steps. First, we optimize the prompt text encoder, starting from OpenAI-CLIP, so that SkyPaint understands both Chinese and English prompts. Second, we optimize the diffusion model so that SkyPaint can produce high-quality images in a modern art style.

Model download: SkyPaint-v1.0

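```python
from diffusers import StableDiffusionPipeline

device = 'cuda'
# Replace "path_to_our_model" with the local path of the downloaded SkyPaint model
pipe = StableDiffusionPipeline.from_pretrained("path_to_our_model").to(device)

prompts = [
    '机械狗',                    # mechanical dog
    '城堡 大海 夕阳 宫崎骏动画',  # castle, sea, sunset, Miyazaki animation
    '花落知多少',                # how many flowers have fallen
    '鸡你太美',                  # "chicken, you are so beautiful"
]

for prompt in prompts:
    # Prepend the style tag that was added to captions during training
    prompt = 'sai-v1 art, ' + prompt
    image = pipe(prompt).images[0]
    image.save("%s.jpg" % prompt)
```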

SkyCLIP is a bilingual Chinese-English CLIP model that we trained with an efficient method. The method needs only text data to distill the OpenAI-CLIP model, which greatly lowers the data requirements, and it cuts the compute needed for training by more than 90% compared with the original CLIP model, making it easy for the open-source community to reproduce or fine-tune. Because the method changes only the text encoder of OpenAI-CLIP, SkyCLIP can be paired with the OpenAI-CLIP image encoder for image-text retrieval.

The text_encoder of OpenAI-CLIP serves as the teacher model, with its parameters frozen. The student model is a multilingual BERT model of the same size as the teacher. During training, the English input is passed through the teacher to obtain t_en_hidden_state, and the English and Chinese inputs are passed through the student to obtain s_en_hidden_state and s_zh_hidden_state, respectively. Loss functions such as L1, L2, and cosine distance gradually pull the student's Chinese and English hidden states toward the teacher's hidden state. Because the Chinese and English sentences of a parallel corpus are never perfectly equivalent, we also add a Chinese decoder during training to bring parallel Chinese and English representations as close together as possible: the decoder takes the student's Chinese and English hidden states as input and helps align the two languages through a translation task.
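The hidden-state alignment objective described above can be sketched in PyTorch as follows. This is a minimal illustration, not the training code: it assumes the L1, L2 and cosine distances are simply summed with equal weights, and that each sentence is represented by one pooled vector (for example the state at the end-of-text position), since Chinese and English token sequences differ in length. The README does not state the weights, the pooling, or how the Chinese decoder's translation loss is combined, so the decoder is left out.

```python
import torch
import torch.nn.functional as F


def alignment_loss(student: torch.Tensor, teacher: torch.Tensor) -> torch.Tensor:
    """L1 + L2 (mean squared error) + cosine distance between two batches of
    pooled hidden states, each of shape (batch, hidden).

    Equal weighting of the three terms is an illustrative assumption.
    """
    l1 = F.l1_loss(student, teacher)
    l2 = F.mse_loss(student, teacher)
    cos = (1.0 - F.cosine_similarity(student, teacher, dim=-1)).mean()
    return l1 + l2 + cos


def distillation_loss(
    t_en_hidden_state: torch.Tensor,
    s_en_hidden_state: torch.Tensor,
    s_zh_hidden_state: torch.Tensor,
) -> torch.Tensor:
    """Pull both the student's English and Chinese states toward the teacher's
    English state. The teacher is frozen, so its output is detached."""
    target = t_en_hidden_state.detach()
    return alignment_loss(s_en_hidden_state, target) + alignment_loss(s_zh_hidden_state, target)


if __name__ == "__main__":
    batch, hidden = 4, 768  # demo sizes
    teacher = torch.randn(batch, hidden)
    s_en = torch.randn(batch, hidden, requires_grad=True)
    s_zh = torch.randn(batch, hidden, requires_grad=True)
    loss = distillation_loss(teacher, s_en, s_zh)
    loss.backward()  # gradients reach only the student tensors
    print(loss.item())
```

Detaching the teacher output mirrors the frozen teacher: only the student receives gradients, so the student moves toward the teacher and never the reverse.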

So far, we have mainly evaluated the zero-shot performance of SkyCLIP on Flickr30K-CN, comparing it with several related open-source models that support Chinese. For a fair comparison, wherever a model is released in several sizes we chose the variant comparable to OpenAI-CLIP ViT-L/14. Our evaluation follows the evaluation script provided by Chinese-CLIP.
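Each R@K figure is the percentage of queries whose correct match appears among the top K retrieved candidates. The sketch below computes it from a query-by-candidate similarity matrix, under the simplifying assumption that query i's correct candidate is candidate i. Flickr30K-CN actually pairs each image with several captions, which the Chinese-CLIP script handles and this sketch does not.

```python
import torch


def recall_at_k(similarity: torch.Tensor, k: int) -> float:
    """Percentage of queries whose ground-truth candidate is in the top k.

    similarity[i, j] scores query i against candidate j; this sketch assumes
    the correct candidate for query i is candidate i.
    """
    k = min(k, similarity.shape[1])
    topk = similarity.topk(k, dim=1).indices                  # (num_queries, k)
    targets = torch.arange(similarity.shape[0]).unsqueeze(1)  # (num_queries, 1)
    hits = (topk == targets).any(dim=1).float()
    return 100.0 * hits.mean().item()


if __name__ == "__main__":
    # Rows: text queries, columns: images (text-to-image retrieval).
    sim = torch.tensor([[0.9, 0.1, 0.0],
                        [0.2, 0.1, 0.8],
                        [0.0, 0.3, 0.7]])
    for k in (1, 5, 10):
        print(f"R@{k} = {recall_at_k(sim, k):.1f}")
```

For image-to-text retrieval, pass the transposed matrix so that images become the queries.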

Flickr30K-CN retrieval (zero-shot):

| Model | Text-to-Image R@1 | Text-to-Image R@5 | Text-to-Image R@10 | Image-to-Text R@1 | Image-to-Text R@5 | Image-to-Text R@10 | MR |
|---|---|---|---|---|---|---|---|
| Taiyi-326M | 53.8 | 79.9 | 86.6 | 64.0 | 90.4 | 96.1 | 78.47 |
| AltCLIP | 50.7 | 75.4 | 83.1 | 73.4 | 92.8 | 96.9 | 78.72 |
| Wukong | 51.9 | 78.6 | 85.9 | 75.0 | 94.4 | 97.7 | 80.57 |
| R2D2 | 42.6 | 69.5 | 78.6 | 63.0 | 90.1 | 96.4 | 73.37 |
| CN-CLIP | 68.1 | 89.7 | 94.5 | 80.2 | 96.6 | 98.2 | 87.87 |
| SkyCLIP | 58.8 | 82.6 | 89.6 | 78.8 | 96.1 | 98.3 | 84.04 |
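The MR column is the mean recall, i.e. the unweighted average of the six R@K values in each row. As a quick check of that reading, the sketch below recomputes MR from the rounded table entries; the results agree with the reported column to within 0.02, with the small gaps presumably due to rounding of the per-metric values.

```python
# Per-model recalls copied from the Flickr30K-CN table:
# text-to-image R@1, R@5, R@10, then image-to-text R@1, R@5, R@10.
RECALLS = {
    "Taiyi-326M": [53.8, 79.9, 86.6, 64.0, 90.4, 96.1],
    "AltCLIP": [50.7, 75.4, 83.1, 73.4, 92.8, 96.9],
    "Wukong": [51.9, 78.6, 85.9, 75.0, 94.4, 97.7],
    "R2D2": [42.6, 69.5, 78.6, 63.0, 90.1, 96.4],
    "CN-CLIP": [68.1, 89.7, 94.5, 80.2, 96.6, 98.2],
    "SkyCLIP": [58.8, 82.6, 89.6, 78.8, 96.1, 98.3],
}
# MR column as reported in the table.
REPORTED_MR = {
    "Taiyi-326M": 78.47, "AltCLIP": 78.72, "Wukong": 80.57,
    "R2D2": 73.37, "CN-CLIP": 87.87, "SkyCLIP": 84.04,
}


def mean_recall(recalls: list[float]) -> float:
    """Mean recall (MR): unweighted average of all R@K values."""
    return sum(recalls) / len(recalls)


for name, recalls in RECALLS.items():
    mr = mean_recall(recalls)
    print(f"{name:<11} MR={mr:.2f} (reported {REPORTED_MR[name]:.2f})")
```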

```python
import numpy as np
import requests
import torch
from PIL import Image
from transformers import BertTokenizer, CLIPModel, CLIPProcessor, CLIPTextModel

# Input prompts; replace them with any text you like
# ("a person", "a car", "two men", "two women")
query_texts = ['一个人', '一辆汽车', '两个男人', '两个女人']

# Load the SkyCLIP bilingual (Chinese-English) text_encoder
text_tokenizer = BertTokenizer.from_pretrained("./tokenizer")
text_encoder = CLIPTextModel.from_pretrained("./text_encoder").eval()
text = text_tokenizer(query_texts, return_tensors='pt', padding=True)['input_ids']

url = "http://images.cocodataset.org/val2017/000000040083.jpg"  # any image URL works here

# Load the CLIP image encoder
clip_model = CLIPModel.from_pretrained("openai/clip-vit-large-patch14")
clip_text_proj = clip_model.text_projection
processor = CLIPProcessor.from_pretrained("openai/clip-vit-large-patch14")
image = processor(images=Image.open(requests.get(url, stream=True).raw), return_tensors="pt")

with torch.no_grad():
    image_features = clip_model.get_image_features(**image)
    text_features = text_encoder(text)[0]

    # The [SEP] token plays the role of OpenAI-CLIP's eot_token:
    # take the hidden state at each sequence's [SEP] position
    sep_index = torch.nonzero(text == text_tokenizer.sep_token_id)
    text_features = text_features[torch.arange(text.shape[0]), sep_index[:, 1]]

    # Multiply by the text projection matrix
    text_features = clip_text_proj(text_features)

    image_features = image_features / image_features.norm(dim=1, keepdim=True)
    text_features = text_features / text_features.norm(dim=1, keepdim=True)

    # Cosine similarity; logit_scale is the scaling factor
    logit_scale = clip_model.logit_scale.exp()
    logits_per_image = logit_scale * image_features @ text_features.t()
    logits_per_text = logits_per_image.t()

probs = logits_per_image.softmax(dim=-1).cpu().numpy()
print(np.around(probs, 3))
```

Our training data is a filtered subset of the LAION dataset, and each caption is prefixed with the tag 'sai-v1 art' so that the model learns the desired style and quality more quickly. The model is initialized from stable-diffusion-v1-5 and was trained on 16 A100 GPUs for 50 hours. The model is still being optimized, and more stable updates will be released in the future.
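The caption tagging step can be sketched as below. It is a minimal illustration, not the actual data pipeline: the record layout with `image` and `caption` keys is hypothetical, and the `', '` separator is assumed from the inference example in this README, which prepends `'sai-v1 art, '` to each prompt.

```python
from typing import Iterable, Iterator

STYLE_TAG = "sai-v1 art"  # tag string from this README


def tag_caption(caption: str, tag: str = STYLE_TAG) -> str:
    """Prefix a training caption with the style tag, joined by ', ' (assumed format)."""
    return f"{tag}, {caption}"


def tag_dataset(records: Iterable[dict]) -> Iterator[dict]:
    """records: dicts with hypothetical 'image' and 'caption' keys.

    Yields copies with the caption tagged; the input records are not modified.
    """
    for record in records:
        yield {**record, "caption": tag_caption(record["caption"])}


if __name__ == "__main__":
    sample = [{"image": "0001.jpg", "caption": "城堡 大海 夕阳"}]
    for rec in tag_dataset(sample):
        print(rec["caption"])
```

Using the same prefix at training and inference time is presumably what lets the tag act as a trigger for the learned style.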