Simple, but Powerful.


Help wanted: translations, rap lyrics, anything at all. Feel free to open an issue.

Pinferencia tries to be the simplest machine learning inference server ever!

Three extra lines and your model goes online.

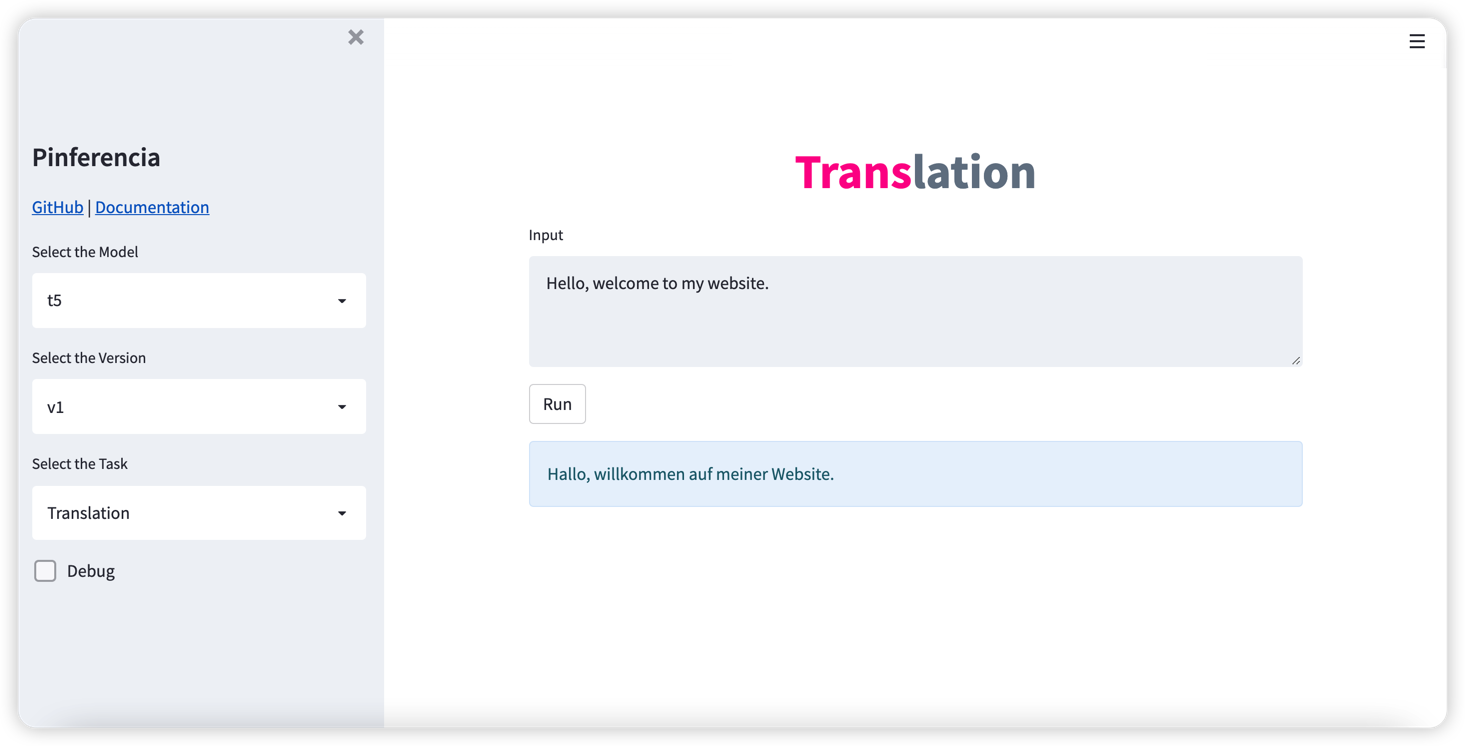

Serving a model with a GUI and a REST API has never been so easy.

If you want to

You're at the right place.

Pinferencia features include:

Install with the Streamlit GUI:

```bash
pip install "pinferencia[streamlit]"
```

Or install the backend only:

```bash
pip install "pinferencia"
```

Serve Any Model

Save the following as app.py:

```python
from pinferencia import Server


class MyModel:
    def predict(self, data):
        return sum(data)


model = MyModel()

service = Server()
service.register(model_name="mymodel", model=model, entrypoint="predict")
```

Just run:

```bash
pinfer app:service
```

Hooray, your service is alive. Go to http://127.0.0.1:8501/ and have fun.
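Besides the GUI, the service can be called over its REST API. The sketch below does this with only the Python standard library. The backend address (http://127.0.0.1:8000), the route /v1/models/{model_name}/predict and the {"data": ...} request body are assumptions about Pinferencia's defaults, not something stated on this page; check your running service's API documentation before relying on them. The helper names build_predict_request and predict are hypothetical.

```python
import json
import urllib.request

# ASSUMPTION: backend listens on port 8000 and exposes a KServe-style route.
BASE_URL = "http://127.0.0.1:8000"


def build_predict_request(model_name, data, base_url=BASE_URL):
    """Build (but do not send) a POST request carrying `data` as JSON."""
    url = f"{base_url}/v1/models/{model_name}/predict"  # assumed route
    body = json.dumps({"data": data}).encode("utf-8")  # assumed payload shape
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(model_name, data, base_url=BASE_URL, timeout=10):
    """Send the request to a running service and decode the JSON response."""
    request = build_predict_request(model_name, data, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the mymodel service above running, predict("mymodel", [1, 2, 3]) should return a JSON object whose data field holds 6, provided the route and payload assumptions hold for your version.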

Any deep learning model? Just as easy. Simply train or load your model and register it with the service, and it goes live immediately.

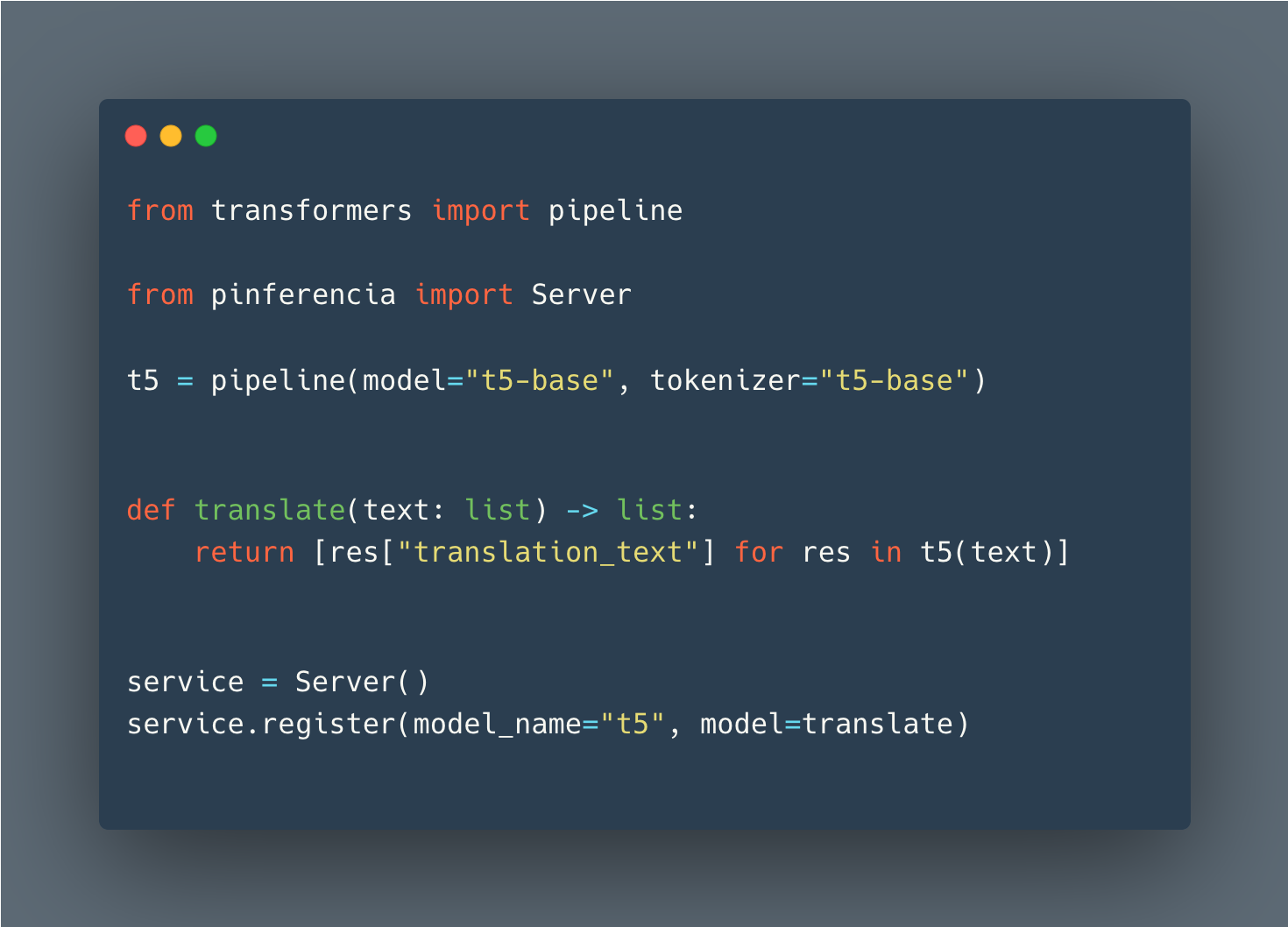

Hugging Face

Details: HuggingFace Pipeline - Vision

```python
from transformers import pipeline

from pinferencia import Server

vision_classifier = pipeline(task="image-classification")


def predict(data):
    return vision_classifier(images=data)


service = Server()
service.register(model_name="vision", model=predict)
```

PyTorch

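```python
import torch

from pinferencia import Server

# Train your model here...
# model = ...

# ...or load a trained one. Pick ONE of the options below.
# TheModelClass, args, kwargs and PATH are placeholders for your own code.

# Option A: from a state_dict
model = TheModelClass(*args, **kwargs)
model.load_state_dict(torch.load(PATH))

# Option B: the entire model (recent PyTorch versions may need weights_only=False)
# model = torch.load(PATH)

# Option C: TorchScript
# model = torch.jit.load("model_scripted.pt")

model.eval()  # put layers such as dropout and batch norm into inference mode

service = Server()
service.register(model_name="mymodel", model=model)
```

TensorFlow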

```python
import tensorflow as tf

from pinferencia import Server

# Train your model here...
# model = ...

# ...or load a trained one. Pick ONE of the options below.

# Option A: SavedModel directory
model = tf.keras.models.load_model("saved_model/model")

# Option B: HDF5 file
# model = tf.keras.models.load_model("model.h5")

# Option C: from weights; create_model() is your own model-building function
# model = create_model()
# model.load_weights("./checkpoints/my_checkpoint")

# Optional: sanity-check the restored model on your own test data
# loss, acc = model.evaluate(test_images, test_labels, verbose=2)

service = Server()
service.register(model_name="mymodel", model=model, entrypoint="predict")
```

Any model from any framework works the same way. Now run `uvicorn app:service --reload` and enjoy!
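Why does every framework plug in the same way? Judging from the examples above, register stores a model under a name, and a request is answered either by calling the method named by entrypoint (such as predict) or, when no entrypoint is given, by calling the model object itself (a plain function or a PyTorch module). The toy sketch below illustrates that idea only; ToyServer and double are hypothetical and this is not Pinferencia's actual implementation.

```python
class ToyServer:
    """Hypothetical stand-in for a model server's registry (illustration only)."""

    def __init__(self):
        self._models = {}

    def register(self, model_name, model, entrypoint=None):
        # Remember the model together with the name of the method to call.
        self._models[model_name] = (model, entrypoint)

    def predict(self, model_name, data):
        model, entrypoint = self._models[model_name]
        # With an entrypoint, call that method; otherwise call the model itself.
        func = getattr(model, entrypoint) if entrypoint else model
        return func(data)


class MyModel:
    def predict(self, data):
        return sum(data)


def double(data):
    return [2 * x for x in data]


service = ToyServer()
service.register(model_name="mymodel", model=MyModel(), entrypoint="predict")
service.register(model_name="double", model=double)
```

This is why the TensorFlow example passes entrypoint="predict" (a Keras model exposes a predict method), while the PyTorch module and the Hugging Face function are registered without one and called directly.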

If you'd like to contribute, details are here