BERTweet

1.0.0

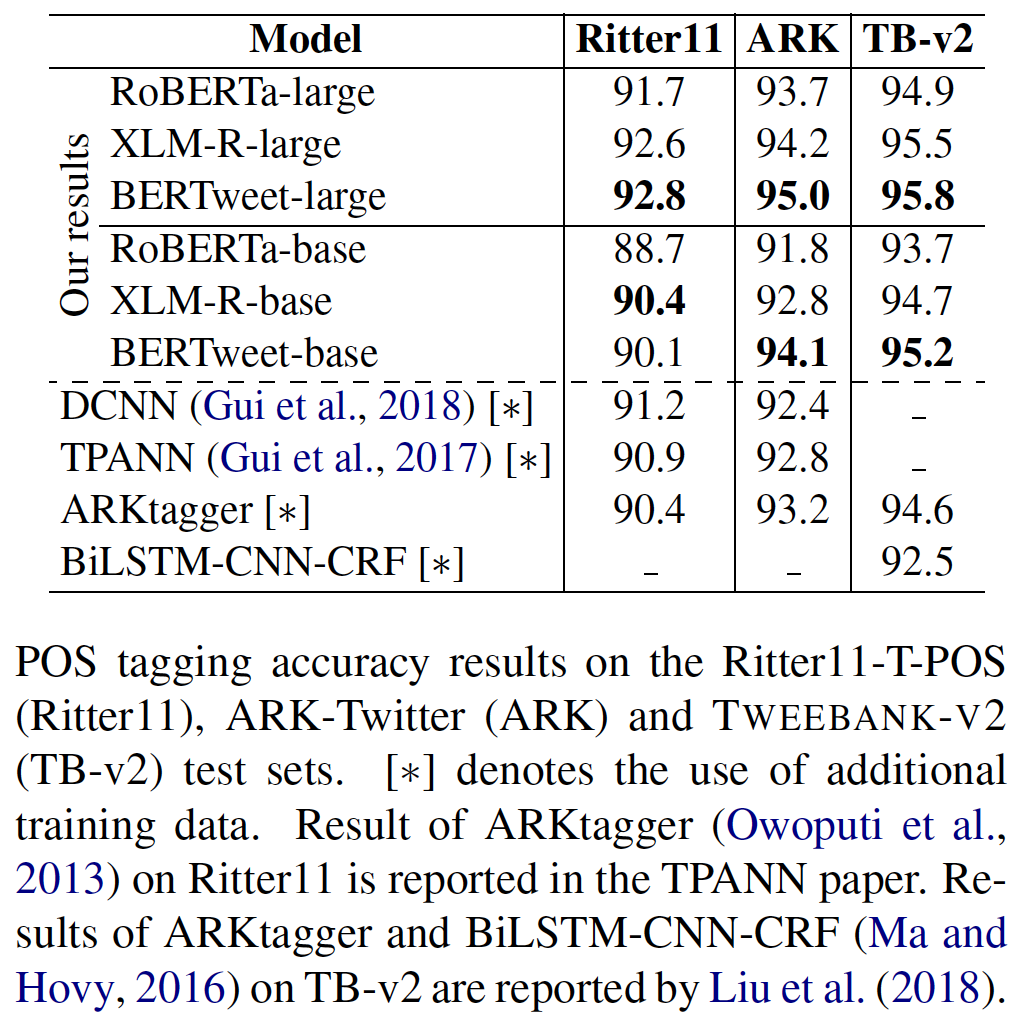

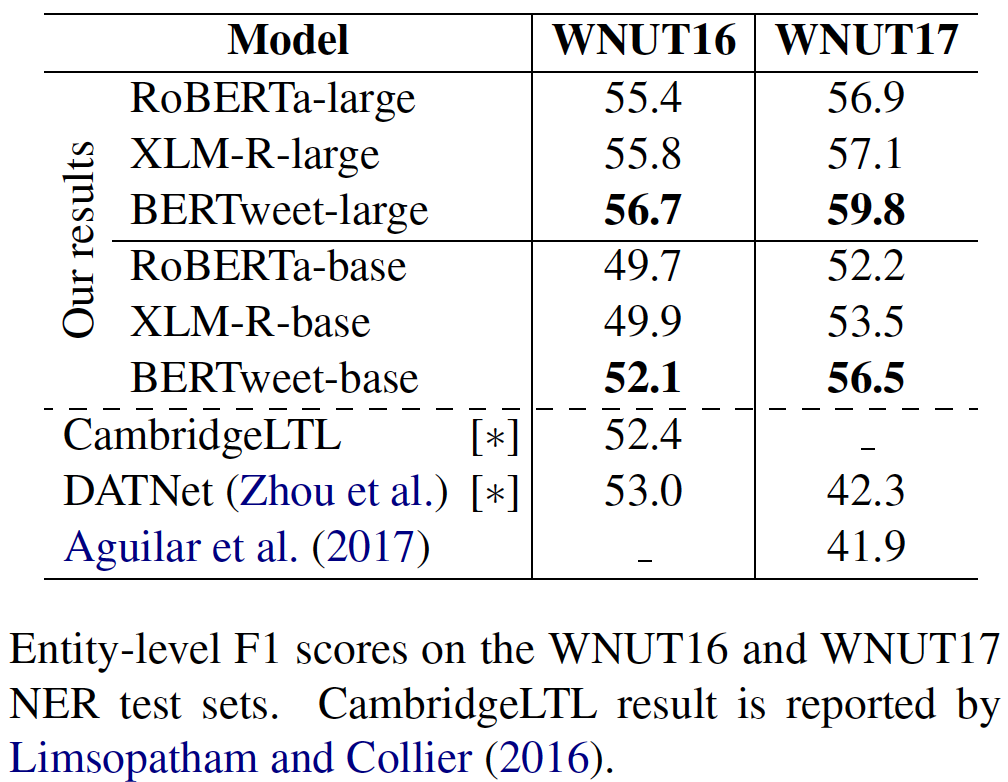

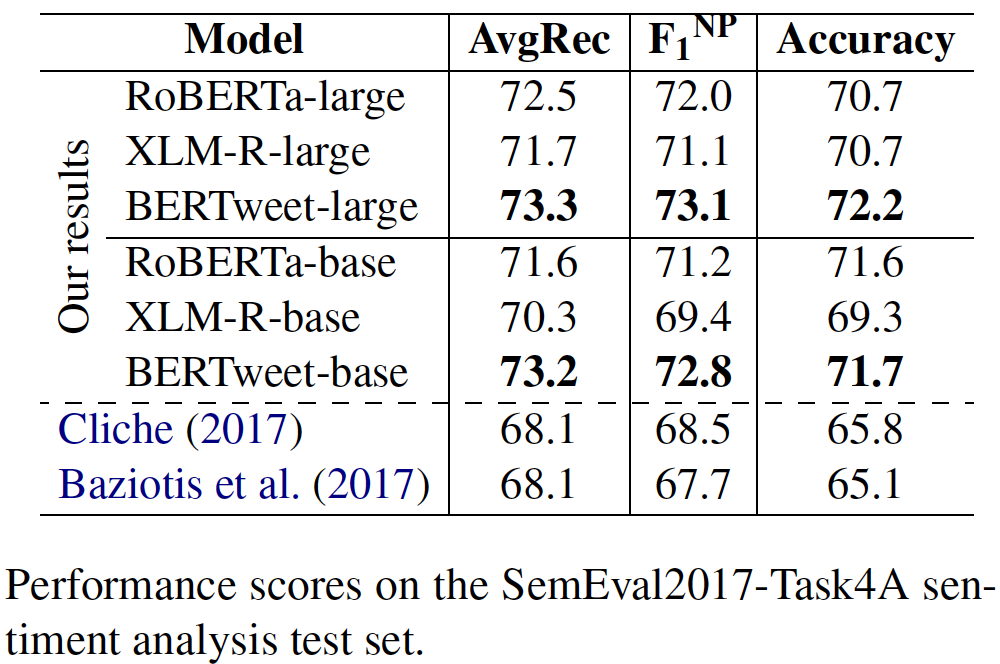

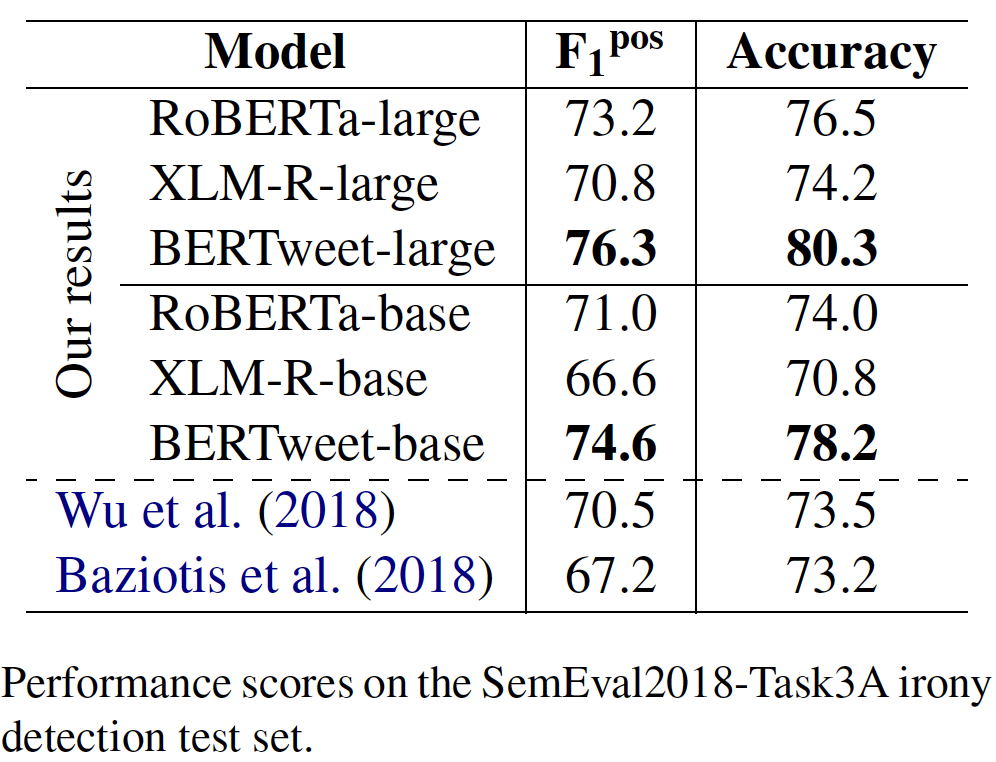

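BERTweet is the first public large-scale language model pre-trained for English Tweets. It is trained following the RoBERTa pre-training procedure. The pre-training corpus consists of 850M English Tweets (16B word tokens, about 80GB): 845M Tweets streamed from 01/2012 to 08/2019 and 5M Tweets related to the COVID-19 pandemic. The general architecture and experimental results of BERTweet can be found in our paper: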

    @inproceedings{bertweet,
        title     = {{BERTweet: A pre-trained language model for English Tweets}},
        author    = {Dat Quoc Nguyen and Thanh Vu and Anh Tuan Nguyen},
        booktitle = {Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations},
        pages     = {9--14},
        year      = {2020}
    }

Please cite our paper when BERTweet is used to help produce published results or is incorporated into other software.

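Using BERTweet with transformers

Install `transformers` with pip: `pip install transformers`, or install `transformers` from source. A slow tokenizer for BERTweet is available in the main `transformers` branch. Merging a fast tokenizer is still under discussion, as mentioned in the corresponding pull request. Users who want the fast tokenizer can install `transformers` as follows:

    git clone --single-branch --branch fast_tokenizers_BARTpho_PhoBERT_BERTweet https://github.com/datquocnguyen/transformers.git
    cd transformers
    pip3 install -e .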

Install `tokenizers` with pip: `pip3 install tokenizers`

| Model | #params | Arch. | Max length | Pre-training data |
|---|---|---|---|---|
| `vinai/bertweet-base` | 135M | base | 128 | 850M English Tweets (cased) |
| `vinai/bertweet-covid19-base-cased` | 135M | base | 128 | 23M COVID-19 English Tweets (cased) |
| `vinai/bertweet-covid19-base-uncased` | 135M | base | 128 | 23M COVID-19 English Tweets (uncased) |
| `vinai/bertweet-large` | 355M | large | 512 | 873M English Tweets (cased) |
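The Max length column matters when encoding long inputs: token ids beyond the limit have to be cut before they reach the model. The following is a minimal sketch, not part of BERTweet or `transformers`. The helper `truncate_ids` is hypothetical, and it assumes the final id is an end-of-sequence marker worth keeping.

```python
# Maximum sequence lengths copied from the table of pre-trained models.
MAX_LENGTH = {
    "vinai/bertweet-base": 128,
    "vinai/bertweet-covid19-base-cased": 128,
    "vinai/bertweet-covid19-base-uncased": 128,
    "vinai/bertweet-large": 512,
}


def truncate_ids(input_ids, model_name):
    """Hypothetical helper: cut a list of token ids to the model's limit.

    Assumption: the last id is an end-of-sequence marker, so it is kept
    and the ids just before it are dropped instead.
    """
    limit = MAX_LENGTH[model_name]
    if len(input_ids) <= limit:
        return list(input_ids)
    return list(input_ids[: limit - 1]) + [input_ids[-1]]


ids = list(range(200))  # stand-in for the output of tokenizer.encode(...)
print(len(truncate_ids(ids, "vinai/bertweet-base")))   # 128
print(len(truncate_ids(ids, "vinai/bertweet-large")))  # 200
```

When encoding with a `transformers` tokenizer, passing `truncation=True` together with `max_length` in the tokenizer call should have a similar effect without a custom helper.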

The two models `vinai/bertweet-covid19-base-cased` and `vinai/bertweet-covid19-base-uncased` are obtained by further pre-training `vinai/bertweet-base` on a corpus of 23M COVID-19 English Tweets. The example below uses `vinai/bertweet-large`.

    import torch
    from transformers import AutoModel, AutoTokenizer

    bertweet = AutoModel.from_pretrained("vinai/bertweet-large")
    tokenizer = AutoTokenizer.from_pretrained("vinai/bertweet-large")

    # INPUT TWEET IS ALREADY NORMALIZED!
    line = "DHEC confirms HTTPURL via @USER :crying_face:"

    input_ids = torch.tensor([tokenizer.encode(line)])

    with torch.no_grad():
        features = bertweet(input_ids)  # Model outputs are now tuples

    ## With TensorFlow 2.0+:
    # from transformers import TFAutoModel
    # bertweet = TFAutoModel.from_pretrained("vinai/bertweet-large")

Before applying BPE to the pre-training corpus of English Tweets, we tokenized the Tweets with TweetTokenizer from the NLTK toolkit and used the emoji package to translate emotion icons into text strings (each icon is treated as a word token). We also normalized the Tweets by converting user mentions and web/URL links into the special tokens @USER and HTTPURL, respectively. We therefore recommend applying the same pre-processing step to the raw input Tweets in BERTweet-based downstream applications.
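The mention and link conversion described above can be illustrated with the standard library alone. This is a simplified sketch, not the TweetNormalizer module: it splits on whitespace instead of using NLTK's TweetTokenizer, it skips the emoji conversion, and the regular expressions and the function name `normalize_tokens` are assumptions made for illustration.

```python
import re

# Simplified patterns (illustrative assumptions, not the TweetNormalizer rules).
MENTION = re.compile(r"^@\w+$")
URL = re.compile(r"^(https?://|www\.)\S+$", re.IGNORECASE)


def normalize_tokens(tweet: str) -> str:
    """Replace user mentions with @USER and links with HTTPURL, token by token."""
    out = []
    for token in tweet.split():
        if MENTION.match(token):
            out.append("@USER")
        elif URL.match(token):
            out.append("HTTPURL")
        else:
            out.append(token)
    return " ".join(out)


print(normalize_tokens("DHEC confirms https://example.com/article via @postandcourier"))
# DHEC confirms HTTPURL via @USER
```

Because the tokenization differs from the one used for pre-training, output from this sketch may not match the original pre-processing on real Tweets (for example, mentions followed by punctuation).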

Given raw input Tweets, users can obtain the same pre-processing output with our TweetNormalizer module.

Install the dependencies: `pip3 install nltk emoji==0.6.0`. The `emoji` version must be either 0.5.4 or 0.6.0. Newer `emoji` versions follow newer versions of the Emoji Charts and are therefore inconsistent with the version used to pre-process the pre-training Tweet corpus.

    import torch
    from transformers import AutoTokenizer
    from TweetNormalizer import normalizeTweet

    tokenizer = AutoTokenizer.from_pretrained("vinai/bertweet-large")

    line = normalizeTweet("DHEC confirms https://postandcourier.com/health/covid19/sc-has-first-two-presumptive-cases-of-coronavirus-dhec-confirms/article_bddfe4ae-5fd3-11ea-9ce4-5f495366cee6.html?utm_medium=social&utm_source=twitter&utm_campaign=user-share… via @postandcourier 😢")

    input_ids = torch.tensor([tokenizer.encode(line)])

Using BERTweet with fairseq

See the project's fairseq instructions for details.

MIT License

Copyright (c) 2020-2021 VinAI

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.