
| Name | Train Dataset | Test Dataset | Test Size | mAP | Inference Time (ms) | Params (M) | Model Link |
|---|---|---|---|---|---|---|---|
| Mobilenetv2-Yolov4 | VOC trainval (07+12) | VOC test (07) | 416 | 0.851 | 11.29 | 46.34 | args |
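To put the table's numbers into more familiar units, here is a quick back-of-the-envelope conversion: 11.29 ms per image is roughly 1000 / 11.29 ≈ 88.6 images per second, and 46.34 M parameters stored as 32-bit floats take about 46.34 × 4 ≈ 185 MB. Both figures assume batch-size-1 latency and fp32 weights, which the table does not state, and the helper names below are illustrative only.

```python
def fps_from_latency(ms_per_image: float) -> float:
    """Images per second for a given per-image latency in milliseconds."""
    return 1000.0 / ms_per_image


def fp32_weight_mb(params_millions: float) -> float:
    """Approximate weight size in MB (10**6 bytes), assuming 4-byte fp32 parameters."""
    return params_millions * 4.0


if __name__ == "__main__":
    # Values from the Mobilenetv2-Yolov4 row of the table (assumed batch size 1, fp32).
    print(f"{fps_from_latency(11.29):.1f} images/s")  # 88.6 images/s
    print(f"{fp32_weight_mb(46.34):.1f} MB")          # 185.4 MB
```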

Mobilenetv3-Yolov4 is coming soon! (You only need to change `model_type` in `config/yolov4_config.py`.)

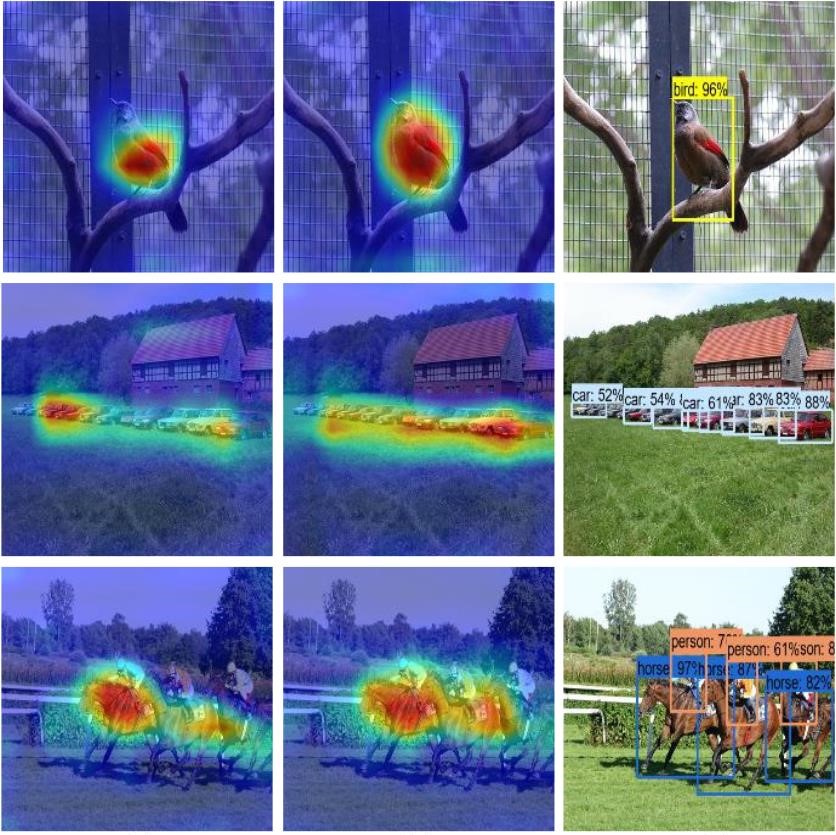

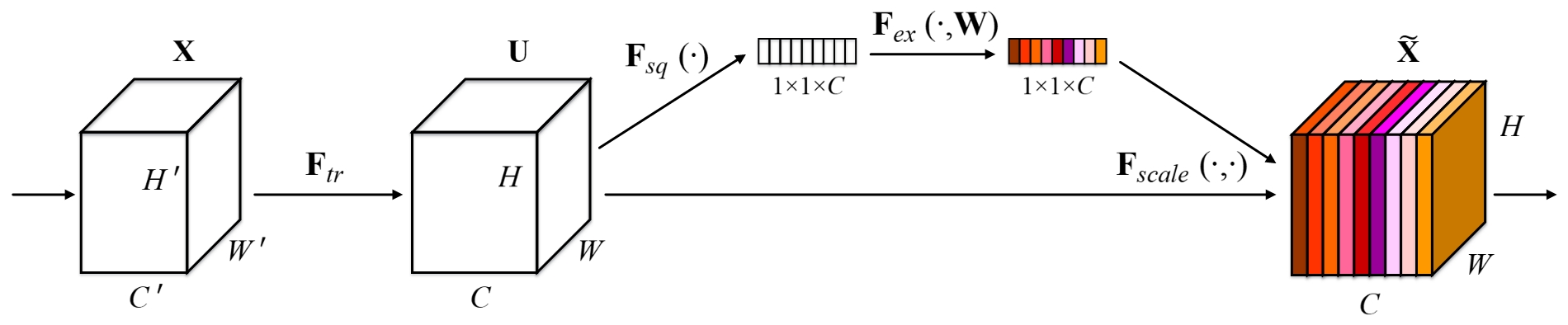

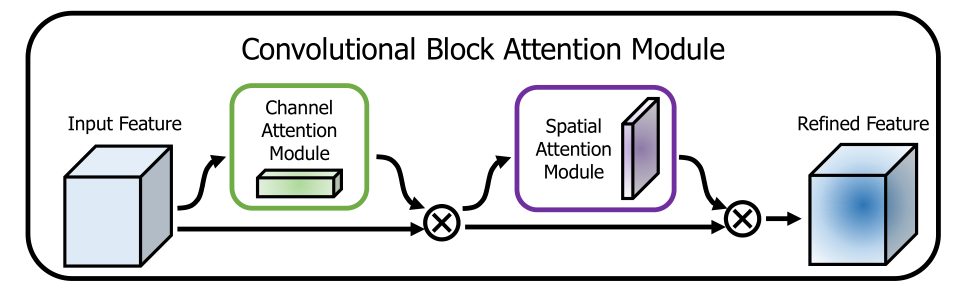

This repo adds some useful attention methods to the backbone, as illustrated in the figures.
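The attention modules themselves live in the repo's PyTorch code. As a framework-free illustration of the general idea, here is a minimal sketch of a squeeze-and-excitation (SE) block, one widely used channel-attention design. It is not taken from this repository and may differ from the modules pictured; it assumes plain nested Python lists in place of tensors and caller-supplied weights in place of learned ones, and all function names are hypothetical.

```python
import math
from typing import List

# A feature map is a nested list shaped channels x height x width.
FeatureMap = List[List[List[float]]]
Matrix = List[List[float]]


def global_avg_pool(x: FeatureMap) -> List[float]:
    """'Squeeze' step: average each channel over all of its spatial positions."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Fully connected layer without bias: returns w @ v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def se_block(x: FeatureMap, w_reduce: Matrix, w_expand: Matrix) -> FeatureMap:
    """Squeeze-and-Excitation channel attention (hypothetical, framework-free sketch).

    w_reduce has shape (C/r) x C and w_expand has shape C x (C/r). In a real
    network both are learned; here the caller supplies them.
    """
    z = global_avg_pool(x)                               # one descriptor per channel
    hidden = [max(0.0, v) for v in matvec(w_reduce, z)]  # bottleneck + ReLU
    # Sigmoid turns each logit into a gate in (0, 1), one gate per channel.
    gates = [1.0 / (1.0 + math.exp(-v)) for v in matvec(w_expand, hidden)]
    # 'Excitation' step: rescale every value in channel c by gates[c].
    return [[[g * val for val in row] for row in ch] for g, ch in zip(gates, x)]


if __name__ == "__main__":
    x = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]  # C=2, H=W=2
    out = se_block(x, w_reduce=[[1.0, 0.0]], w_expand=[[0.0], [0.0]])
    print(out)  # zero logits give gates of 0.5, so every value is halved
```

The gate lets the network emphasize informative channels and damp the rest at the cost of two small fully connected layers, which keeps this kind of block cheap enough to insert into a backbone.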

This repo is simple to use, easy to read, and uncomplicated to improve compared with others!

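Run the installation script to install all the dependencies. You need to provide the conda install path (e.g. `/anaconda3`) and the name of the created conda environment (here `YOLOv4-pytorch`).

```bash
pip3 install -r requirements.txt --user
```

Note: The install script has been tested on Ubuntu 18.04 and Windows 10. If you run into problems, check the detailed installation instructions.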

```bash
git clone https://github.com/argusswift/YOLOv4-pytorch.git
```

Update `"PROJECT_PATH"` in `config/yolov4_config.py`.

```bash
# Download the data.
cd $HOME/data
wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar
wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar
wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

# Extract the data.
tar -xvf VOCtrainval_11-May-2012.tar
tar -xvf VOCtrainval_06-Nov-2007.tar
tar -xvf VOCtest_06-Nov-2007.tar
```

For COCO, step 1: download the following data and annotations:

- 2017 Train images [118K/18GB]
- 2017 Val images [5K/1GB]
- 2017 Test images [41K/6GB]
- 2017 Train/Val annotations [241MB]

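Step 2: arrange the data into the following structure:

```text
COCO
---train
---test
---val
---annotations
```

Update `"DATA_PATH"` in `config/yolov4_config.py`, and put the weight file into `weight/`. Run the following command to start training (see `config/yolov4_config.py` for details), and set `data_type` to VOC or COCO before running the training program.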

```bash
CUDA_VISIBLE_DEVICES=0 nohup python -u train.py --weight_path weight/yolov4.weights --gpu_id 0 > nohup.log 2>&1 &
```

Training can also be resumed by adding `--resume`, which automatically loads `last.pt`:

```bash
CUDA_VISIBLE_DEVICES=0 nohup python -u train.py --weight_path weight/last.pt --gpu_id 0 --resume > nohup.log 2>&1 &
```

Modify the path to the images you want to run detection on:

```bash
DATA_TEST=/path/to/your/test_data  # your own images
```

for VOC dataset:

CUDA_VISIBLE_DEVICES=0 python3 eval_voc.py --weight_path weight/best.pt --gpu_id 0 --visiual $DATA_TEST --eval --mode det

for COCO dataset:

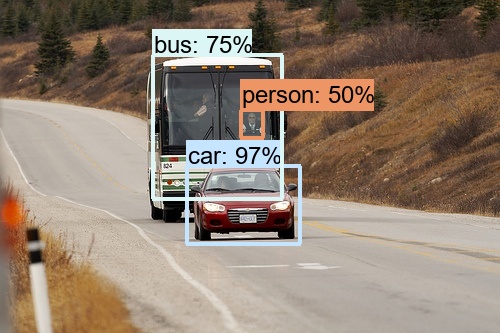

CUDA_VISIBLE_DEVICES=0 python3 eval_coco.py --weight_path weight/best.pt --gpu_id 0 --visiual $DATA_TEST --eval --mode det图像可以在output/中看到。您可以看到如下的图片:

For video, adjust the weight, video, and output paths as needed:

```bash
CUDA_VISIBLE_DEVICES=0 python3 video_test.py --weight_path best.pt --gpu_id 0 --video_path video.mp4 --output_dir /path/to/output_dir
```

Modify your evaluation dataset path: `DATA_PATH=/path/to/your/test_data` (your own images).

For VOC dataset:

```bash
CUDA_VISIBLE_DEVICES=0 python3 eval_voc.py --weight_path weight/best.pt --gpu_id 0 --visiual $DATA_TEST --eval --mode val
```
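`--mode val` reports mAP on the VOC test set. For orientation, here is a plain-Python sketch of the usual PASCAL VOC recipe for a single class: sort detections by confidence, count a detection as a true positive when its best-overlapping ground-truth box has IoU above 0.5 and has not already been matched, then integrate the resulting precision-recall curve with all-point interpolation (the VOC2010+ rule). Whether `eval_voc.py` uses this variant or the 11-point VOC2007 metric is an assumption; the sketch also skips "difficult" objects and the devkit's +1 pixel convention, and all function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (continuous coordinates)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """All-point interpolated AP: area under the precision envelope of the P-R curve."""
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Replace each precision with the maximum precision at any higher recall.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangles: recall step times interpolated precision.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def class_ap(
    detections: List[Tuple[str, float, Box]],  # (image_id, score, box) for one class
    ground_truths: Dict[str, List[Box]],       # image_id -> ground-truth boxes of that class
    iou_thresh: float = 0.5,
) -> float:
    """VOC-style AP for one class; 'difficult' objects are not handled."""
    npos = sum(len(boxes) for boxes in ground_truths.values())
    matched = {img: [False] * len(boxes) for img, boxes in ground_truths.items()}
    recall, precision = [], []
    tp = fp = 0
    # Visit detections from most to least confident.
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # A hit must exceed the IoU threshold and claim a not-yet-matched box.
        if best_j >= 0 and best_iou > iou_thresh and not matched[img][best_j]:
            matched[img][best_j] = True
            tp += 1
        else:
            fp += 1  # low overlap, or a duplicate of an already-matched box
        recall.append(tp / npos if npos else 0.0)
        precision.append(tp / (tp + fp))
    return average_precision(recall, precision)


if __name__ == "__main__":
    gts = {"img1": [(0.0, 0.0, 10.0, 10.0)], "img2": [(0.0, 0.0, 10.0, 10.0)]}
    dets = [
        ("img1", 0.9, (0.0, 0.0, 10.0, 10.0)),    # true positive
        ("img2", 0.8, (50.0, 50.0, 60.0, 60.0)),  # false positive (no overlap)
        ("img2", 0.7, (1.0, 1.0, 10.0, 10.0)),    # true positive, IoU = 0.81
    ]
    print(round(class_ap(dets, gts), 4))  # 0.8333
```

Averaging `class_ap` over the 20 VOC classes gives mAP. The same IoU test underlies the COCO numbers, where AP@[IoU = 0.50:0.95] averages AP over the IoU thresholds 0.50, 0.55, ..., 0.95.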

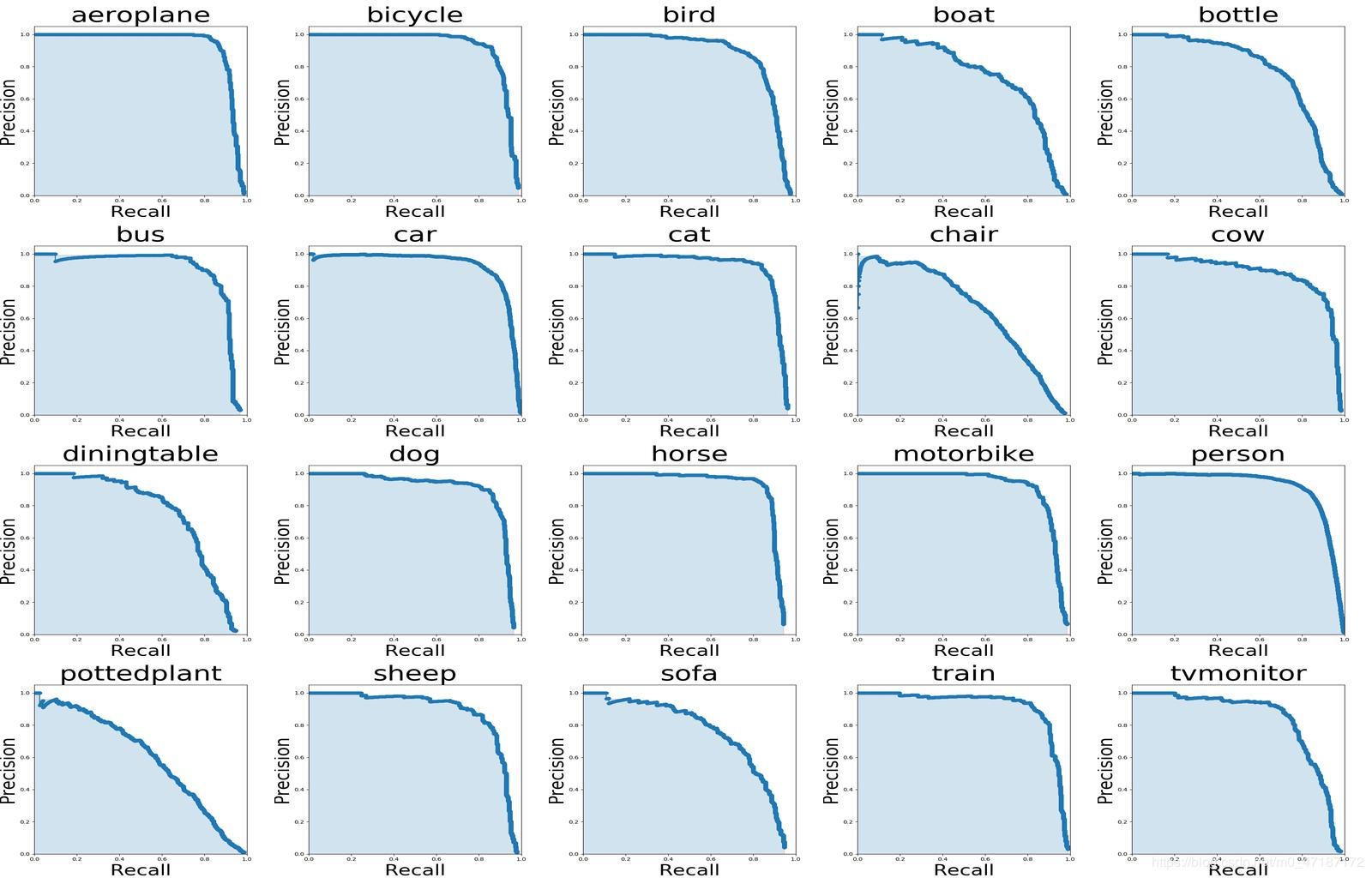

If you want to see the picture above, use the following commands:

```bash
# To get ground truths of your dataset
python3 utils/get_gt_txt.py
# To plot P-R curve and calculate mean average precision
python3 utils/get_map.py
```

For COCO, modify your evaluation dataset path: `DATA_PATH=/path/to/your/test_data` (your own images).

```bash
CUDA_VISIBLE_DEVICES=0 python3 eval_coco.py --weight_path weight/best.pt --gpu_id 0 --visiual $DATA_TEST --eval --mode val
```

Output:

```text
type=bbox
Running per image evaluation... DONE (t=0.34s).
Accumulating evaluation results... DONE (t=0.08s).
Average Precision (AP) @[ IoU = 0.50:0.95 | area = all | maxDets = 100 ] = 0.438
Average Precision (AP) @[ IoU = 0.50 | area = all | maxDets = 100 ] = 0.607
Average Precision (AP) @[ IoU = 0.75 | area = all | maxDets = 100 ] = 0.469
Average Precision (AP) @[ IoU = 0.50:0.95 | area = small | maxDets = 100 ] = 0.253
Average Precision (AP) @[ IoU = 0.50:0.95 | area = medium | maxDets = 100 ] = 0.486
Average Precision (AP) @[ IoU = 0.50:0.95 | area = large | maxDets = 100 ] = 0.567
Average Recall (AR) @[ IoU = 0.50:0.95 | area = all | maxDets = 1 ] = 0.342
Average Recall (AR) @[ IoU = 0.50:0.95 | area = all | maxDets = 10 ] = 0.571
Average Recall (AR) @[ IoU = 0.50:0.95 | area = all | maxDets = 100 ] = 0.632
Average Recall (AR) @[ IoU = 0.50:0.95 | area = small | maxDets = 100 ] = 0.458
Average Recall (AR) @[ IoU = 0.50:0.95 | area = medium | maxDets = 100 ] = 0.691
Average Recall (AR) @[ IoU = 0.50:0.95 | area = large | maxDets = 100 ] = 0.790
```

To check the model size:

```bash
python3 utils/modelsize.py
```

Set `ASWATT = True` in `val_voc.py` and you will see the heatmaps emerging from the network's output:

For VOC dataset:

```bash
CUDA_VISIBLE_DEVICES=0 python3 eval_voc.py --weight_path weight/best.pt --gpu_id 0 --visiual $DATA_TEST --eval
```

For COCO dataset:

```bash
CUDA_VISIBLE_DEVICES=0 python3 eval_coco.py --weight_path weight/best.pt --gpu_id 0 --visiual $DATA_TEST --eval
```

The heatmaps can be seen in `output/`, like this: