Chinese artificial intelligence startup DeepSeek has quietly released its latest large language model, DeepSeek-V3-0324, drawing wide attention across the AI industry. The model appeared on the AI repository Hugging Face as a 641GB download, in keeping with DeepSeek's low-key style: no large-scale publicity, just an empty README file and the model weights.

The model is released under the MIT license, which permits free commercial use, and it can run directly on high-end consumer hardware such as an Apple Mac Studio with the M3 Ultra chip. AI researcher Awni Hannun reported on social media that a 4-bit quantized version of DeepSeek-V3-0324 runs at more than 20 tokens per second on a 512GB M3 Ultra. The Mac Studio is expensive, but being able to run a model of this scale locally breaks the previous dependence of top-tier AI on data centers.

DeepSeek-V3-0324 uses a Mixture-of-Experts (MoE) architecture, which activates only about 37 billion of its 685 billion parameters for each token, greatly improving efficiency. The model also incorporates Multi-head Latent Attention (MLA) and Multi-Token Prediction (MTP). MLA improves the model's handling of context in long texts, while MTP lets the model generate multiple tokens per step, increasing output speed by nearly 80%. The 4-bit quantized version reduces storage requirements to 352GB, which makes it feasible to run on high-end consumer hardware.
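The figures above can be checked with a little arithmetic, and the idea of activating only a few experts can be illustrated with a toy router. The following is a minimal sketch, not DeepSeek's implementation: the parameter counts come from the article, while `route_top_k`, the expert count, and the value of `k` are hypothetical and show only generic top-k gating.

```python
import math

TOTAL_PARAMS = 685e9   # total parameters, as reported in the article
ACTIVE_PARAMS = 37e9   # parameters activated per token, as reported in the article


def active_fraction(active: float = ACTIVE_PARAMS, total: float = TOTAL_PARAMS) -> float:
    """Share of the model's parameters that are used for a single token."""
    return active / total


def weight_size_gb(params: float, bits_per_param: float) -> float:
    """Raw weight size in decimal GB, ignoring any quantization metadata."""
    return params * bits_per_param / 8 / 1e9


def route_top_k(gate_logits: list[float], k: int) -> dict[int, float]:
    """Toy MoE router (hypothetical): keep the k highest-scoring experts
    and softmax-normalise their scores into mixing weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    norm = sum(exps.values())
    return {i: value / norm for i, value in exps.items()}


if __name__ == "__main__":
    print(f"active share per token: {active_fraction():.1%}")
    print(f"raw 4-bit weights: {weight_size_gb(TOTAL_PARAMS, 4):.1f} GB")
    # Four imaginary experts, two of which are selected for this token.
    print(route_top_k([0.1, 2.0, -1.0, 1.5], k=2))
```

The raw 4-bit estimate comes out somewhat below the 352GB quoted above. One plausible explanation, offered here as an assumption, is that quantized checkpoints also store scaling factors and keep some tensors at higher precision.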

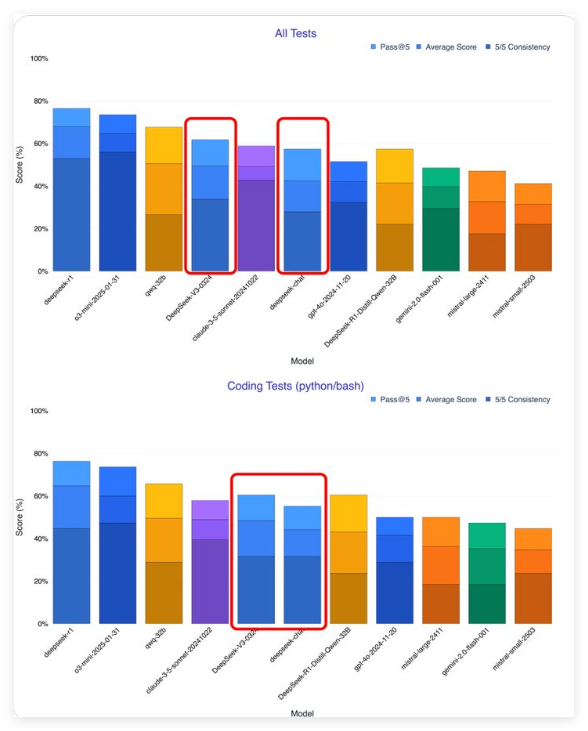

According to early testers, DeepSeek-V3-0324 is a significant improvement over the previous version. AI researcher Xeophon claims that the model has made a huge leap across all test metrics, surpassing Anthropic's Claude Sonnet 3.5 to become the best non-reasoning model. Moreover, unlike Sonnet, which requires a subscription, the weights of DeepSeek-V3-0324 can be downloaded for free.

DeepSeek's open-source release strategy contrasts sharply with that of Western AI companies. US companies such as OpenAI and Anthropic keep their models behind paywalls, while Chinese AI companies increasingly favor permissive open-source licenses. This strategy has accelerated the development of China's AI ecosystem, and technology giants such as Baidu, Alibaba and Tencent have followed suit by releasing open-source AI models. Facing restrictions on Nvidia chips, Chinese companies have turned a disadvantage into a competitive advantage by emphasizing efficiency and optimization.

DeepSeek-V3-0324 is likely to serve as the basis for the upcoming DeepSeek-R2 reasoning model. Current reasoning models are computationally demanding. If DeepSeek-R2 performs well, it will pose a direct challenge to OpenAI's rumored GPT-5.

Users and developers who want to try DeepSeek-V3-0324 can download the full model weights from Hugging Face, although the files are large and demand substantial storage and computing resources. Cloud services are another option: OpenRouter provides free API access and a friendly chat interface, and DeepSeek's own chat interface may also have been updated to the new version. Developers can also integrate the model through inference service providers such as Hyperbolic Labs.
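For developers taking the API route, a request might look roughly like the sketch below. It uses only the Python standard library. The endpoint URL and the model identifier are assumptions that do not come from the article and should be checked against the provider's documentation. The helper names `build_chat_request` and `send` are hypothetical.

```python
import json
import urllib.request

# Assumptions, not taken from the article: verify both against the provider's docs.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "deepseek/deepseek-chat-v3-0324"


def build_chat_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first reply, assuming an
    OpenAI-compatible response body."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Building the request separately from sending it makes it possible to inspect the payload without a network connection or an API key. A real call would be `send(build_chat_request("Hello", api_key))` with a valid key.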

It is worth noting that DeepSeek-V3-0324's communication style has changed, shifting from a human-like conversational tone to a more formal, technical one. The shift is intended to suit professional and technical use cases, but it may reduce the model's appeal in consumer-facing applications.

DeepSeek's open-source strategy is reshaping the global AI landscape. China's AI gap with the United States was previously 1-2 years, but it has now narrowed significantly to 3-6 months, and in some areas China has caught up. Just as Android gained global dominance through open source, open-source AI models are expected to stand out in the competition through broad adoption and the collective innovation of developers, promoting the wider application of AI technology.