On February 24, 2025, the 360 Intelligent Brain Team and Peking University officially released Tiny-R1-32B-Preview, a mid-sized reasoning model they developed jointly. With only about 5% of the parameters of DeepSeek-R1-671B, the model comes close to the performance of the full DeepSeek-R1, opening up new possibilities for efficient reasoning.

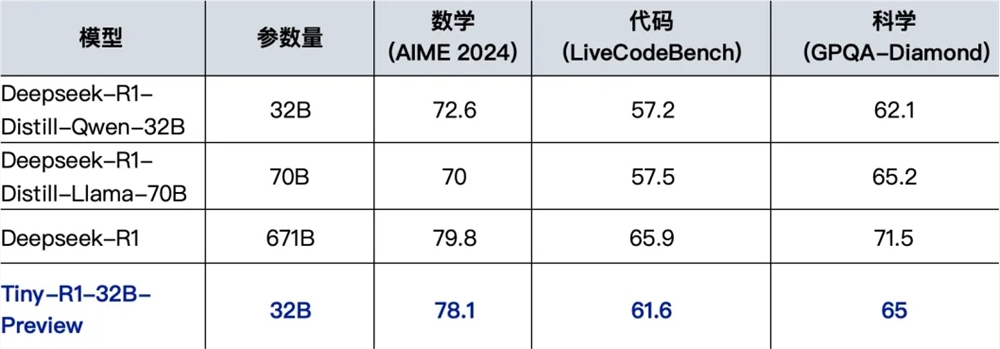

In benchmark tests, Tiny-R1-32B-Preview performed strongly. In mathematics, it scored 78.1 on AIME 2024. That is only 1.7 points below the 79.8 of the original R1 model and well ahead of the 70.0 scored by DeepSeek-R1-Distill-Llama-70B. In programming and science, it scored 61.6 on LiveCodeBench and 65.0 on GPQA-Diamond, respectively, surpassing the best current open-source 70B model. These results show that the model remains competitive on accuracy while substantially lowering inference costs.
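As a quick check of the figures above, the short sketch below recomputes the AIME 2024 score gaps and the parameter ratio. The scores are the ones reported in this article. The parameter counts are an assumption: they are the nominal sizes implied by the model names (32B and 671B), not exact counts.

```python
# AIME 2024 scores as reported in the article.
aime_2024 = {
    "Tiny-R1-32B-Preview": 78.1,
    "DeepSeek-R1": 79.8,
    "DeepSeek-R1-Distill-Llama-70B": 70.0,
}

# Assumption: nominal parameter counts (in billions) taken from the model names.
TINY_R1_PARAMS_B = 32
DEEPSEEK_R1_PARAMS_B = 671

# Points by which Tiny-R1 trails the full R1 model.
gap_to_r1 = round(aime_2024["DeepSeek-R1"] - aime_2024["Tiny-R1-32B-Preview"], 1)

# Points by which Tiny-R1 leads the 70B distilled model.
lead_over_70b = round(
    aime_2024["Tiny-R1-32B-Preview"] - aime_2024["DeepSeek-R1-Distill-Llama-70B"], 1
)

# Tiny-R1's nominal parameter count as a percentage of R1's.
param_pct = round(TINY_R1_PARAMS_B / DEEPSEEK_R1_PARAMS_B * 100, 1)

print(f"Gap to DeepSeek-R1: {gap_to_r1} points")
print(f"Lead over DeepSeek-R1-Distill-Llama-70B: {lead_over_70b} points")
print(f"Parameter share: {param_pct}%")
```

The parameter share comes out to roughly 4.8%, which matches the "about 5%" figure in the announcement.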

The research team credits this result to its "divide-and-conquer, then merge" strategy. The team first used DeepSeek-R1 to generate large volumes of domain-specific data. With that data, they trained separate specialist models for three domains: mathematics, programming, and science. They then merged the specialists with Arcee's Mergekit tool. The merged model exceeded the performance ceiling of any single specialist and performed in a balanced way across all three tasks. Beyond raising overall performance, this approach suggests a new direction for developing reasoning models.
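The article does not say which merge method the team configured in Mergekit. Mergekit supports several methods, including linear averaging, SLERP, and TIES. To illustrate the general idea only, the sketch below assumes the simplest of these, a linear merge. It takes a weighted average of same-named parameters across the three specialist models. The toy "models" are hypothetical dictionaries of plain Python lists that stand in for real weight tensors. The weights are illustrative and are not the team's settings.

```python
from typing import Dict, List

# Hypothetical stand-in for a model checkpoint: parameter name -> flat list of weights.
StateDict = Dict[str, List[float]]


def linear_merge(models: List[StateDict], weights: List[float]) -> StateDict:
    """Weighted average of same-named, same-shaped parameters (a 'linear' merge).

    This is an illustrative sketch, not the Tiny-R1 team's actual merge recipe.
    """
    if not models or len(models) != len(weights):
        raise ValueError("need at least one model and exactly one weight per model")
    # All models must share the same parameter names (same architecture).
    names = set(models[0])
    if any(set(m) != names for m in models[1:]):
        raise ValueError("models have different parameter names")

    # Normalize the weights so they sum to 1.
    total = sum(weights)
    norm = [w / total for w in weights]

    merged: StateDict = {}
    for name in models[0]:
        tensors = [m[name] for m in models]
        size = len(tensors[0])
        if any(len(t) != size for t in tensors):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise weighted sum across the models.
        merged[name] = [sum(w * t[i] for w, t in zip(norm, tensors)) for i in range(size)]
    return merged


# Hypothetical toy specialists for math, code, and science.
math_model = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
code_model = {"layer.weight": [3.0, 2.0], "layer.bias": [3.0]}
science_model = {"layer.weight": [2.0, 2.0], "layer.bias": [6.0]}

merged = linear_merge([math_model, code_model, science_model], [1.0, 1.0, 1.0])
print(merged)
```

With equal weights, each merged parameter is simply the mean of the three specialists' values. Other Mergekit methods such as TIES add steps to reduce interference between models, so the exact results would differ.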

The joint research team from 360 Intelligent Brain and Peking University stressed that Tiny-R1-32B-Preview owes much of its success to the open-source community. The model builds on three things: distillation from DeepSeek-R1, incremental training on top of DeepSeek-R1-Distill-32B, and model-merging techniques. Together, these laid the foundation for the model's development.

To make the technology broadly accessible, the team has committed to releasing the complete model repository. The release will include a detailed technical report, the training code, and part of the datasets. The repository is now live on Hugging Face at https://huggingface.co/qihoo360/TinyR1-32B-Preview. This open release gives the AI research community valuable resources and should help advance related work.