Google's research team recently released TransNAR, a model that combines the Transformer architecture with neural algorithmic reasoning (NAR) and significantly improves performance on complex algorithmic tasks. It addresses the limitations of standard Transformers in algorithmic reasoning and offers a new approach to processing structured data.

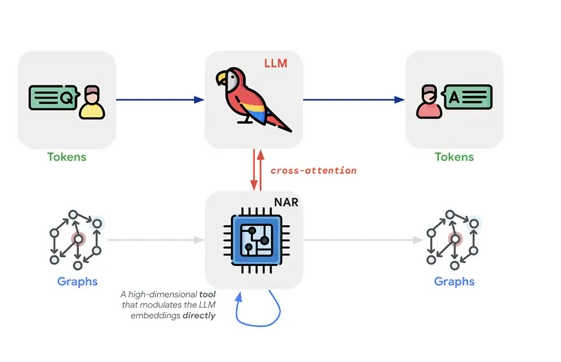

Standard Transformers often struggle to capture structured data effectively when solving algorithmic problems, whereas NAR handles such data well and generalizes strongly. TransNAR introduces a cross-attention mechanism that combines the Transformer's text processing with NAR's graph representations. The model can therefore process a text description and the corresponding graph structure at the same time, which gives it a clear advantage on algorithmic reasoning tasks.
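The cross-attention idea can be sketched in a few lines. This is a minimal single-head NumPy illustration, not the TransNAR implementation: the function name `cross_attend`, the weight matrices, the dimensions, and the residual connection are all assumptions made for the example. Token embeddings act as queries, and node embeddings supply the keys and values.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(tokens, nodes, w_q, w_k, w_v):
    """Hypothetical single-head cross-attention.

    tokens: (T, d_model) token embeddings from the text stream
    nodes:  (N, d_node)  node embeddings from the graph stream
    Returns updated token embeddings (T, d_model) and weights (T, N).
    """
    q = tokens @ w_q                          # queries come from tokens
    k = nodes @ w_k                           # keys come from graph nodes
    v = nodes @ w_v                           # values come from graph nodes
    scores = q @ k.T / np.sqrt(q.shape[-1])   # scaled dot-product scores
    weights = softmax(scores, axis=-1)        # each token attends over all nodes
    return tokens + weights @ v, weights      # residual update (an assumption)

# Random stand-in data; sizes are arbitrary illustration values.
rng = np.random.default_rng(0)
T, N, d_model, d_node, d_k = 5, 4, 8, 6, 8
tokens = rng.normal(size=(T, d_model))
nodes = rng.normal(size=(N, d_node))
w_q = rng.normal(size=(d_model, d_k))
w_k = rng.normal(size=(d_node, d_k))
w_v = rng.normal(size=(d_node, d_model))

updated, weights = cross_attend(tokens, nodes, w_q, w_k, w_v)
print(updated.shape, weights.shape)
```

Each row of `weights` sums to 1, so every token receives a weighted mix of node information while keeping its original dimensionality.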

TransNAR's training strategy is also distinctive. In the pre-training phase, the NAR module is trained independently on multiple algorithmic tasks, learning their underlying logic and computation steps. This gives the model a solid algorithmic foundation. In the fine-tuning phase, TransNAR receives two inputs, a text description and a graph representation, and uses cross-attention over the node embeddings from the pre-trained NAR module to adjust its own token embeddings dynamically. This multi-stage training approach helps the model adapt to complex algorithmic tasks.
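One way to picture the two-phase setup is a parameter update that skips the pre-trained NAR weights during fine-tuning. The toy sketch below assumes the NAR module is kept frozen in that phase, which the text above does not state explicitly. The group names, the `sgd_step` helper, and the placeholder gradients are hypothetical.

```python
import numpy as np

def sgd_step(params, grads, frozen, lr=0.1):
    """Return updated parameters; groups listed in `frozen` are left unchanged."""
    return {
        name: value if name in frozen else value - lr * grads[name]
        for name, value in params.items()
    }

# Stand-in parameter groups (real models hold many tensors per group).
params = {
    "nar": np.ones(3),              # pre-trained NAR weights (assumed frozen)
    "transformer": np.ones(3),      # Transformer weights
    "cross_attention": np.ones(3),  # cross-attention weights
}
grads = {name: np.full(3, 0.5) for name in params}  # placeholder gradients

new_params = sgd_step(params, grads, frozen={"nar"})
print(new_params["nar"], new_params["transformer"])
```

Only the Transformer and cross-attention groups move. The NAR group keeps the values it had after pre-training.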

In testing, TransNAR substantially outperforms the baseline Transformer, especially in out-of-distribution generalization, where it shows an improvement of more than 20%. This result demonstrates the model's capability and supports its future use across a wider range of algorithmic tasks.

Key points:

⭐ Google released TransNAR, a model that combines the Transformer with NAR and significantly improves algorithmic reasoning.

⭐ TransNAR uses a cross-attention mechanism to integrate text processing with graph representations and performs strongly on complex algorithmic tasks.

⭐ Its multi-stage training strategy lets TransNAR significantly outperform standard Transformers on algorithmic tasks, especially in generalization.