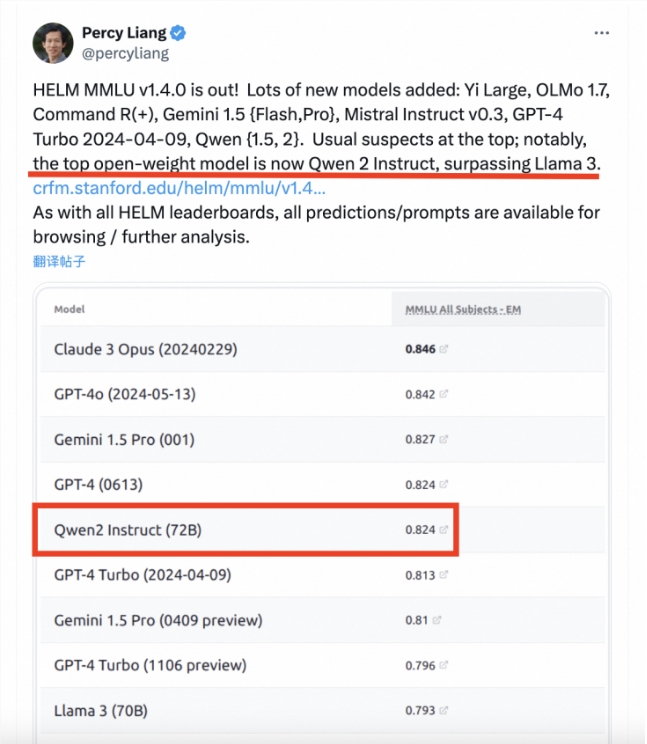

Stanford University recently published the latest results of its HELM MMLU leaderboard, which have drawn wide attention across the industry. With strict evaluation standards and a transparent evaluation process, the leaderboard offers a reliable reference for comparing the performance of large models. In the latest results, Alibaba's Tongyi Qianwen Qwen2-72B model stood out among the many models evaluated, a sign of the significant progress China has made in large model technology.

According to the latest HELM MMLU results, Alibaba's Tongyi Qianwen Qwen2-72B has overtaken Llama3-70B in the rankings to become the best-performing open-source large model. Percy Liang, director of Stanford's Center for Research on Foundation Models (CRFM), highlighted this result in a post.

MMLU (Massive Multitask Language Understanding) is one of the most influential benchmarks for large models. It covers 57 subjects, including elementary mathematics, computer science, law, and history, and is designed to test a model's world knowledge and problem-solving ability. In practice, however, the MMLU scores reported for different models are often inconsistent and hard to compare, mainly because developers use nonstandard prompting techniques and do not uniformly adopt an open-source evaluation framework. HELM MMLU addresses this by evaluating every model with the same standardized prompts in the open-source HELM framework.
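To make the comparability point concrete, here is a minimal Python sketch, assuming MMLU-style four-option multiple-choice questions. The `Question` record, the prompt template, and the `score` function are hypothetical illustrations, not HELM's actual code or prompts. Every question is rendered through one fixed template, and each model is plugged in only as a generic `predict` callable, so score differences come from the models rather than from how each one was prompted.

```python
from dataclasses import dataclass
from statistics import mean

LETTERS = "ABCD"

# Hypothetical record for one MMLU-style item (four options, one correct letter).
@dataclass
class Question:
    subject: str
    question: str
    choices: list[str]
    answer: str  # correct letter, e.g. "B"

def format_prompt(q: Question) -> str:
    """Render every question with the same fixed template (an assumed format)."""
    subject = q.subject.replace("_", " ")
    lines = [f"The following is a multiple choice question about {subject}.", "", q.question]
    for letter, choice in zip(LETTERS, q.choices):
        lines.append(f"{letter}. {choice}")
    lines.append("Answer:")
    return "\n".join(lines)

def score(questions, predict):
    """Return per-subject accuracy and the unweighted mean across subjects.

    `predict` is any callable that maps a prompt string to a letter A-D.
    """
    by_subject: dict[str, list[bool]] = {}
    for q in questions:
        # Keep only the first character of the model's reply as its chosen letter.
        pred = predict(format_prompt(q)).strip().upper()[:1]
        by_subject.setdefault(q.subject, []).append(pred == q.answer)
    per_subject = {s: sum(hits) / len(hits) for s, hits in by_subject.items()}
    return per_subject, mean(per_subject.values())

# Usage with toy questions and a trivial "model" that always answers "A".
qs = [
    Question("high_school_mathematics", "What is 2 + 3?", ["5", "6", "4", "7"], "A"),
    Question("high_school_mathematics", "What is 3 * 3?", ["6", "8", "9", "12"], "C"),
    Question("computer_science", "Which structure is FIFO?", ["Queue", "Stack", "Heap", "Tree"], "A"),
]
per_subject, overall = score(qs, lambda prompt: "A")
print(per_subject)  # {'high_school_mathematics': 0.5, 'computer_science': 1.0}
print(overall)      # 0.75
```

Because only `predict` changes between models, every model sees identical prompts. The sketch averages accuracy within each subject and then across subjects; averaging over all questions instead is an equally plausible choice, and it is one more detail that has to be held constant for scores to be comparable.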

Qwen2-72B's strong showing reflects Alibaba's technical strength in AI and gives fresh momentum to the development of large models in China. I believe that more Chinese large models will demonstrate their strength on the international stage in the future.