Hugging Face has made a major update to its Open LLM Leaderboard. The move responds to the slowing pace of performance gains in large language models (LLMs) and aims to give the open source artificial intelligence community more comprehensive and rigorous evaluation standards. This is not a minor adjustment. It overhauls both the evaluation metrics and the testing methods, so that rankings better reflect how LLMs perform in real applications instead of resting on a single headline score. The new rankings are expected to shape the direction of open source AI development and push models toward greater practicality and reliability.

Hugging Face has updated its Open LLM Leaderboard, a change likely to have a significant impact on open source AI development. It arrives at a critical moment, as researchers and companies confront what looks like a plateau in the performance of large language models (LLMs).

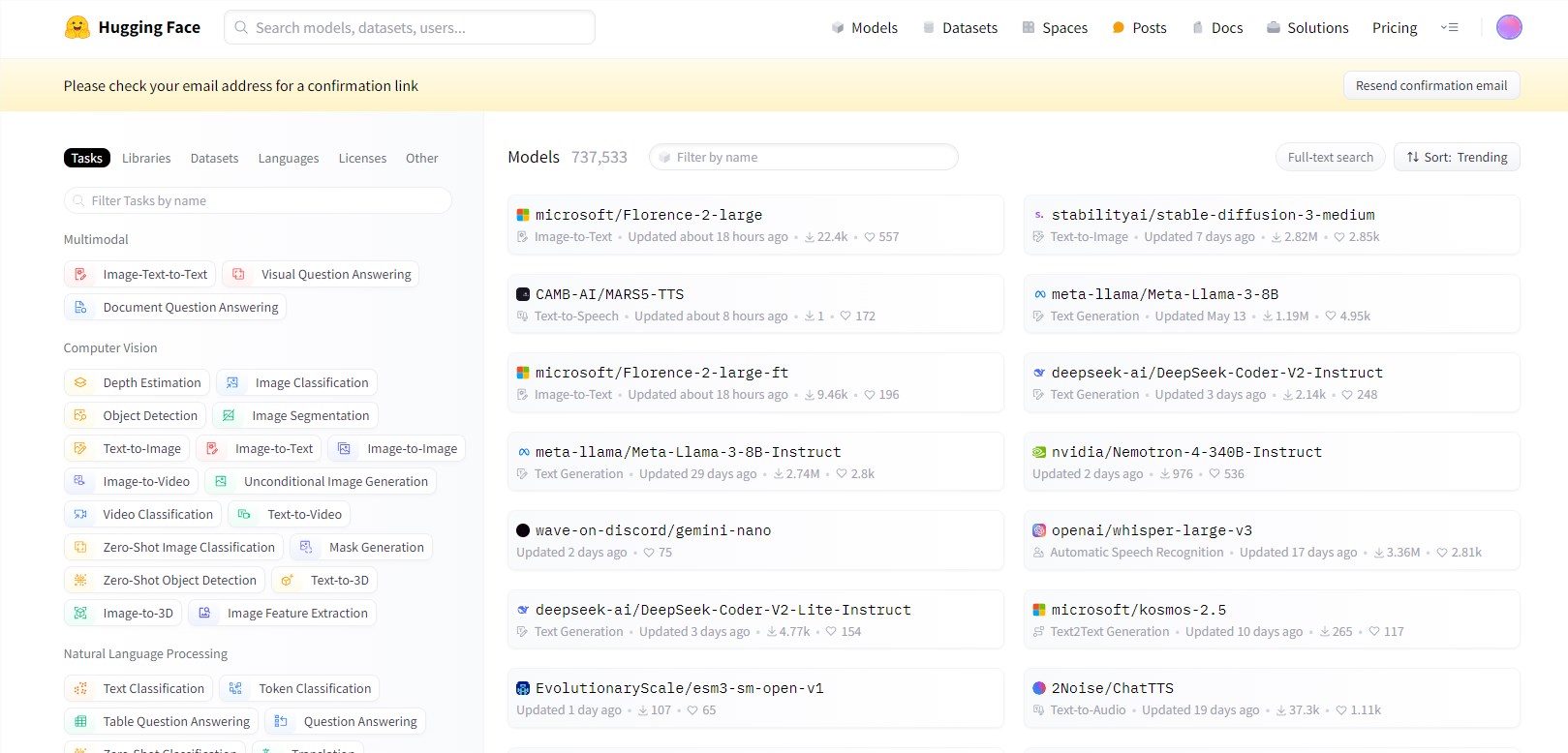

The Open LLM Leaderboard, a benchmark for tracking progress in AI language models, has been redesigned to provide more rigorous and granular evaluation. The redesign comes as the AI community observes a slowdown in breakthrough improvements despite a steady stream of new model releases.

The updated leaderboard introduces more sophisticated evaluation metrics and detailed analysis that helps users understand which tests matter most for specific applications. This reflects a growing recognition in the AI community that headline performance numbers alone cannot capture a model's usefulness in the real world. Key changes to the leaderboard include:

- Introducing more challenging datasets to test advanced reasoning and the application of real-world knowledge.

- Implementing multi-turn dialogue evaluation to assess a model's conversational capabilities more thoroughly.

- Expanding non-English language assessments to better represent AI capabilities worldwide.

- Adding tests for instruction following and few-shot learning, which are increasingly important in practical applications.

These updates are designed to create a more comprehensive and challenging set of benchmarks, better distinguish the best-performing models, and identify areas for improvement.

Highlights:

⭐ Hugging Face has updated the Open LLM Leaderboard to provide more rigorous and detailed evaluation in response to slowing performance gains in large language models.

⭐ The updates introduce more challenging datasets, add multi-turn conversational assessments, and expand non-English language evaluation, creating a more comprehensive and demanding set of benchmarks.

⭐ LMSYS Chatbot Arena complements the Open LLM Leaderboard by emphasizing real-time, dynamic evaluation, bringing a different perspective to how AI models are assessed.

All in all, Hugging Face's Open LLM Leaderboard update marks an important upgrade in how AI models are evaluated. It should foster healthier and faster progress in the open source LLM field and, ultimately, encourage AI technology that is better suited to practical applications.