This repo is

(1) a PyTorch library that provides classical knowledge distillation algorithms on mainstream CV benchmarks,

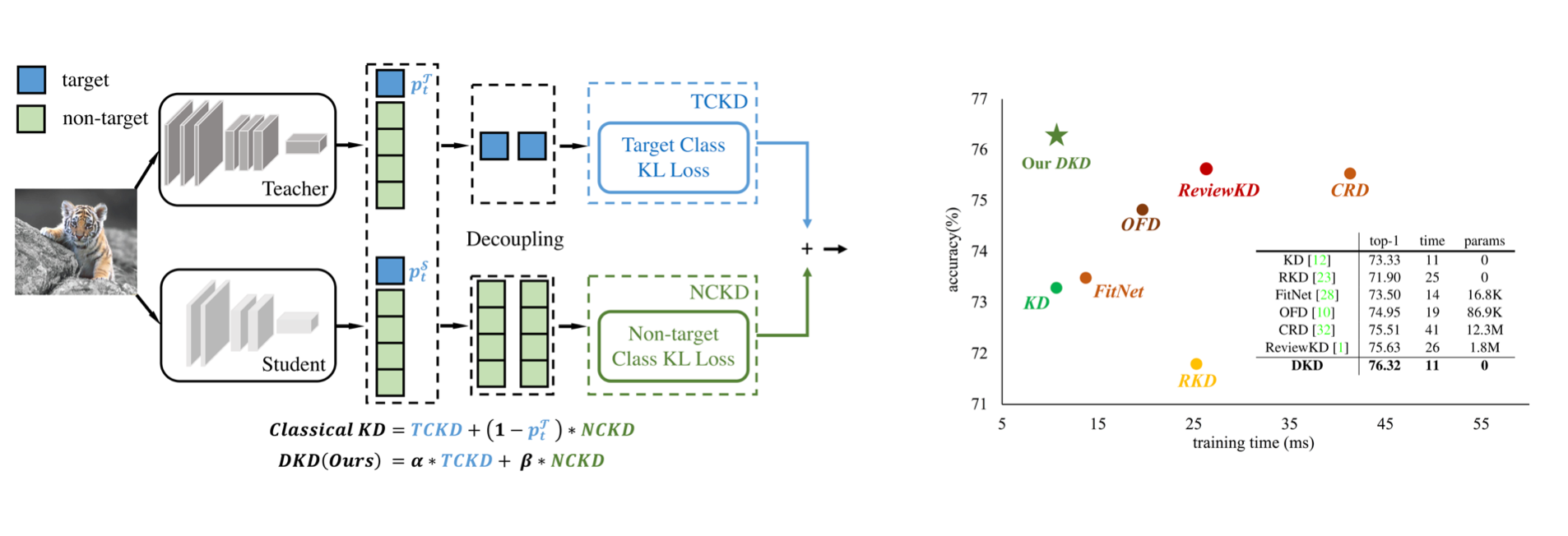

(2) the official implementation of the CVPR-2022 paper: Decoupled Knowledge Distillation.

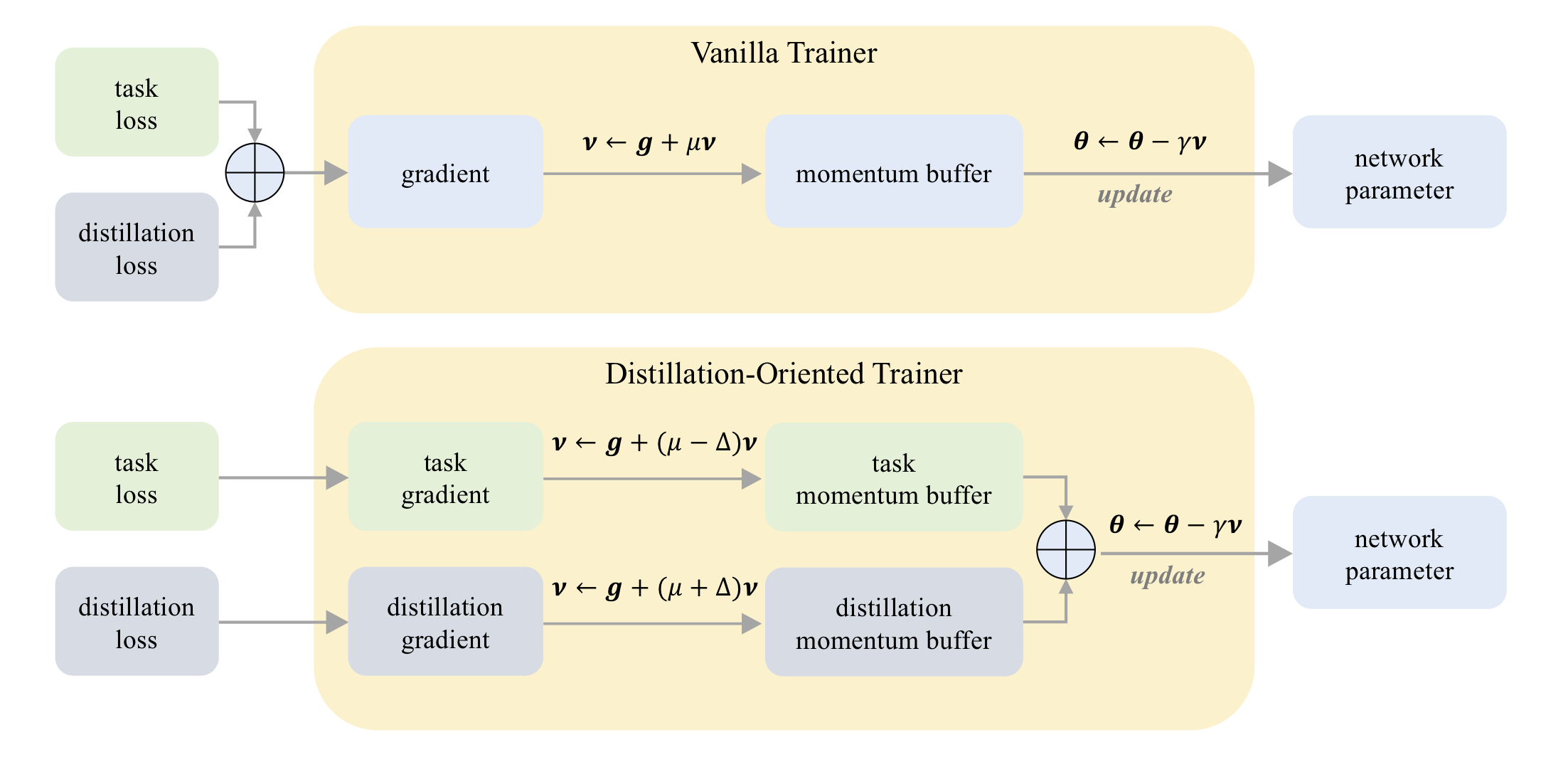

(3) the official implementation of the ICCV-2023 paper: DOT: A Distillation-Oriented Trainer.

On CIFAR-100:

| Teacher / Student | ResNet32x4 / ResNet8x4 | VGG13 / VGG8 | ResNet32x4 / ShuffleNet-V2 |
|---|---|---|---|
| KD | 73.33 | 72.98 | 74.45 |
| KD+DOT | 75.12 | 73.77 | 75.55 |

On Tiny-ImageNet:

| Teacher / Student | ResNet18 / MobileNet-V2 | ResNet18 / ShuffleNet-V2 |
|---|---|---|
| KD | 58.35 | 62.26 |
| KD+DOT | 64.01 | 65.75 |

On ImageNet:

| Teacher / Student | ResNet34 / ResNet18 | ResNet50 / MobileNet-V1 |
|---|---|---|
| KD | 71.03 | 70.50 |
| KD+DOT | 71.72 | 73.09 |

On CIFAR-100:

| Teacher / Student | ResNet56 / ResNet20 | ResNet110 / ResNet32 | ResNet32x4 / ResNet8x4 | WRN-40-2 / WRN-16-2 | WRN-40-2 / WRN-40-1 | VGG13 / VGG8 |
|---|---|---|---|---|---|---|
| KD | 70.66 | 73.08 | 73.33 | 74.92 | 73.54 | 72.98 |
| DKD | 71.97 | 74.11 | 76.32 | 76.23 | 74.81 | 74.68 |

| Teacher / Student | ResNet32x4 / ShuffleNet-V1 | WRN-40-2 / ShuffleNet-V1 | VGG13 / MobileNet-V2 | ResNet50 / MobileNet-V2 | ResNet32x4 / MobileNet-V2 |
|---|---|---|---|---|---|
| KD | 74.07 | 74.83 | 67.37 | 67.35 | 74.45 |
| DKD | 76.45 | 76.70 | 69.71 | 70.35 | 77.07 |

On ImageNet:

| Teacher / Student | ResNet34 / ResNet18 | ResNet50 / MobileNet-V1 |
|---|---|---|
| KD | 71.03 | 70.50 |
| DKD | 71.70 | 72.05 |

MDistiller supports the following distillation methods on CIFAR-100, ImageNet and MS-COCO:

| Method | Paper link | CIFAR-100 | ImageNet | MS-COCO |
|---|---|---|---|---|
| KD | https://arxiv.org/abs/1503.02531 | ✓ | ✓ | |
| FitNet | https://arxiv.org/abs/1412.6550 | ✓ | | |
| AT | https://arxiv.org/abs/1612.03928 | ✓ | ✓ | |
| NST | https://arxiv.org/abs/1707.01219 | ✓ | | |
| PKT | https://arxiv.org/abs/1803.10837 | ✓ | | |
| KDSVD | https://arxiv.org/abs/1807.06819 | ✓ | | |
| OFD | https://arxiv.org/abs/1904.01866 | ✓ | ✓ | |
| RKD | https://arxiv.org/abs/1904.05068 | ✓ | | |
| VID | https://arxiv.org/abs/1904.05835 | ✓ | | |
| SP | https://arxiv.org/abs/1907.09682 | ✓ | | |
| CRD | https://arxiv.org/abs/1910.10699 | ✓ | ✓ | |
| ReviewKD | https://arxiv.org/abs/2104.09044 | ✓ | ✓ | ✓ |
| DKD | https://arxiv.org/abs/2203.08679 | ✓ | ✓ | ✓ |
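For orientation, the KD row in the table above refers to the classic soft-target loss of Hinton et al. The sketch below is a minimal pure-Python illustration of that loss for a single sample, not the code used in this repo. The function names, the example logits, and the temperature value are assumptions chosen for illustration; a real implementation would operate on batched PyTorch tensors.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student))
    return kl * temperature ** 2


# Hypothetical logits for one 3-class sample (illustration only).
teacher = [5.0, 1.0, -2.0]
student = [2.0, 1.5, 0.0]
print(kd_loss(student, teacher))  # positive, since the distributions differ
print(kd_loss(teacher, teacher))  # identical logits give zero loss
```

A higher temperature flattens both distributions, so the student also receives signal from the teacher's low-probability classes; the `T^2` factor keeps the gradient scale comparable across temperatures.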

Environments:

Install the package:

    sudo pip3 install -r requirements.txt
    sudo python3 setup.py develop

To disable wandb logging, set `CFG.LOG.WANDB` to `False` in `mdistiller/engine/cfg.py`.

You can evaluate the performance of our models or of models you trained yourself.

Our models are at https://github.com/megvii-research/mdistiller/releases/tag/checkpoints, please download the checkpoints to `./download_ckpts`

If testing the models on ImageNet, please download the dataset at https://image-net.org/ and put it in `./data/imagenet`

    # evaluate teachers
    python3 tools/eval.py -m resnet32x4 # resnet32x4 on cifar100
    python3 tools/eval.py -m ResNet34 -d imagenet # ResNet34 on imagenet

    # evaluate students
    python3 tools/eval.py -m resnet8x4 -c download_ckpts/dkd_resnet8x4 # dkd-resnet8x4 on cifar100
    python3 tools/eval.py -m MobileNetV1 -c download_ckpts/imgnet_dkd_mv1 -d imagenet # dkd-mv1 on imagenet
    python3 tools/eval.py -m model_name -c output/your_exp/student_best # your checkpoints

To train on CIFAR-100, download `cifar_teachers.tar` at https://github.com/megvii-research/mdistiller/releases/tag/checkpoints and untar it to `./download_ckpts` via `tar xvf cifar_teachers.tar`.

    # for instance, our DKD method.
    python3 tools/train.py --cfg configs/cifar100/dkd/res32x4_res8x4.yaml

    # you can also change settings at command line
    python3 tools/train.py --cfg configs/cifar100/dkd/res32x4_res8x4.yaml SOLVER.BATCH_SIZE 128 SOLVER.LR 0.1

To train on ImageNet, download the dataset at https://image-net.org/ and put it in `./data/imagenet`

    # for instance, our DKD method.
    python3 tools/train.py --cfg configs/imagenet/r34_r18/dkd.yaml

To add a custom distillation method, create a Python file in `mdistiller/distillers/` and define the distiller:

    from ._base import Distiller


    class MyDistiller(Distiller):
        def __init__(self, student, teacher, cfg):
            super(MyDistiller, self).__init__(student, teacher)
            self.hyper1 = cfg.MyDistiller.hyper1
            ...

        def forward_train(self, image, target, **kwargs):
            # return the output logits and a Dict of losses
            ...

        # rewrite the get_learnable_parameters function if there are more nn modules for distillation.
        # rewrite the get_extra_parameters if you want to obtain the extra cost.
        ...

Register the distiller in `distiller_dict` in `mdistiller/distillers/__init__.py`.

Register the corresponding hyper-parameters in `mdistiller/engine/cfg.py`.

Create a new config file and test it.
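The steps above can be sketched end to end as follows. This is a toy, dependency-free illustration of the workflow, not mdistiller code: the `Distiller` base class, `distiller_dict`, `CFG`, and `apply_overrides` below are simplified stand-ins written for this example, the loss is a made-up placeholder, and `hyper1` is the hypothetical hyper-parameter from the skeleton. Real distillers wrap PyTorch modules and return tensor losses.

```python
from types import SimpleNamespace


class Distiller:
    """Stand-in for the base class (an assumption, not the library's code)."""

    def __init__(self, student, teacher):
        self.student = student
        self.teacher = teacher


class MyDistiller(Distiller):
    def __init__(self, student, teacher, cfg):
        super().__init__(student, teacher)
        self.hyper1 = cfg.MyDistiller.hyper1

    def forward_train(self, image, target, **kwargs):
        logits_student = self.student(image)
        logits_teacher = self.teacher(image)
        # Placeholder loss: weighted mean squared gap between the logits.
        gap = sum((s - t) ** 2 for s, t in zip(logits_student, logits_teacher))
        loss = self.hyper1 * gap / len(logits_student)
        # Contract from the skeleton: output logits plus a dict of losses.
        return logits_student, {"loss_my": loss}


# Register the distiller under a name (stand-in registry).
distiller_dict = {"MyDistiller": MyDistiller}

# Register default hyper-parameters (stand-in config object).
CFG = SimpleNamespace(MyDistiller=SimpleNamespace(hyper1=1.0))


def apply_overrides(cfg, opts):
    """Apply ['A.B', value, ...] overrides, like KEY VALUE pairs on a CLI."""
    for key, value in zip(opts[0::2], opts[1::2]):
        *parents, leaf = key.split(".")
        node = cfg
        for name in parents:
            node = getattr(node, name)
        # Cast the new value to the type of the registered default.
        setattr(node, leaf, type(getattr(node, leaf))(value))


# Configure and test with toy "networks" that map an input to logits.
apply_overrides(CFG, ["MyDistiller.hyper1", "0.5"])
student = lambda x: [x, 2 * x]
teacher = lambda x: [x + 1, 2 * x + 1]
distiller = distiller_dict["MyDistiller"](student, teacher, CFG)
logits, losses = distiller.forward_train(image=1.0, target=0)
print(logits, losses)
```

Looking distillers up by name in a registry lets a config file choose the method as a string, and registering defaults first means any override can be type-checked against a known key.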

If this repo is helpful for your research, please consider citing the papers:

    @article{zhao2022dkd,
      title={Decoupled Knowledge Distillation},
      author={Zhao, Borui and Cui, Quan and Song, Renjie and Qiu, Yiyu and Liang, Jiajun},
      journal={arXiv preprint arXiv:2203.08679},
      year={2022}
    }

    @article{zhao2023dot,
      title={DOT: A Distillation-Oriented Trainer},
      author={Zhao, Borui and Cui, Quan and Song, Renjie and Liang, Jiajun},
      journal={arXiv preprint arXiv:2307.08436},
      year={2023}
    }

MDistiller is released under the MIT license. See LICENSE for details.

Thanks to CRD and ReviewKD. We built this library based on the CRD codebase and the ReviewKD codebase.

Thanks to Yiyu Qiu and Yi Shi for their code contributions during their internship at MEGVII Technology.

Thanks to Xin Jin for the discussion about DKD.