Refer to https://neosheets.com (https://github.com/suhjohn/neosheets) for the v2 of this idea.

https://www.llmwb.com/

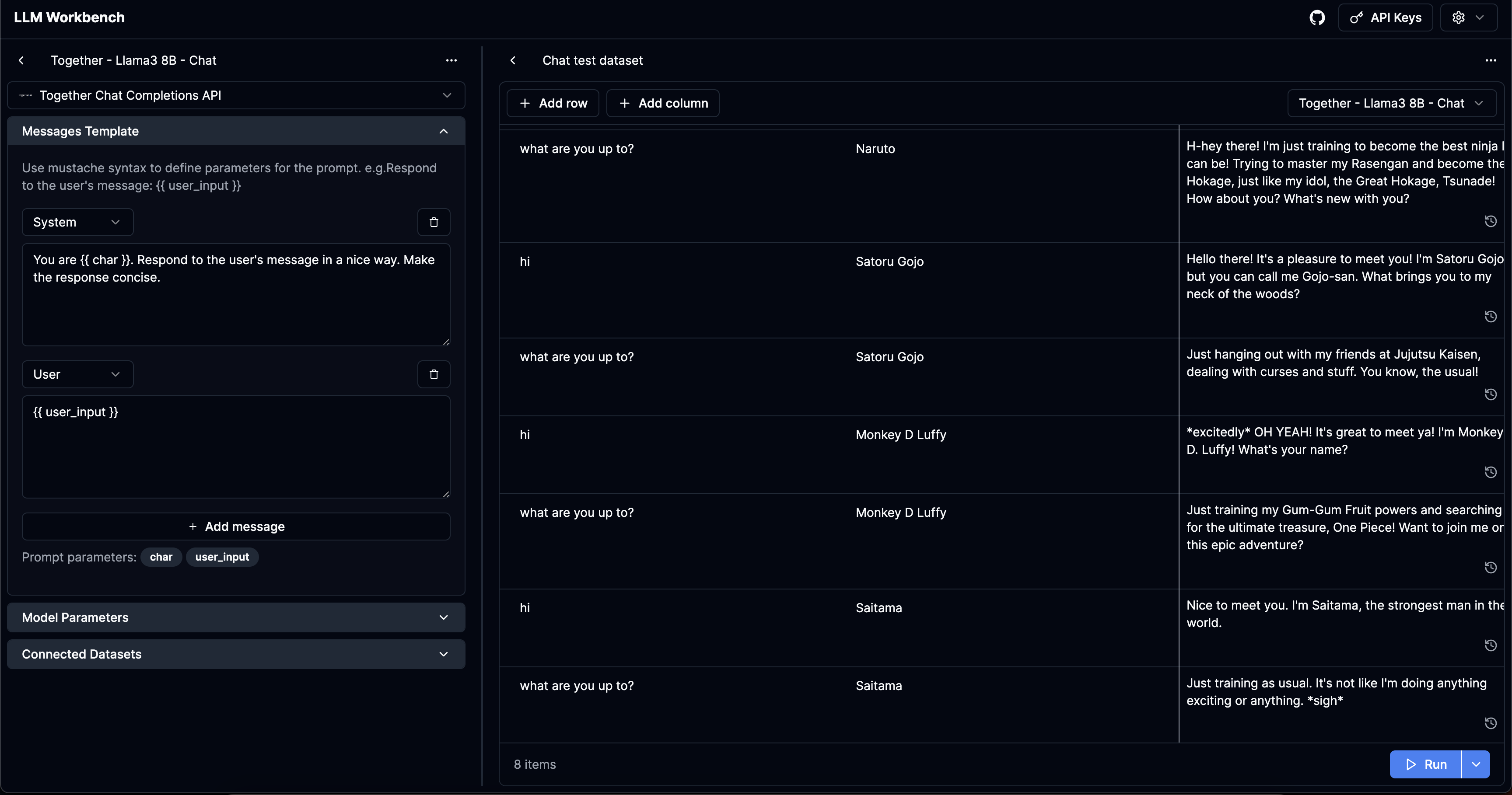

Supercharged workbench for LLMs. Test prompt templates across different models and providers, using datasets of prompt arguments to fill in the placeholders.
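The core idea is a cross product: every model configuration runs against every row of the dataset. Below is a minimal TypeScript sketch of that expansion. The `ModelConfig`, `Run`, and `buildRuns` names and fields are hypothetical and are not the app's actual types. Template rendering and API calls are left out.

```typescript
// Hypothetical data model for illustration; LLM Workbench's real types may differ.
interface ModelConfig {
  provider: string; // e.g. "openai" or "anthropic"
  model: string;
  temperature: number;
}

// One dataset row: a value for each placeholder in the template.
type PromptArgs = Record<string, string>;

// A single request to send: one model configuration applied to one row.
interface Run {
  config: ModelConfig;
  args: PromptArgs;
}

// Cross product of model configs and dataset rows, grouped by config.
function buildRuns(configs: ModelConfig[], dataset: PromptArgs[]): Run[] {
  const result: Run[] = [];
  for (const config of configs) {
    for (const args of dataset) {
      result.push({ config, args });
    }
  }
  return result;
}

// Usage with placeholder model names: 2 configs x 3 rows = 6 runs.
const runs = buildRuns(
  [
    { provider: "openai", model: "model-a", temperature: 0 },
    { provider: "anthropic", model: "model-b", temperature: 0.7 },
  ],
  [{ topic: "tides" }, { topic: "eclipses" }, { topic: "auroras" }],
);
console.log(runs.length); // 6
```

Grouping by config keeps each model's outputs together for side-by-side comparison. The number of API calls grows as configs × rows, so large datasets get expensive quickly.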

I've built three different AI chatbots now. In the process, I've had to shoddily build subsets of the features supported by this application. I've also wanted a no-code platform for testing prompts with various arguments to see how they behave.

I personally don't find auto prompt-writer libraries that appealing; I'd rather get a decent vibe check across a variety of parameters.

I also personally don't use any of the abstraction libraries and don't find them that useful. However, there seemed to be little tooling for people who want to raw-dog test prompts.

I was inspired by Anthropic's recent Workbench platform, which seemed like a good step up from OpenAI's Playground.

The application solves these specific user problems:

- Given a prompt template that takes `args: Record<string, string>` as its prompt parameters, I want to be able to test different model parameters.
- Templates use `{{ }}` to denote variables, rendered with Mustache.js.
- The templates, datasets, and API keys you add on the website are stored only locally in your browser.
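Mustache.js fills each `{{ name }}` tag from a view object, which here plays the role of `args: Record<string, string>`. The sketch below is a hypothetical, dependency-free stand-in for `Mustache.render`, not the app's code. It shows the substitution, plus a helper (`templateVariables`, also hypothetical) that lists a template's placeholders so dataset columns can be checked before sending requests. Real Mustache.js differs in one way that matters for prompts: it HTML-escapes `{{ }}` values by default (e.g. `&` becomes `&amp;`), while `{{{ name }}}` or `{{& name }}` renders the raw value.

```typescript
// Prompt arguments: placeholder name -> value.
type PromptArgs = Record<string, string>;

// Matches {{ name }} with optional inner whitespace. Sections, partials,
// and triple braces are deliberately not supported in this sketch.
function placeholderPattern(): RegExp {
  return /\{\{\s*([\w-]+)\s*\}\}/g;
}

// Replaces each placeholder with its value. Unlike Mustache.js, values are
// NOT HTML-escaped. Missing names render as "", as they do in Mustache.js.
function renderPrompt(template: string, args: PromptArgs): string {
  return template.replace(placeholderPattern(), (_match: string, name: string) =>
    Object.prototype.hasOwnProperty.call(args, name) ? args[name] : "",
  );
}

// Returns each distinct placeholder name in order of first appearance.
function templateVariables(template: string): string[] {
  const names = new Set<string>();
  const re = placeholderPattern();
  let m: RegExpExecArray | null;
  while ((m = re.exec(template)) !== null) {
    names.add(m[1]);
  }
  return Array.from(names);
}

// Usage
const template = "Summarize this {{ doc_type }} for a {{audience}}:\n{{text}}";
console.log(templateVariables(template)); // ["doc_type", "audience", "text"]
const prompt = renderPrompt(template, {
  doc_type: "memo",
  audience: "new hire",
  text: "Q3 budget is flat.",
});
console.log(prompt); // "Summarize this memo for a new hire:\nQ3 budget is flat."
```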

LLM Workbench is currently just a Next.js app. Assuming you have Yarn installed, you can run it with:

```bash
yarn
yarn dev
```