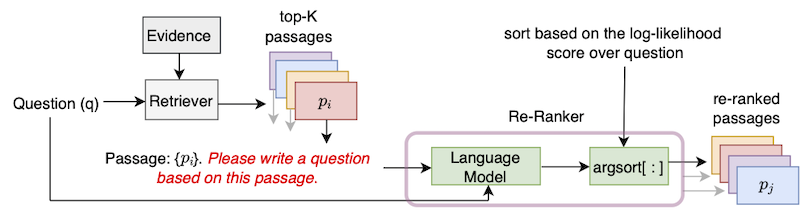

該存儲庫包含在論文“通過零片問題產生的改善段落檢索”中引入的UPR(無監督段落)算法的正式實施。

要使用此存儲庫,需要使用標準的Pytorch安裝。我們在需求中提供依賴項.txt文件。

我們建議使用NGC最近的Pytorch容器之一。可以使用命令docker pull nvcr.io/nvidia/pytorch:22.01-py3繪製Docker圖像:22.01-PY3。要使用此Docker映像,還需要安裝NVIDIA容器工具包。

在Docker容器上,請使用PIP安裝安裝庫transformers和sentencepiece 。

我們遵循DPR公約,並將Wikipedia文章分為100字的長段落。 DPR提供的證據文件可以通過命令下載

python utils / download_data . py - - resource data . wikipedia - split . psgs_w100該證據文件包含用於段落ID,通過文本和段落標題的選項卡分隔字段。

id text title

1 " Aaron Aaron ( or ; " " Ahärôn " " ) is a prophet, high priest, and the brother of Moses in the Abrahamic religions. Knowledge of Aaron, along with his brother Moses, comes exclusiv

ely from religious texts, such as the Bible and Quran. The Hebrew Bible relates that, unlike Moses, who grew up in the Egyptian royal court, Aaron and his elder sister Miriam remained

with their kinsmen in the eastern border-land of Egypt (Goshen). When Moses first confronted the Egyptian king about the Israelites, Aaron served as his brother's spokesman ( " " prophet "

" ) to the Pharaoh. Part of the Law (Torah) that Moses received from " Aaron

2 " God at Sinai granted Aaron the priesthood for himself and his male descendants, and he became the first High Priest of the Israelites. Aaron died before the Israelites crossed

the North Jordan river and he was buried on Mount Hor (Numbers 33:39; Deuteronomy 10:6 says he died and was buried at Moserah). Aaron is also mentioned in the New Testament of the Bib

le. According to the Book of Exodus, Aaron first functioned as Moses' assistant. Because Moses complained that he could not speak well, God appointed Aaron as Moses' " " prophet " " (Exodu

s 4:10-17; 7:1). At the command of Moses, he let " Aaron

... ... ...輸入數據格式是JSON。 JSON文件中的每個字典都包含一個問題,一個包含Top-K檢索到的數據的列表以及(可選)可能答案的列表。對於每個Top-K段落,我們包括(證據)ID,HAS_ANSWER和(可選的)檢索器得分屬性。 id屬性是Wikipedia證據文件中的段落ID, has_answer表示段落文本是否包含答案跨度。以下是.json文件的模板

[

{

"question" : " .... " ,

"answers" : [ " ... " , " ... " , " ... " ],

"ctxs" : [

{

"id" : " .... " ,

"score" : " ... " ,

"has_answer" : " .... " ,

},

...

]

},

...

]當使用自然問題設置查詢時,使用BM25檢索段落時的一個示例。

[

{

"question" : " who sings does he love me with reba " ,

"answers" : [ " Linda Davis " ],

"ctxs" : [

{

"id" : 11828871 ,

"score" : 18.3 ,

"has_answer" : false

},

{

"id" : 11828872 ,

"score" : 14.7 ,

"has_answer" : false ,

},

{

"id" : 11828866 ,

"score" : 14.4 ,

"has_answer" : true ,

},

...

]

},

...

]我們提供了前1000個檢索的段落,以供媒體Questions-Open(NQ),Triviaqa,squad-open,webQeestions(WebQ)和EntityQuestions(EQ)(EQ)數據集(EQ)數據集(EQ)數據集(EQ)數據集:BM25,MSS,MSS,Contriever,DPR和MSS-DPR。請使用以下命令下載這些數據集

python utils / download_data . py

- - resource { key from download_data . py 's RESOURCES_MAP}

[ optional - - output_dir { your location }]例如,要下載所有TOP-K數據,請使用--resource data 。要下載特定的檢索員的TOP-K數據,例如BM25,請使用--resource data.retriever-outputs.bm25 。

要將檢索到的段落與UPR重新排列,請使用以下命令,其中需要指示證據文件和TOP-K檢索到的top-K的路徑。

DISTRIBUTED_ARGS= " -m torch.distributed.launch --nproc_per_node 8 --nnodes 1 --node_rank 0 --master_addr localhost --master_port 6000 "

python ${DISTRIBUTED_ARGS} upr.py

--num-workers 2

--log-interval 1

--topk-passages 1000

--hf-model-name " bigscience/T0_3B "

--use-gpu

--use-bf16

--report-topk-accuracies 1 5 20 100

--evidence-data-path " wikipedia-split/psgs_w100.tsv "

--retriever-topk-passages-path " bm25/nq-dev.json " --use-bf16選項可在Ampere GPU上(例如A100或A6000)上節省速度和內存。但是,在使用V100 GPU時,應刪除此參數。

我們在目錄“示例”下提供了一個示例腳本“ upr-demo.sh”。要使用此腳本,請相應地修改數據和輸入 /輸出文件路徑。

在UPR中使用T0-3B語言模型時,我們在數據集的測試集上提供評估得分。

| 獵犬(+重新排名) | 小隊開場 | Triviaqa | 自然問題 - 開放 | 網絡問題 | 實體問題 |

|---|---|---|---|---|---|

| MSS | 51.3 | 67.2 | 60.0 | 49.2 | 51.2 |

| MSS + UPR | 75.7 | 81.3 | 77.3 | 71.8 | 71.3 |

| BM25 | 71.1 | 76.4 | 62.9 | 62.4 | 71.2 |

| BM25 + UPR | 83.6 | 83.0 | 78.6 | 72.9 | 79.3 |

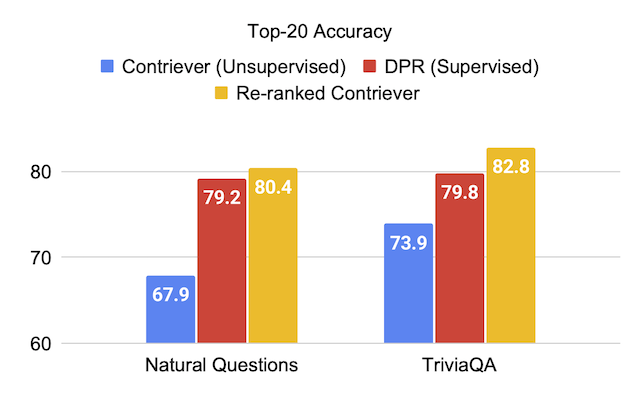

| 反對 | 63.4 | 73.9 | 67.9 | 65.7 | 63.0 |

| CRONTIEVER + UPR | 81.3 | 82.8 | 80.4 | 75.7 | 76.0 |

| 獵犬(+重新排名) | 小隊開場 | Triviaqa | 自然問題 - 開放 | 網絡問題 | 實體問題 |

|---|---|---|---|---|---|

| dpr | 59.4 | 79.8 | 79.2 | 74.6 | 51.1 |

| DPR + UPR | 80.7 | 84.3 | 83.4 | 76.5 | 65.4 |

| MSS-DPR | 73.1 | 81.9 | 81.4 | 76.9 | 60.6 |

| MSS-DPR + UPR | 85.2 | 84.8 | 83.9 | 77.2 | 73.9 |

我們將從BM25和MSS獵犬自然問題開發的每一個中檢索到的前1000個段落的結合。該數據文件可以下載為:

python utils/download_data.py --resource data.retriever-outputs.mss-bm25-union.nq-dev對於這些消融實驗,我們通過參數--topk-passages 2000 ,因為該文件包含兩組前1000段的聯合。

| 語言模型 | 獵犬 | top-1 | 前五名 | 前20名 | 前100名 |

|---|---|---|---|---|---|

| - | BM25 | 22.3 | 43.8 | 62.3 | 76.0 |

| - | MSS | 17.7 | 38.6 | 57.4 | 72.4 |

| T5(3b) | BM25 + MSS | 22.0 | 50.5 | 71.4 | 84.0 |

| gpt-neo(2.7b) | BM25 + MSS | 27.2 | 55.0 | 73.9 | 84.2 |

| GPT-J(6b) | BM25 + MSS | 29.8 | 59.5 | 76.8 | 85.6 |

| T5-LM-ADAPT(250m) | BM25 + MSS | 23.9 | 51.4 | 70.7 | 83.1 |

| T5-LM-ADAPT(800m) | BM25 + MSS | 29.1 | 57.5 | 75.1 | 84.8 |

| T5-LM-ADAPT(3B) | BM25 + MSS | 29.7 | 59.9 | 76.9 | 85.6 |

| T5-LM-ADAPT(11B) | BM25 + MSS | 32.1 | 62.3 | 78.5 | 85.8 |

| T0-3B | BM25 + MSS | 36.7 | 64.9 | 79.1 | 86.1 |

| T0-11b | BM25 + MSS | 37.4 | 64.9 | 79.1 | 86.0 |

可以通過使用腳本gpt/upr_gpt.py在UPR中運行GPT模型。該腳本具有與upr.py腳本相似的選項,但是我們需要將--use-fp16作為參數而不是--use-bf16 。 --hf-model-name的論點可以是EleutherAI/gpt-neo-2.7B或EleutherAI/gpt-j-6B 。

請參閱目錄Open-Domain-QA,以獲取有關培訓和推理預先訓練檢查點的詳細信息。

對於代碼庫中的任何錯誤或錯誤,請打開新問題,或向Devendra Singh Sachan([email protected])發送電子郵件。

如果您發現此代碼或數據有用,請考慮將我們的論文視為:

@article{sachan2022improving,

title = " Improving Passage Retrieval with Zero-Shot Question Generation " ,

author = " Sachan, Devendra Singh and Lewis, Mike and Joshi, Mandar and Aghajanyan, Armen and Yih, Wen-tau and Pineau, Joelle and Zettlemoyer, Luke " ,

booktitle = " Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing " ,

publisher = " Association for Computational Linguistics " ,

url = " https://arxiv.org/abs/2204.07496 " ,

year = " 2022 "

}