vits2_pytorch

1.0.0

vits2紙的非官方實施,續集Vits Paper。 (感謝作者的工作!)

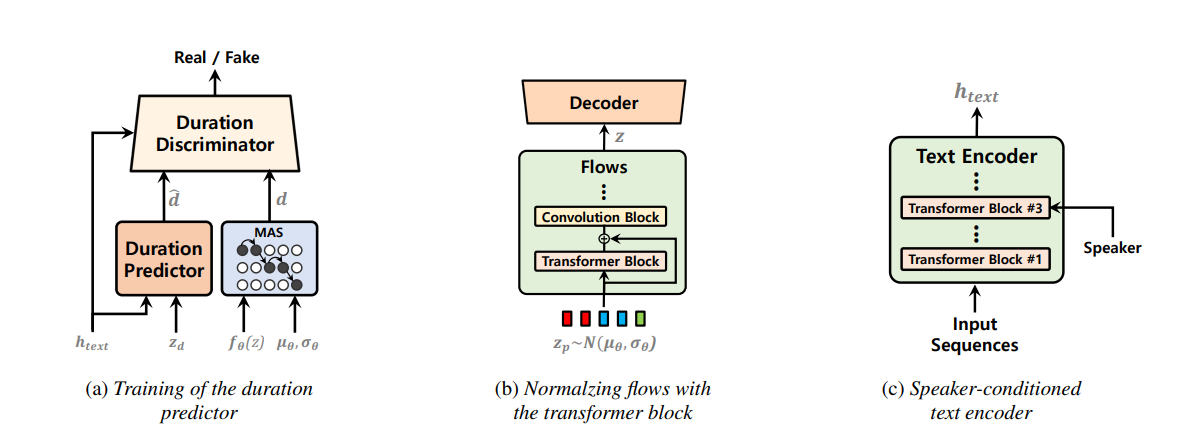

最近已經對單階段的文本到語音模型進行了積極研究,其結果表現優於兩階段管道系統。儘管以前的單階段模型取得了長足的進步,但其間歇性的不自然,計算效率和對音素轉化的強烈依賴有改善的餘地。在這項工作中,我們介紹了VITS2,這是一種單階段的文本到語音模型,通過改進以前工作的幾個方面來有效地綜合了更自然的語音。我們提出了改進的結構和訓練機制,並提出了所提出的方法可有效改善自然性,多演講者模型中語音特徵的相似性以及培訓和推理的效率。此外,我們證明,在我們的方法中可以大大降低對先前作品中音素轉換的強大依賴性,從而可以完全終端單級方法。

apt-get install espeakln -s /path/to/LJSpeech-1.1/wavs DUMMY1ln -s /path/to/VCTK-Corpus/downsampled_wavs DUMMY2 # Cython-version Monotonoic Alignment Search

cd monotonic_align

python setup.py build_ext --inplace

# Preprocessing (g2p) for your own datasets. Preprocessed phonemes for LJ Speech and VCTK have been already provided.

# python preprocess.py --text_index 1 --filelists filelists/ljs_audio_text_train_filelist.txt filelists/ljs_audio_text_val_filelist.txt filelists/ljs_audio_text_test_filelist.txt

# python preprocess.py --text_index 2 --filelists filelists/vctk_audio_sid_text_train_filelist.txt filelists/vctk_audio_sid_text_val_filelist.txt filelists/vctk_audio_sid_text_test_filelist.txt import torch

from models import SynthesizerTrn

net_g = SynthesizerTrn (

n_vocab = 256 ,

spec_channels = 80 , # <--- vits2 parameter (changed from 513 to 80)

segment_size = 8192 ,

inter_channels = 192 ,

hidden_channels = 192 ,

filter_channels = 768 ,

n_heads = 2 ,

n_layers = 6 ,

kernel_size = 3 ,

p_dropout = 0.1 ,

resblock = "1" ,

resblock_kernel_sizes = [ 3 , 7 , 11 ],

resblock_dilation_sizes = [[ 1 , 3 , 5 ], [ 1 , 3 , 5 ], [ 1 , 3 , 5 ]],

upsample_rates = [ 8 , 8 , 2 , 2 ],

upsample_initial_channel = 512 ,

upsample_kernel_sizes = [ 16 , 16 , 4 , 4 ],

n_speakers = 0 ,

gin_channels = 0 ,

use_sdp = True ,

use_transformer_flows = True , # <--- vits2 parameter

# (choose from "pre_conv", "fft", "mono_layer_inter_residual", "mono_layer_post_residual")

transformer_flow_type = "fft" , # <--- vits2 parameter

use_spk_conditioned_encoder = True , # <--- vits2 parameter

use_noise_scaled_mas = True , # <--- vits2 parameter

use_duration_discriminator = True , # <--- vits2 parameter

)

x = torch . LongTensor ([[ 1 , 2 , 3 ],[ 4 , 5 , 6 ]]) # token ids

x_lengths = torch . LongTensor ([ 3 , 2 ]) # token lengths

y = torch . randn ( 2 , 80 , 100 ) # mel spectrograms

y_lengths = torch . Tensor ([ 100 , 80 ]) # mel spectrogram lengths

net_g (

x = x ,

x_lengths = x_lengths ,

y = y ,

y_lengths = y_lengths ,

)

# calculate loss and backpropagate # LJ Speech

python train.py -c configs/vits2_ljs_nosdp.json -m ljs_base # no-sdp; (recommended)

python train.py -c configs/vits2_ljs_base.json -m ljs_base # with sdp;

# VCTK

python train_ms.py -c configs/vits2_vctk_base.json -m vctk_base

# for onnx export of trained models

python export_onnx.py --model-path= " G_64000.pth " --config-path= " config.json " --output= " vits2.onnx "

python infer_onnx.py --model= " vits2.onnx " --config-path= " config.json " --output-wav-path= " output.wav " --text= " hello world, how are you? "