deep_gcns_torch

1.0.0

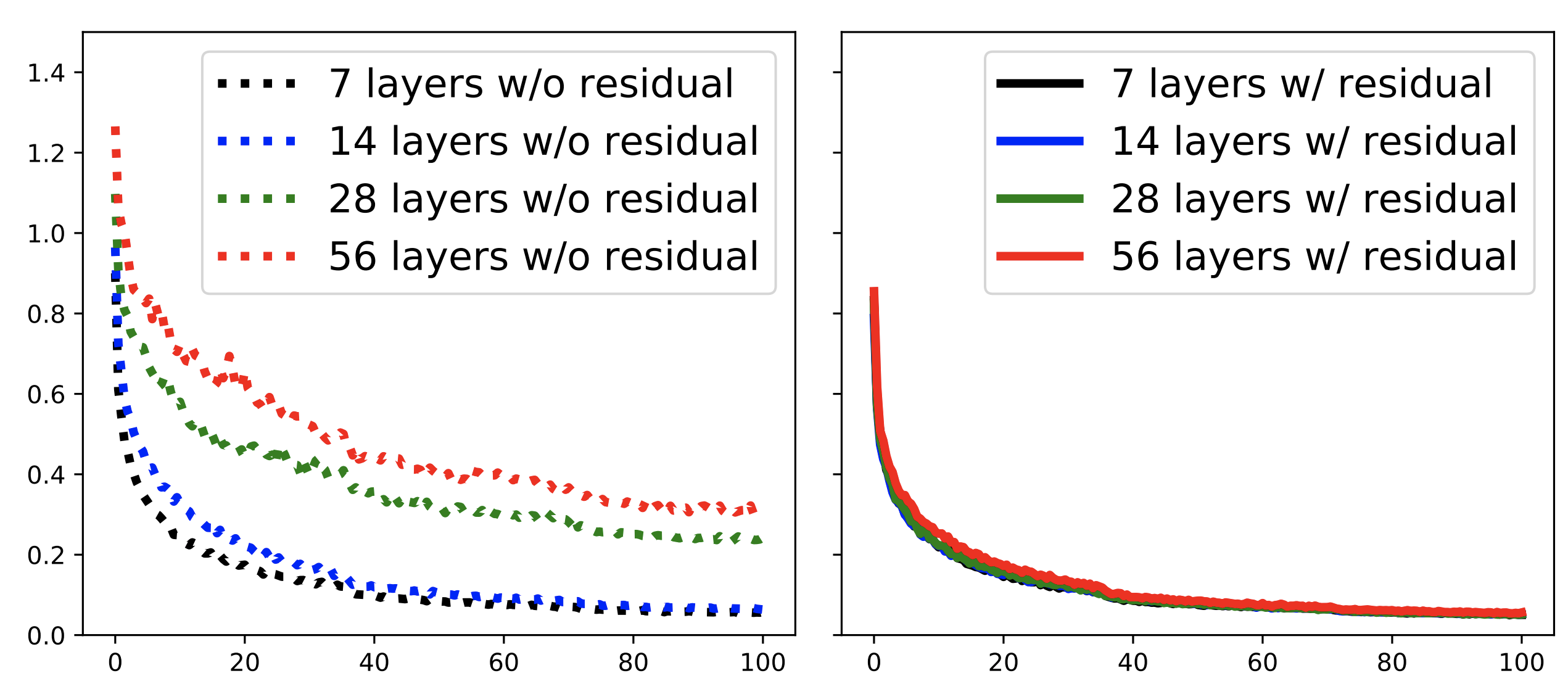

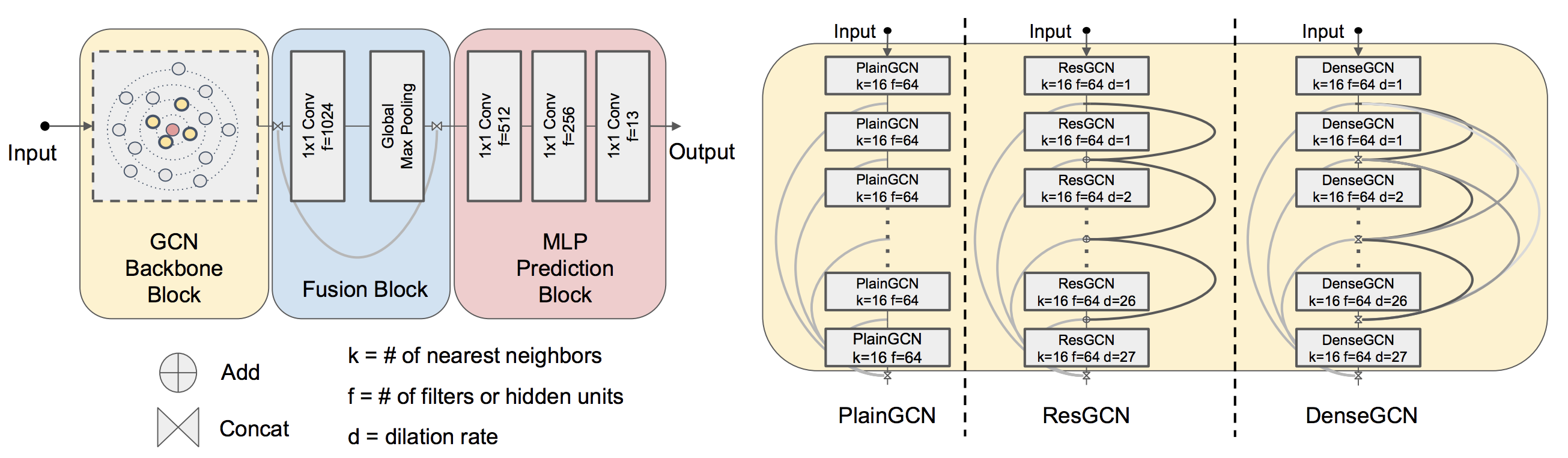

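In this work, we present new ways to successfully train very deep GCNs. We borrow concepts from CNNs, mainly residual/dense connections and dilated convolutions, and adapt them to GCN architectures. Through extensive experiments, we show the positive effect of these deep GCN frameworks.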
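The residual and dense connections mentioned above can be illustrated with a minimal pure-Python sketch. The toy `mean_aggregate` layer (average of a node's own and its neighbors' features, followed by ReLU) is a hypothetical stand-in for the repository's graph convolutions such as MRGCN or EdgeConv. `res_block` adds the layer input back to its output, while `dense_block` concatenates the two. All names here are illustrative assumptions, not the code in `gcn_lib`.

```python
from typing import Dict, List

Features = List[List[float]]      # one feature vector per node
Adjacency = Dict[int, List[int]]  # node index -> list of neighbor indices


def mean_aggregate(x: Features, adj: Adjacency) -> Features:
    """Hypothetical toy graph convolution: average a node's own and its
    neighbors' features, then apply ReLU. Keeps the feature width unchanged."""
    out = []
    for v, feat in enumerate(x):
        group = [feat] + [x[u] for u in adj.get(v, [])]
        avg = [sum(col) / len(group) for col in zip(*group)]
        out.append([max(0.0, a) for a in avg])
    return out


def res_block(x: Features, adj: Adjacency) -> Features:
    """Residual connection: x_{l+1} = F(x_l) + x_l (widths must match)."""
    h = mean_aggregate(x, adj)
    return [[a + b for a, b in zip(hv, xv)] for hv, xv in zip(h, x)]


def dense_block(x: Features, adj: Adjacency) -> Features:
    """Dense connection: x_{l+1} = concat(F(x_l), x_l), so the width grows."""
    h = mean_aggregate(x, adj)
    return [hv + xv for hv, xv in zip(h, x)]  # list concatenation per node


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    adj = {0: [1], 1: [0, 2], 2: [1]}
    h_res, h_dense = x, x
    for _ in range(3):  # stack three blocks of each kind
        h_res = res_block(h_res, adj)
        h_dense = dense_block(h_dense, adj)
    print(len(h_res[0]), len(h_dense[0]))  # feature widths after stacking
```

Stacking residual blocks keeps the feature width fixed, whereas each dense block in this sketch doubles it because the toy layer preserves width. In a real DenseGCN layer the convolution would usually produce a fixed number of new channels (a growth rate), so the width grows additively instead.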

[Project] [Paper] [Slides] [TensorFlow Code] [PyTorch Code]

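We conduct extensive experiments to show how different components (#layers, #filters, #nearest neighbors, dilation, etc.) affect DeepGCNs. We also provide ablation studies on different types of deep GCNs (MRGCN, EdgeConv, GraphSAGE and GIN).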
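Dilation in DeepGCNs acts on the k-nearest-neighbor graph. The following is a minimal sketch of a dilated k-NN, assuming Euclidean distance and that a point is not counted as its own neighbor (the repository's implementation may differ on both points): with dilation `d`, sort the other points by distance, take the `k*d` nearest, and keep every `d`-th one. This widens the receptive field without increasing `k`. The function name `dilated_knn` is hypothetical.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def dilated_knn(points: List[Point], k: int, d: int) -> List[List[int]]:
    """Return, for each point, the indices of its k dilated neighbors.

    Neighbors are ranked by Euclidean distance (ties keep index order because
    list.sort is stable); of the k*d nearest, ranks 0, d, 2d, ..., (k-1)d are kept.
    """
    neighbors = []
    for i, p in enumerate(points):
        others = [j for j in range(len(points)) if j != i]  # exclude the point itself
        others.sort(key=lambda j: math.dist(p, points[j]))
        neighbors.append(others[: k * d : d])  # every d-th of the k*d nearest
    return neighbors


if __name__ == "__main__":
    line = [(float(i),) for i in range(6)]  # six evenly spaced 1-D points
    print(dilated_knn(line, k=2, d=1)[0])
    print(dilated_knn(line, k=2, d=2)[0])
```

For point 0 in this example, `d=1` yields neighbors `[1, 2]`, while `d=2` yields `[1, 3]`: the same number of neighbors, but spread over a larger neighborhood.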

Please see the Readme.md of each task in the examples folder for details. All information about the code, data, and pretrained models can be found there.

`examples/ogb_eff/ogbn_arxiv_dgl`: install the environment by running:

    source deepgcn_env_install.sh

    .
    ├── misc                    # Misc images
    ├── utils                   # Common useful modules
    ├── gcn_lib                 # gcn library
    │   ├── dense               # gcn library for dense data (B x C x N x 1)
    │   └── sparse              # gcn library for sparse data (N x C)
    ├── eff_gcn_modules         # modules for mem efficient gnns
    ├── examples
    │   ├── modelnet_cls        # code for point clouds classification on ModelNet40
    │   ├── sem_seg_dense       # code for point clouds semantic segmentation on S3DIS (data type: dense)
    │   ├── sem_seg_sparse      # code for point clouds semantic segmentation on S3DIS (data type: sparse)
    │   ├── part_sem_seg        # code for part segmentation on PartNet
    │   ├── ppi                 # code for node classification on PPI dataset
    │   ├── ogb                 # code for node/graph property prediction on OGB datasets
    │   └── ogb_eff             # code for node/graph property prediction on OGB datasets with memory efficient GNNs
    └── ...
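The `dense` and `sparse` libraries listed above differ mainly in tensor layout. The following is a pure-Python sketch of converting between the two, using nested lists in place of tensors and assuming every graph in a dense batch has the same number of nodes `N`. The dense layout is indexed `[b][c][n][0]` (B x C x N x 1), while the sparse layout stacks all nodes into one N_total x C matrix. The `batch` vector recording which graph each node belongs to follows the PyTorch Geometric convention and is an assumption here, as are the helper names.

```python
from typing import List, Tuple

Dense = List[List[List[List[float]]]]  # [b][c][n][0]  ->  B x C x N x 1
Sparse = List[List[float]]             # [node][c]     ->  N_total x C


def dense_to_sparse(x_dense: Dense) -> Tuple[Sparse, List[int]]:
    """Flatten a B x C x N x 1 batch into N_total x C features plus a batch vector."""
    feats, batch = [], []
    for b, sample in enumerate(x_dense):
        num_channels, num_nodes = len(sample), len(sample[0])
        for n in range(num_nodes):
            feats.append([sample[c][n][0] for c in range(num_channels)])
            batch.append(b)  # node n belongs to graph b
    return feats, batch


def sparse_to_dense(feats: Sparse, batch: List[int]) -> Dense:
    """Inverse of dense_to_sparse; requires every graph to have the same N."""
    num_graphs = max(batch) + 1
    per_graph = [[f for f, g in zip(feats, batch) if g == b] for b in range(num_graphs)]
    if len({len(nodes) for nodes in per_graph}) != 1:
        raise ValueError("dense layout needs the same number of nodes per graph")
    num_channels = len(feats[0])
    # result[b][c][n][0] = features of node n of graph b, channel c
    return [[[[node[c]] for node in nodes] for c in range(num_channels)]
            for nodes in per_graph]


if __name__ == "__main__":
    x = [[[[1.0], [2.0]], [[3.0], [4.0]], [[5.0], [6.0]]],        # graph 0: C=3, N=2
         [[[7.0], [8.0]], [[9.0], [10.0]], [[11.0], [12.0]]]]     # graph 1
    feats, batch = dense_to_sparse(x)
    print(feats, batch)
    print(sparse_to_dense(feats, batch) == x)
```

The dense layout suits fixed-size point clouds processed as 2-D feature maps, while the sparse layout accommodates graphs with varying node counts; this trade-off is a general observation, not a statement about how the repository chooses between them.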

Please cite our papers if you find anything helpful:

    @InProceedings{li2019deepgcns,
      title={DeepGCNs: Can GCNs Go as Deep as CNNs?},
      author={Guohao Li and Matthias Müller and Ali Thabet and Bernard Ghanem},
      booktitle={The IEEE International Conference on Computer Vision (ICCV)},
      year={2019}
    }

    @article{li2021deepgcns_pami,
      title={Deepgcns: Making gcns go as deep as cnns},
      author={Li, Guohao and M{\"u}ller, Matthias and Qian, Guocheng and Perez, Itzel Carolina Delgadillo and Abualshour, Abdulellah and Thabet, Ali Kassem and Ghanem, Bernard},
      journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
      year={2021},
      publisher={IEEE}
    }

    @misc{li2020deepergcn,
      title={DeeperGCN: All You Need to Train Deeper GCNs},
      author={Guohao Li and Chenxin Xiong and Ali Thabet and Bernard Ghanem},
      year={2020},
      eprint={2006.07739},
      archivePrefix={arXiv},
      primaryClass={cs.LG}
    }

    @InProceedings{li2021gnn1000,
      title={Training Graph Neural Networks with 1000 layers},
      author={Guohao Li and Matthias Müller and Bernard Ghanem and Vladlen Koltun},
      booktitle={International Conference on Machine Learning (ICML)},
      year={2021}
    }

MIT License

For more information, please contact Guohao Li, Guocheng Qian, or Matthias Müller.