A Java library to use the OpenAI API in the simplest possible way.

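Simple-OpenAI is a Java HTTP client library for sending requests to and receiving responses from the OpenAI API. It exposes a consistent interface across all the services, and it aims to be as simple as the libraries you can find for other languages such as Python or Node.js. It is an unofficial library.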

Simple-OpenAI uses the CleverClient library for HTTP communication, Jackson for JSON parsing, and Lombok to minimize boilerplate code, among other libraries.

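Simple-OpenAI tries to stay up to date with the most recent changes in OpenAI. Currently, it supports most of the existing features and will continue to follow future changes.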

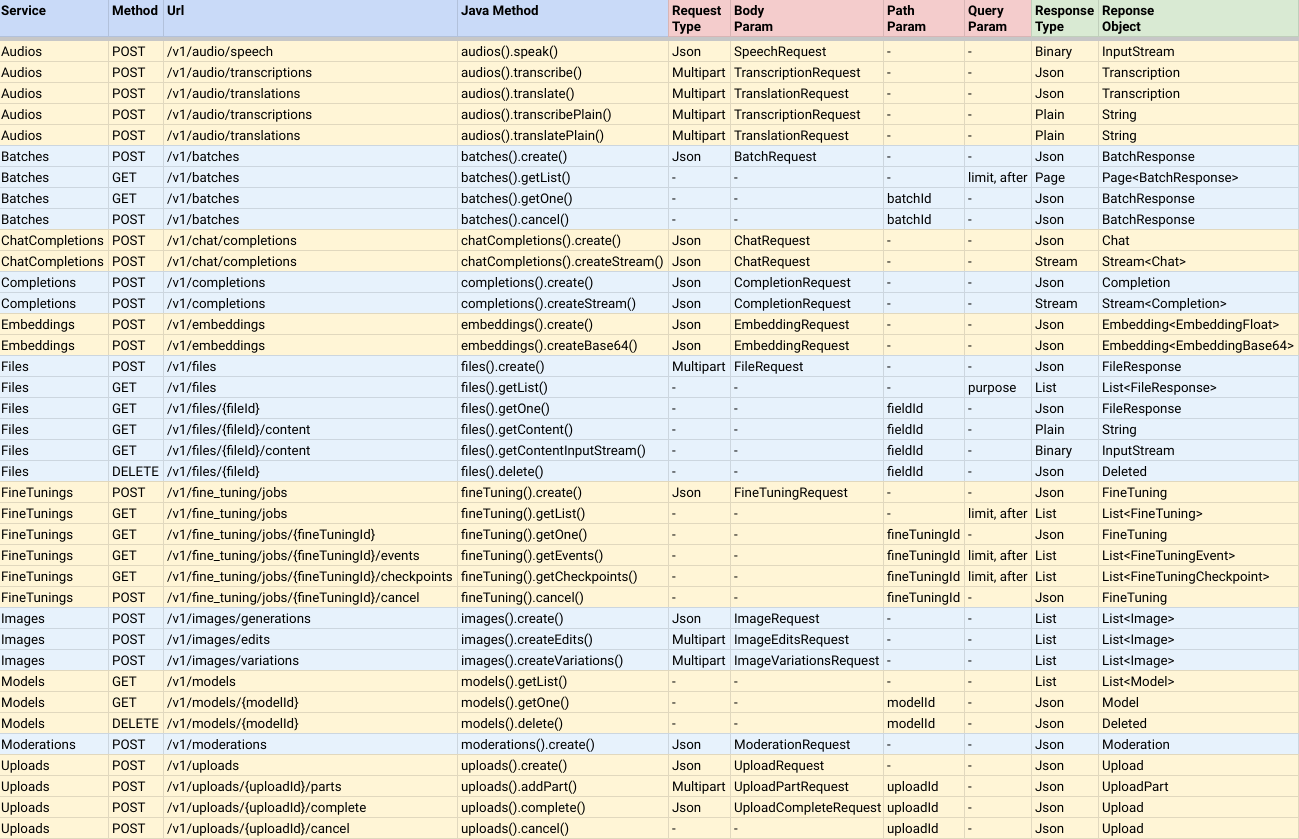

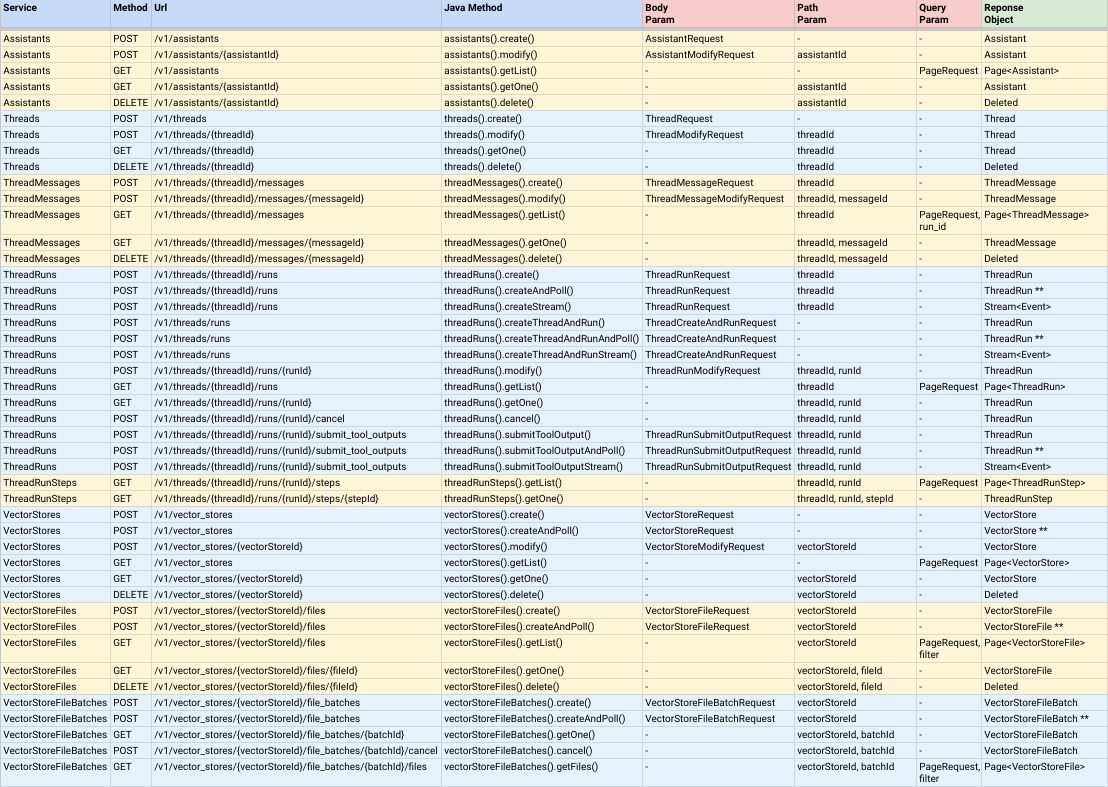

Full support for most of the OpenAI services:

Notes:

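Most methods return a CompletableFuture<ResponseObject>, which means they are asynchronous, but you can call the join() method to get the result value once it is available. The exception is methods whose names end in AndPoll(): these are synchronous and block until a predicate function that you provide returns false.

You can install Simple-OpenAI by adding the following dependency to your Maven project: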

<dependency>
    <groupId>io.github.sashirestela</groupId>
    <artifactId>simple-openai</artifactId>
    <version>[latest version]</version>
</dependency>

Or using Gradle:

dependencies {
    implementation 'io.github.sashirestela:simple-openai:[latest version]'
}

This is the first step you need to take before using the services. You must provide at least your OpenAI API key (see here for more details). In the example below, we read the API key from an environment variable called OPENAI_API_KEY, which we created to hold it:

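var openAI = SimpleOpenAI.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .build();

Optionally, you can pass your OpenAI Organization ID if you have multiple organizations and want to identify usage by organization, and/or your OpenAI Project ID if you want to provide access to a single project. In the example below, we use environment variables for those IDs: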

var openAI = SimpleOpenAI.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .organizationId(System.getenv("OPENAI_ORGANIZATION_ID"))
        .projectId(System.getenv("OPENAI_PROJECT_ID"))
        .build();

Optionally, if you want more options for the HTTP connection, such as an executor, proxy, timeout, cookies, etc., you can provide a custom Java HttpClient object (see here for more details). In the example below, we provide a custom HttpClient:

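import io.github.sashirestela.openai.SimpleOpenAI;
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.http.HttpClient;
import java.net.http.HttpClient.Redirect;
import java.net.http.HttpClient.Version;
import java.time.Duration;
import java.util.concurrent.Executors;

var httpClient = HttpClient.newBuilder()
        .version(Version.HTTP_1_1)
        .followRedirects(Redirect.NORMAL)
        .connectTimeout(Duration.ofSeconds(20))
        .executor(Executors.newFixedThreadPool(3))
        .proxy(ProxySelector.of(new InetSocketAddress("proxy.example.com", 80)))
        .build();

var openAI = SimpleOpenAI.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .httpClient(httpClient)
        .build();

After you have created a SimpleOpenAI object, you are ready to call its services in order to communicate with the OpenAI API. Let's see some examples.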
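The notes at the top say that methods ending in AndPoll() are synchronous and block until a predicate you provide returns false. To make that behavior concrete, here is a minimal JDK-only sketch of a blocking "poll while the predicate holds" loop. It is not the library's implementation; PollSketch and pollWhile are hypothetical names, and the remote status is simulated with a counter.

```java
import java.time.Duration;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Predicate;
import java.util.function.Supplier;

// Hypothetical helper illustrating the AndPoll() behavior described in the notes.
// It is NOT simple-openai's code.
class PollSketch {

    // Calls fetch, then keeps sleeping and calling it again while keepPolling returns true.
    // Returns the first value for which keepPolling returns false.
    static <T> T pollWhile(Supplier<T> fetch, Predicate<T> keepPolling, Duration interval) {
        T value = fetch.get();
        while (keepPolling.test(value)) {
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt status
                throw new IllegalStateException("Polling was interrupted", e);
            }
            value = fetch.get();
        }
        return value;
    }

    public static void main(String[] args) {
        // Simulated remote status: "in_progress" on the first two reads, then "completed".
        AtomicInteger calls = new AtomicInteger();
        Supplier<String> fetchStatus =
                () -> calls.incrementAndGet() < 3 ? "in_progress" : "completed";

        String status = pollWhile(fetchStatus, s -> s.equals("in_progress"), Duration.ofMillis(10));
        System.out.println(status + " after " + calls.get() + " calls"); // completed after 3 calls
    }
}
```

A production loop would usually also cap the number of attempts or the total waiting time; that is left out here to keep the sketch short.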

Example of calling the Audio service to convert text to speech. We request to receive the audio in binary format (InputStream):

var speechRequest = SpeechRequest.builder()
        .model("tts-1")
        .input("Hello world, welcome to the AI universe!")
        .voice(Voice.ALLOY)
        .responseFormat(SpeechResponseFormat.MP3)
        .speed(1.0)
        .build();
var futureSpeech = openAI.audios().speak(speechRequest);
var speechResponse = futureSpeech.join();
// speechFileName is the output path, defined elsewhere (for example "speech.mp3").
// try-with-resources closes the file even if writing fails.
try (var audioFile = new FileOutputStream(speechFileName)) {
    audioFile.write(speechResponse.readAllBytes());
    System.out.println(audioFile.getChannel().size() + " bytes");
} catch (IOException e) {
    e.printStackTrace();
}

Example of calling the Audio service to transcribe an audio file to text. We request the transcription in verbose JSON format, with both word-level and segment-level timestamps:

var audioRequest = TranscriptionRequest.builder()
        .file(Paths.get("hello_audio.mp3"))
        .model("whisper-1")
        .responseFormat(AudioResponseFormat.VERBOSE_JSON)
        .temperature(0.2)
        .timestampGranularity(TimestampGranularity.WORD)
        .timestampGranularity(TimestampGranularity.SEGMENT)
        .build();
var futureAudio = openAI.audios().transcribe(audioRequest);
var audioResponse = futureAudio.join();
System.out.println(audioResponse);

Example of calling the Image service to generate two images in response to our prompt. We request to receive the images' URLs and print them in the console:

var imageRequest = ImageRequest.builder()
        .prompt("A cartoon of a hummingbird that is flying around a flower.")
        .n(2)
        .size(Size.X256)
        .responseFormat(ImageResponseFormat.URL)
        .model("dall-e-2")
        .build();
var futureImage = openAI.images().create(imageRequest);
var imageResponse = futureImage.join();
imageResponse.stream().forEach(img -> System.out.println("\n" + img.getUrl()));

Example of calling the Chat Completion service to ask a question and wait for the full answer. We print it in the console:

var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .message(SystemMessage.of("You are an expert in AI."))
        .message(UserMessage.of("Write a technical article about ChatGPT, no more than 100 words."))
        .temperature(0.0)
        .maxCompletionTokens(300)
        .build();
var futureChat = openAI.chatCompletions().create(chatRequest);
var chatResponse = futureChat.join();
System.out.println(chatResponse.firstContent());

Example of calling the Chat Completion service to ask a question and receive the answer in partial messages (deltas). We print each delta as soon as it arrives:

var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .message(SystemMessage.of("You are an expert in AI."))
        .message(UserMessage.of("Write a technical article about ChatGPT, no more than 100 words."))
        .temperature(0.0)
        .maxCompletionTokens(300)
        .build();
var futureChat = openAI.chatCompletions().createStream(chatRequest);
var chatResponse = futureChat.join();
// Skip chunks that have no choices or no content, then print each delta as it arrives.
chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
        .map(Chat::firstContent)
        .forEach(System.out::print);
System.out.println();

This functionality empowers the Chat Completion service to solve specific problems in our context. In this example, we set up three functions and enter a prompt that will require calling one of them (the function product). To set up the functions, we use additional classes that implement the interface Functional. These classes define a field for each function argument, annotated to describe it, and each class must override the execute method with the function's logic. Note that we use the FunctionExecutor utility class to enroll the functions and to execute the function selected by the openai.chatCompletions() call:

public void demoCallChatWithFunctions() {
    var functionExecutor = new FunctionExecutor();
    functionExecutor.enrollFunction(
            FunctionDef.builder()
                    .name("get_weather")
                    .description("Get the current weather of a location")
                    .functionalClass(Weather.class)
                    .strict(Boolean.TRUE)
                    .build());
    functionExecutor.enrollFunction(
            FunctionDef.builder()
                    .name("product")
                    .description("Get the product of two numbers")
                    .functionalClass(Product.class)
                    .strict(Boolean.TRUE)
                    .build());
    functionExecutor.enrollFunction(
            FunctionDef.builder()
                    .name("run_alarm")
                    .description("Run an alarm")
                    .functionalClass(RunAlarm.class)
                    .strict(Boolean.TRUE)
                    .build());

    var messages = new ArrayList<ChatMessage>();
    messages.add(UserMessage.of("What is the product of 123 and 456?"));
    var chatRequest = ChatRequest.builder()
            .model("gpt-4o-mini")
            .messages(messages)
            .tools(functionExecutor.getToolFunctions())
            .build();
    var futureChat = openAI.chatCompletions().create(chatRequest);
    var chatResponse = futureChat.join();

    // The model answers with a tool call: run the selected function locally.
    var chatMessage = chatResponse.firstMessage();
    var chatToolCall = chatMessage.getToolCalls().get(0);
    var result = functionExecutor.execute(chatToolCall.getFunction());

    // Send the tool call and its result back so the model can write the final answer.
    messages.add(chatMessage);
    messages.add(ToolMessage.of(result.toString(), chatToolCall.getId()));
    chatRequest = ChatRequest.builder()
            .model("gpt-4o-mini")
            .messages(messages)
            .tools(functionExecutor.getToolFunctions())
            .build();
    futureChat = openAI.chatCompletions().create(chatRequest);
    chatResponse = futureChat.join();
    System.out.println(chatResponse.firstContent());
}

public static class Weather implements Functional {
    @JsonPropertyDescription("City and state, for example: León, Guanajuato")
    @JsonProperty(required = true)
    public String location;

    @JsonPropertyDescription("The temperature unit, can be 'celsius' or 'fahrenheit'")
    @JsonProperty(required = true)
    public String unit;

    @Override
    public Object execute() {
        return Math.random() * 45;
    }
}

public static class Product implements Functional {
    @JsonPropertyDescription("The multiplicand part of a product")
    @JsonProperty(required = true)
    public double multiplicand;

    @JsonPropertyDescription("The multiplier part of a product")
    @JsonProperty(required = true)
    public double multiplier;

    @Override
    public Object execute() {
        return multiplicand * multiplier;
    }
}

public static class RunAlarm implements Functional {
    @Override
    public Object execute() {
        return "DONE";
    }
}

Example of calling the Chat Completion service to allow the model to take in external images and answer questions about them:

var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .messages(List.of(
                UserMessage.of(List.of(
                        ContentPartText.of(
                                "What do you see in the image? Give in details in no more than 100 words."),
                        ContentPartImageUrl.of(ImageUrl.of(
                                "https://upload.wikimedia.org/wikipedia/commons/e/eb/Machu_Picchu%2C_Peru.jpg"))))))
        .temperature(0.0)
        .maxCompletionTokens(500)
        .build();
var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();
chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
        .map(Chat::firstContent)
        .forEach(System.out::print);
System.out.println();

Example of calling the Chat Completion service to allow the model to take in local images and answer questions about them (check the code of Base64Util in this repository):

var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .messages(List.of(
                UserMessage.of(List.of(
                        ContentPartText.of(
                                "What do you see in the image? Give in details in no more than 100 words."),
                        ContentPartImageUrl.of(ImageUrl.of(
                                Base64Util.encode("src/demo/resources/machupicchu.jpg", MediaType.IMAGE)))))))
        .temperature(0.0)
        .maxCompletionTokens(500)
        .build();
var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();
chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
        .map(Chat::firstContent)
        .forEach(System.out::print);
System.out.println();

Example of calling the Chat Completion service to generate a spoken audio response to a prompt, and to use audio inputs to prompt the model (check the code of Base64Util in this repository):

var messages = new ArrayList<ChatMessage>();
messages.add(SystemMessage.of("Respond in a short and concise way."));
messages.add(UserMessage.of(List.of(ContentPartInputAudio.of(InputAudio.of(
        Base64Util.encode("src/demo/resources/question1.mp3", null), InputAudioFormat.MP3)))));
var chatRequest = ChatRequest.builder()
        .model("gpt-4o-audio-preview")
        .modality(Modality.TEXT)
        .modality(Modality.AUDIO)
        .audio(Audio.of(Voice.ALLOY, AudioFormat.MP3))
        .messages(messages)
        .build();
var chatResponse = openAI.chatCompletions().create(chatRequest).join();
var audio = chatResponse.firstMessage().getAudio();
Base64Util.decode(audio.getData(), "src/demo/resources/answer1.mp3");
System.out.println("Answer 1: " + audio.getTranscript());

// Refer to the previous spoken answer by its audio id in the conversation history.
messages.add(AssistantMessage.builder().audioId(audio.getId()).build());
messages.add(UserMessage.of(List.of(ContentPartInputAudio.of(InputAudio.of(
        Base64Util.encode("src/demo/resources/question2.mp3", null), InputAudioFormat.MP3)))));
chatRequest = ChatRequest.builder()
        .model("gpt-4o-audio-preview")
        .modality(Modality.TEXT)
        .modality(Modality.AUDIO)
        .audio(Audio.of(Voice.ALLOY, AudioFormat.MP3))
        .messages(messages)
        .build();
chatResponse = openAI.chatCompletions().create(chatRequest).join();
audio = chatResponse.firstMessage().getAudio();
Base64Util.decode(audio.getData(), "src/demo/resources/answer2.mp3");
System.out.println("Answer 2: " + audio.getTranscript());

Example of calling the Chat Completion service to ensure the model will always generate responses that adhere to a JSON Schema defined through Java classes:

public void demoCallChatWithStructuredOutputs() {
    var chatRequest = ChatRequest.builder()
            .model("gpt-4o-mini")
            .message(SystemMessage
                    .of("You are a helpful math tutor. Guide the user through the solution step by step."))
            .message(UserMessage.of("How can I solve 8x + 7 = -23"))
            .responseFormat(ResponseFormat.jsonSchema(JsonSchema.builder()
                    .name("MathReasoning")
                    .schemaClass(MathReasoning.class)
                    .build()))
            .build();
    var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();
    chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
            .map(Chat::firstContent)
            .forEach(System.out::print);
    System.out.println();
}

public static class MathReasoning {
    public List<Step> steps;
    public String finalAnswer;

    public static class Step {
        public String explanation;
        public String output;
    }
}

This example simulates a conversation chat in the command console and demonstrates the usage of ChatCompletion with streaming and function calling.
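When streaming with function calling, each chunk carries at most a fragment of a tool call: the first fragment for a given index usually brings the call id and function name, and later fragments append pieces of the JSON arguments. The getResponse method in the demo reassembles these fragments. The sketch below shows the same idea in isolation with plain JDK types, keyed by tool-call index so it does not depend on fragments of different calls arriving strictly one after another. ToolCallDelta, AssembledToolCall, and ToolCallAccumulator are hypothetical stand-ins, not simple-openai classes, and the records need Java 16 or later.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical shape of one streamed tool-call fragment: id and name are usually set only
// on the first fragment of a call; argumentsPart holds a piece of the JSON arguments.
record ToolCallDelta(int index, String id, String name, String argumentsPart) {}

// Hypothetical fully assembled tool call.
record AssembledToolCall(String id, String name, String arguments) {}

class ToolCallAccumulator {

    // Mutable state for one tool call while its fragments arrive.
    private static final class Partial {
        String id;
        String name;
        final StringBuilder arguments = new StringBuilder();
    }

    // Keyed by tool-call index; LinkedHashMap keeps calls in first-seen order.
    private final Map<Integer, Partial> partials = new LinkedHashMap<>();

    void accept(ToolCallDelta delta) {
        Partial partial = partials.computeIfAbsent(delta.index(), i -> new Partial());
        if (delta.id() != null) {
            partial.id = delta.id();
        }
        if (delta.name() != null) {
            partial.name = delta.name();
        }
        if (delta.argumentsPart() != null) {
            partial.arguments.append(delta.argumentsPart());
        }
    }

    List<AssembledToolCall> result() {
        List<AssembledToolCall> calls = new ArrayList<>();
        for (Partial p : partials.values()) {
            calls.add(new AssembledToolCall(p.id, p.name, p.arguments.toString()));
        }
        return calls;
    }

    public static void main(String[] args) {
        var accumulator = new ToolCallAccumulator();
        // Made-up fragments for two calls, in the order a stream might deliver them.
        accumulator.accept(new ToolCallDelta(0, "call_1", "getCurrentTemperature", ""));
        accumulator.accept(new ToolCallDelta(0, null, null, "{\"location\":\"Lima, Peru\","));
        accumulator.accept(new ToolCallDelta(0, null, null, "\"unit\":\"celsius\"}"));
        accumulator.accept(new ToolCallDelta(1, "call_2", "getRainProbability", ""));
        accumulator.accept(new ToolCallDelta(1, null, null, "{\"location\":\"Lima, Peru\"}"));
        accumulator.result().forEach(System.out::println);
    }
}
```

Only once the finish reason arrives are the argument strings complete JSON that can be parsed and passed to the functions.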

You can see the full demo code as well as the results of running it:

package io.github.sashirestela.openai.demo;

import com.fasterxml.jackson.annotation.JsonProperty;
import com.fasterxml.jackson.annotation.JsonPropertyDescription;
import io.github.sashirestela.openai.SimpleOpenAI;
import io.github.sashirestela.openai.common.function.FunctionDef;
import io.github.sashirestela.openai.common.function.FunctionExecutor;
import io.github.sashirestela.openai.common.function.Functional;
import io.github.sashirestela.openai.common.tool.ToolCall;
import io.github.sashirestela.openai.domain.chat.Chat;
import io.github.sashirestela.openai.domain.chat.Chat.Choice;
import io.github.sashirestela.openai.domain.chat.ChatMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.AssistantMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.ResponseMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.ToolMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.UserMessage;
import io.github.sashirestela.openai.domain.chat.ChatRequest;

import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

public class ConversationDemo {

    private SimpleOpenAI openAI;
    private FunctionExecutor functionExecutor;

    private int indexTool;
    private StringBuilder content;
    private StringBuilder functionArgs;

    public ConversationDemo() {
        openAI = SimpleOpenAI.builder().apiKey(System.getenv("OPENAI_API_KEY")).build();
    }

    public void prepareConversation() {
        List<FunctionDef> functionList = new ArrayList<>();
        functionList.add(FunctionDef.builder()
                .name("getCurrentTemperature")
                .description("Get the current temperature for a specific location")
                .functionalClass(CurrentTemperature.class)
                .strict(Boolean.TRUE)
                .build());
        functionList.add(FunctionDef.builder()
                .name("getRainProbability")
                .description("Get the probability of rain for a specific location")
                .functionalClass(RainProbability.class)
                .strict(Boolean.TRUE)
                .build());
        functionExecutor = new FunctionExecutor(functionList);
    }

    public void runConversation() {
        List<ChatMessage> messages = new ArrayList<>();
        var myMessage = System.console().readLine("\nWelcome! Write any message: ");
        messages.add(UserMessage.of(myMessage));
        while (!myMessage.toLowerCase().equals("exit")) {
            var chatStream = openAI.chatCompletions()
                    .createStream(ChatRequest.builder()
                            .model("gpt-4o-mini")
                            .messages(messages)
                            .tools(functionExecutor.getToolFunctions())
                            .temperature(0.2)
                            .stream(true)
                            .build())
                    .join();

            indexTool = -1;
            content = new StringBuilder();
            functionArgs = new StringBuilder();

            var response = getResponse(chatStream);
            if (response.getMessage().getContent() != null) {
                messages.add(AssistantMessage.of(response.getMessage().getContent()));
            }
            if (response.getFinishReason().equals("tool_calls")) {
                // Run the requested functions and add their results; the loop then asks the model again.
                messages.add(response.getMessage());
                var toolCalls = response.getMessage().getToolCalls();
                var toolMessages = functionExecutor.executeAll(toolCalls,
                        (toolCallId, result) -> ToolMessage.of(result, toolCallId));
                messages.addAll(toolMessages);
            } else {
                myMessage = System.console().readLine("\n\nWrite any message (or write 'exit' to finish): ");
                messages.add(UserMessage.of(myMessage));
            }
        }
    }

    private Choice getResponse(Stream<Chat> chatStream) {
        var choice = new Choice();
        choice.setIndex(0);
        var chatMsgResponse = new ResponseMessage();
        List<ToolCall> toolCalls = new ArrayList<>();

        chatStream.forEach(responseChunk -> {
            var choices = responseChunk.getChoices();
            if (choices.size() > 0) {
                var innerChoice = choices.get(0);
                var delta = innerChoice.getMessage();
                if (delta.getRole() != null) {
                    chatMsgResponse.setRole(delta.getRole());
                }
                if (delta.getContent() != null && !delta.getContent().isEmpty()) {
                    content.append(delta.getContent());
                    System.out.print(delta.getContent());
                }
                if (delta.getToolCalls() != null) {
                    var toolCall = delta.getToolCalls().get(0);
                    if (toolCall.getIndex() != indexTool) {
                        // A new tool call starts: store the accumulated arguments of the previous one.
                        if (toolCalls.size() > 0) {
                            toolCalls.get(toolCalls.size() - 1).getFunction().setArguments(functionArgs.toString());
                            functionArgs = new StringBuilder();
                        }
                        toolCalls.add(toolCall);
                        indexTool++;
                    } else {
                        // Same tool call: append the next fragment of its JSON arguments.
                        functionArgs.append(toolCall.getFunction().getArguments());
                    }
                }
                if (innerChoice.getFinishReason() != null) {
                    if (content.length() > 0) {
                        chatMsgResponse.setContent(content.toString());
                    }
                    if (toolCalls.size() > 0) {
                        toolCalls.get(toolCalls.size() - 1).getFunction().setArguments(functionArgs.toString());
                        chatMsgResponse.setToolCalls(toolCalls);
                    }
                    choice.setMessage(chatMsgResponse);
                    choice.setFinishReason(innerChoice.getFinishReason());
                }
            }
        });
        return choice;
    }

    public static void main(String[] args) {
        var demo = new ConversationDemo();
        demo.prepareConversation();
        demo.runConversation();
    }

    public static class CurrentTemperature implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @JsonPropertyDescription("The temperature unit to use. Infer this from the user's location.")
        @JsonProperty(required = true)
        public String unit;

        @Override
        public Object execute() {
            double centigrades = Math.random() * (40.0 - 10.0) + 10.0;
            double fahrenheit = centigrades * 9.0 / 5.0 + 32.0;
            String shortUnit = unit.substring(0, 1).toUpperCase();
            return shortUnit.equals("C") ? centigrades : (shortUnit.equals("F") ? fahrenheit : 0.0);
        }
    }

    public static class RainProbability implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @Override
        public Object execute() {
            return Math.random() * 100;
        }
    }

}

Welcome! Write any message: Hi, can you help me with some quetions about Lima, Peru?

Of course! What would you like to know about Lima, Peru?

Write any message (or write 'exit' to finish): Tell me something brief about Lima Peru, then tell me how's the weather there right now. Finally give me three tips to travel there.

### Brief About Lima, Peru

Lima, the capital city of Peru, is a bustling metropolis that blends modernity with rich historical heritage. Founded by Spanish conquistador Francisco Pizarro in 1535, Lima is known for its colonial architecture, vibrant culture, and delicious cuisine, particularly its world-renowned ceviche. The city is also a gateway to exploring Peru's diverse landscapes, from the coastal deserts to the Andean highlands and the Amazon rainforest.

### Current Weather in Lima, Peru

I'll check the current temperature and the probability of rain in Lima for you.

### Current Weather in Lima, Peru

- **Temperature:** Approximately 11.8°C

- **Probability of Rain:** Approximately 97.8%

### Three Tips for Traveling to Lima, Peru

1. **Explore the Historic Center:**

- Visit the Plaza Mayor, the Government Palace, and the Cathedral of Lima. These landmarks offer a glimpse into Lima's colonial past and are UNESCO World Heritage Sites.

2. **Savor the Local Cuisine:**

- Don't miss out on trying ceviche, a traditional Peruvian dish made from fresh raw fish marinated in citrus juices. Also, explore the local markets and try other Peruvian delicacies.

3. **Visit the Coastal Districts:**

- Head to Miraflores and Barranco for stunning ocean views, vibrant nightlife, and cultural experiences. These districts are known for their beautiful parks, cliffs, and bohemian atmosphere.

Enjoy your trip to Lima! If you have any more questions, feel free to ask.

Write any message (or write 'exit' to finish): exit

This example simulates a conversation chat in the command console and demonstrates the usage of the latest Assistants API v2 features:

You can see the full demo code as well as the results of running it:

package io.github.sashirestela.openai.demo;

import com.fasterxml.jackson.annotation.JsonProperty;
import com.fasterxml.jackson.annotation.JsonPropertyDescription;
import io.github.sashirestela.cleverclient.Event;
import io.github.sashirestela.openai.SimpleOpenAI;
import io.github.sashirestela.openai.common.content.ContentPart.ContentPartTextAnnotation;
import io.github.sashirestela.openai.common.function.FunctionDef;
import io.github.sashirestela.openai.common.function.FunctionExecutor;
import io.github.sashirestela.openai.common.function.Functional;
import io.github.sashirestela.openai.domain.assistant.AssistantRequest;
import io.github.sashirestela.openai.domain.assistant.AssistantTool;
import io.github.sashirestela.openai.domain.assistant.ThreadMessageDelta;
import io.github.sashirestela.openai.domain.assistant.ThreadMessageRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadMessageRole;
import io.github.sashirestela.openai.domain.assistant.ThreadRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadRun;
import io.github.sashirestela.openai.domain.assistant.ThreadRun.RunStatus;
import io.github.sashirestela.openai.domain.assistant.ThreadRunRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadRunSubmitOutputRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadRunSubmitOutputRequest.ToolOutput;
import io.github.sashirestela.openai.domain.assistant.ToolResourceFull;
import io.github.sashirestela.openai.domain.assistant.ToolResourceFull.FileSearch;
import io.github.sashirestela.openai.domain.assistant.VectorStoreRequest;
import io.github.sashirestela.openai.domain.assistant.events.EventName;
import io.github.sashirestela.openai.domain.file.FileRequest;
import io.github.sashirestela.openai.domain.file.FileRequest.PurposeType;

import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

public class ConversationV2Demo {

    private SimpleOpenAI openAI;
    private String fileId;
    private String vectorStoreId;
    private FunctionExecutor functionExecutor;
    private String assistantId;
    private String threadId;

    public ConversationV2Demo() {
        openAI = SimpleOpenAI.builder().apiKey(System.getenv("OPENAI_API_KEY")).build();
    }

    public void prepareConversation() {
        List<FunctionDef> functionList = new ArrayList<>();
        functionList.add(FunctionDef.builder()
                .name("getCurrentTemperature")
                .description("Get the current temperature for a specific location")
                .functionalClass(CurrentTemperature.class)
                .strict(Boolean.TRUE)
                .build());
        functionList.add(FunctionDef.builder()
                .name("getRainProbability")
                .description("Get the probability of rain for a specific location")
                .functionalClass(RainProbability.class)
                .strict(Boolean.TRUE)
                .build());
        functionExecutor = new FunctionExecutor(functionList);

        var file = openAI.files()
                .create(FileRequest.builder()
                        .file(Paths.get("src/demo/resources/mistral-ai.txt"))
                        .purpose(PurposeType.ASSISTANTS)
                        .build())
                .join();
        fileId = file.getId();
        System.out.println("File was created with id: " + fileId);

        // An AndPoll() method is synchronous: it returns the object directly, with no join().
        var vectorStore = openAI.vectorStores()
                .createAndPoll(VectorStoreRequest.builder()
                        .fileId(fileId)
                        .build());
        vectorStoreId = vectorStore.getId();
        System.out.println("Vector Store was created with id: " + vectorStoreId);

        var assistant = openAI.assistants()
                .create(AssistantRequest.builder()
                        .name("World Assistant")
                        .model("gpt-4o")
                        .instructions("You are a skilled tutor on geo-politic topics.")
                        .tools(functionExecutor.getToolFunctions())
                        .tool(AssistantTool.fileSearch())
                        .toolResources(ToolResourceFull.builder()
                                .fileSearch(FileSearch.builder()
                                        .vectorStoreId(vectorStoreId)
                                        .build())
                                .build())
                        .temperature(0.2)
                        .build())
                .join();
        assistantId = assistant.getId();
        System.out.println("Assistant was created with id: " + assistantId);

        var thread = openAI.threads().create(ThreadRequest.builder().build()).join();
        threadId = thread.getId();
        System.out.println("Thread was created with id: " + threadId);
        System.out.println();
    }

    public void runConversation() {
        var myMessage = System.console().readLine("\nWelcome! Write any message: ");
        while (!myMessage.toLowerCase().equals("exit")) {
            openAI.threadMessages()
                    .create(threadId, ThreadMessageRequest.builder()
                            .role(ThreadMessageRole.USER)
                            .content(myMessage)
                            .build())
                    .join();
            var runStream = openAI.threadRuns()
                    .createStream(threadId, ThreadRunRequest.builder()
                            .assistantId(assistantId)
                            .parallelToolCalls(Boolean.FALSE)
                            .build())
                    .join();
            handleRunEvents(runStream);
            myMessage = System.console().readLine("\nWrite any message (or write 'exit' to finish): ");
        }
    }

    private void handleRunEvents(Stream<Event> runStream) {
        runStream.forEach(event -> {
            switch (event.getName()) {
                case EventName.THREAD_RUN_CREATED:
                case EventName.THREAD_RUN_COMPLETED:
                case EventName.THREAD_RUN_REQUIRES_ACTION:
                    var run = (ThreadRun) event.getData();
                    System.out.println("=====>> Thread Run: id=" + run.getId() + ", status=" + run.getStatus());
                    if (run.getStatus().equals(RunStatus.REQUIRES_ACTION)) {
                        // Execute the requested functions, submit their outputs, and handle the resumed run's events.
                        var toolCalls = run.getRequiredAction().getSubmitToolOutputs().getToolCalls();
                        var toolOutputs = functionExecutor.executeAll(toolCalls,
                                (toolCallId, result) -> ToolOutput.builder()
                                        .toolCallId(toolCallId)
                                        .output(result)
                                        .build());
                        var runSubmitToolStream = openAI.threadRuns()
                                .submitToolOutputStream(threadId, run.getId(), ThreadRunSubmitOutputRequest.builder()
                                        .toolOutputs(toolOutputs)
                                        .stream(true)
                                        .build())
                                .join();
                        handleRunEvents(runSubmitToolStream);
                    }
                    break;
                case EventName.THREAD_MESSAGE_DELTA:
                    var msgDelta = (ThreadMessageDelta) event.getData();
                    var content = msgDelta.getDelta().getContent().get(0);
                    if (content instanceof ContentPartTextAnnotation) {
                        var textContent = (ContentPartTextAnnotation) content;
                        System.out.print(textContent.getText().getValue());
                    }
                    break;
                case EventName.THREAD_MESSAGE_COMPLETED:
                    System.out.println();
                    break;
                default:
                    break;
            }
        });
    }

    public void cleanConversation() {
        var deletedFile = openAI.files().delete(fileId).join();
        var deletedVectorStore = openAI.vectorStores().delete(vectorStoreId).join();
        var deletedAssistant = openAI.assistants().delete(assistantId).join();
        var deletedThread = openAI.threads().delete(threadId).join();
        System.out.println("File was deleted: " + deletedFile.getDeleted());
        System.out.println("Vector Store was deleted: " + deletedVectorStore.getDeleted());
        System.out.println("Assistant was deleted: " + deletedAssistant.getDeleted());
        System.out.println("Thread was deleted: " + deletedThread.getDeleted());
    }

    public static void main(String[] args) {
        var demo = new ConversationV2Demo();
        demo.prepareConversation();
        try {
            demo.runConversation();
        } finally {
            // Delete the remote file, vector store, assistant, and thread even if the conversation fails.
            demo.cleanConversation();
        }
    }

    public static class CurrentTemperature implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @JsonPropertyDescription("The temperature unit to use. Infer this from the user's location.")
        @JsonProperty(required = true)
        public String unit;

        @Override
        public Object execute() {
            double centigrades = Math.random() * (40.0 - 10.0) + 10.0;
            double fahrenheit = centigrades * 9.0 / 5.0 + 32.0;
            String shortUnit = unit.substring(0, 1).toUpperCase();
            return shortUnit.equals("C") ? centigrades : (shortUnit.equals("F") ? fahrenheit : 0.0);
        }
    }

    public static class RainProbability implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @Override
        public Object execute() {
            return Math.random() * 100;
        }
    }

}

File was created with id: file-oDFIF7o4SwuhpwBNnFIILhMK

Vector Store was created with id: vs_lG1oJmF2s5wLhqHUSeJpELMr

Assistant was created with id: asst_TYS5cZ05697tyn3yuhDrCCIv

Thread was created with id: thread_33n258gFVhZVIp88sQKuqMvg

Welcome! Write any message: Hello

=====>> Thread Run: id=run_nihN6dY0uyudsORg4xyUvQ5l, status=QUEUED

Hello! How can I assist you today?

=====>> Thread Run: id=run_nihN6dY0uyudsORg4xyUvQ5l, status=COMPLETED

Write any message (or write 'exit' to finish): Tell me something brief about Lima Peru, then tell me how's the weather there right now. Finally give me three tips to travel there.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=QUEUED

Lima, the capital city of Peru, is located on the country's arid Pacific coast. It's known for its vibrant culinary scene, rich history, and as a cultural hub with numerous museums, colonial architecture, and remnants of pre-Columbian civilizations. This bustling metropolis serves as a key gateway to visiting Peru’s more famous attractions, such as Machu Picchu and the Amazon rainforest.

Let me find the current weather conditions in Lima for you, and then I'll provide three travel tips.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=REQUIRES_ACTION

### Current Weather in Lima, Peru:

- **Temperature:** 12.8°C

- **Rain Probability:** 82.7%

### Three Travel Tips for Lima, Peru:

1. **Best Time to Visit:** Plan your trip during the dry season, from May to September, which offers clearer skies and milder temperatures. This period is particularly suitable for outdoor activities and exploring the city comfortably.

2. **Local Cuisine:** Don't miss out on tasting the local Peruvian dishes, particularly the ceviche, which is renowned worldwide. Lima is also known as the gastronomic capital of South America, so indulge in the wide variety of dishes available.

3. **Cultural Attractions:** Allocate enough time to visit Lima's rich array of museums, such as the Larco Museum, which showcases pre-Columbian art, and the historical center which is a UNESCO World Heritage Site. Moreover, exploring districts like Miraflores and Barranco can provide insights into the modern and bohemian sides of the city.

Enjoy planning your trip to Lima! If you need more information or help, feel free to ask.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=COMPLETED

Write any message (or write 'exit' to finish): Tell me something about the Mistral company

=====>> Thread Run: id=run_5u0t8kDQy87p5ouaTRXsCG8m, status=QUEUED

Mistral AI is a French company that specializes in selling artificial intelligence products. It was established in April 2023 by former employees of Meta Platforms and Google DeepMind. Notably, the company secured a significant amount of funding, raising €385 million in October 2023, and achieved a valuation exceeding $2 billion by December of the same year.

The prime focus of Mistral AI is on developing and producing open-source large language models. This approach underscores the foundational role of open-source software as a counter to proprietary models. As of March 2024, Mistral AI has published two models, which are available in terms of weights, while three more models—categorized as Small, Medium, and Large—are accessible only through an API[1].

=====>> Thread Run: id=run_5u0t8kDQy87p5ouaTRXsCG8m, status=COMPLETED

Write any message (or write 'exit' to finish): exit

File was deleted: true

Vector Store was deleted: true

Assistant was deleted: true

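Thread was deleted: true

In this example, you can see the code to establish a speech-to-speech conversation between you and the model, using your microphone and your speaker. See the full code in: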

RealtimeDemo.java
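The demo relies on your microphone and speaker, which in Java are reached through the javax.sound.sampled API. As background, the standalone sketch below only describes the audio format and computes buffer sizes; it does not call OpenAI or simple-openai. It assumes the Realtime API's pcm16 format is 16-bit signed PCM at 24 kHz, mono, little-endian, as OpenAI documents at the time of writing; check the demo and the OpenAI documentation before relying on these values. RealtimeAudioFormatSketch is a hypothetical name.

```java
import javax.sound.sampled.AudioFormat;

// Hypothetical helper: describes the assumed Realtime "pcm16" audio layout.
class RealtimeAudioFormatSketch {

    // Assumption: 24 kHz sample rate, as documented by OpenAI for pcm16.
    static final float SAMPLE_RATE = 24_000f;

    static AudioFormat pcm16Format() {
        // AudioFormat(sampleRate, sampleSizeInBits, channels, signed, bigEndian)
        return new AudioFormat(SAMPLE_RATE, 16, 1, true, false);
    }

    // Number of bytes holding `millis` milliseconds of audio in the given format.
    static int bytesFor(AudioFormat format, int millis) {
        long frames = Math.round(format.getFrameRate() * millis / 1000.0);
        return Math.toIntExact(frames * format.getFrameSize());
    }

    public static void main(String[] args) {
        AudioFormat format = pcm16Format();
        System.out.println(format);
        System.out.println("100 ms = " + bytesFor(format, 100) + " bytes"); // 100 ms = 4800 bytes
        // A microphone line could then be opened with AudioSystem.getTargetDataLine(format),
        // which requires real audio hardware and is therefore not done here.
    }
}
```

Reading the microphone in fixed-size chunks (for example 100 ms, that is 4800 bytes under these assumptions) keeps latency predictable when streaming audio to the model.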

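Simple-OpenAI can be used with additional providers that are compatible with the OpenAI API. At the moment, the following additional providers are supported: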

Azure OpenAI is supported by Simple-OpenAI. To start using this provider, use the class SimpleOpenAIAzure, which extends the class BaseSimpleOpenAI.

var openai = SimpleOpenAIAzure.builder()
        .apiKey(System.getenv("AZURE_OPENAI_API_KEY"))
        .baseUrl(System.getenv("AZURE_OPENAI_BASE_URL")) // Including resourceName and deploymentId
        .apiVersion(System.getenv("AZURE_OPENAI_API_VERSION"))
        //.httpClient(customHttpClient) Optionally you could pass a custom HttpClient
        .build();

Azure OpenAI is powered by a diverse set of models with different capabilities, and it requires a separate deployment for each model. Model availability varies by region and cloud. See more details about the Azure OpenAI models.
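Because each model needs its own deployment, the AZURE_OPENAI_BASE_URL value passed to SimpleOpenAIAzure carries both the resource name and the deployment name, following the pattern https://YOUR_RESOURCE_NAME.openai.azure.com/openai/deployments/YOUR_DEPLOYMENT_NAME used in the Azure demo instructions of this README. The helper below is a hypothetical sketch (AzureBaseUrlSketch is not a library class) that assembles that value and rejects names containing a slash.

```java
import java.net.URI;

// Hypothetical helper: builds the per-deployment base URL for SimpleOpenAIAzure.
class AzureBaseUrlSketch {

    // Pattern used in this README: https://{resource}.openai.azure.com/openai/deployments/{deployment}
    static String baseUrl(String resourceName, String deploymentName) {
        requireName(resourceName, "resourceName");
        requireName(deploymentName, "deploymentName");
        String url = "https://" + resourceName + ".openai.azure.com/openai/deployments/" + deploymentName;
        URI.create(url); // throws IllegalArgumentException if the result is not a valid URI
        return url;
    }

    private static void requireName(String value, String label) {
        if (value == null || value.isBlank() || value.contains("/")) {
            throw new IllegalArgumentException(label + " must be a non-empty name without '/'");
        }
    }

    public static void main(String[] args) {
        // Example names are placeholders.
        System.out.println(baseUrl("my-resource", "my-deployment"));
        // https://my-resource.openai.azure.com/openai/deployments/my-deployment
    }
}
```

The result would be passed to .baseUrl(...) in place of the environment variable when you prefer to keep the resource and deployment names separately.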

Currently, only the following services are supported:

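- chatCompletionService (text generation, streaming, function calling, vision, structured outputs)
- fileService (upload files)

Anyscale is supported by Simple-OpenAI. To start using this provider, use the class SimpleOpenAIAnyscale, which extends the class BaseSimpleOpenAI.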

var openai = SimpleOpenAIAnyscale.builder()
        .apiKey(System.getenv("ANYSCALE_API_KEY"))
        //.baseUrl(customUrl) Optionally you could pass a custom baseUrl
        //.httpClient(customHttpClient) Optionally you could pass a custom HttpClient
        .build();

Currently, only the chatCompletionService service is supported. It was tested with the Mistral model.

Examples for each OpenAI service have been created in the folder demo, and you can follow these steps to run them:

Clone this repository:

git clone https://github.com/sashirestela/simple-openai.git

cd simple-openai

Build the project:

mvn clean install

Create an environment variable for your OpenAI API key:

export OPENAI_API_KEY=<here goes your api key>

Grant execution permission to the script file:

chmod +x rundemo.sh

Run the examples:

./rundemo.sh <demo> [debug]

Where:

<demo> is mandatory and must be one of the values:

[debug] is optional and creates the demo.log file, where you can see the log details of each execution.

For example, to run the Chat demo with a log file: ./rundemo.sh Chat debug

Instructions for the Azure OpenAI demo

The recommended models to run this demo are:

See the Azure OpenAI documentation for more details. Once you have the deployment URL and the API key, set the following environment variables:

export AZURE_OPENAI_BASE_URL=<https://YOUR_RESOURCE_NAME.openai.azure.com/openai/deployments/YOUR_DEPLOYMENT_NAME>

export AZURE_OPENAI_API_KEY=<here goes your regional API key>

export AZURE_OPENAI_API_VERSION=<for example: 2024-08-01-preview>
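If any of these variables is unset, System.getenv returns null and the problem only surfaces later as a failed request. Here is a minimal fail-fast check, sketched with a hypothetical EnvCheck class (not part of simple-openai). It takes the environment as a Map so it can be tested without touching the real process environment.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: reports required environment variables that are missing or blank.
class EnvCheck {

    static final List<String> AZURE_VARIABLES = List.of(
            "AZURE_OPENAI_BASE_URL", "AZURE_OPENAI_API_KEY", "AZURE_OPENAI_API_VERSION");

    // Returns the names from `required` that are absent or blank in `env`, in order.
    static List<String> missing(Map<String, String> env, List<String> required) {
        return required.stream()
                .filter(name -> env.get(name) == null || env.get(name).isBlank())
                .collect(Collectors.toList());
    }

    // Throws one exception that lists every missing variable.
    static void requireAll(Map<String, String> env, List<String> required) {
        List<String> absent = missing(env, required);
        if (!absent.isEmpty()) {
            throw new IllegalStateException("Missing environment variables: " + String.join(", ", absent));
        }
    }

    public static void main(String[] args) {
        List<String> absent = missing(System.getenv(), AZURE_VARIABLES);
        System.out.println(absent.isEmpty()
                ? "All Azure OpenAI variables are set."
                : "Missing: " + absent);
    }
}
```

In an application you could call EnvCheck.requireAll(System.getenv(), EnvCheck.AZURE_VARIABLES) before building SimpleOpenAIAzure, so a misconfiguration is reported once, with every missing name.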

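Note that some models may not be available in all regions. If you have trouble finding a model, try a different region. The API keys are regional (per cognitive account). If you provision multiple models in the same region, they will share the same API key (actually, there are two keys per region to support alternate key rotation).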

Please read our Contributing guide to learn how you can contribute to this project.

Simple-OpenAI is licensed under the MIT License. See the LICENSE file for more information.

List of the main users of our library:

Thank you for using Simple-OpenAI. If you find this project valuable, there are a few ways you can show us your love, preferably all of them: