Cohere For AI, the non-profit research lab of AI company Cohere, has released Aya Vision, a multimodal AI model. The release has drawn wide attention in the industry, and Cohere describes the model as one of the most advanced of its kind currently available.

Aya Vision is designed to be versatile. It can write image captions, answer questions about photos, translate text, and generate summaries in 23 major languages. Cohere is also making the model available for free through WhatsApp, with the stated aim of making it easier for researchers around the world to access and use.

In its official blog post, Cohere noted that despite rapid progress in AI, models still perform markedly worse on multilingual and multimodal tasks. Aya Vision was developed to help close this gap and push AI forward in cross-lingual and cross-modal settings.

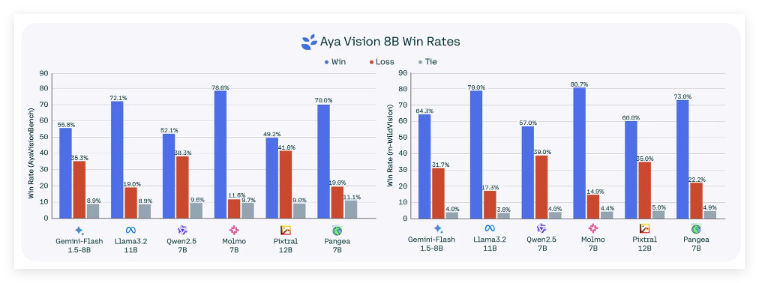

The model comes in two versions: Aya Vision 32B and Aya Vision 8B. According to Cohere, Aya Vision 32B outperformed larger competing models on several visual understanding benchmarks, including Meta's Llama 3.2 90B Vision. The smaller Aya Vision 8B also did well, scoring higher on some evaluations than models ten times its size.

Both models are available on the AI development platform Hugging Face under a Creative Commons 4.0 license. Use is subject to Cohere's acceptable use terms and is limited to non-commercial applications.

For training, Cohere drew on a diverse pool of English datasets, which it translated into other languages and used to create synthetic annotations. Synthetic annotations are labels generated by AI rather than by humans. The approach has known limitations, but it has been adopted by many leading AI developers, including OpenAI, as a way to improve model performance.

Cohere said that training on synthetic annotations allowed it to use fewer resources while still achieving competitive performance, which the company presents as evidence of its focus on both innovation and efficiency.

To support further research, Cohere has also released AyaVisionBench, a new benchmark suite. It is designed to evaluate how well models handle combined vision-language tasks, such as identifying differences between two images and converting screenshots into code.

The AI industry is currently facing what some call an "evaluation crisis," in which popular benchmarks are increasingly seen as poor indicators of how models perform on the tasks users actually care about. Against this backdrop, AyaVisionBench offers a broader and more challenging framework for evaluating models and may encourage new evaluation standards across the industry.

Official blog: https://cohere.com/blog/aya-vision