With the rapid development of multimodal large language models (MLLMs), image- and video-related tasks have seen unprecedented breakthroughs, especially in visual question answering, narrative generation and interactive editing. Despite this progress, fine-grained understanding of video content remains an open problem. It requires not only pixel-level segmentation and tracking guided by language descriptions, but also more complex tasks such as visual question answering about specific visual prompts in a video.

Although current state-of-the-art video perception models perform well at segmentation and tracking, they lack open-ended language understanding and dialogue capabilities. Conversely, video MLLMs perform well at video comprehension and question answering but cannot handle perception tasks or visual prompts. These complementary gaps keep both kinds of model out of a wider range of scenarios.

Existing solutions fall mainly into two categories: multimodal large language models (MLLMs) and referring segmentation systems. MLLMs initially focused on improving multimodal fusion methods and feature extractors, then gradually converged on frameworks that apply instruction tuning to LLMs, such as LLaVA. More recently, researchers have tried to unify image, video, and multi-image analysis in a single framework, as in LLaVA-OneVision. Meanwhile, referring segmentation systems have evolved from basic fusion modules to integrated segmentation and tracking. However, neither line of work fully integrates perception with language comprehension.

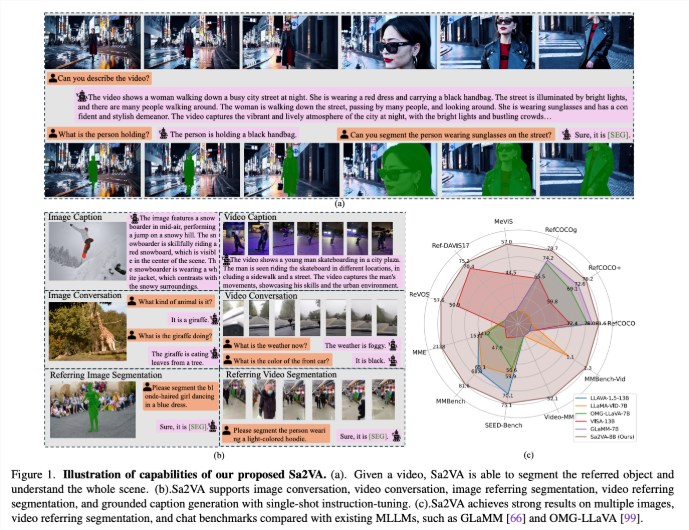

Researchers from UC Merced, ByteDance Seed Team, Wuhan University and Peking University have proposed Sa2VA, a unified model for dense grounded understanding of images and videos. It overcomes the limitations of existing multimodal large language models by supporting a wide range of image and video tasks with only minimal one-shot instruction tuning.

Sa2VA integrates SAM-2 with LLaVA, unifying text, images, and video in a shared LLM token space. The researchers also introduce Ref-SAV, an automatically annotated dataset containing more than 72K object expressions in complex video scenes, together with 2K manually verified video objects that serve as a robust benchmark.
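
To make "a shared LLM token space" concrete, here is a minimal sketch, assuming a LLaVA-style pipeline in which vision-encoder features are linearly projected to the LLM's embedding width and spliced into the text sequence. The `<video>` placeholder, `project`, `build_token_sequence` and the toy embedding are hypothetical illustrations, not Sa2VA's actual code or tokenizer; real models use learned weights and much larger dimensions.

```python
from typing import Callable

Vector = list[float]
Matrix = list[list[float]]


def project(feature: Vector, weight: Matrix) -> Vector:
    """Toy linear projector: maps one vision-encoder feature to the LLM width."""
    return [sum(w * x for w, x in zip(row, feature)) for row in weight]


def build_token_sequence(
    text_tokens: list[str],
    frames: list[list[Vector]],
    weight: Matrix,
    embed: Callable[[str], Vector],
) -> list[Vector]:
    """Interleave text embeddings and projected visual tokens into one sequence.

    The hypothetical "<video>" placeholder is expanded into the projected patch
    features of every frame; a single image is treated as a one-frame video.
    """
    sequence: list[Vector] = []
    for token in text_tokens:
        if token == "<video>":
            for frame in frames:
                sequence.extend(project(patch, weight) for patch in frame)
        else:
            sequence.append(embed(token))
    return sequence


def toy_embed(token: str) -> Vector:
    """Hypothetical text embedding: token length in the first dimension."""
    return [float(len(token)), 0.0]


# 2 frames x 3 patches of 3-dim features, projected into a 2-dim "LLM" space.
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
frames = [[[1.0, 2.0, 3.0]] * 3, [[0.0, 1.0, 0.0]] * 3]
seq = build_token_sequence(["Describe", "<video>", "."], frames, weight, toy_embed)
print(len(seq))  # 1 text + 6 visual + 1 text = 8 tokens
```

Because every modality ends up as vectors of the same width in one sequence, the same LLM can attend over text, image and video tokens without modality-specific branches.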

Sa2VA's architecture consists of two parts, a LLaVA-like model and SAM-2, connected through a novel decoupled design. The LLaVA-like component includes a visual encoder that processes images and videos, a visual projection layer, and an LLM that predicts text tokens. SAM-2 is attached alongside the pre-trained LLaVA-like model rather than exchanging tokens with it directly, which keeps computation efficient and lets different pre-trained MLLMs be plugged in or swapped out.
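
The decoupled data flow can be sketched as follows. This is a toy under stated assumptions: the LLM is assumed to emit a special `[SEG]` token whose hidden state prompts the mask decoder (a pattern common in grounding MLLMs that the article itself does not describe), and `GroundingMLLM`, `ToyMLLM`, `toy_mask_decoder` and `answer_and_segment` are hypothetical stand-ins, not the real LLaVA or SAM-2 APIs. The point it illustrates is the one-way interface: any model that satisfies the protocol can be plugged in, and mask outputs never flow back into the LLM.

```python
from typing import Optional, Protocol

Vector = list[float]
FeatureMap = list[list[Vector]]  # H x W grid of per-pixel features for one frame
Mask = list[list[bool]]


class GroundingMLLM(Protocol):
    """Hypothetical interface for the LLaVA-like part (encoder, projector, LLM)."""

    def generate(self, frames: list[FeatureMap], prompt: str) -> tuple[str, Optional[Vector]]:
        """Return the text answer and, when a mask is requested, the hidden
        state of a hypothetical [SEG] token (None otherwise)."""
        ...


class ToyMLLM:
    """Stub LLM that emits a fixed [SEG] embedding whenever asked to segment."""

    def __init__(self, seg_embedding: Vector) -> None:
        self.seg_embedding = seg_embedding

    def generate(self, frames: list[FeatureMap], prompt: str) -> tuple[str, Optional[Vector]]:
        if "segment" in prompt.lower():
            return "Sure, [SEG].", self.seg_embedding
        return "A cat is walking.", None


def toy_mask_decoder(features: FeatureMap, prompt_embedding: Vector) -> Mask:
    """Stand-in for SAM-2's mask decoder: a pixel is foreground when its
    feature has a positive dot product with the prompt embedding."""
    return [
        [sum(f * p for f, p in zip(pixel, prompt_embedding)) > 0.0 for pixel in row]
        for row in features
    ]


def answer_and_segment(
    mllm: GroundingMLLM, frames: list[FeatureMap], prompt: str
) -> tuple[str, list[Mask]]:
    text, seg_embedding = mllm.generate(frames, prompt)
    if seg_embedding is None:
        return text, []
    # One-way flow: the [SEG] embedding prompts the decoder on every frame, and
    # decoder outputs are never fed back into the LLM. (Real SAM-2 would also
    # propagate masks through its memory; this toy decodes frames independently.)
    return text, [toy_mask_decoder(frame, seg_embedding) for frame in frames]


frame = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0], [-1.0, 0.0]]]
text, masks = answer_and_segment(ToyMLLM([1.0, -0.5]), [frame], "Please segment the cat.")
print(text, masks)  # Sure, [SEG]. [[[True, False], [True, False]]]
```

Swapping `ToyMLLM` for any other object with a compatible `generate` method leaves the mask path untouched, which is the practical benefit of keeping the two components decoupled.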

The results show that Sa2VA achieves state-of-the-art performance on referring segmentation: the Sa2VA-8B model's cIoU scores on RefCOCO, RefCOCO+ and RefCOCOg surpass those of previous systems such as GLaMM-7B. For dialogue, Sa2VA scores 2128, 81.6 and 75.1 on MME, MMBench and SEED-Bench, respectively.
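
For readers unfamiliar with the metric, cIoU (cumulative IoU) is commonly computed on RefCOCO-style benchmarks as the total intersection divided by the total union over the whole evaluation set, rather than as an average of per-sample IoUs. The sketch below contrasts the two on made-up masks; it is an illustration of the metric, not Sa2VA's evaluation code, and official scripts may differ in details such as how empty masks are handled.

```python
Mask = list[list[bool]]


def intersection_union(pred: Mask, gt: Mask) -> tuple[int, int]:
    """Count intersecting and union pixels of two same-sized binary masks."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += int(p and g)
            union += int(p or g)
    return inter, union


def ciou(preds: list[Mask], gts: list[Mask]) -> float:
    """Cumulative IoU: total intersection over total union across the dataset."""
    total_inter = total_union = 0
    for pred, gt in zip(preds, gts):
        inter, union = intersection_union(pred, gt)
        total_inter += inter
        total_union += union
    return total_inter / total_union if total_union else 0.0


def miou(preds: list[Mask], gts: list[Mask]) -> float:
    """Mean of per-sample IoUs (an empty-vs-empty pair counts as 1.0 here)."""
    scores = []
    for pred, gt in zip(preds, gts):
        inter, union = intersection_union(pred, gt)
        scores.append(inter / union if union else 1.0)
    return sum(scores) / len(scores)


# A large object segmented perfectly and a one-pixel object missed entirely.
big = [[True] * 4 for _ in range(4)]
small_gt = [[True, False], [False, False]]
small_pred = [[False, True], [False, False]]
preds, gts = [big, small_pred], [big, small_gt]
print(ciou(preds, gts), miou(preds, gts))  # cIoU = 16/18 (about 0.889), mIoU = 0.5
```

Because cIoU pools pixels, large objects dominate the score, so a model can miss small targets and still post a high cIoU; this is worth keeping in mind when comparing referring segmentation results.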

Sa2VA also significantly outperforms the previous state-of-the-art model, VISA-13B, on video benchmarks, demonstrating its effectiveness on both image and video comprehension tasks.

Paper: https://arxiv.org/abs/2501.04001

Model: https://huggingface.co/collections/ByteDance/sa2va-model-zoo-677e3084d71b5f108d00e093

Key points:

Sa2VA is a novel unified framework for dense grounded understanding of images and videos that overcomes the limitations of existing multimodal models.

The model achieves state-of-the-art results on several benchmarks, covering both referring segmentation and dialogue.

Sa2VA's decoupled design integrates visual perception and language comprehension, supporting a wide range of image and video tasks.