Meta AI, together with researchers from the University of California, Berkeley and New York University, has developed a new approach called Thought Preference Optimization (TPO) that aims to improve the response quality of large language models (LLMs). Unlike traditional methods that focus only on the final answer, TPO lets the model think internally before generating the answer, resulting in more accurate and coherent responses. This refinement of Chain-of-Thought (CoT) reasoning addresses two shortcomings of earlier CoT approaches, reduced accuracy and difficult training, by optimizing and streamlining the model's internal thinking process. The result is higher-quality answers and strong performance on several benchmarks.

The technique builds on Chain-of-Thought (CoT) reasoning. During training, it encourages the model to "think" before responding, which helps it develop a more systematic internal thinking process. Earlier approaches that simply prompted for CoT sometimes reduced accuracy, and training was difficult because there were no explicit thinking steps to learn from. TPO addresses these limitations by letting the model optimize and streamline its own thinking process, and the intermediate thinking steps are not shown to the user.

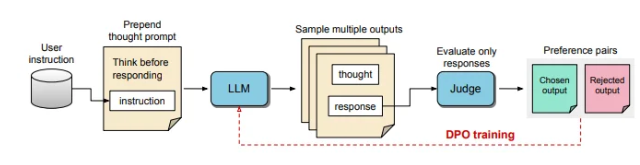

In TPO, the large language model is first prompted to generate several outputs for each instruction, each consisting of a thought process followed by a final response. An evaluation model then scores the sampled outputs to identify the best and worst responses. These are used as the chosen and rejected examples for Direct Preference Optimization (DPO), and repeating this training loop improves the model's ability to generate relevant, high-quality responses.
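The pair-selection step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `build_preference_pair` and `toy_length_judge` are hypothetical names, and the toy judge (which simply prefers longer text) stands in for the LLM-based evaluation model.

```python
from typing import Callable, Dict, List, Optional


def build_preference_pair(
    prompt: str,
    outputs: List[str],
    judge: Callable[[str, str], float],
) -> Optional[Dict[str, str]]:
    """Score each sampled output and keep the best and worst as a DPO pair.

    Returns None when all scores tie, because a tie carries no preference signal.
    """
    if len(outputs) < 2:
        raise ValueError("need at least two sampled outputs to form a pair")
    scores = [judge(prompt, out) for out in outputs]
    best = max(range(len(outputs)), key=scores.__getitem__)
    worst = min(range(len(outputs)), key=scores.__getitem__)
    if scores[best] == scores[worst]:
        return None
    return {"prompt": prompt, "chosen": outputs[best], "rejected": outputs[worst]}


def toy_length_judge(prompt: str, output: str) -> float:
    """Hypothetical stand-in for the evaluation model: longer text scores higher."""
    return float(len(output))


# Assumption: in practice these would be outputs sampled from the model.
samples = ["ok", "a detailed answer", "short"]
pair = build_preference_pair("Explain DPO.", samples, toy_length_judge)
print(pair)
```

In an iterative setup, pairs collected this way for many prompts would form the DPO training set for one round, and the updated model would then produce the samples for the next round.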

The training prompts are modified to encourage the model to think internally before responding. Only the final response is scored by the LLM-based evaluation model, so the model is rewarded solely for the quality of its response and the hidden thinking steps are never judged directly. TPO then applies Direct Preference Optimization to chosen and rejected outputs that include the hidden thoughts, and refines the model's internal process further over multiple training iterations.
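To make the two ideas in this paragraph concrete, here is a hedged Python sketch. It assumes a hypothetical marker string, `RESPONSE_MARKER`, separates the hidden thought from the visible response (the actual prompt format may differ), and it uses the standard single-pair DPO loss with an assumed `beta` of 0.1. The log-probabilities would come from the policy and a frozen reference model, computed over the full output including the thought.

```python
import math
from typing import Tuple

# Hypothetical marker; the real prompt template may use different wording.
RESPONSE_MARKER = "Here is my response:"


def split_thought(output: str, marker: str = RESPONSE_MARKER) -> Tuple[str, str]:
    """Return (thought, response). Only the response is passed to the judge."""
    thought, sep, response = output.partition(marker)
    if not sep:
        # No marker found: treat the whole output as the response.
        return "", output.strip()
    return thought.strip(), response.strip()


def dpo_loss(
    policy_logp_chosen: float,
    policy_logp_rejected: float,
    ref_logp_chosen: float,
    ref_logp_rejected: float,
    beta: float = 0.1,  # assumed value, not taken from the article
) -> float:
    """Standard DPO loss for one pair: -log(sigmoid(beta * margin))."""
    margin = (policy_logp_chosen - ref_logp_chosen) - (
        policy_logp_rejected - ref_logp_rejected
    )
    x = beta * margin
    # -log(sigmoid(x)) == softplus(-x), written in a numerically stable form.
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))


thought, response = split_thought("Let me plan first. Here is my response: Hello!")
print(repr(thought), repr(response))
print(dpo_loss(-10.0, -12.0, -11.0, -11.0))
```

Because the loss is computed on whole outputs while the judge only ever sees `response`, the thoughts are shaped indirectly: thoughts that lead to better-rated responses end up on the chosen side of the pair.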

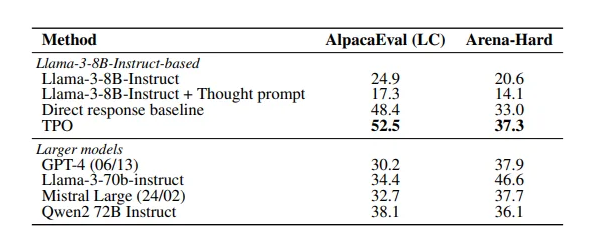

The study reports that TPO performed well on multiple benchmarks and outperformed a variety of existing models. The approach is not limited to logical and mathematical tasks; it also shows potential on instruction-following tasks in areas such as marketing and health.

Paper: https://arxiv.org/pdf/2410.10630

Key points:

TPO trains large language models to think before generating a response, with the aim of making responses more accurate.

By refining Chain-of-Thought reasoning, the model learns to optimize and streamline its internal thinking process, which improves response quality.

TPO applies to a variety of fields: not only logical and mathematical tasks, but also areas such as marketing and health.

In short, TPO offers a new way to improve the performance of large language models. It has broad potential applications across multiple fields and merits further research. The paper linked above gives the technical details and experimental results.