This project is a large model tutorial built around open source models, written for beginners in China and based on the Linux platform. It offers end-to-end guidance for a range of open source models, covering environment configuration, local deployment, efficient fine-tuning, and related skills. It simplifies deploying, using, and applying open source models so that more ordinary students and researchers can put them to good use, and so that free, open source models can enter the lives of everyday learners faster.

The main contents of this project include:

The project consists mainly of tutorials that help students and future practitioners understand and get comfortable with how to use ("eat") open source large models. Anyone can open an issue or submit a PR to help build and maintain the project.

Students who want to get more deeply involved can contact us, and we will add you as a project maintainer.

Learning suggestions: Start with environment configuration, then move on to model deployment and use, and finish with fine-tuning. Environment configuration is the foundation, deployment and use build directly on it, and fine-tuning is the advanced step. Beginners are advised to start with models such as Qwen1.5, InternLM2, and MiniCPM.

Note: Students who want to understand how a large model is put together and to implement tasks such as RAG, Agent, and Eval from scratch can study another Datawhale project. Large models are currently a hot topic in deep learning, but most existing tutorials only teach you how to call APIs to build large model applications, and few explain model structure, RAG, Agent, and Eval at the level of underlying principles. That repository therefore implements the RAG, Agent, and Eval tasks entirely by hand instead of calling APIs.

Note: Some students may want to learn the theory of large models before starting this project. Those who want to study the theoretical foundations of LLMs and then understand and apply LLMs on that basis can refer to Datawhale's so-large-llm course.

Note: Students who want to develop their own large model applications after finishing this course can refer to Datawhale's hands-on large model application development course, a tutorial aimed at beginners. Using an Alibaba Cloud server and a personal knowledge base assistant project, it walks students through the complete large model application development process.

What is a large model?

In the narrow sense, a large model (LLM) is a natural language processing (NLP) model trained with deep learning algorithms, used mainly for natural language understanding and generation. In the broad sense, the term also covers large computer vision (CV) models, multimodal large models, and large models for scientific computing.

The "battle of a hundred models" is in full swing, and new open source LLMs keep appearing. Many excellent open source LLMs now exist both abroad, such as LLaMA and Alpaca, and in China, such as ChatGLM, BaiChuan, and InternLM. Open source LLMs can be deployed locally and fine-tuned on private-domain data, so anyone can build their own unique large model on top of one.

However, ordinary students and users need a certain level of technical skill to deploy and use these large models, and with new open source LLMs appearing one after another, quickly learning how to apply each of them is a real challenge.

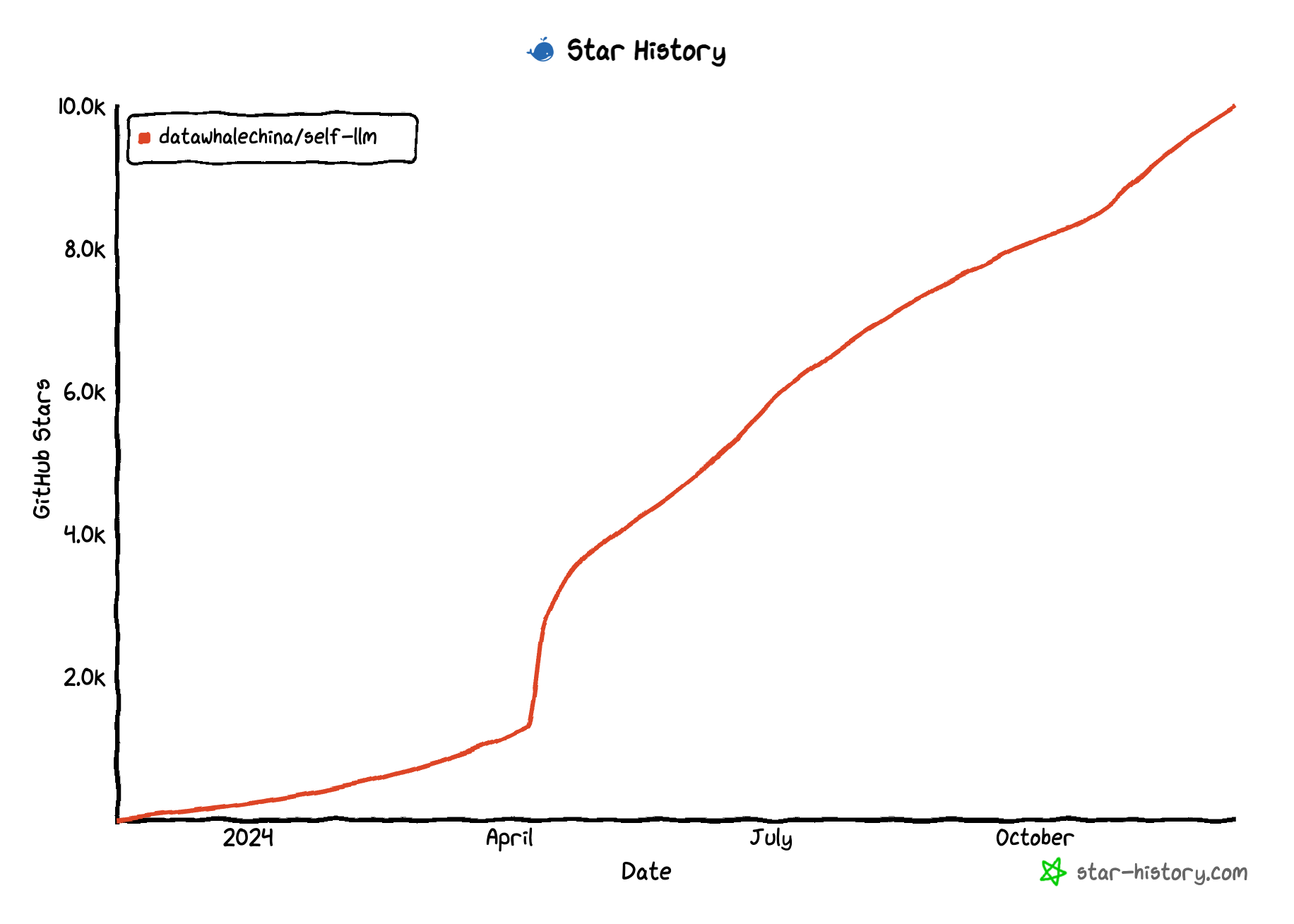

Drawing on the experience of its core contributors, this project first aims to provide deployment, usage, and fine-tuning tutorials for mainstream open source LLMs from China and abroad. Once the mainstream LLMs are covered, we hope to bring together co-creators to enrich this world of open source LLMs and build an ever more comprehensive set of tutorials for specialized LLMs. Scattered sparks, converging into a sea.

We hope to be a ladder between LLMs and the general public, embracing the grander and vaster world of LLMs in the open source spirit of freedom and equality.

This project is suitable for the following learners:

This project plans to cover the entire open source LLM application workflow, including environment configuration and usage, deployment and application, and fine-tuning. Each part covers both mainstream and distinctive open source LLMs:

Chat-Huanhuan: Chat-Huanhuan is a chat language model that imitates Zhen Huan's tone of speech. It was built by fine-tuning an LLM on all of Zhen Huan's lines and dialogue from the script of "The Legend of Zhen Huan".

Tianji: Tianji is an application for social scenarios built around interpersonal etiquette and worldly wisdom. It covers the entire process of prompt engineering, agent building, data acquisition and model fine-tuning, and RAG data cleaning and use.
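To make the Chat-Huanhuan data step more concrete, here is a minimal sketch of turning script dialogue into instruction-tuning records. It assumes the script has already been parsed into (speaker, line) turns and uses an Alpaca-style `instruction`/`input`/`output` layout; the `Turn` class, the `build_records` function, and the sample lines are hypothetical illustrations, not the project's actual code or data.

```python
import json
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # character name as written in the script (hypothetical parse)
    text: str     # the spoken line


def build_records(turns: list[Turn], target: str = "Zhen Huan") -> list[dict]:
    """Pair each line spoken by `target` with the line immediately before it.

    The preceding line becomes the instruction and the target's reply becomes
    the output, giving Alpaca-style {"instruction", "input", "output"} records.
    """
    records = []
    for prev, cur in zip(turns, turns[1:]):
        # Keep only turns where the target answers another character.
        if cur.speaker == target and prev.speaker != target:
            records.append({"instruction": prev.text, "input": "", "output": cur.text})
    return records


if __name__ == "__main__":
    # Invented placeholder dialogue, not real lines from the script.
    script = [
        Turn("Maid", "Miss, everyone else hopes to be chosen."),
        Turn("Zhen Huan", "Hush, a wish spoken aloud will not come true."),
        Turn("Maid", "Then I will say no more."),
    ]
    for rec in build_records(script):
        print(json.dumps(rec, ensure_ascii=False))
```

Pairing only "other speaker, then target" turns is one simple design choice; consecutive lines by the target are skipped rather than merged.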

Qwen2.5-Coder

Qwen2-vl

Qwen2.5

Apple OpenELM

Llama3_1-8B-Instruct

Gemma-2-9b-it

Yuan2.0

Yuan2.0-M32

DeepSeek-Coder-V2

Bilibili Index-1.9B

Qwen2

GLM-4

Qwen 1.5

Google - Gemma

phi-3

CharacterGLM-6B

LLaMA3-8B-Instruct

XVERSE-7B-Chat

TransNormerLLM

BlueLM (vivo)

InternLM2

DeepSeek

MiniCPM

Qwen-Audio

Qwen

Yi (01.AI)

Baichuan Intelligent

InternLM

Atom (llama2)

ChatGLM3

Switching pip and conda to mirror sources @No Scallion, Ginger, or Garlic

Opening ports on AutoDL @No Scallion, Ginger, or Garlic

Model download
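As a rough illustration of the mirror-source step, the sketch below renders a pip config file and a minimal `.condarc` that point at a package mirror. The Tsinghua TUNA URLs are an assumption (any PyPI or Anaconda mirror works the same way), and the helper names are hypothetical; the script only prints the files so it never overwrites an existing configuration.

```python
import configparser
import io

# Assumption: Tsinghua TUNA mirrors; substitute whichever mirror you prefer.
PIP_INDEX_URL = "https://pypi.tuna.tsinghua.edu.cn/simple"
CONDA_MIRROR = "https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs"


def render_pip_conf(index_url: str = PIP_INDEX_URL) -> str:
    """Return pip.conf text whose [global] section points pip at `index_url`."""
    cfg = configparser.ConfigParser()
    cfg["global"] = {"index-url": index_url}
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


def render_condarc(base: str = CONDA_MIRROR) -> str:
    """Return minimal .condarc text that redirects conda's default channels to `base`."""
    lines = ["channels:", "  - defaults", "show_channel_urls: true", "default_channels:"]
    lines += [f"  - {base}/{sub}" for sub in ("main", "r", "msys2")]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print instead of writing, so existing configs are never overwritten by accident.
    print("# save as ~/.config/pip/pip.conf")
    print(render_pip_conf())
    print("# save as ~/.condarc")
    print(render_condarc())
```

For pip alone, running `pip config set global.index-url <mirror-url>` writes the same setting without editing files by hand.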

Issue && PR

Note: Contributors are listed in order of contribution.