semantic segmentation

v0.2.6

易於使用和可自定義的SOTA語義分割模型,並在Pytorch中具有豐富的數據集

自2022年以來,已經發生了很多變化,如今甚至有開放世界的細分模型(任何細分)。但是,傳統的細分模型仍然需要高精度和自定義用例。此存儲庫將根據新的Pytorch版本,更新的模型以及如何與自定義數據集一起使用的文檔進行更新。

預期發布日期 - > 2024年5月

計劃的功能:

當前要丟棄的功能:

支持的骨幹:

支持的頭/方法:

支持的獨立模型:

支持的模塊:

請參閱基準和可用預訓練模型的模型。

並檢查骨架是否有支撐的骨架。

注意:大多數方法沒有預訓練的模型。在一個存儲庫中將不同的模型與預先訓練的權重相結合和有限的資源以重新培訓自己非常困難。

場景解析:

人解析:

面對解析:

其他的:

有關更多詳細信息和數據集準備,請參閱數據集。

在此處檢查筆記本以測試增強效果。

像素級變換:

空間級別的變換:

然後,克隆回購,並使用以下方式安裝項目

$ git clone https://github.com/sithu31296/semantic-segmentation

$ cd semantic-segmentation

$ pip install -e .在configs中創建一個配置文件。可以在此處找到ADE20K數據集的示例配置。然後編輯您認為是否需要的字段。所有培訓,評估和預測腳本都需要此配置文件。

用單個GPU訓練:

$ python tools/train.py --cfg configs/CONFIG_FILE.yaml要使用多個GPU訓練,請將配置文件中的DDP字段設置為true ,然後運行如下:

$ python -m torch.distributed.launch --nproc_per_node=2 --use_env tools/train.py --cfg configs/ < CONFIG_FILE_NAME > .yaml確保將配置文件的MODEL_PATH設置為訓練有素的模型目錄。

$ python tools/val.py --cfg configs/ < CONFIG_FILE_NAME > .yaml要評估多尺度和翻轉,請將MSF中的ENABLE字段更改為true ,並運行與上述相同的命令。

要進行推斷,請從下面編輯配置文件的參數。

MODEL >> NAME和BACKBONE更改為您所需的預處理模型。DATASET >> NAME更改為數據集名稱。TEST >> MODEL_PATH設置為測試模型的預處理重量。TEST >> FILE更改為您要測試的文件或圖像文件夾路徑。SAVE_DIR中。 # # example using ade20k pretrained models

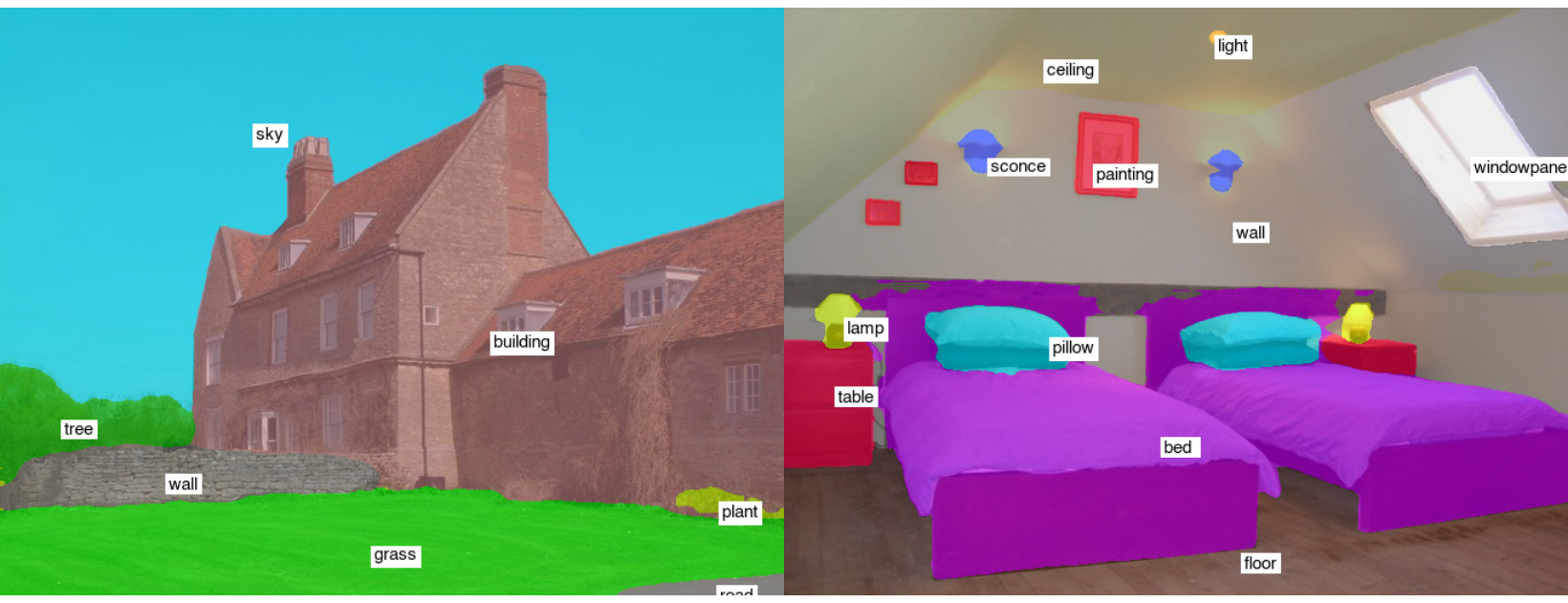

$ python tools/infer.py --cfg configs/ade20k.yaml示例測試結果(segformer-b2):

要轉換為ONNX和Coreml,請運行:

$ python tools/export.py --cfg configs/ < CONFIG_FILE_NAME > .yaml要轉換為OpenVino和Tflite,請參見Torch_optimize。

# # ONNX Inference

$ python scripts/onnx_infer.py --model < ONNX_MODEL_PATH > --img-path < TEST_IMAGE_PATH >

# # OpenVINO Inference

$ python scripts/openvino_infer.py --model < OpenVINO_MODEL_PATH > --img-path < TEST_IMAGE_PATH >

# # TFLite Inference

$ python scripts/tflite_infer.py --model < TFLite_MODEL_PATH > --img-path < TEST_IMAGE_PATH > @article{xie2021segformer,

title={SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers},

author={Xie, Enze and Wang, Wenhai and Yu, Zhiding and Anandkumar, Anima and Alvarez, Jose M and Luo, Ping},

journal={arXiv preprint arXiv:2105.15203},

year={2021}

}

@misc{xiao2018unified,

title={Unified Perceptual Parsing for Scene Understanding},

author={Tete Xiao and Yingcheng Liu and Bolei Zhou and Yuning Jiang and Jian Sun},

year={2018},

eprint={1807.10221},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@article{hong2021deep,

title={Deep Dual-resolution Networks for Real-time and Accurate Semantic Segmentation of Road Scenes},

author={Hong, Yuanduo and Pan, Huihui and Sun, Weichao and Jia, Yisong},

journal={arXiv preprint arXiv:2101.06085},

year={2021}

}

@misc{zhang2021rest,

title={ResT: An Efficient Transformer for Visual Recognition},

author={Qinglong Zhang and Yubin Yang},

year={2021},

eprint={2105.13677},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{huang2021fapn,

title={FaPN: Feature-aligned Pyramid Network for Dense Image Prediction},

author={Shihua Huang and Zhichao Lu and Ran Cheng and Cheng He},

year={2021},

eprint={2108.07058},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{wang2021pvtv2,

title={PVTv2: Improved Baselines with Pyramid Vision Transformer},

author={Wenhai Wang and Enze Xie and Xiang Li and Deng-Ping Fan and Kaitao Song and Ding Liang and Tong Lu and Ping Luo and Ling Shao},

year={2021},

eprint={2106.13797},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@article{Liu2021PSA,

title={Polarized Self-Attention: Towards High-quality Pixel-wise Regression},

author={Huajun Liu and Fuqiang Liu and Xinyi Fan and Dong Huang},

journal={Arxiv Pre-Print arXiv:2107.00782 },

year={2021}

}

@misc{chao2019hardnet,

title={HarDNet: A Low Memory Traffic Network},

author={Ping Chao and Chao-Yang Kao and Yu-Shan Ruan and Chien-Hsiang Huang and Youn-Long Lin},

year={2019},

eprint={1909.00948},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{sfnet,

title={Semantic Flow for Fast and Accurate Scene Parsing},

author={Li, Xiangtai and You, Ansheng and Zhu, Zhen and Zhao, Houlong and Yang, Maoke and Yang, Kuiyuan and Tong, Yunhai},

booktitle={ECCV},

year={2020}

}

@article{Li2020SRNet,

title={Towards Efficient Scene Understanding via Squeeze Reasoning},

author={Xiangtai Li and Xia Li and Ansheng You and Li Zhang and Guang-Liang Cheng and Kuiyuan Yang and Y. Tong and Zhouchen Lin},

journal={ArXiv},

year={2020},

volume={abs/2011.03308}

}

@ARTICLE{Yucondnet21,

author={Yu, Changqian and Shao, Yuanjie and Gao, Changxin and Sang, Nong},

journal={IEEE Signal Processing Letters},

title={CondNet: Conditional Classifier for Scene Segmentation},

year={2021},

volume={28},

number={},

pages={758-762},

doi={10.1109/LSP.2021.3070472}

}

@misc{yan2022lawin,

title={Lawin Transformer: Improving Semantic Segmentation Transformer with Multi-Scale Representations via Large Window Attention},

author={Haotian Yan and Chuang Zhang and Ming Wu},

year={2022},

eprint={2201.01615},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{yu2021metaformer,

title={MetaFormer is Actually What You Need for Vision},

author={Weihao Yu and Mi Luo and Pan Zhou and Chenyang Si and Yichen Zhou and Xinchao Wang and Jiashi Feng and Shuicheng Yan},

year={2021},

eprint={2111.11418},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{wightman2021resnet,

title={ResNet strikes back: An improved training procedure in timm},

author={Ross Wightman and Hugo Touvron and Hervé Jégou},

year={2021},

eprint={2110.00476},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{liu2022convnet,

title={A ConvNet for the 2020s},

author={Zhuang Liu and Hanzi Mao and Chao-Yuan Wu and Christoph Feichtenhofer and Trevor Darrell and Saining Xie},

year={2022},

eprint={2201.03545},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{li2022uniformer,

title={UniFormer: Unifying Convolution and Self-attention for Visual Recognition},

author={Kunchang Li and Yali Wang and Junhao Zhang and Peng Gao and Guanglu Song and Yu Liu and Hongsheng Li and Yu Qiao},

year={2022},

eprint={2201.09450},

archivePrefix={arXiv},

primaryClass={cs.CV}

}