tgn

1.0.0

| 動態圖 | TGN |

|---|---|

|  |

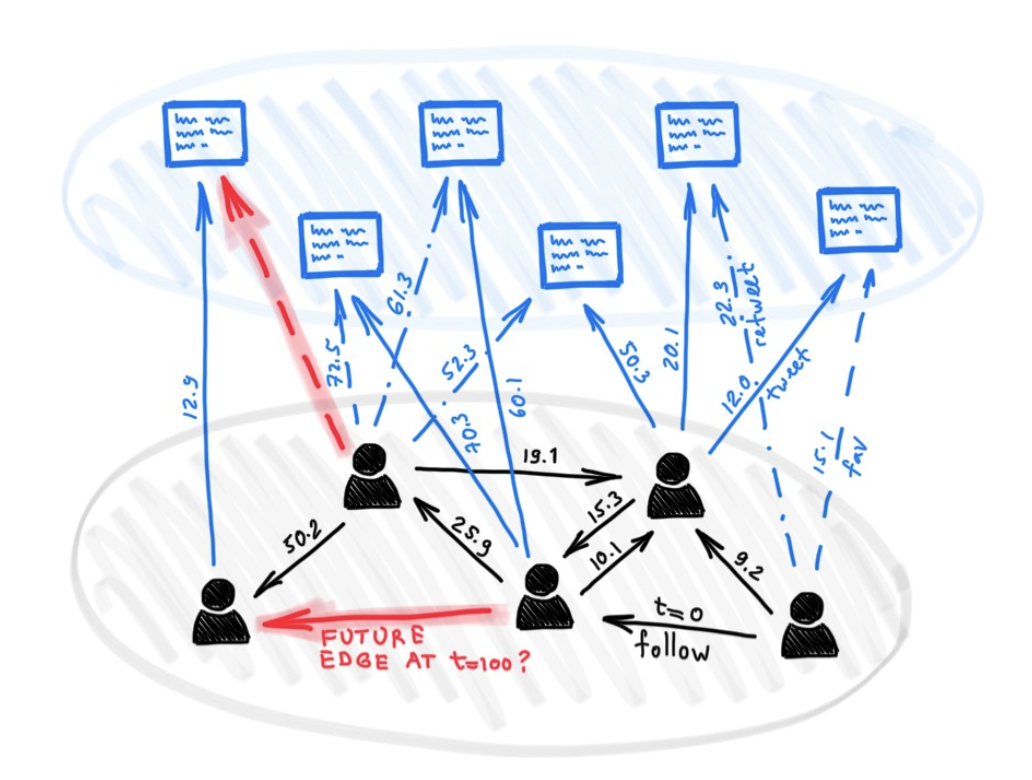

儘管有很多用於圖形深度學習的不同模型,但到目前為止,很少有人提出用於處理某種動態性質的圖表(例如隨著時間的推移而發展的特徵或連接性)。

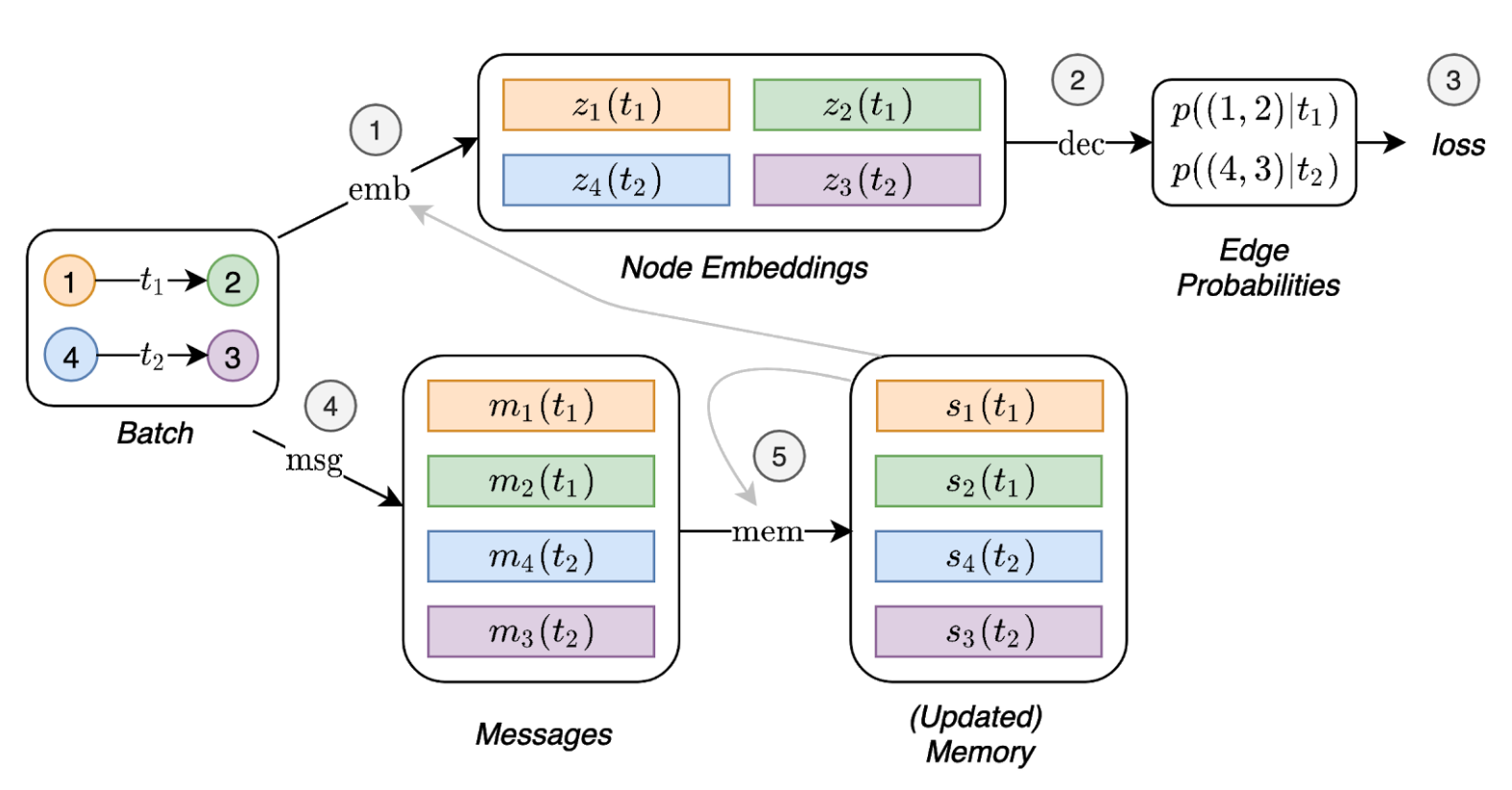

在本文中,我們提出了時間圖網絡(TGNS),這是一個通用,有效的框架,用於在動態圖上進行深度學習,表示為定時事件的序列。得益於內存模塊和基於圖的運算符的新型組合,TGN能夠顯著優於以前的方法,同時更有效地計算了。

我們進一步表明,在動態圖上學習的幾種以前的學習模型可以作為我們框架的特定實例施放。我們對框架的不同組成部分進行了詳細的消融研究,並設計了最佳的配置,該配置可以在動態圖的幾個跨性和歸納性預測任務上實現最新性能。

依賴項(帶Python> = 3.7):

pandas==1.1.0

torch==1.6.0

scikit_learn==0.23.1

從此處下載示例數據集(例如Wikipedia和Reddit),然後將其CSV文件存儲在名為data/文件夾中。

我們使用密集的npy格式以二進制格式保存功能。如果不存在邊緣功能或節點特徵,則將被零的向量取代。

python utils/preprocess_data.py --data wikipedia --bipartite

python utils/preprocess_data.py --data reddit --bipartite

使用鏈接預測任務的自我監督學習:

# TGN-attn: Supervised learning on the wikipedia dataset

python train_self_supervised.py --use_memory --prefix tgn-attn --n_runs 10

# TGN-attn-reddit: Supervised learning on the reddit dataset

python train_self_supervised.py -d reddit --use_memory --prefix tgn-attn-reddit --n_runs 10

對動態節點分類的監督學習(這需要從自我監督任務中進行訓練的模型,例如運行上面的命令):

# TGN-attn: self-supervised learning on the wikipedia dataset

python train_supervised.py --use_memory --prefix tgn-attn --n_runs 10

# TGN-attn-reddit: self-supervised learning on the reddit dataset

python train_supervised.py -d reddit --use_memory --prefix tgn-attn-reddit --n_runs 10

### Wikipedia Self-supervised

# Jodie

python train_self_supervised.py --use_memory --memory_updater rnn --embedding_module time --prefix jodie_rnn --n_runs 10

# DyRep

python train_self_supervised.py --use_memory --memory_updater rnn --dyrep --use_destination_embedding_in_message --prefix dyrep_rnn --n_runs 10

### Reddit Self-supervised

# Jodie

python train_self_supervised.py -d reddit --use_memory --memory_updater rnn --embedding_module time --prefix jodie_rnn_reddit --n_runs 10

# DyRep

python train_self_supervised.py -d reddit --use_memory --memory_updater rnn --dyrep --use_destination_embedding_in_message --prefix dyrep_rnn_reddit --n_runs 10

### Wikipedia Supervised

# Jodie

python train_supervised.py --use_memory --memory_updater rnn --embedding_module time --prefix jodie_rnn --n_runs 10

# DyRep

python train_supervised.py --use_memory --memory_updater rnn --dyrep --use_destination_embedding_in_message --prefix dyrep_rnn --n_runs 10

### Reddit Supervised

# Jodie

python train_supervised.py -d reddit --use_memory --memory_updater rnn --embedding_module time --prefix jodie_rnn_reddit --n_runs 10

# DyRep

python train_supervised.py -d reddit --use_memory --memory_updater rnn --dyrep --use_destination_embedding_in_message --prefix dyrep_rnn_reddit --n_runs 10

命令在不同模塊的消融研究中復制所有結果:

# TGN-2l

python train_self_supervised.py --use_memory --n_layer 2 --prefix tgn-2l --n_runs 10

# TGN-no-mem

python train_self_supervised.py --prefix tgn-no-mem --n_runs 10

# TGN-time

python train_self_supervised.py --use_memory --embedding_module time --prefix tgn-time --n_runs 10

# TGN-id

python train_self_supervised.py --use_memory --embedding_module identity --prefix tgn-id --n_runs 10

# TGN-sum

python train_self_supervised.py --use_memory --embedding_module graph_sum --prefix tgn-sum --n_runs 10

# TGN-mean

python train_self_supervised.py --use_memory --aggregator mean --prefix tgn-mean --n_runs 10

optional arguments:

-d DATA, --data DATA Data sources to use (wikipedia or reddit)

--bs BS Batch size

--prefix PREFIX Prefix to name checkpoints and results

--n_degree N_DEGREE Number of neighbors to sample at each layer

--n_head N_HEAD Number of heads used in the attention layer

--n_epoch N_EPOCH Number of epochs

--n_layer N_LAYER Number of graph attention layers

--lr LR Learning rate

--patience Patience of the early stopping strategy

--n_runs Number of runs (compute mean and std of results)

--drop_out DROP_OUT Dropout probability

--gpu GPU Idx for the gpu to use

--node_dim NODE_DIM Dimensions of the node embedding

--time_dim TIME_DIM Dimensions of the time embedding

--use_memory Whether to use a memory for the nodes

--embedding_module Type of the embedding module

--message_function Type of the message function

--memory_updater Type of the memory updater

--aggregator Type of the message aggregator

--memory_update_at_the_end Whether to update the memory at the end or at the start of the batch

--message_dim Dimension of the messages

--memory_dim Dimension of the memory

--backprop_every Number of batches to process before performing backpropagation

--different_new_nodes Whether to use different unseen nodes for validation and testing

--uniform Whether to sample the temporal neighbors uniformly (or instead take the most recent ones)

--randomize_features Whether to randomize node features

--dyrep Whether to run the model as DyRep

@inproceedings { tgn_icml_grl2020 ,

title = { Temporal Graph Networks for Deep Learning on Dynamic Graphs } ,

author = { Emanuele Rossi and Ben Chamberlain and Fabrizio Frasca and Davide Eynard and Federico

Monti and Michael Bronstein } ,

booktitle = { ICML 2020 Workshop on Graph Representation Learning } ,

year = { 2020 }

}