Stream responses

1.0.0

This code demonstrates streaming with the GPT-4 API, the ChatGPT API, and InstructGPT (GPT-3.5) models, plus a Streamlit app.

The approach uses only the openai and time libraries and reprints the stream with print(end='', flush=True):

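    !pip install --upgrade openai

    import openai
    import time

    # user_secrets is assumed to be a secrets client created earlier in the notebook
    openai.api_key = user_secrets.get_secret("OPENAI_API_KEY")

    start_time = time.time()

Disclaimer: a drawback of streaming in production is that it is harder to control compliance with the usage policies (https://beta.openai.com/docs/usage-guidelines), which should be reviewed in advance for each application. I therefore suggest reviewing these policies before deciding to use streaming.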
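To try the print(end='', flush=True) pattern in isolation, the following minimal sketch needs no API key. The `fake_stream` and `print_stream` helpers are hypothetical names introduced here for illustration; they are not part of the repository or of the openai library.

```python
import sys
import time


def fake_stream(text, chunk_size=4):
    """Yield `text` in small pieces, standing in for API stream events."""
    for i in range(0, len(text), chunk_size):
        yield text[i:i + chunk_size]


def print_stream(chunks, delay=0.01, out=sys.stdout):
    """Print each chunk as it arrives and return the full text."""
    collected = []
    for chunk in chunks:
        # end='' keeps the chunks on one line; flush=True shows them immediately
        print(chunk, end='', flush=True, file=out)
        collected.append(chunk)
        time.sleep(delay)
    print(file=out)  # finish the line once the stream is exhausted
    return ''.join(collected)


if __name__ == "__main__":
    print_stream(fake_stream("Streaming prints text piece by piece."))
```

Without flush=True, the output may be buffered and appear all at once, which defeats the visual effect of streaming.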

Run the first part of the file stream.ipynb.

### STREAM GPT-4 API RESPONSES

delay_time = 0.01 # faster

max_response_length = 8000

answer = ''

# ASK QUESTION

prompt = input ( "Ask a question: " )

start_time = time . time ()

response = openai . ChatCompletion . create (

# GPT-4 API REQQUEST

model = 'gpt-4' ,

messages = [

{ 'role' : 'user' , 'content' : f' { prompt } ' }

],

max_tokens = max_response_length ,

temperature = 0 ,

stream = True , # this time, we set stream=True

)

for event in response :

# STREAM THE ANSWER

print ( answer , end = '' , flush = True ) # Print the response

# RETRIEVE THE TEXT FROM THE RESPONSE

event_time = time . time () - start_time # CALCULATE TIME DELAY BY THE EVENT

event_text = event [ 'choices' ][ 0 ][ 'delta' ] # EVENT DELTA RESPONSE

answer = event_text . get ( 'content' , '' ) # RETRIEVE CONTENT

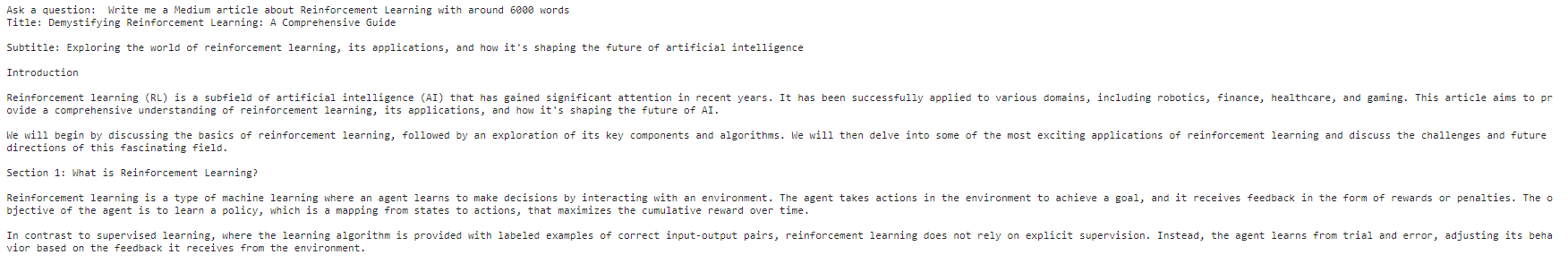

time . sleep ( delay_time )插入用户输入并按Enter后,您应该看到打印的输出:

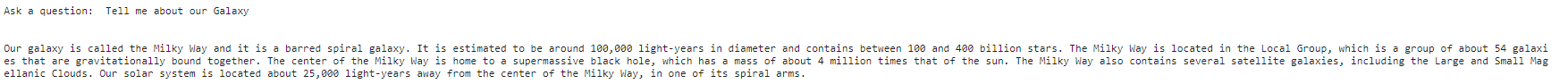
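The loop above reads each event's `delta` with `.get('content', '')` because not every streamed event carries text. The sketch below illustrates this on hand-written events that imitate the shape of the stream; they are an assumption for illustration, not captured API output, and `delta_content` is a hypothetical helper name.

```python
def delta_content(event):
    """Return the text carried by one streamed chat event ('' if none)."""
    delta = event['choices'][0]['delta']
    return delta.get('content', '')


# Hand-written events imitating the stream's shape (not real API output)
mock_events = [
    {'choices': [{'delta': {'role': 'assistant'}, 'finish_reason': None}]},
    {'choices': [{'delta': {'content': 'Hello'}, 'finish_reason': None}]},
    {'choices': [{'delta': {'content': ' world'}, 'finish_reason': None}]},
    {'choices': [{'delta': {}, 'finish_reason': 'stop'}]},
]

# Events without a 'content' key contribute an empty string
answer = ''.join(delta_content(e) for e in mock_events)
print(answer)
```

Indexing `delta['content']` directly would raise a KeyError on events such as the first and last ones in this mock list.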

Run the second part of the file stream.ipynb. Enter your input, and you should see output similar to the following:

    ### STREAM CHATGPT API RESPONSES
    delay_time = 0.01  # faster
    max_response_length = 200
    answer = ''

    # ASK QUESTION
    prompt = input("Ask a question: ")
    start_time = time.time()

    response = openai.ChatCompletion.create(
        # CHATGPT API REQUEST
        model='gpt-3.5-turbo',
        messages=[
            {'role': 'user', 'content': f'{prompt}'}
        ],
        max_tokens=max_response_length,
        temperature=0,
        stream=True,  # this time, we set stream=True
    )

    for event in response:
        # RETRIEVE THE TEXT FROM THE RESPONSE
        event_time = time.time() - start_time  # CALCULATE TIME DELAY BY THE EVENT
        event_text = event['choices'][0]['delta']  # EVENT DELTA RESPONSE
        answer = event_text.get('content', '')  # RETRIEVE CONTENT
        # STREAM THE ANSWER
        print(answer, end='', flush=True)  # Print the response
        time.sleep(delay_time)

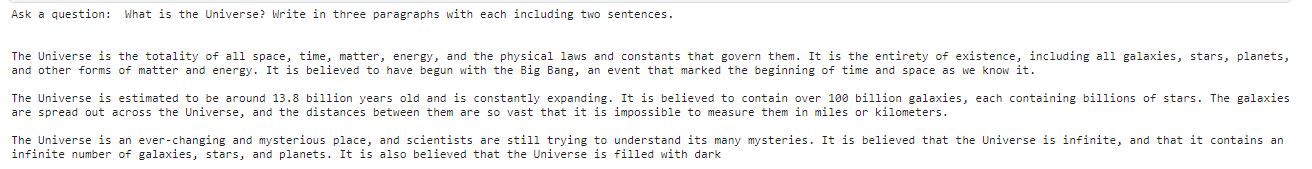
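The loops above compute `event_time` for every event but never use it. One possible use, sketched here under the assumption that you want to know how quickly the first text arrives, is to record the elapsed time alongside each chunk. `timed_chunks` and `first_text_delay` are hypothetical helper names, and the `clock` parameter exists only so the timing can be swapped out.

```python
import time


def timed_chunks(chunks, clock=time.time):
    """Yield (seconds_since_start, chunk) for each chunk of an iterable."""
    start = clock()
    for chunk in chunks:
        yield clock() - start, chunk


def first_text_delay(timed):
    """Return the elapsed time of the first non-empty chunk, or None."""
    for elapsed, chunk in timed:
        if chunk:
            return elapsed
    return None


if __name__ == "__main__":
    # Stand-in chunks; with the API these would be the extracted event texts
    timings = list(timed_chunks(['', 'Hi', ' there']))
    print(first_text_delay(timings))
```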

Run the third part of the file stream.ipynb. Enter your input, and you should see output similar to the following:

    collected_events = []
    completion_text = ''  # a string, so that += appends text
    speed = 0.05  # smaller is faster
    max_response_length = 200
    start_time = time.time()

    prompt = input("Ask a question: ")

    # Generate Answer
    response = openai.Completion.create(
        model='text-davinci-003',
        prompt=prompt,
        max_tokens=max_response_length,
        temperature=0,
        stream=True,  # this time, we set stream=True
    )

    # Stream Answer
    for event in response:
        event_time = time.time() - start_time  # calculate the time delay of the event
        collected_events.append(event)  # save the event response
        event_text = event['choices'][0]['text']  # extract the text
        completion_text += event_text  # append the text
        time.sleep(speed)
        print(f"{event_text}", end="", flush=True)

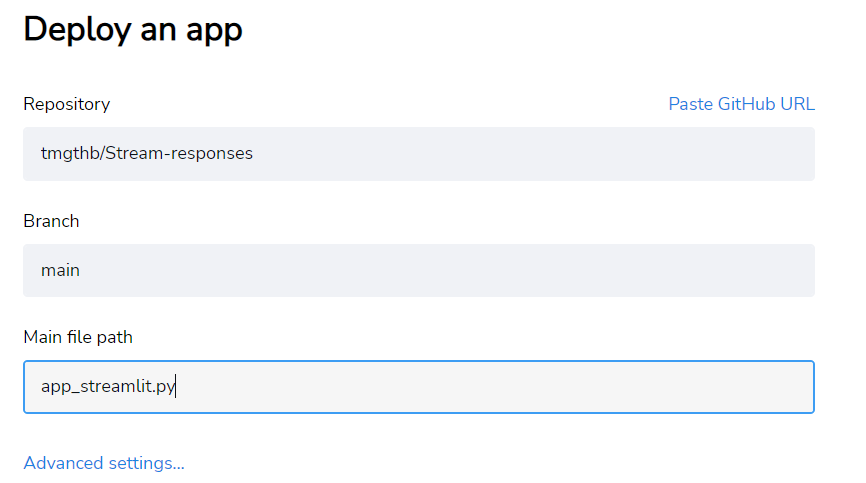
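The three parts differ mainly in where each event keeps its text: the chat examples read `delta` and the completion example reads `text`. As a rough sketch, a single helper could cover both shapes. `extract_text` is a hypothetical name, and the sample events are hand-written for illustration rather than real API output.

```python
def extract_text(event):
    """Return the text of a streamed event in either chat or completion shape."""
    choice = event['choices'][0]
    if 'delta' in choice:
        # Chat-style event: text lives under delta['content'], which may be absent
        return choice['delta'].get('content', '')
    # Completion-style event: text lives directly under 'text'
    return choice.get('text', '')


# Hand-written sample events (assumed shapes, not real API output)
chat_event = {'choices': [{'delta': {'content': 'Hi'}}]}
completion_event = {'choices': [{'text': ' there'}]}

print(extract_text(chat_event) + extract_text(completion_event))
```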

I added a working "app_streamlit.py" file, which you can fork into your own repository together with "requirements.txt" and then deploy on Streamlit.

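In the Advanced settings, add the OPENAI_API_KEY environment variable in this format:

    OPENAI_API_KEY = "INSERT HERE YOUR KEY"

Feel free to fork the repository and improve the code further in line with the license. For example, you could extend the ChatML so that the stream follows the desired "system" rules. I have left these empty for now to keep this basic script as generic as possible. I suggest reading my Medium articles on the ChatGPT API to better understand streaming responses, ChatML with its system, assistant and user roles, and the ChatGPT API introduction tutorial.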
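As one possible starting point for the "system" rules mentioned above, the sketch below builds a `messages` list with an optional system message ahead of the user prompt. `build_messages` is a hypothetical helper, not part of the repository, and the example system prompt is only a placeholder.

```python
def build_messages(user_prompt, system_prompt=None, history=None):
    """Build a chat `messages` list: optional system rule, history, then the user prompt."""
    messages = []
    if system_prompt:
        # The system message states the rules the assistant should follow
        messages.append({'role': 'system', 'content': system_prompt})
    # Earlier user/assistant turns, if any, keep their original order
    messages.extend(history or [])
    messages.append({'role': 'user', 'content': user_prompt})
    return messages


if __name__ == "__main__":
    print(build_messages("What is streaming?", system_prompt="Answer in one sentence."))
```

The returned list can be passed as the `messages` argument of the chat requests shown earlier in place of the single user message.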