As artificial intelligence advances rapidly, how to evaluate and compare the strength of different generative AI models has become a pressing question. Traditional AI benchmarks are increasingly showing their limitations, and AI developers are exploring more creative ways to evaluate models.

Recently, a website called "Minecraft Benchmark" (MC-Bench) emerged. What sets it apart is that it uses Microsoft's sandbox building game "Minecraft" as a platform: users evaluate AI models by comparing what those models build in response to the same prompts. Surprisingly, the creator of this novel platform, Adi Singh, is a 12th-grade student.

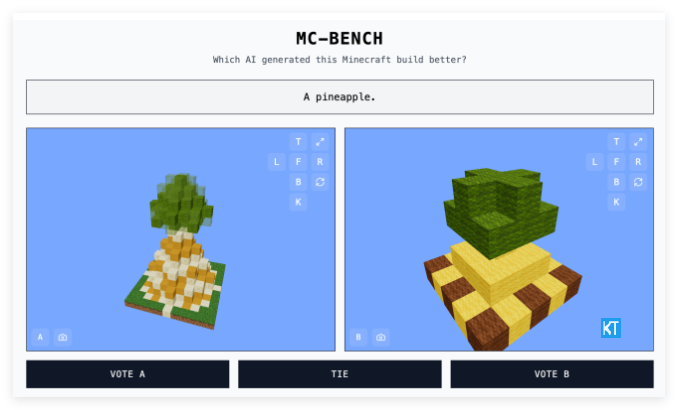

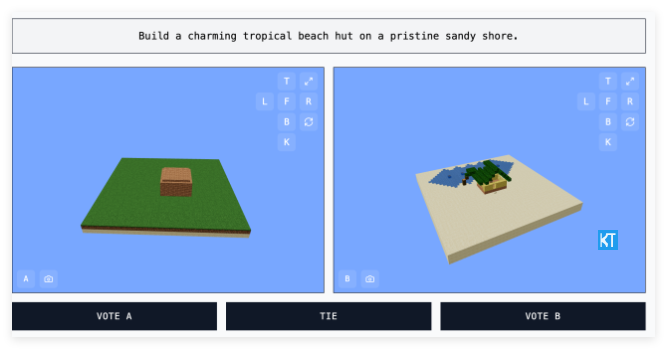

The MC-Bench website provides an intuitive and entertaining way to evaluate AI models. Developers feed prompts to the participating AI models, and each model generates a corresponding Minecraft build. Users then vote for the build they think fits the prompt better, without knowing which model produced which build. Only after voting do users see the "creator" behind each build. This blind voting mechanism is designed to reflect the models' actual generation ability more objectively.
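The blind voting flow described above can be sketched roughly as follows. This is a minimal illustration, not MC-Bench's actual implementation: the `Build` class, the function names, and the model names `model-x` and `model-y` are all hypothetical, and a simple win counter stands in for whatever ranking method the site really uses.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Build:
    model: str     # hidden from the voter until the vote is cast
    build_id: str  # hypothetical identifier for the rendered structure


def present_blind(build_a, build_b, rng):
    """Return the two builds in random order so position does not hint at the model."""
    pair = [build_a, build_b]
    rng.shuffle(pair)
    return pair


def cast_vote(pair, chosen_index, wins):
    """Record the vote first, then reveal which model made each build."""
    winner = pair[chosen_index]
    wins[winner.model] += 1
    return {"left": pair[0].model, "right": pair[1].model, "winner": winner.model}


rng = random.Random(0)  # seeded only so the sketch is repeatable
wins = Counter()        # assumed tally: number of votes won per model

pair = present_blind(Build("model-x", "b1"), Build("model-y", "b2"), rng)
reveal = cast_vote(pair, chosen_index=0, wins=wins)  # voter picked the left build
print(reveal)
```

The key design point is the ordering: the model names are only returned by `cast_vote`, after the choice has been recorded, so the voter's judgment cannot be influenced by brand.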

Adi Singh said Minecraft was chosen as the benchmarking platform not only because of the game's popularity (it is the best-selling video game in history). More importantly, the game is so widely known and its visual style so familiar that even people who have never played it can easily tell which blocky rendition of a pineapple looks better. He believes that "Minecraft makes it easier for people to see the progress of [AI development]", and that this kind of visual assessment is more convincing than a purely textual metric.

MC-Bench currently focuses on relatively simple building tasks: AI models are asked to write code that creates an in-game structure from prompts such as "Frosty the Snowman" or "a charming tropical beach hut on a pristine sandy shore". This is essentially a programming benchmark, but the clever part is that users do not need to read any code. They can judge the quality of a build from how it looks, which greatly widens the pool of potential participants and the amount of data the project can collect.
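To make "writing code that creates a structure" concrete, here is a toy sketch of the kind of program a model might produce for a very plain hut. The article does not describe the interface MC-Bench gives to models, so everything here is an assumption: the `hollow_box` helper, the `(x, y, z)` coordinate scheme, and the `oak_planks` block name are purely illustrative.

```python
def hollow_box(width, height, depth, block="oak_planks"):
    """Return a mapping of (x, y, z) -> block name for the outer shell of a box.

    Interior cells are left empty, giving walls, a floor and a ceiling.
    """
    placements = {}
    for x in range(width):
        for y in range(height):
            for z in range(depth):
                on_shell = (
                    x in (0, width - 1)
                    or y in (0, height - 1)
                    or z in (0, depth - 1)
                )
                if on_shell:
                    placements[(x, y, z)] = block
    return placements


hut = hollow_box(4, 3, 4)
print(len(hut), "blocks placed")
```

A voter never sees code like this. They only see the rendered result, which is why the benchmark is accessible to people who cannot program.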

The design philosophy of MC-Bench is to give the public a more intuitive sense of how far AI technology has come. "The current rankings are very consistent with my personal experience with these models, which is different from many plain text benchmarks," Singh said. He believes MC-Bench could serve as a useful signal for companies, helping them judge whether their AI research and development is heading in the right direction.

Although MC-Bench was started by Adi Singh, a group of volunteer contributors also works on it. Notably, several leading AI companies, including Anthropic, Google, OpenAI and Alibaba, have subsidized the project's use of their products to run the benchmark. However, MC-Bench's website states that these companies are not otherwise affiliated with the project.

Singh is also optimistic about the future of MC-Bench. He said the simple builds it runs today are only a starting point, and that the benchmark may expand to longer-horizon planning and goal-oriented tasks. He believes games may serve as a safe, controllable medium for testing AI's "agentic reasoning" capabilities, something that is hard to do in real life, which makes games better suited to this kind of testing.

Besides Minecraft, other games such as Street Fighter and Pictionary have also been used as experimental AI benchmarks, partly because benchmarking AI is notoriously tricky. Standardized evaluations often give models a "home-field advantage": models are optimized during training for certain types of problems, especially those that require rote memorization or basic inference. For example, OpenAI's GPT-4 scored in the 88th percentile on the LSAT, yet could not tell how many "R"s there are in the word "strawberry".

Anthropic's Claude 3.7 Sonnet achieved 62.3% accuracy on a standardized software engineering benchmark, yet plays Pokémon worse than most five-year-olds.

MC-Bench offers a novel, easier-to-understand perspective on the abilities of generative AI models. By building on a well-known game, it turns complex technical capabilities into intuitive visual comparisons, letting more people take part in evaluating and understanding AI. Although the practical value of this kind of evaluation is still under discussion, it does open a new window onto the development of AI.

Project link: https://top.aibase.com/tool/mc-bench