In video generation, OpenAI's Sora has long been regarded as the industry benchmark, known for both its high training cost and its excellent performance. Luchen Technology (HPC-AI Tech) recently open-sourced its video generation model Open-Sora 2.0, causing a stir in the industry. With its very low training cost and performance close to that of top models, Open-Sora 2.0 quickly became a new focal point in video generation.

Open-Sora 2.0 cost only about US$200,000 to train, corresponding to a run on 224 GPUs, yet it produced a commercial-grade video generation model with 11 billion parameters. The result shows what careful engineering can achieve on a modest budget and opens new possibilities for the video generation field.

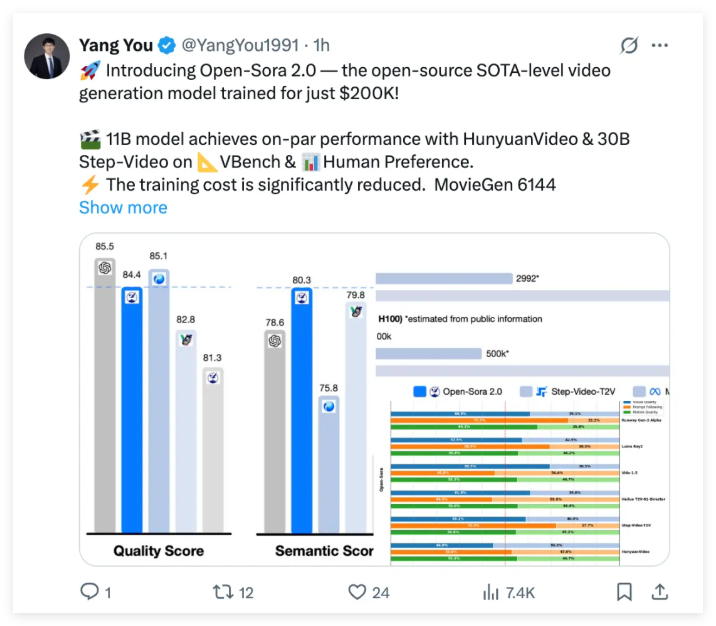

Although Open-Sora 2.0 cost far less to train than OpenAI's Sora, its performance comes close. It performs strongly on the widely used VBench benchmark and in user preference tests, and on several key metrics it competes with closed-source models that cost millions of dollars to train. On VBench in particular, the performance gap between Open-Sora and OpenAI Sora has narrowed from 4.52% previously to just 0.69%, nearly closing it.

Open-Sora 2.0 also scores higher than Tencent's HunyuanVideo on VBench, which sets a new bar for open-source video generation.

In user preference evaluations across three key dimensions (visual quality, text consistency, and motion quality), Open-Sora 2.0 outperforms both the open-source SOTA model HunyuanVideo and the commercial model Runway Gen-3 Alpha on at least two of the three. This further strengthens Open-Sora 2.0's position in the video generation field.

Open-Sora 2.0 reaches this level of performance at such a low cost thanks to a series of technical innovations and optimizations. First, it continues the design of Open-Sora 1.2: it uses a 3D autoencoder and a Flow Matching training framework, and it introduces a 3D full-attention mechanism to further improve the quality of generated video.
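
To make two of these ingredients more concrete, here is a minimal NumPy sketch, not Open-Sora's actual code. `full_3d_attention` flattens a (T, H, W, C) latent into a single sequence of T·H·W tokens so every position attends to every other position across time and space, and `flow_matching_loss` uses the rectified-flow form of Flow Matching: interpolate linearly between a clean latent and noise, then regress the model output onto the constant velocity between them. The function names, the toy latent shape, the random projection weights, and the interpolation direction are all assumptions for illustration; the real model is a large diffusion transformer with multi-head attention, text conditioning, and much more.

```python
import numpy as np


def full_3d_attention(latent, w_q, w_k, w_v):
    """Single-head self-attention over every (t, h, w) position of a video latent."""
    T, H, W, C = latent.shape
    tokens = latent.reshape(T * H * W, C)           # flatten time and space into one sequence
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])         # (N, N) scores with N = T*H*W
    scores -= scores.max(axis=-1, keepdims=True)    # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over all N positions
    return (weights @ v).reshape(T, H, W, -1)


def flow_matching_loss(x0, velocity_fn, rng):
    """Rectified-flow style objective: x_t = (1 - t) * x0 + t * noise,
    and the model is trained to predict the velocity (noise - x0)."""
    noise = rng.standard_normal(x0.shape)
    t = rng.uniform()                               # one random time in [0, 1) per call
    x_t = (1.0 - t) * x0 + t * noise
    target_velocity = noise - x0
    pred = velocity_fn(x_t, t)
    return float(np.mean((pred - target_velocity) ** 2))


rng = np.random.default_rng(0)
T, H, W, C = 4, 6, 6, 8                             # toy latent size (hypothetical)
w_q, w_k, w_v = (rng.standard_normal((C, C)) * 0.1 for _ in range(3))
x0 = rng.standard_normal((T, H, W, C))              # stands in for a clean autoencoder latent


def toy_velocity_model(x_t, t):
    # Hypothetical stand-in for the diffusion transformer; ignores t for brevity.
    return full_3d_attention(x_t, w_q, w_k, w_v)


loss = flow_matching_loss(x0, toy_velocity_model, rng)
print(f"tokens per clip: {T * H * W}, loss: {loss:.4f}")
```

Because the sequence length N = T·H·W grows with every latent dimension, full 3D attention costs O(N²) compute, which is one reason the compression ratio of the video autoencoder matters so much for speed.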

To push cost down as far as possible, Open-Sora 2.0 works on several fronts: strict data filtering to ensure high-quality training data; training at low resolution first to learn motion efficiently; prioritizing image-to-video training to speed up model convergence; and an efficient parallel training scheme built on ColossalAI with system-level optimizations, which substantially raises hardware utilization.

By estimate, a single training run of an open-source video model with more than 10B parameters typically costs millions of dollars, whereas Open-Sora 2.0 cuts this cost by a factor of 5 to 10. This lowers the barrier to high-quality video generation and gives more developers the chance to take part in video generation research and development.

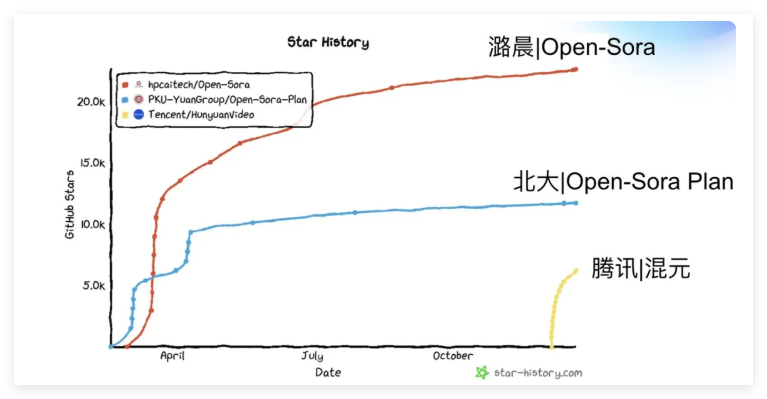

Notably, Open-Sora 2.0 open-sources not only the model code and weights but also the full end-to-end training code, which should give a significant boost to the wider open-source ecosystem. The Open-Sora project's academic paper received nearly 100 citations within half a year and ranks first in a global open-source influence ranking, making it one of the most influential open-source video generation projects in the world.

The Open-Sora 2.0 team is also exploring high-compression video autoencoders to cut inference cost substantially. They trained a video autoencoder with a high compression ratio (4×32×32), which reduces the single-GPU inference time for a 5-second 768px video from nearly 30 minutes to under 3 minutes, roughly a 10× speedup. This points toward much faster generation of high-quality video in the future.
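
A rough token count helps explain why a higher-compression autoencoder speeds things up so much. The sketch below counts latent positions for a 5-second 768px clip under two compression ratios. The frame count (128 frames, roughly 5 s at about 25 fps), the square 768×768 frame, the helper name `latent_tokens`, and the 4×16×16 baseline are all assumptions chosen only for comparison, not figures from the technical report.

```python
def latent_tokens(frames, height, width, t_down, s_down):
    """Number of latent positions after a t_down x s_down x s_down autoencoder
    (ceiling division so partial blocks still count as one position)."""
    t = -(-frames // t_down)
    h = -(-height // s_down)
    w = -(-width // s_down)
    return t * h * w


FRAMES = 128   # assumption: roughly 5 s of video at ~25 fps
SIZE = 768     # assumption: square 768x768 frames

high = latent_tokens(FRAMES, SIZE, SIZE, 4, 32)   # 4x32x32 autoencoder
base = latent_tokens(FRAMES, SIZE, SIZE, 4, 16)   # hypothetical 4x16x16 baseline

print(high, base)            # 18432 73728
print(base / high)           # 4.0  -> 4x fewer tokens
print((base / high) ** 2)    # 16.0 -> ~16x less full-attention compute
```

Under these assumptions, doubling the spatial compression cuts the sequence length by 4×, and since full 3D attention scales quadratically with sequence length, attention compute drops by roughly 16×. Other layers scale roughly linearly with token count, so an end-to-end gain somewhere between the two figures is plausible, in the same ballpark as the roughly 10× speedup the team reports.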

With its low cost, strong performance, and fully open-source release, Luchen Technology's Open-Sora 2.0 pushes the video generation field toward broader, more equal access. It narrows the gap with top closed-source models and lowers the barrier to high-quality video generation, allowing more developers to take part in and jointly advance video generation technology.

GitHub open source repository: https://github.com/hpcaitech/Open-Sora

Technical report: https://github.com/hpcaitech/Open-Sora-Demo/blob/main/paper/Open_Sora_2_tech_report.pdf